Cortex A-15 v Bulldozer/Piledriver

- Thread starter french toast

People generally rank them A15 > Krait > A9. A15 processors are faster than Krait processors. Qualcomm would counter that the extra performance A15 processors bring is not worth the extra power consumption. It's up to you to decide if that statement is true.

Ahh OK... is there an A15 processor in the wild yet? By that I mean in a mobile phone/tablet that has been released?

Love to see some benchmarks if so.

Speaking of power consumption, the HTC One S does have better battery life than the HTC One X, which is based on the Tegra 3 (though it also uses a different screen).

I was considering an HTC One S over the HTC One X precisely because of a concern with power consumption.

Nope. I think people expect the performance advantage of A15 over Krait to be larger than that of Krait over A9: it's definitely "fatter" on paper and is also designed to run at quite a bit higher frequency. The perf/power side of things isn't obvious, though, so the advantage in smartphones might not be that big. I haven't followed it closely, but last I heard the expected release time for A15 SoCs was late 2012 (for final silicon, not the engineering samples which have been floating around for half a year or so), hence I'd not be surprised if we don't see any products in 2012. It should definitely be released earlier than Atom Silvermont at least...

It is a pretty decent advantage Qualcomm have picked up then with Krait.

I read somewhere Krait manages 3.3 DMIPS/MHz whereas we can expect 3.5 DMIPS/MHz for a full A15. Not much in it by that metric but then again DMIPS may not be a useful yardstick... aha, found a reference.

From Anandtech:

At 3.3, Krait should be around 30% faster than a Cortex A9 running at the same frequency. At launch Krait will run 25% faster than most A9s on the market today, a gap that will only grow as Qualcomm introduces subsequent versions of the core. It's not unreasonable to expect a 30 - 50% gain in performance over existing smartphone designs. ARM hasn't published DMIPS/MHz numbers for the Cortex A15, although rumors place its performance around 3.5 DMIPS/MHz.
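
The per-clock arithmetic in the quote can be sketched as below. This is a minimal illustration, not a benchmark: the Krait (3.3) and rumored A15 (3.5) figures come from the quote, while the Cortex-A9 value of 2.5 DMIPS/MHz is ARM's commonly quoted figure and is an assumption here, as are the helper names.

```python
# DMIPS/MHz per core. Krait (3.3) and the rumored A15 (3.5) come from the quote;
# the Cortex-A9 value (2.5) is assumed from ARM's commonly quoted figure.
DMIPS_PER_MHZ = {
    "Cortex-A9": 2.5,
    "Krait": 3.3,
    "Cortex-A15 (rumored)": 3.5,
}


def per_clock_speedup(new: str, old: str) -> float:
    """Dhrystone speedup of `new` over `old` when both run at the same clock."""
    return DMIPS_PER_MHZ[new] / DMIPS_PER_MHZ[old]


def dmips(core: str, mhz: float) -> float:
    """Single-core Dhrystone MIPS at a given clock (DMIPS/MHz times MHz)."""
    return DMIPS_PER_MHZ[core] * mhz


if __name__ == "__main__":
    print(f"Krait vs A9 per clock:  {per_clock_speedup('Krait', 'Cortex-A9'):.2f}x")
    print(f"A15 vs Krait per clock: {per_clock_speedup('Cortex-A15 (rumored)', 'Krait'):.2f}x")
```

Under these assumptions Krait comes out at 1.32x an A9 per clock, in line with the quote's "around 30%", and the rumored A15 at only about 1.06x Krait. Dhrystone is a tiny, cache-resident benchmark, so these ratios say little about the memory subsystem.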

DMIPS is indeed pretty bogus. On that metric an A8 isn't much slower than an A9 either. Things like in-order/OoO design, instruction latencies, branch prediction, or the memory/cache subsystem don't factor in there at all (past A9, I don't think just adding more execution units is really going to do much without improving other aspects).

But even if A15 isn't much faster per clock than Krait, it is supposed to run well past 2GHz.

TI have demoed the new OMAP5 in this video:

http://www.electronista.com/articles/12/02/23/ti.omap5.demo.shows.a15.more.than.2x.faster/

Apparently the OMAP5 was running at 800MHz and (in this task, at least), it was much faster than a quad-core A9 which I assume must have been the Tegra 3. Whether the Tegra 3 was running at stock clocks or not, I'm not sure.

If OMAP5 was running at just 800MHz a couple of months ago, I'd imagine it may be some time until we see the final chips in devices. As A15 is reportedly going to scale up to 2GHz, it is encouraging performance, however.

I don't really trust that benchmark much. First, I bet this is measuring single-thread performance (neither MP3 playback nor a video download requires any noteworthy CPU cycles, and I don't think the browser can make use of multiple threads), hence whether that's against a quad-core or dual-core A9 doesn't matter one bit. That would of course still be a very good result even then, though.

Second, the mention of a video download in the background makes me suspicious: why was this task chosen? For all we know, the performance difference could be because the A15 tablet has QoS enabled in the network stack or just has a faster connection (unless the pages were served locally, but I haven't seen specifics of the test setup).

Make no mistake, I don't doubt the A15 will be faster than an A9 at the same clock, but this demo screams "rigged". More than 3 times faster at the same clock (which this demo suggests) would be quite phenomenal.
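
The "more than 3 times faster at the same clock" point comes from normalizing the demo's speedup by clock speed. A minimal sketch, assuming performance scales linearly with frequency and assuming, purely for illustration, that the quad A9 ran at 1.3GHz (its actual clock is unknown). The roughly 2x figure is taken from the linked article's headline; the function name is hypothetical.

```python
def implied_per_clock_ratio(speedup: float, new_ghz: float, old_ghz: float) -> float:
    """Per-clock advantage implied by an observed speedup at different clocks.

    Assumes performance scales linearly with frequency, so
    speedup = per_clock_ratio * (new_ghz / old_ghz).
    """
    if new_ghz <= 0 or old_ghz <= 0:
        raise ValueError("clock speeds must be positive")
    return speedup * old_ghz / new_ghz


# Observed: A15 (OMAP5) at 0.8GHz reported as "more than 2x faster".
# Hypothetical: the quad A9 ran at 1.3GHz; the real clock is not known.
ratio = implied_per_clock_ratio(speedup=2.0, new_ghz=0.8, old_ghz=1.3)
print(f"implied per-clock advantage: {ratio:.2f}x")
```

With the A9 at a hypothetical 1.0GHz instead, the same 2x would imply 2.5x per clock, so the unknown A9 clock shifts the conclusion a lot.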

On the other hand, when is a quad core of much use in practice with these devices? Single thread performance is definitely what these devices need more of. There's not a lot of video encoding or offline 3D rendering happening here.

It will be interesting to see what the more complex A15 design does to power usage though. I'm curious if Intel's new Atom design will be hard competition for it.

Yes, I don't disagree with that. Still, it's obvious why a quad A9 was chosen as a comparison. They could have used a single-core A9, most likely arrived at the same numbers, and it would have looked a lot less impressive.

But in any case I really think this test was about network bandwidth, not cpu performance...

Intel has kept Silvermont's internals quite a secret (OK, it's got some Ivy Bridge-derived graphics, but I haven't seen anything specific about the CPU other than that it should be a significantly different core). They might end up with similar performance (and performance/W).

Sorry, are you asking or informing?

AFAIK the Qualcomm Snapdragon S4 is based on the A15 architecture. Qualcomm design their own cores under license from ARM.

Qualcomm has an ARM architecture license. This is different from a license to some ARM IP block.

Scorpion is based on A15 as much as AMD Athlon is based on Intel Pentium Pro.

i.e. not based on it at all; it just uses the same instruction set, has similar features, and is at quite a similar performance level.

french toast

I'm expecting A15 to be 20% faster clock for clock than Krait, AND to scale to higher frequencies from the get-go.

A15 and Krait are completely different processors; it's like saying a Marvell Armada Sheeva core is the same as a Cortex-A7.

Seriously though, if Cortex-A9s can hit 3.1GHz over at TSMC, and Cortex-A15 ends up with 60% higher IPC and clocks anywhere near 2.5GHz, then it would seriously give Piledriver a run for its money.
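
This reasoning rests on the first-order rule that single-thread performance is roughly IPC times clock frequency. A rough sketch using the post's numbers (60% higher IPC, A15 at 2.5GHz, A9 at 3.1GHz); it ignores memory stalls and any IPC loss at higher clocks, and the function name is hypothetical.

```python
def relative_perf(ipc_ratio: float, new_ghz: float, old_ghz: float) -> float:
    """First-order speedup: (IPC_new / IPC_old) * (f_new / f_old)."""
    return ipc_ratio * new_ghz / old_ghz


# Cortex-A15 with 60% higher IPC at 2.5GHz vs a Cortex-A9 pushed to 3.1GHz
vs_fast_a9 = relative_perf(ipc_ratio=1.6, new_ghz=2.5, old_ghz=3.1)
# Same A15 vs an A9 at the same 2.5GHz clock: the speedup is just the IPC gain
vs_iso_clock_a9 = relative_perf(ipc_ratio=1.6, new_ghz=2.5, old_ghz=2.5)
print(f"A15 @2.5GHz vs A9 @3.1GHz: {vs_fast_a9:.2f}x")
print(f"A15 @2.5GHz vs A9 @2.5GHz: {vs_iso_clock_a9:.2f}x")
```

Under these assumptions even a 3.1GHz A9 trails by roughly 29%. A comparison with Piledriver would also need Piledriver's IPC, which the post does not give.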

Silvermont, according to Charlie D, is going to be a 'semi OoO' dual-issue design, which brings around 20-25% IPC gains over Saltwell and enables higher clock speeds, probably with some efficiency improvements as well. The 22nm node will also improve things significantly. The thing is, are we at a point where any improvement in CPU power won't even be noticeable in a smartphone? Haven't we hit the limit already?

Tablets could do with a boost, but even the iPad is not exactly laggy with just two A9s @ 1GHz!?

Blazkowicz

CPU power can always be wasted on JavaScript. Some websites are amazingly slow, and it's worse if they use HTML5 canvas for a "turning pages" reader, or anything else.

If I had such hardware I would probably load it with emulators (QEMU, DOSBox), OS-level virtualization, Ubuntu on Android, etc. Or I would run audio encoding on the phone rather than on my PC if I wanted to convert some .flac files to .ogg.

For hardware-assisted media playback, client apps, note-taking, calendar, etc.? Sure, the CPU power will be useless. Plus, Android apps are made to run on $130 phones.

french toast

Also, Intel's first-gen 22nm hasn't exactly set the world alight. When you consider the improvements AMD made with Trinity, while also increasing battery life, all on the same 32nm process, compared to Ivy Bridge on the world-saving 'trigate', Intel had better hope that process matures nicely over the next 12 months.

I know 'trigate' is supposed to be much better at lower voltages, but it will have to be, as 28nm HKMG will be very mature next year, and combined with quad Cortex-A15s clocking in excess of 2GHz, that is going to set some bar to hurdle.

I think we are getting very close to a point where changing nodes is so expensive to research and tool out, with likely poor yields to start off with, that rushing it through will become an expensive ball ache, especially if you can only expect a minor improvement in power and performance like we got from Ivy Bridge.

It could be that the first-gen process was the tough learning part, and some real improvements can be had from this point forward.

I don't think you understand exactly how much more efficient Ivy Bridge really is...

http://www.lostcircuits.com/mambo//...ask=view&id=105&Itemid=1&limit=1&limitstart=7

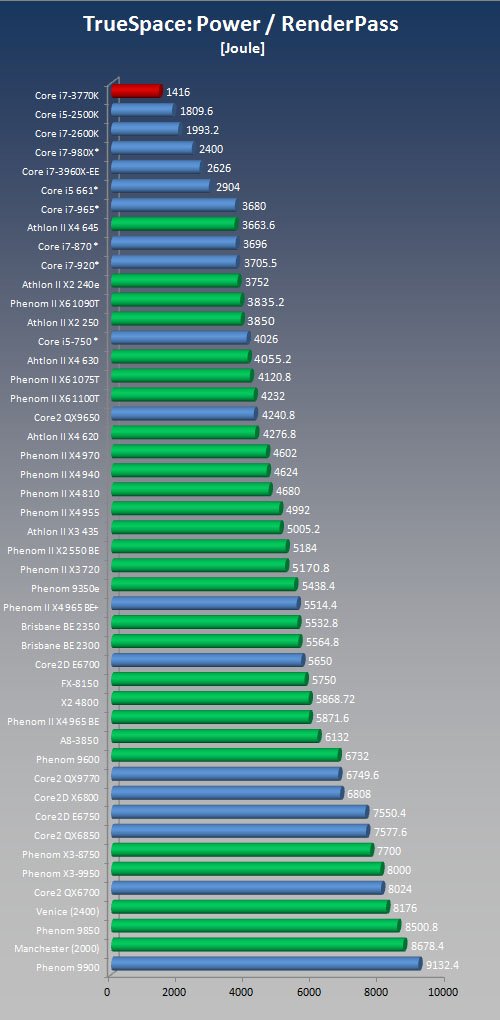

The Ivy Bridge i7-3770K enjoys a 30% increase in power efficiency (work output vs. power consumption) compared to its nearest competitor, the Sandy Bridge i7-2600K. This is even with the IVB chip running a 3% higher clock across the board.

It's possible that some of this efficiency increase comes from more intelligent management of on-board resources, but 22nm has a significant amount to do with it. And, much to your earlier point, even bigger gains should be expected in the next round of 22nm products as the lithography process gets through its first major pass.

french toast

Only 3.7% faster with 4% better power consumption. (Note: I pulled that info from James on SA.)
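
Efficiency here means work per unit of energy, i.e. performance divided by power. A small sketch using the figures in the post, assuming "4% better power consumption" means 4% lower power draw (the post does not say exactly how it was measured); the function name is hypothetical.

```python
def perf_per_watt_gain(perf_gain: float, power_change: float) -> float:
    """Fractional change in performance-per-watt.

    perf_gain:    +0.037 means 3.7% faster
    power_change: -0.04 means 4% lower power draw
    """
    return (1 + perf_gain) / (1 + power_change) - 1


gain = perf_per_watt_gain(perf_gain=0.037, power_change=-0.04)
print(f"perf/W improvement: {gain:.1%}")
```

The two small gains compound to roughly 8% better perf/W, well short of the 30% figure cited earlier in the thread.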

http://www.tomshardware.com/reviews/ivy-bridge-benchmark-core-i7-3770k,3181-23.html

So according to that, which seems to be about the most comprehensive efficiency test out there (from Tom's Hardware), Ivy Bridge is an improvement, yes, but not the kind of improvement we were all expecting. 'Trigate' and a full node drop were expected to bring massive gains; instead what we got was a modest improvement. I think in AnandTech's review, battery life on the mobile side didn't improve at all; it may even have been a bit worse (haven't got time to dig it up). Not to mention the increased heat and lack of overclocking.

But like I said, I think once the difficulty of starting that architecture is out of the way, we should see some better numbers, although they are starting another 'tock' with Haswell, which will bring yet more challenges.

Tom's link is total power draw for the entire system, which is not reflective of the individual gains that IVB can bring. If you want to compare the two lithography processes for power efficiency, it doesn't do you much good to measure the power draw of the busy drives, memory, chipset, the external video card and power supply inefficiencies... Does it?
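
The whole-system point can be made concrete: if only the CPU's power drops, the change measured at the wall is scaled down by the CPU's share of total draw. A minimal sketch with hypothetical wattages (not measured values from either review); the function name is also hypothetical.

```python
def wall_power_reduction(cpu_watts: float, rest_watts: float, cpu_reduction: float) -> float:
    """Fractional drop in total system power when only the CPU's power drops.

    cpu_reduction: 0.2 means the CPU draws 20% less; the rest of the system is unchanged.
    """
    before = cpu_watts + rest_watts
    after = cpu_watts * (1 - cpu_reduction) + rest_watts
    return 1 - after / before


# Hypothetical load: CPU 60W, drives/memory/chipset/GPU/PSU losses 60W
drop = wall_power_reduction(cpu_watts=60, rest_watts=60, cpu_reduction=0.2)
print(f"measured at the wall: {drop:.0%} less power")
```

In this example a 20% CPU-level saving shows up as only 10% at the wall, and as even less if the rest of the system draws more.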

french toast,

http://techreport.com/articles.x/22835/5
