AMD: Speculation, Rumors, and Discussion (Archive)

- Thread starter iMacmatician

- Status

- Not open for further replies.

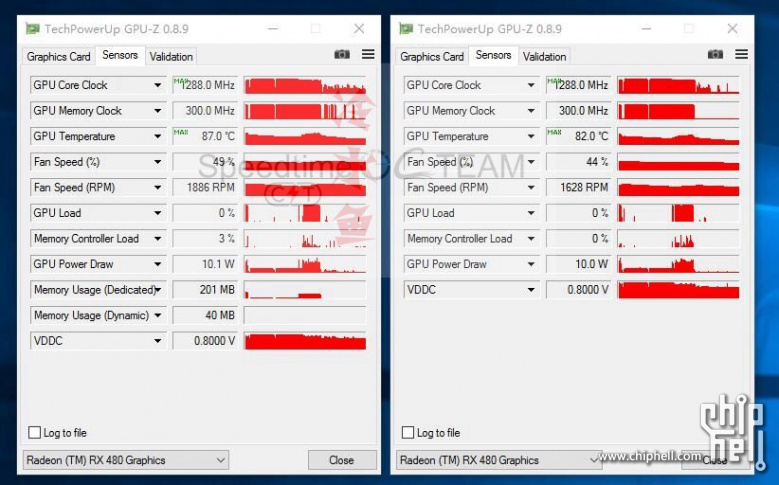

Well, the fan profile doesn't appear to be very aggressive; the fan barely speeds up (though it appears to be a pretty short load on the GPU). I guess the target temperature was set relatively high, combined with a low fan speed, so the cooling solution runs quieter. One question will be at which temperature the GPU starts to clock down. If it's again 94°C or something, I wouldn't be worried at all. And if someone is worried about 80ish °C temperatures, that someone can still crank up the fan speed. The pictures of the new overclocking settings in the control panel show that it can be done there.

If newer process chips are designed to run at higher temps, does this mean we'll see a push by AIB partners for more blower designs rather than coolers that dump heat into the case? Even if it's not an issue for the GPU to run at higher temps, it can still be detrimental by raising case thermals.

Deleted member 2197

Guest

http://videocardz.com/61396/new-amd...exclusive-first-look-at-new-overclocking-tool

Here's a first detailed view of the RX 480 overclocking tool. The user will be able to control the GPU frequency and voltage for each power state. It is also possible to create similar states for memory clocks. The user has control over maximum and minimum values for temperature and fan speeds.

Anarchist4000

Veteran

Doesn't really matter, as temperature and power dissipation aren't necessarily linked. A chip could be designed to consume 10 W and run at 100 °C. A high temperature just means you're not dissipating the heat, not that there is more of it.
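To make the temperature-versus-power distinction concrete, here is a rough sketch using the usual steady-state thermal-resistance model (temperature = ambient + power × thermal resistance). The 10 W and 100 °C figures come from the post above; the function name, the 25 °C ambient, the thermal-resistance values, and the 150 W card are all illustrative assumptions, not measurements of any real product.

```python
def steady_state_temp_c(power_w: float,
                        thermal_resistance_c_per_w: float,
                        ambient_c: float = 25.0) -> float:
    """Steady-state chip temperature under a simple lumped thermal model.

    thermal_resistance_c_per_w describes how poorly the cooler sheds heat:
    a higher value means more degrees of temperature rise per watt.
    """
    return ambient_c + power_w * thermal_resistance_c_per_w


# Hypothetical low-power chip with a weak cooler (7.5 C/W is an assumed value):
# only 10 W of heat, yet it settles at 100 C.
weak_cooler_temp = steady_state_temp_c(power_w=10.0, thermal_resistance_c_per_w=7.5)

# Hypothetical 150 W card with a strong cooler (0.3 C/W is an assumed value):
# fifteen times the heat output, but a lower chip temperature.
strong_cooler_temp = steady_state_temp_c(power_w=150.0, thermal_resistance_c_per_w=0.3)

print(f"10 W chip, weak cooler:    {weak_cooler_temp:.1f} C")
print(f"150 W card, strong cooler: {strong_cooler_temp:.1f} C")
```

Under this model the heat that ends up in the case depends on the wattage, while the chip temperature mostly reflects how well the cooler moves that heat away.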

Alessio1989

Regular

Ohhh, that would be interesting. About the PCI-E lanes: you don't really need 16 lanes. With 8 lanes there is no difference in gaming (about 1%, which is within the margin of error), so you can use an 8+8 lane configuration without a problem.

Although wouldn't this be an enterprise feature? Or could gamers really get a remarkable improvement in performance?

PCI-E bandwidth limits are a serious problem for multi-GPU setups (particularly with more than 2 GPUs) and especially for VR, and of course when 4K is involved in those scenarios. It is actually not a problem at all in 60 Hz scenarios.

That's the effect of the framebuffer compression. Radeon ROPs can do full speed 4xFP16 for quite some time already (even with blending), they are usually limited by available bandwidth in throughput tests. Look at Tahiti for instance with its 384bit interface for just 32 ROPs. It also gives you a higher FP16 rate compared to Hawaii. And with framebuffer compression, the effective bandwidth is obviously high enough to saturate the ROPs in this test.

That makes sense. The thought occurred to me later that it might be bandwidth-related, but I wasn't sure enough about it.

Right, but running at higher temps does have a direct correlation with increased power usage, so it's always best to dissipate the heat as quickly as possible.

Generally better, although FinFET should have a benefit in terms of just having lower static leakage in general, at least until that particular one-time adjustment in the scaling curve is eclipsed at later nodes.

Heat dissipation when dealing with other constraints like cost and noise sometimes means leaving things warmer than they could be. For reliability purposes, keeping temperatures consistent can keep the chip alive longer at the cost of some efficiency.

Wait, GPU-Z can monitor power draw, now?

What do you mean by "now"? Providing the card has appropriate power sensors, of course.

Haha sorry, apparently I'm just completely out of the loop. I guess that's just what happens when you haven't upgraded your graphics card since 2010.

Not joking: That AMD RX480 tool appears to be designed as an underclocking tool to optimize wattage in low load situations, not an overclocking tool to push more performance beyond the normal limits. The GPU frequency limits are only 0 to 1265 MHz. The memory frequencies are 0 to 2000.

This interpretation is wrong if you can manually edit in higher clock limits for both, but the memory does have an explicit "2000 MHz Max" label, and the voltage of 1.15 volts for 1265 MHz already seems high for a 14nm process.

One thing I noted when looking at the voltage and clock numbers is that if we go with a coarse comparison based on V^2*C, the resulting products put the highest set point at ~4x the result of the most efficient point.

State 4, which seems to match the purported base clock, is ~3x higher than the lowest point.

The next 10% of clock speed to get to the top state costs an additional 33% over that state.

Another numeric coincidence is that state 2 at 910 MHz is just about the clock speed rumored for the PS4 refresh GPU, for what it's worth.
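For anyone who wants to redo the coarse V^2*C comparison, here is a minimal sketch, taking C to be the clock of each state. Only the 1.15 V at 1265 MHz pair comes from the thread; the second state is a made-up point (10% lower clock and, by assumption, 10% lower voltage) chosen to show how a small clock step can translate into roughly a third more power. The function names are hypothetical, and none of this is read from the actual screenshot.

```python
def relative_dynamic_power(voltage_v: float, clock_mhz: float) -> float:
    """Coarse proxy for dynamic power in arbitrary units: V^2 * clock."""
    return voltage_v ** 2 * clock_mhz


def power_ratio(state_a: tuple, state_b: tuple) -> float:
    """Ratio of the V^2 * clock proxy between two (voltage, clock) states."""
    return relative_dynamic_power(*state_a) / relative_dynamic_power(*state_b)


# Top state as quoted in the thread: 1.15 V at 1265 MHz.
top_state = (1.15, 1265.0)

# ASSUMED lower state: clock and voltage each 10% below the top state.
# These values are illustrative only, not taken from the tool.
lower_state = (1.15 / 1.10, 1265.0 / 1.10)

ratio = power_ratio(top_state, lower_state)
print(f"Top state needs about {(ratio - 1) * 100:.0f}% more power by this proxy")
```

If voltage has to rise in step with clock, the proxy scales with roughly the cube of the clock step (1.1^3 ≈ 1.33), which is one way a 10% clock increase could line up with the 33% figure above.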

Deleted member 87499

Guest

I don't think GPU-Z measures power draw itself; maybe it just reads a sensor that the RX 480 offers. My version is 0.8.9 and it doesn't read the total board power, it just gives GPU voltage and current measurements.

Love_In_Rio

Veteran

Good catch. In fact state 7 is also 1265 instead of 1266, so it must be a rounding thing. If Sony tries to push clocks for the final version, maybe state 3 at 1075(6) MHz is a good clue. It would give Neo almost 5 Tflops.

What makes you think it's an underclocking tool? The 1265 MHz slider seems to be set about halfway, so there's a good margin to raise it (though I doubt the max is set at 2500 MHz; it could be 1500-1600 or 1800, who knows).

The screenshot only shows the actual clock states when the tool is set to dynamic mode. I wouldn't read too much into the 1265 MHz figure (it could be what is reported instead of 1265.99 MHz). It seems there are two different modes you can set: dynamic, or manual (which sets the default boost clock), since I imagine having to set 7 different states would make people lose their minds.

I know Nvidia overclocking tools have become much simpler because they are extremely limited in what they let you control, but what you see here is just the default profile. Don't read the clock range as being 0 to 1266 MHz; the profile is simply set for a max boost of 1266 MHz, which is not the maximum clock speed allowed by the tool.

If you look at the memory slider, you will see that it is in manual mode and set at 2000 MHz, but the slider (which is horizontal when you select the memory frequency to set it) is at the far left, meaning no OC is applied. Move the slider to the right to set 2001, 2005, or 2100 MHz, or 4500 MHz if you are a bit suicidal.

Good catch. In fact state 7 is also 1265 instead of 1266. If Sony tries to push clocks for the final version, maybe state 3 at 1075 MHz is a good clue.

That'd put it at around 5 TFLOPS. Not bad for a small increase in voltage.
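The "around 5 TFLOPS" figure can be sanity-checked with the usual peak FP32 formula (shaders × 2 FLOPs per clock × clock speed). A small sketch follows; the 2304 shader count (36 CUs × 64 lanes, the RX 480 configuration) is an assumption about the console part rather than something stated in this thread, and the function name is hypothetical.

```python
def peak_fp32_tflops(shader_count: int, clock_mhz: float,
                     flops_per_shader_per_clock: int = 2) -> float:
    """Theoretical peak FP32 throughput in TFLOPS.

    Counts one fused multiply-add per shader per clock as 2 FLOPs.
    """
    return shader_count * flops_per_shader_per_clock * clock_mhz * 1e6 / 1e12


# ASSUMPTION: 36 CUs x 64 lanes = 2304 shaders, as on the RX 480.
ASSUMED_SHADERS = 2304

# Clocks mentioned in the thread: state 2, state 3, and the top state.
for clock_mhz in (910, 1075, 1266):
    tflops = peak_fp32_tflops(ASSUMED_SHADERS, clock_mhz)
    print(f"{clock_mhz} MHz -> {tflops:.2f} TFLOPS")
```

With those assumptions, 1075 MHz lands just under 5 TFLOPS, consistent with the estimates above.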
