
Thanks although it's not mine [emoji23].

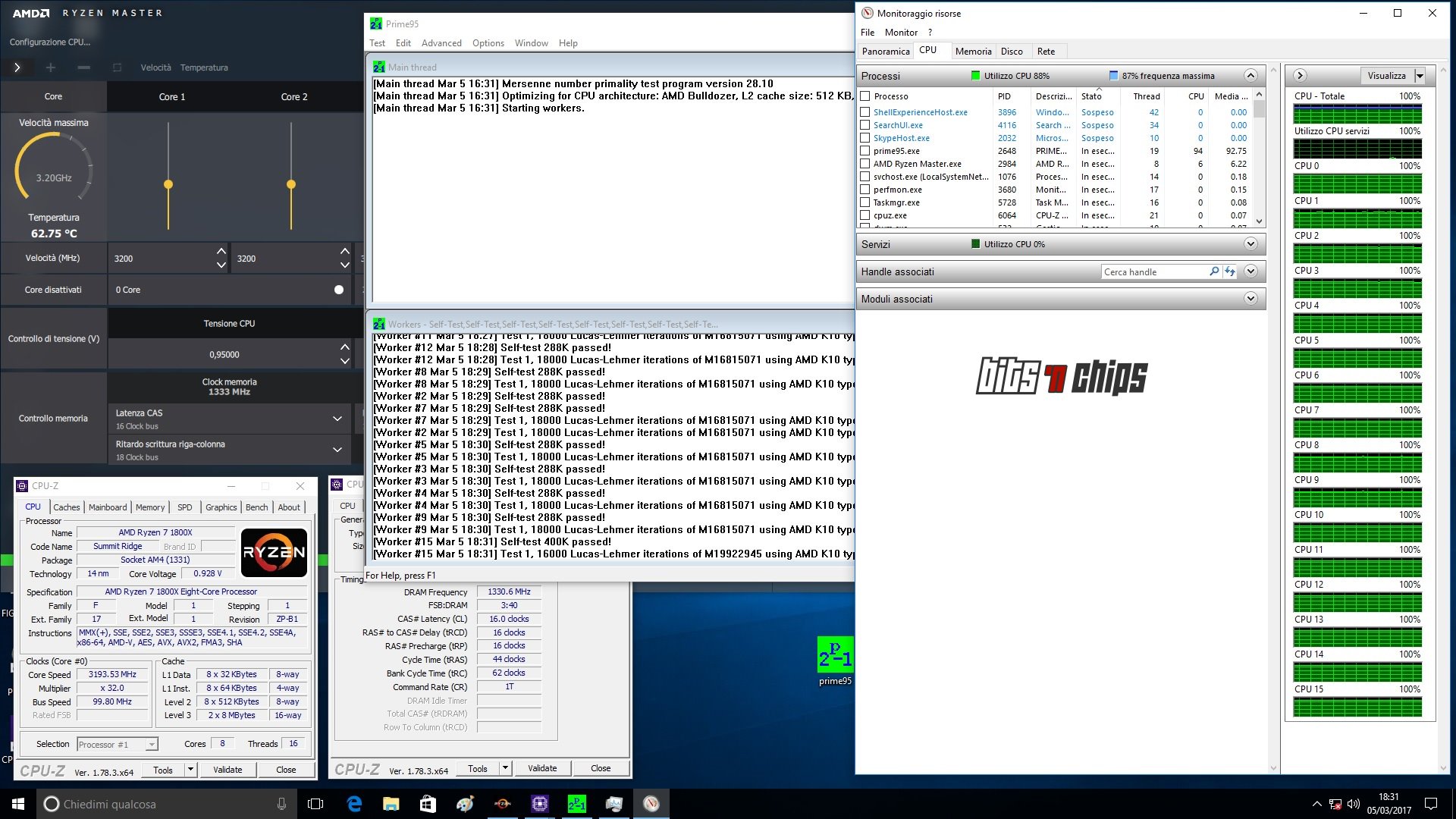

One thing I find actually shocking is how Ryzen's TDP is on par with Intel's even though AMD is behind in process tech. This means that AMD can compete with Intel even though Intel has an advantage in process.

Looking at this, Intel must be really worried, not about Ryzen but about Naples: AMD can compete on equal terms with Intel but at lower prices. It's true that ecosystems are a huge part of it, but the same performance at a lower price (less than half, maybe?) is really appealing.

Sent from my HTC One using Tapatalk


Edit: IIRC The Stilt had posted his testing of Ryzen on AnandTech, indicating the fabric is running at twice the memory clock. AMD seemed to have implied before that the data fabric is capable of moving 32B/clk. So the theoretical bandwidth of the data path should be at least twice the data rate of the DRAM, assuming the IMC itself is just one node. I am curious how the "inter-core bandwidth" is tested in this case.

I read that as the reverse: the data fabric is half of the external DDR clock.

For that matter, 32B * 1.333GHz = 42.7 GB/s.

42.7 / 2 = 21.3 GB/s.

Is it a coincidence that a 32B link cut in half, or alternating directions every cycle, is that close to the reported inter-CCX link bandwidth?
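The arithmetic in this post can be checked with a short sketch. It assumes the 32 B/clk link width and the 1.333 GHz clock quoted in the post, and it models the "cut in half or alternating directions" idea as a plain division by two. The function and constant names are illustrative only and do not come from any AMD documentation.

```python
def link_bandwidth_gb_s(bytes_per_clock: float, clock_ghz: float) -> float:
    """Peak bandwidth in GB/s of a link moving bytes_per_clock bytes each cycle."""
    return bytes_per_clock * clock_ghz


# Figures taken from the post; both are the poster's assumptions.
BYTES_PER_CLOCK = 32      # claimed data fabric width per clock
FABRIC_CLOCK_GHZ = 1.333  # assumed fabric clock

full_width = link_bandwidth_gb_s(BYTES_PER_CLOCK, FABRIC_CLOCK_GHZ)

# Assumption: a link that is split in half, or that alternates direction
# every cycle, delivers half the peak rate in each direction.
per_direction = full_width / 2

print(f"full width:    {full_width:.1f} GB/s")
print(f"per direction: {per_direction:.1f} GB/s")
```

Under these assumptions the per-direction figure is the one to compare against the measured inter-CCX number; it says nothing about how that measurement was actually taken.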

It seems this is caused by AMD packing SMT threads into its ACPI CPU core ID, since the Linux kernel got a patch to fix the SMT topology for the exact same situation earlier. Why AMD did not push the driver patches earlier to be ready at launch is beyond me, though.

Perhaps an oversight, since the first time AMD had to correct for there being double the IDs per CCX due to SMT was in 2015.

https://lkml.org/lkml/2015/11/3/634

Preliminary tests suggest the SMT problem may be specific to Windows 10, and that performance can be better on Windows 7:

"Windows 10 - 1080 Ultra DX11:

8C/16T - 49.39fps (Min), 72.36fps (Avg)

8C/8T - 57.16fps (Min), 72.46fps (Avg)

Windows 7 - 1080 Ultra DX11:

8C/16T - 62.33fps (Min), 78.18fps (Avg)

8C/8T - 62.00fps (Min), 73.22fps (Avg)

At the moment this is just pure speculation as there were variables, which could not be isolated.

Windows 10 figures were recorded using PresentMon (OCAT), however with Windows 7 it was necessary to use Fraps."

https://forums.anandtech.com/threads/ryzen-strictly-technical.2500572/page-8#post-38775732
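To make the quoted numbers easier to compare, here is a minimal sketch that computes the percentage change in frame rate from enabling SMT (8C/16T versus 8C/8T) on each OS. The figures are copied from the quote above; the dictionary layout and function names are illustrative. As the quote itself warns, the two operating systems were measured with different capture tools, so only the within-OS deltas are computed here.

```python
# (min fps, avg fps) per configuration, copied from the quoted results.
RESULTS = {
    "Windows 10": {"8C/16T": (49.39, 72.36), "8C/8T": (57.16, 72.46)},
    "Windows 7": {"8C/16T": (62.33, 78.18), "8C/8T": (62.00, 73.22)},
}


def pct_change(new: float, old: float) -> float:
    """Relative change from old to new, in percent."""
    return (new - old) / old * 100.0


def smt_effect(os_name: str) -> tuple[float, float]:
    """Percent change in (min, avg) fps when going from SMT off to SMT on."""
    smt_on = RESULTS[os_name]["8C/16T"]
    smt_off = RESULTS[os_name]["8C/8T"]
    min_delta = pct_change(smt_on[0], smt_off[0])
    avg_delta = pct_change(smt_on[1], smt_off[1])
    return min_delta, avg_delta


for os_name in RESULTS:
    min_delta, avg_delta = smt_effect(os_name)
    print(f"{os_name}: min {min_delta:+.1f}%, avg {avg_delta:+.1f}% with SMT on")
```

On these figures, enabling SMT costs roughly 14% of the minimum frame rate on Windows 10 while leaving the average almost unchanged, whereas on Windows 7 it leaves the minimum almost unchanged and raises the average by about 7%.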

Anarchist4000

Veteran

I believe AMD said in an interview that Infinity could route around congested links. That bandwidth figure would imply a two-node mesh or no routing capability.

Saw elsewhere from an AMD engineer that review hardware was likely pulled prior to BIOS updates. Some boards were tested with only a handful of memory timings (two: slow and too fast). Processors are still more available than boards, and memory likely accounts for some of the difference.

Because for a pure gamer, the 7700k is $150 less than the 1800X and trounces it in gaming. So in that respect yes the 1800X doesn't appear to be a very good investment for an enthusiast gamer looking for the highest fps in current games.

There are two kinds of enthusiast gamers who own top of the line GPUs and CPUs. A) Gamers who play competitively on 120Hz/144Hz gaming displays @ 1080p using low/medium settings. Ryzen is not a good choice for them. B) Gamers who play at maximum detail settings at 1440p or 4K. Ryzen frame rate differs from 7700K by 1-2 fps in this scenario. Ryzen might be the best CPU for some of these gamers. Many gamers use their computer for other purposes, and 8-core might save lots of time. Examples: editing/rendering their gaming videos with After Effects, compiling Unreal Engine / Unity source code for their own mod/indie projects, etc.

That $150 price difference is obviously relevant for both A and B style gamers. But I wouldn't say "trounces in gaming" when the downside mostly applies to a small percentage of competitive players. Only a small minority of players own a 120Hz/144Hz display and choose to play at reduced quality settings at reduced resolution (and own a top of the line GPU).


Completely agree, and it's something that many of the reviews simply ignored. Thankfully there are many gamers who do understand and won't simply base their upgrade decision on the numbers alone. I was just pointing out that many will see it that way.

Personally I'm a 60Hz gamer on a 2560x1080 screen who does a bunch of multitasking in various applications. The 1700 is going to be a very nice upgrade for me. If motherboards are actually ever available to buy lol

Silent_Buddha

Legend

Yeah, moving up to 8 cores would be nice. I'm almost always maxed out at 100% on all 4 cores of my i5 2500k @4.4 GHz. Could be annoying but wasn't too bad with my previous graphics card. But my current card, a GTX 1070, seems to max out the CPU much faster than my previous card.

Regards,

SB


There are two kinds of enthusiast gamers who own top of the line GPUs and CPUs. A) Gamers who play competitively on 120Hz/144Hz gaming displays @ 1080p using low/medium settings. Ryzen is not a good choice for them.[…]

I think we need more multiplayer benchmarks to make that assessment with certainty:

On console, given that lots of big budget games already distribute CPU workloads across 6 and/or 7 console cores, is the hurdle to take advantage of a similar number of physical cores on PC not that big?

There are already some games that show noticeable gains on 6-core and 8-core i7 over higher clocked 4-core i7. But most current gen games still favor higher clocked quad. For current gen games, a 4.2 GHz quad is (and will likely continue to be) a slightly better choice than 3.6 GHz 8-core. But 8-core will of course be more future proof. Cheaper 6-core and 8-core chips mean that more gamers will have them. Intel's Coffee Lake also has 6-core mainstream i7 models. More incentive for developers to optimize their engines for larger parallelism. DX12 and Vulkan also help.

Lower clocked 8-core is significantly more power efficient than higher clocked 4-core. For example: 8-core 2.1 GHz Xeon D is 45W, while 4-core 4.0 GHz Skylake i7 is 91W. Both have similar theoretical peak multi-core throughput. I would expect that next gen consoles (PS5/XB4) will have at least 8 cores, and possibly more. AMD also has SMT now, so 16+ threads is highly probable. Cores will also certainly be faster (higher clock rate and/or higher IPC). I would expect 8-core PC CPUs to become more important for gaming when next console generation launches.
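The Xeon D versus Skylake comparison above can be put into numbers with a rough sketch. It uses cores × clock as a crude stand-in for peak multi-core throughput and the rated TDP as a stand-in for power draw. Both are simplifying assumptions: the proxy ignores IPC, SMT, and turbo behaviour, and TDP is not measured power. The class and field names are illustrative.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Cpu:
    name: str
    cores: int
    clock_ghz: float
    tdp_w: float

    @property
    def core_ghz(self) -> float:
        """Crude throughput proxy: core count times clock (ignores IPC)."""
        return self.cores * self.clock_ghz

    @property
    def core_ghz_per_watt(self) -> float:
        """Throughput proxy divided by rated TDP (not measured power)."""
        return self.core_ghz / self.tdp_w


# Figures as quoted in the post.
xeon_d = Cpu("8-core 2.1 GHz Xeon D", cores=8, clock_ghz=2.1, tdp_w=45)
skylake_i7 = Cpu("4-core 4.0 GHz Skylake i7", cores=4, clock_ghz=4.0, tdp_w=91)

for cpu in (xeon_d, skylake_i7):
    print(f"{cpu.name}: {cpu.core_ghz:.1f} core-GHz, "
          f"{cpu.core_ghz_per_watt:.3f} core-GHz/W")

ratio = xeon_d.core_ghz_per_watt / skylake_i7.core_ghz_per_watt
print(f"efficiency ratio (Xeon D / i7): {ratio:.2f}x")
```

By this proxy the two chips land at about 16.8 and 16.0 core-GHz, which matches the "similar theoretical peak" claim, while the lower clocked part comes out roughly twice as efficient per rated watt.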

Even if the workload on an 8-core PC is distributed suboptimally, identically to how it's done on console, isn't that still better than not taking advantage of those 4 extra physical cores at all?

90hz is obviously quite important for VR. That's the minimum I'd be aiming for from a new CPU these days.


Deleted member 13524

Guest


I play games on a 3440x1440 FreeSync monitor that ranges between 40Hz and 75Hz. I used to have a Core i7 4820k CPU that would run comfortably at 4.2GHz, and the X79 platform was chosen in order to get multi-GPU options. In the meantime I bought a 10-core/20-thread 25MB L3 cache Xeon E5 that was ultra cheap on eBay but only goes up to 2.9GHz with all cores active, so I can run some FEM simulations, raytracing renders, etc. during the weekend.

That said, I don't have any use for a CPU that provides above 75 FPS and I really gain a lot with more cores and cache. If I was buying a new system today, that Ryzen 1700 would definitely be my best bet.

As for someone using their PC mainly for gaming, I'd say waiting for Ryzen 5 would be their best bet. If anything, because Intel should be forced to lower their i5 and i7 prices when that happens.

For those who play CS:GO or Battlefield 1 competitively on a 1080p 144Hz monitor, I'd say a Skylake or Kaby Lake i3 or i5 will still be the best bet.

Though I do think some reviewers seem to be disproportionately putting that very small niche audience over all the other PC gamers.

The only thing I don't like about Ryzen is the rather low number of PCIe lanes (considering X79 and X99 offer 48 lanes vs. 16 lanes on Ryzen). But I'm an mGPU guy, so I'm a bit biased about that.

60Hz + reprojection seems to be almost just as good IMO.

I'd have to agree here, as many gamers don't usually spend $350+ on a CPU, sticking with decently performing i5s. The R5 is going to be a fantastic sweet spot with 6C/12T, imo.

Given the choice, clearly native 90hz is better. And PC headsets don't support 60Hz anyway.


Deleted member 13524

Guest


I just don't notice much difference in PSVR titles between 60Hz reprojected to 120Hz and native 90Hz ones, if any at all.

I don't know how much the reprojection costs the GPU, but the performance delta between 60 and 90Hz is pretty big.


Not sure this has to do with Ryzen. On PC, you'll want native 90fps. 45 FPS re-projected to 90 is an option, but it's rubbish by comparison. Maybe next gen headsets will be 120hz giving a 60hz re-projection option, but at that point, 120hz native will be better so you'll still want a CPU capable of that. A 60fps target for VR on PC is pointless today, or in the future, unless it's as a fall back option.

I think next gen will be 3D 90Hz with eye tracking, but who knows.

Looking at the TDP, I think AMD could make a 4-core CPU at 35W or less.
