Let us know how the voltage/thermals pan out if you decide to either push all cores to a higher fixed frequency or do general overclocking.

The stock core voltage seems pretty nice compared to the previous Ryzen generation.

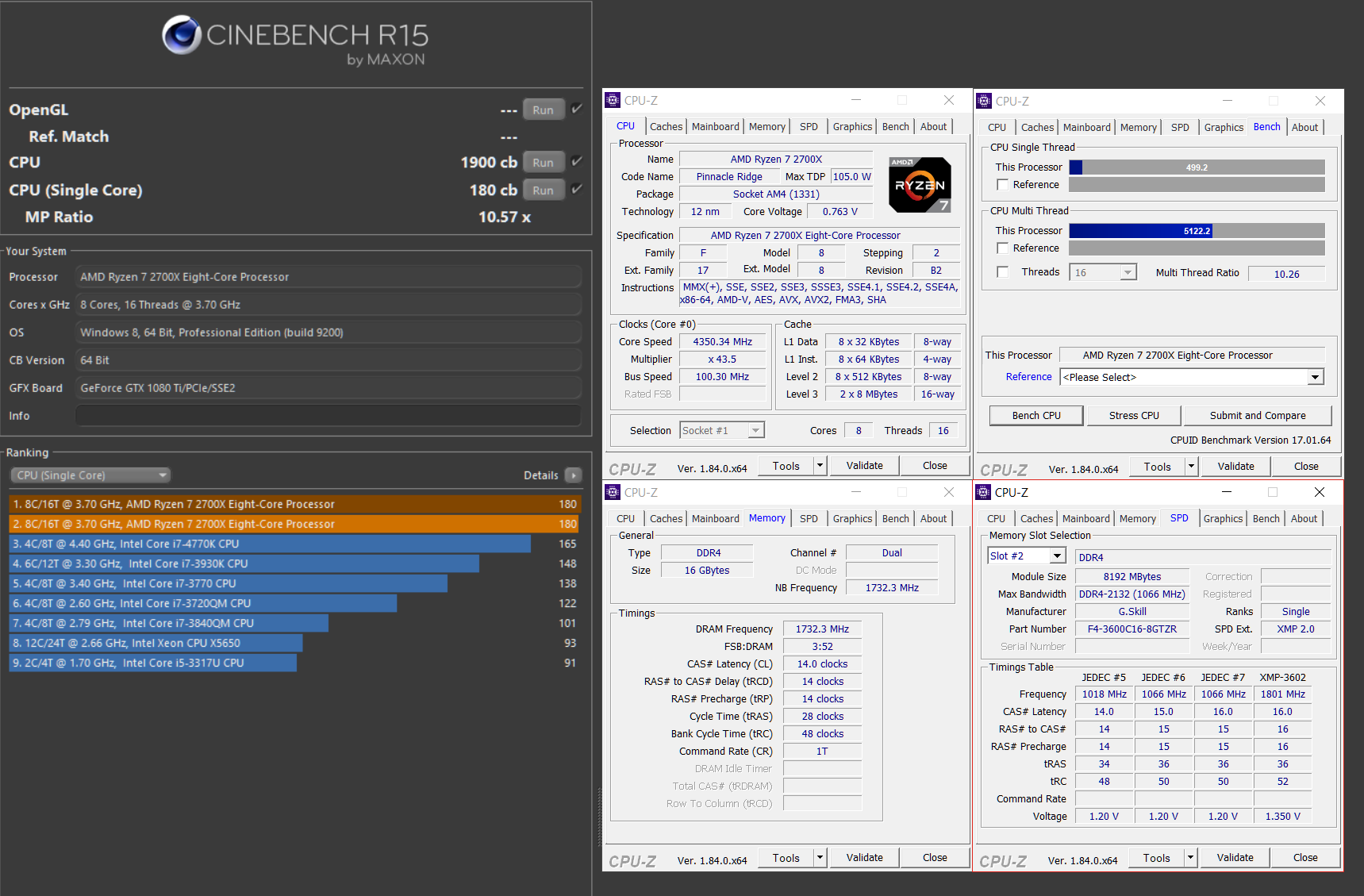

Overclocking is not really worth it unless you want to undervolt (4.0 GHz at 1.15 V vcore is stable on the 2700X). XFR2 is pretty smart, so imo you're better off focusing on memory overclocking instead. I was able to run all cores at 4.35 GHz with 1.45 V vcore, but that's only for benchmarking.