DavidGraham

Veteran

> Do the 6900XT LC and 6950XT have the same performance?

Yes, the 6900XT LC is very slightly faster.

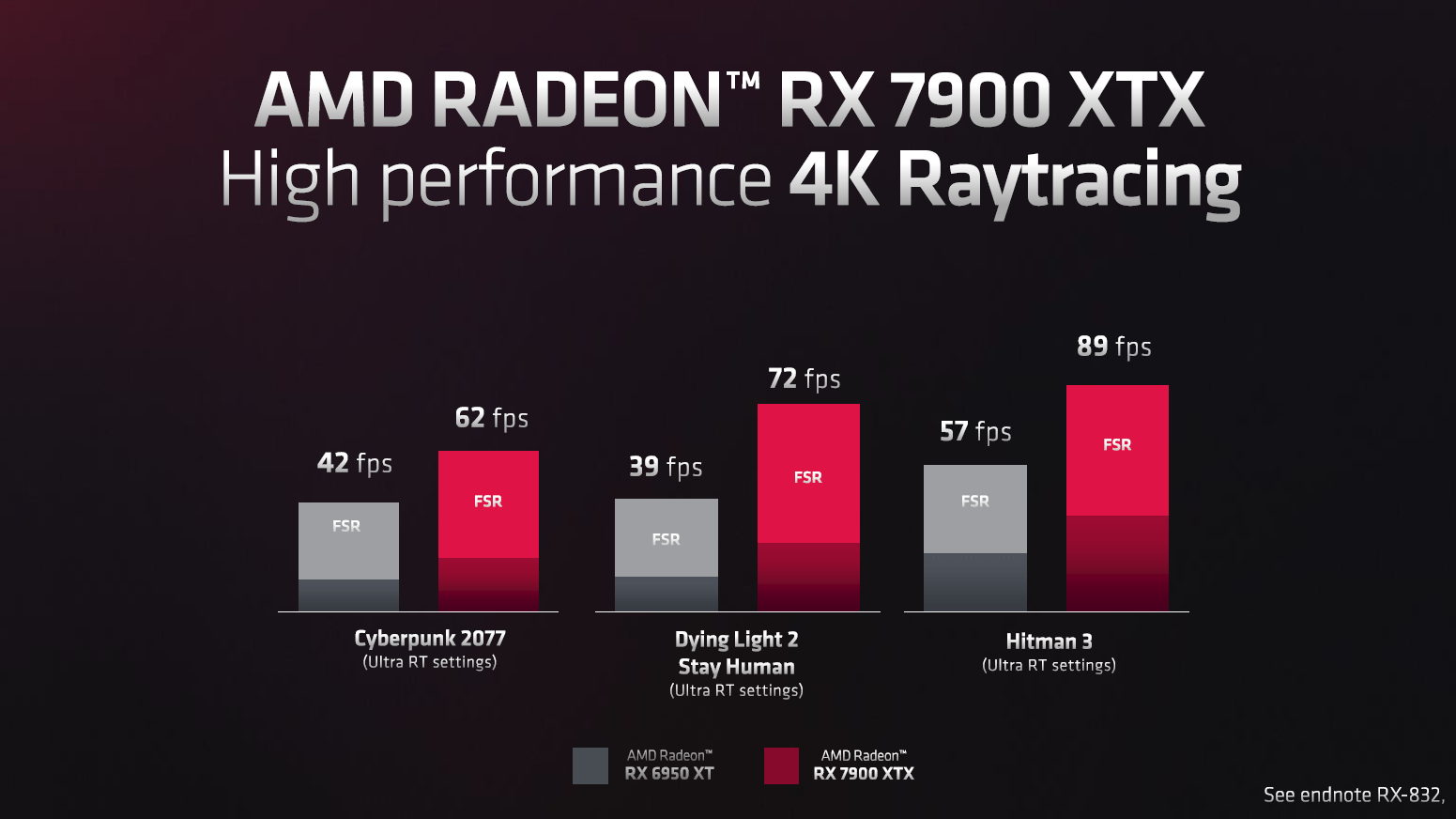

AMD published numbers for three additional games with raytracing:

And Doom is not a "relatively lightweight ray traced game". And even here the 4090 will be ~80% faster while only being 15% bigger. Perf/W will be 50%+ better. Navi31 is just bad. Worse than RDNA2, but here you could give AMD credit for at least supporting raytracing.
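To put that estimate in perf-per-area terms, here is a quick back-of-the-envelope sketch. It takes the ~80% and 15% figures above at face value; they are the post's estimates, not measurements, and the function name is made up for illustration.

```python
def relative_perf_per_area(speedup: float, area_ratio: float) -> float:
    """Performance per unit of die area, relative to the baseline chip."""
    return speedup / area_ratio

# Assumed inputs from the post: ~80% faster (1.80x) on a ~15% bigger die (1.15x).
ratio = relative_perf_per_area(1.80, 1.15)
print(f"{ratio:.2f}x perf per area")  # 1.57x perf per area
```

So if both figures held, the bigger chip would deliver a bit over 1.5x the performance per unit of area in that title.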

> Who on Earth buys a $900+ GPU to not play games at their maxed out settings?

People who want high framerates on high-res displays, who else?

> This was always the only logical outcome. People somehow expect fantasy numbers every generation.

Well, I was wrong to give AMD credit for being able to improve ray tracing efficiency. It looks like they went backwards and RDNA 3 is even less efficient than RDNA 2 for RT.

"AI engines" are just new instructions

I find it incredibly depressing that immediately after RDNA3's launch we are still seeing the "RT performance doesn't matter" arguments hauled out to justify its underwhelming performance. I'd truly hoped this launch would see an end to such arguments.

Who on Earth buys a $900+ GPU to not play games at their maxed out settings? If you don't care about maxing games out (or at least turning on settings that provide a genuine, noticeable improvement to the overall presentation), then don't get a $900 GPU, get a console. As far as I'm concerned, pure raster performance is mostly irrelevant at this performance tier: it's already more than fast enough at 3080/6800XT levels. The only time those GPUs are really challenged is when RT is turned on, especially with modern upscaling capabilities.

> I found endnote RX835 interesting too, over and above their misspelling of their own technology (FRS2):

So they call it Fluid Motion Frames, huh? I wonder if it will be available on other cards too. And then I wonder if you could combine it with DLSS. That would be pretty awesome.

> This layout makes no sense.

It makes perfect sense. Memory controllers and SRAM don't scale well with new process nodes, so they did the obvious thing and moved them to cheaper nodes. Packaging is not free, though, so whether they were actually able to cut costs is beyond me.

What about the huge perf dips in Cyberpunk 2077 when you simply stand in front of a mirror with a single quarter-res planar reflection?

Gosh, the noise comes from a physically correct BRDF with stochastic sampling. RT reflections obviously don't produce any noise for perfectly flat surfaces, but they do for rough ones. Planar reflections don't support stochastic sampling due to rasterization limitations and thus can't be physically correct at all.
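A toy sketch of that point, not how any real renderer does it: each "pixel" averages a few rays jittered around the mirror direction. The striped `environment`, the Gaussian lobe standing in for BRDF sampling, and all the names and constants are assumptions made up for illustration.

```python
import math
import random
import statistics

def environment(angle: float) -> float:
    """Toy incoming radiance: bright and dark stripes over angle (hypothetical)."""
    return 1.0 if math.sin(8.0 * angle) > 0.0 else 0.1

def reflect_estimate(mirror_angle: float, roughness: float,
                     samples: int, rng: random.Random) -> float:
    """Average radiance over rays jittered around the mirror direction."""
    total = 0.0
    for _ in range(samples):
        # Stand-in for BRDF sampling: a Gaussian lobe whose width is the roughness.
        angle = mirror_angle + rng.gauss(0.0, roughness)
        total += environment(angle)
    return total / samples

def pixel_noise(roughness: float, samples: int = 4,
                trials: int = 200, seed: int = 1) -> float:
    """Standard deviation of the per-pixel estimate across many pixels."""
    rng = random.Random(seed)
    estimates = [reflect_estimate(0.3, roughness, samples, rng)
                 for _ in range(trials)]
    return statistics.pstdev(estimates)

mirror_noise = pixel_noise(0.0)  # perfect mirror: every ray goes the same way
rough_noise = pixel_noise(0.5)   # rough surface: rays scatter across the stripes
print(mirror_noise, rough_noise)
```

With zero roughness every sample lands on the same direction, so the estimate never varies. Once the lobe widens, a handful of samples per pixel lands on different stripes each time, and that variation is the noise a denoiser has to clean up.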

> That lets us elegantly handle any dynamic and deformable geometry (sans the reflective surface itself) happening in the scene

A BVH lets you handle all of that stuff.
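For anyone wondering why a BVH copes with deforming geometry: the tree topology can stay fixed while the boxes are recomputed bottom-up, usually called a refit. A minimal sketch, assuming a hand-built two-leaf tree of points rather than a real triangle BVH; the `Node` layout and function names are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

Vec3 = Tuple[float, float, float]

@dataclass
class Node:
    lo: Vec3 = (0.0, 0.0, 0.0)
    hi: Vec3 = (0.0, 0.0, 0.0)
    left: Optional["Node"] = None
    right: Optional["Node"] = None
    points: Optional[List[Vec3]] = None  # set only on leaves

def bounds(points: List[Vec3]) -> Tuple[Vec3, Vec3]:
    """Axis-aligned bounding box of a list of points."""
    xs, ys, zs = zip(*points)
    return (min(xs), min(ys), min(zs)), (max(xs), max(ys), max(zs))

def refit(node: Node) -> None:
    """Recompute boxes bottom-up without changing the tree topology."""
    if node.points is not None:
        node.lo, node.hi = bounds(node.points)
        return
    refit(node.left)
    refit(node.right)
    # An inner node's box is the union of its children's boxes.
    node.lo = tuple(min(a, b) for a, b in zip(node.left.lo, node.right.lo))
    node.hi = tuple(max(a, b) for a, b in zip(node.left.hi, node.right.hi))

leaf_a = Node(points=[(0.0, 0.0, 0.0), (1.0, 1.0, 1.0)])
leaf_b = Node(points=[(2.0, 0.0, 0.0), (3.0, 1.0, 1.0)])
root = Node(left=leaf_a, right=leaf_b)
refit(root)
before = root.hi

leaf_b.points[1] = (5.0, 1.0, 1.0)  # the geometry deforms
refit(root)                          # same tree, updated boxes
after = root.hi
print(before, after)
```

Refitting is cheap but the tree quality degrades if things move a lot, which is why real engines periodically rebuild instead of refitting forever.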

> There are still some more hacks left to be had in rasterization to increase quality and they're becoming appealing alternatives in the face of RT ...

I just don't get why somebody would want to get rid of the BVH and bolt on tons of hacks in the meantime.

> Think positive. For instance, I haven't seen a single example of people complaining about "fake frames" or "fake pixels" now that AMD has announced FSR3. I wonder why..

Must not be looking hard enough tbh, I've seen multiple people complaining that AMD is following Nvidia's fake frames with FSR3.

> Huh, I assumed they were dedicated from what I was reading. After all RDNA2 already supports Int8, just adding BF16 would be a bit lame.

I just re-watched that part, and it seems a bit shady. They're saying "dedicated, 2 per CU", yet these units share all the register files, caches and instruction scheduling with the stream processors. But whatever they are, they're not matrix accelerators like Tensor, Matrix and XMX cores.

> Think positive. For instance, I haven't seen a single example of people complaining about "fake frames" or "fake pixels" now that AMD has announced FSR3. I wonder why..

They are just as bullcrap with AMD as they are with NVIDIA. This is literally a repeat of the FSR debut: some people are trying to paint the picture that people would somehow be OK with it now that AMD did it, while those who were actually pissing on DLSS were doing exactly the same over FSR (me included). Steve at GN was also pissed at this framegen BS.

> Probably because they haven't actually shown anything to talk about.

That is where I am at. I will bitch and moan when we get details about exactly what they are doing.