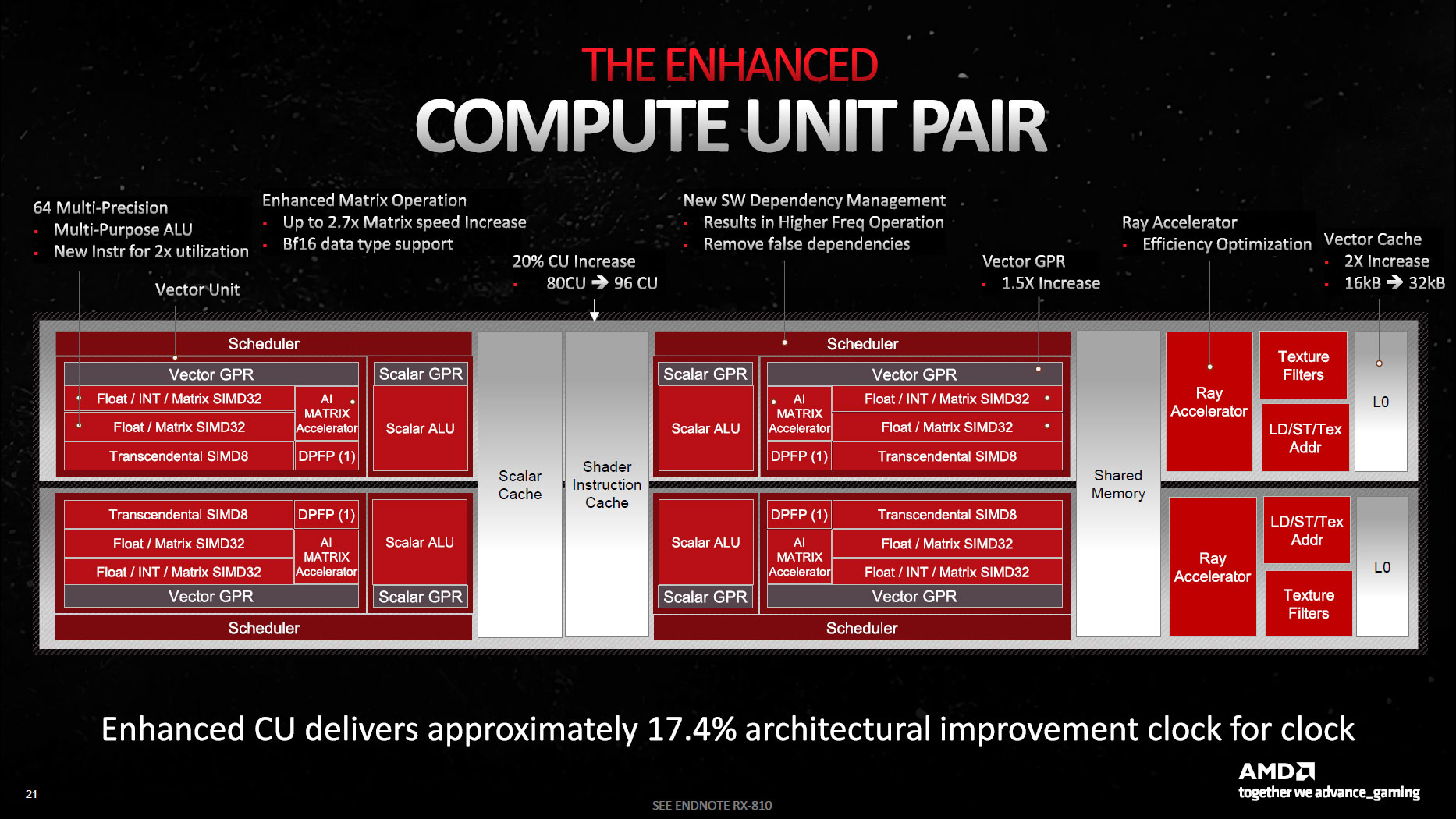

Then of course there are supercalar dual-issue ALUs capable of processing FP32 and FP32/Int8 register / immediate operands in one clock, using either V_DUAL instructions in VOPD encoding for Wave32 threads, or

Wave64 threads which would transparently dual-issue all VOPD instructions as proved by Chips&Cheese benchmarks below (but bizarrely

Wave64 mode is not supported in HIP/ROCm due to hardware limitations, and there are further limits such as source VGPRs from different banks and cache ports, even/odd register numbers etc.).

In practice Wave32 is the prevalent mode, so dual-issue relies on compilers/optimizers which do a very poor job of automatically scheduling parallel multiple-operand instructions (Intel and HP will testify to the spectacular failure of the much touted

Explicitly Parallel Instruction Computing (EPIC) architecture for the

Itanium (IA64) processors, which relied on exactly the same kind of compiler optimizations in order to extract 6-way parallelism from its VLIW instruction bundles).

Having spent a lot of time optimising Imagination's Rogue architecture with Dual FMA and Vec4 FP16 (which I personally spent a few months optimising the compiler for), I don't think the problem is Dual-FMA per-se, it's the fact they have an insane number of interdependent restrictions that the compiler needs to optimise for at the same time. One fundamental problem with VLIW is conditional execution and short basic blocks resulting in partially filled instructions because there just isn't enough work to do with the same execution predicate, and that is probably a bigger problem for modern graphics/compute algorithms today than it was 10 years ago (and it's potentially really bad on CPUs which is one of the many reasons why Itanium was doomed from the start), but I don't think that's the main problem here.

It's surprisingly easy for a good compiler to solve one very hard problem, and surprisingly hard to solve many "not-so-hard-but-interdependent" problems. The fact dual-issue is so strongly dependent on register allocation due to heavy bank/port restrictions is horrific to me, it's so much cleaner if you can do register allocation after scheduling without any interdependence. If that part of the design was over-specced so that the compiler could assume it would probably be OK for the initial pass that'd be one thing, but 1 read port per SRC per bank is not over-specced at all... I know what the area/power cost of 2R+1W latch/flop arrays is versus 1R+1W, and I'd be shocked if this was worth the HW savings.

Also, the fact they can only have 4 unique operands in Wave32 mode feels like it might be because they are restricting the VOPD instructions to still only be 64-bit (or 96-bit with a 32-bit immediate) which is just insane when NVIDIA's single FMA instructions are all 128-bit. You'd have more than enough space for 6 unique operands with a 96-bit instruction + 32-bit immediate which still ends up at only the same 128-bit as NVIDIA's instructions for 2x as many FMAs (so 2x density). AMD's instruction set has plenty of very long instructions but not for the main VOP/FMA pipeline, so it sounds like they just reuse the same fetch/decode unit without any major changes instead of increasing maximum length from 96-bit to 128-bit... Arguably you really need both changes together to get good utilisation in Wave32 mode.

If you had dual-issue with 2R1W-per-souce-per-bank (or 3R1W-per-bank) and with 128-bit instructions, I suspect you'd get much higher VOPD utilisation. There would be a small area/power penalty for the extra read ports but I don't think it'd be that bad at all (especially if it sometimes allowed you to save an extra RAM read). Alternatively, you could have a more complicated register caching scheme that isn't (fully?) bank-based and/or allow cases with more reads to opportunistically go at full speed if they use the forwarding path etc...

In my opinion, the only way this architecture makes sense ***for Wave32*** is if AMD hacked the whole VOPD thing together in months rather than years as a panic reaction to NVIDIA's A102 dual-FMA design (which is also not going to hit anywhere near 100% peak for other reasons although I suspect it's better than this) without enough modelling or time to do compiler/hardware co-design. Or if they intentionally decided to focus on Wave64 (and they ended up not being able to use it in as many cases as expected).

It's an awful lot better for Wave64 without manual dual-issue though and if you can apply Wave64 to everything then these design decisions don't really matter anymore. I have never managed to get good data on that, but I suspect running two parts of the same wavefront/warp on the same ALU/FMA unit back-to-back will result in noticeably lower power consumption because the input/output data is much more similar than for a random uncorrelated FMA from another wavefront/warp, so you get less switching (lower hamming distance) which is the main component of dynamic power consumption. So there might be an argument in favour of running Wave64 anyway for other reasons for ALU-heavy workloads, while keeping Wave32 for branch/memory-heavy workloads only.

As you point out, Wave64 isn't supported for HIP/ROCm which is not great... the permute limitation is unfortunate but should be easy-ish to fix in future generations. I suspect they can use Wave64 most of the time for most graphics workloads though, so even though it's not ideal, it might not have much to do with their efficiency problems in graphics workloads which is the main thing RDNA3 is being sold for (while CDNA is focused on HIP and doesn't have these problems). And finally, even if it wasn't optimal in graphics, if the main bottleneck with RDNA3 isn't the number of FMAs anyway (*cough* raytracing and power efficiency *cough*) then it doesn't really matter and it's as good a way as any to double the number of

theoretical flops at little area/power and most importantly engineering cost...! So in the end, maybe it wasn't worth it for you to read this analysis at all, but too late now

P.S.: If you're curious about the insanity that is Imagination/PowerVR's Rogue ISA, it is publicly available here (I couldn't find another public link that didn't require downloading the SDK):

https://docplayer.net/99326391-Powervr-instruction-set-reference.html - the Vec4 FP16 instruction is documented(-ish) on Page 22 Section 6.1.13... I find it absolutely hilarious that the example in that section doesn't actually manage to generate a Vec4 SOPMAD (just 2 Vec2 SOPs), probably because that document was created in 2014 before I worked on those compiler improvements...! The one nice thing about that instruction is the muxing was extremely flexible, so you could read any 6 32-bit operands (coming from 8 main banks + other register types) and mux it in any way you want to the 12 16-bit sources. That represents 12x 12:1 muxes which needless to say were extremely expensive in both area/power and in terms of instruction length - I think the largest instructions could get to slightly less than 512-bit *per instruction*!