> Yeah I'm a little confused by the complaint to be honest. Complex mesh collision has always been expensive and something you only use in specific places as far as I am aware. Also there's nothing forcing people to use the Nanite fallback mesh as your collision mesh as far as I know.

I think the issue is UE5 lacks a button to do it all automatically for the devs.


Unreal Engine 5, [UE5 Developer Availability 2022-04-05]

- Thread starter mpg1


> I think the issue is UE5 lacks a button to do it all automatically for the devs.

Yet.

AI coming!

That's lovely.

Any chance that, as an end-user of the final product (there's a final product, right?), I could get a slider to turn down the barrel distortion?

I know this is supposed to look good but it just looks so fake. The rendering on show is fantastic but it’s just so sterile. Walking on a gravel path, no dust, no rocks moving around. No wind, no trees moving, wildlife is missing, etc. I could go on and on. The closer we move towards hyper realism, the more things stick out.

DavidGraham

Veteran

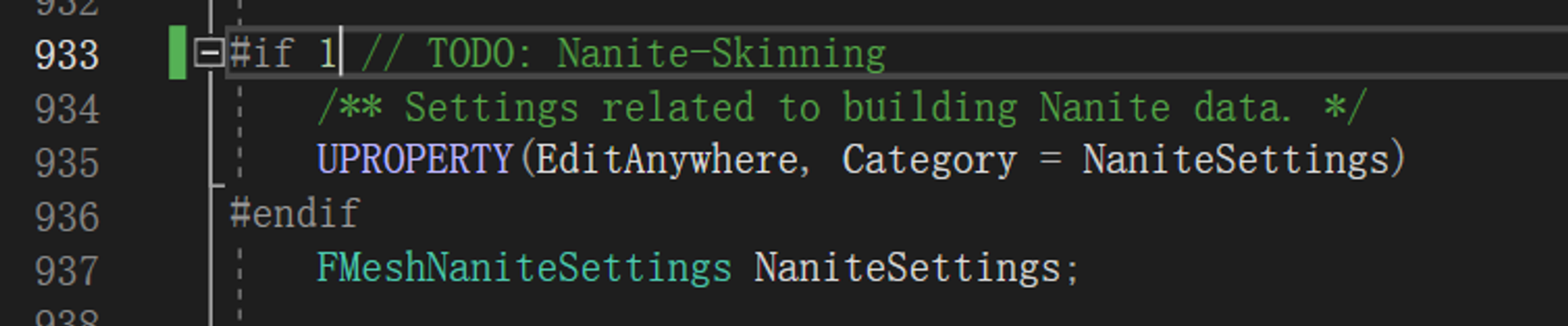

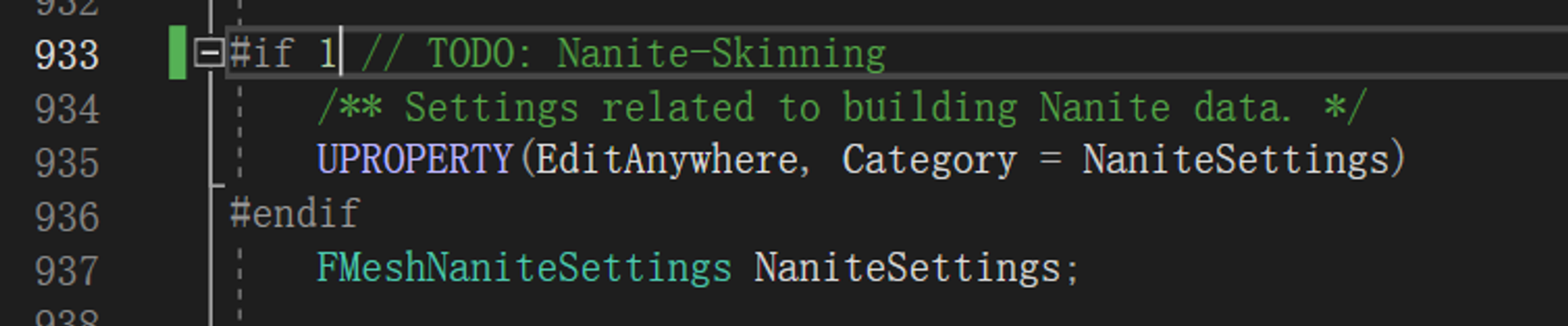

Working Nanite on character models.

Here is how it works.

golden-mandolin-675.notion.site


One Step Forward: Early View of Skinned Nanite Mesh | Notion


davis.anthony

Veteran

I wonder if there's anything AMD/Nvidia/Intel can do with their respective architectures to help accelerate Nanite performance further, as I'm hoping that by the end of this generation all major engines and studios will either be using it via UE5 or would have implemented something similar in their own engine.

Flying around in Horizon Forbidden West shows how desperate we are for a system like Nanite to become standard.


> I wonder if there's anything AMD/Nvidia/Intel can do with their respective architectures to help accelerate Nanite performance further, as I'm hoping that by the end of this generation all major engines and studios will either be using it via UE5 or would have implemented something similar in their own engine.
>
> Flying around in Horizon Forbidden West shows how desperate we are for a system like Nanite to become standard.

Agreed. Nanite (or more broadly virtualized geometry) is what's needed to truly bridge that gap between games which obviously look like games (LOD pop-in and other artifacts)... and games which could fool you into thinking it's CGI.

Nothing gives it away more than that.

> I wonder if there's anything AMD/Nvidia/Intel can do with their respective architectures to help accelerate Nanite performance further, as I'm hoping that by the end of this generation all major engines and studios will either be using it via UE5 or would have implemented something similar in their own engine.
>
> Flying around in Horizon Forbidden West shows how desperate we are for a system like Nanite to become standard.

Using work graphs to implement hierarchical persistent culling is the obvious next step to improve Nanite performance ...

Hardware rasterization/blending? Implementing a faster hardware path for unordered rendering mode and supporting rendering to their visibility buffer format (64-bit integer unordered texture resource with custom encoding) is a possibility since they use 64-bit atomics on textures to do those rendering operations currently ...

Do we want to support pixel shaders for more use cases? If so then implementing hardware support for pixel-sized quads for texture sampling would be helpful ...

These are somewhat exotic proposals ...
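For anyone wondering what the 64-bit atomics on the visibility buffer amount to, here is a rough CPU-side sketch in C++. It is not Epic's code. The packing (depth bits in the high 32 bits, an opaque payload such as a cluster/triangle id in the low 32 bits), the "larger value wins" depth convention, and all names (`pack_visibility`, `atomic_max`, `VisibilityBuffer`) are assumptions made for illustration. The idea it shows is that a single 64-bit atomic max per pixel does the depth test and the payload write in one step, with no ordering required between writers.

```cpp
#include <atomic>
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <vector>

// Hypothetical packing: depth in the high 32 bits so that an integer max
// compares depth first; payload (e.g. a cluster/triangle id) in the low 32.
// Assumes depth >= 0, where IEEE-754 bit patterns sort like the floats do.
inline std::uint64_t pack_visibility(float depth, std::uint32_t payload) {
    std::uint32_t depth_bits = 0;
    std::memcpy(&depth_bits, &depth, sizeof depth_bits);
    return (static_cast<std::uint64_t>(depth_bits) << 32) | payload;
}

// CPU stand-in for a 64-bit atomic max on one texel.
inline void atomic_max(std::atomic<std::uint64_t>& texel, std::uint64_t value) {
    std::uint64_t current = texel.load(std::memory_order_relaxed);
    // On failure compare_exchange_weak reloads `current`, so the loop ends
    // once our value is stored or another writer's value is already >= ours.
    while (current < value &&
           !texel.compare_exchange_weak(current, value,
                                        std::memory_order_relaxed)) {
    }
}

struct VisibilityBuffer {
    int width;
    int height;
    std::vector<std::atomic<std::uint64_t>> texels;

    VisibilityBuffer(int w, int h)
        : width(w), height(h), texels(static_cast<std::size_t>(w) * h) {
        for (auto& t : texels) t.store(0, std::memory_order_relaxed);  // clear
    }

    // Unordered write: whichever fragment has the largest depth value wins
    // (assumed convention here, e.g. reversed-Z).
    void write(int x, int y, float depth, std::uint32_t payload) {
        atomic_max(texels[static_cast<std::size_t>(y) * width + x],
                   pack_visibility(depth, payload));
    }

    // Low 32 bits hold the payload of the winning fragment.
    std::uint32_t payload_at(int x, int y) const {
        return static_cast<std::uint32_t>(
            texels[static_cast<std::size_t>(y) * width + x].load(
                std::memory_order_relaxed));
    }
};
```

Because the comparison is on the packed value, writes can land in any order and the pixel still ends up with the same winner, which is why this maps onto an unordered rendering path.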

Frenetic Pony

Veteran

Interested to see how they deal with the moving seams between meshlets, hopefully Brian or someone else shows up at Siggraph.

Also need to get someone over at Weta to release the Smaug model, 20 million polys (not sure if that's with or without tessellation). That would be a great test case


> I know this is supposed to look good but it just looks so fake. The rendering on show is fantastic but it’s just so sterile. Walking on a gravel path, no dust, no rocks moving around. No wind, no trees moving, wildlife is missing, etc. I could go on and on. The closer we move towards hyper realism, the more things stick out.

Agreed, and most of these UE5 graphics demos all look pretty much the same. This is one of the more impressive ones I've seen that definitely feels more alive:

> Nanite is only necessary because NVIDIA is feeding us raytracing improvements in tiny bites and everyone follows them slavishly.
>
> It makes very little sense to make shitty fixed function hardware for it on top of the shitty fixed function raytracing.

I am not exactly seeing the connection here. What does Nanite's existence have to do with fixed function hardware for RT? The OG Nanite was made in lieu of the existence of FF HW for RT. (The original demo is software Lumen.)

If you look at HVVR, the need for hybrid rendering is near over. I suspect with shader instructions for triangle intersections, you could already raycast into a nanite type hierarchy at around the same speed as rasterization. What you lose in coherence you gain in occlusion.

Raytracing is the future, which should be the present, trying to make fixed function hardware to accelerate the last hurrah of hybrid rendering doesn't make sense.

> Raytracing is the future, which should be the present, trying to make fixed function hardware to accelerate the last hurrah of hybrid rendering doesn't make sense.
>
> Nanite is only necessary because NVIDIA is feeding us raytracing improvements in tiny bites and everyone follows them slavishly.
>
> It makes very little sense to make shitty fixed function hardware for it on top of the shitty fixed function raytracing.

That would require hardware to leap even further ahead of software than it is today. The consoles and major game engines are still firmly rooted in raster.

Even the most intense RT games are still rasterizing primary visibility. I would take less ambitious improvements to both RT and raster over a moon shot that will just result in a ton of wasted transistors that nobody can or will use.

DavidGraham

Veteran

Skeletal meshes with Nanite give a 2x to 4x increase in fps compared to skeletal meshes without Nanite, in this special demo.


> If you look at HVVR, the need for hybrid rendering is near over.

I mean I hope so, but the timeline of that remains pretty unclear IMO. Furthermore even in that brave new world where we do RT for all visibility queries, you still need large parts of Nanite; the need for all the mesh simplification, LOD and streaming stuff does not disappear with RT.

> I suspect with shader instructions for triangle intersections, you could already raycast into a nanite type hierarchy at around the same speed as rasterization.

GPUs still need some architectural work before doing anything that pops back to shaders in the middle of ray traversal is not a large perf hit.

That's really beside the point though. As I've repeatedly mentioned, ray cast performance is not even the issue that currently prevents Nanite RT from being feasible, it's primarily acceleration structure creation and update (which is tied to memory footprint and a bunch of other things). Under the current APIs, it is far too slow to be able to accomplish something at the level of detail and streaming that Nanite does. A lot of this could likely be improved with API changes even with current hardware, but I suspect some amount of fixed-function acceleration for BVH creation will be desirable in the future if things indeed go this route.

But of course the content is not a fixed thing either. As raytracing moves the needle of the amount and complexity of content it can handle, in parallel the art departments are pushing those limits further away as well. Assuming the BVH stuff gets improved it's very plausible to imagine rigid Nanite-level geometry being raytraced, but of course we've already moved on to semi-deformable stuff and alpha test, which Nanite doesn't love but raytracing loves even less. And of course 5.4 adds tessellation and displacement, which will definitely need some work on the hardware RT side if the goal is to raytrace primary and shadow rays.

All that said, I agree that it's not totally clear that it's worth the effort of adding any more fixed function rasterization hardware specifically for stuff like Nanite, particularly since it already works pretty well in most cases, and the cases where it doesn't are really not due to stuff like the use of atomics and other low hanging fruit for optimization.
