Unreal Engine 5, [UE5 Developer Availability 2022-04-05]

- Thread starter mpg1

- Start date

Deleted member 2197

Guest

I know it will be included as a feature of UE5, but where does it say this presentation is using TSR?

This is using AMD Temporal super resolution

https://community.amd.com/t5/blogs/...s-in-unreal-engine-5-early-access/ba-p/473407

Something about the GI lighting doesn't look quite as impressive as last year's demo, imo.

Yup!

Downloading it now.

Would love to hear hardware specs and experiences from people trying it so far.

yea i'm struggling here with downloading it. For some reason it's trying to cache it on my C:\ before moving it to my desired directory. I can't install it because my C:\ is incredibly small compared to the rest of my drives. sigh... it's > 50% of my C:. Moving all my libraries and shit off just to download this. So frustrating, I want a new drive now.

The raw source of the project is 100 GB without any compression. This is huge.

https://docs.unrealengine.com/5.0/en-US/RenderingFeatures/Nanite/

Some number for Nanite tech assets size on the page

How does Nanite work?

Nanite integrates as seamlessly as possible into existing engine workflows, while using a novel approach to storing and rendering mesh data.

Since Nanite relies on the ability to rapidly stream mesh data from disk on demand, Solid State Drives (or SSDs) are recommended for runtime storage.

- During import: meshes are analyzed and broken down into hierarchical clusters of triangle groups.

- During rendering: clusters are swapped on the fly at varying levels of detail based on the camera view, and connect perfectly without cracks to neighboring clusters within the same object. Data is streamed in on demand so that only visible detail needs to reside in memory. Nanite runs in its own rendering pass that completely bypasses traditional draw calls. Visualization modes can be used to inspect the Nanite pipeline.
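To make "swapped on the fly at varying levels of detail based on the camera view" more concrete, here is a toy sketch of generic screen-space-error LOD selection. This is not Nanite's actual algorithm. The function names, the per-level error values, the 60 degree field of view, the 1440-pixel screen height, and the one-pixel threshold are all assumptions made up for illustration.

```python
import math

def projected_error_px(world_error, distance, fov_y_deg=60.0, screen_height_px=1440):
    """Approximate on-screen size, in pixels, of a world-space error seen at `distance`."""
    # Pixels covered by one world unit at a distance of one unit (perspective projection).
    scale = screen_height_px / (2.0 * math.tan(math.radians(fov_y_deg) / 2.0))
    return world_error * scale / max(distance, 1e-6)

def pick_level(level_errors, distance, max_error_px=1.0):
    """Return the coarsest level whose projected error stays within max_error_px.

    level_errors[i] is the hypothetical world-space error of level i, where
    level 0 is full detail and errors grow with each coarser level.
    """
    chosen = 0
    for level, error in enumerate(level_errors):
        if projected_error_px(error, distance) <= max_error_px:
            chosen = level
    return chosen

# Hypothetical error values for four levels of one cluster hierarchy.
errors = [0.0, 0.01, 0.05, 0.25]
for d in (10.0, 100.0, 1000.0):
    print(f"distance {d:>6}: level {pick_level(errors, d)}")
```

The further the camera is from a cluster, the coarser the level that still stays under the pixel threshold, so less detail needs to be resident in memory.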

What Types of Meshes Should Nanite Be Used For?

Nanite should generally be enabled wherever possible. Any Static Mesh that has it enabled will typically render faster, and take up less memory and disk space.

More specifically, a mesh is a good candidate for Nanite if it:

- Contains many triangles, or has triangles that will be very small on screen

- Has many instances in the scene

- Acts as a major occluder of other Nanite geometry

An example of an exception to these rules is something like a sky sphere: its triangles will be large on screen, it doesn't occlude anything, and there is only one in the scene.

Performance of Typical Content

For comparison purposes, the following GPU timings were taken from the PlayStation 5 Unreal Engine 5 technical demo Lumen in the Land of Nanite:

- Average render resolution of 1400p temporally upsampled to 4K.

- ~2.5 milliseconds (ms) to cull and rasterize all Nanite meshes (which was nearly everything in this demo)

  - Nearly all geometry used was a Nanite mesh

  - Nearly no CPU cost since it is 100% GPU-driven

- ~2ms to evaluate materials for all Nanite meshes

  - Small CPU cost with 1 draw call per material present in the scene.

When considering these GPU times together, it's approximately 4.5ms combined for what would be equivalent to Unreal Engine 4's depth prepass plus the base pass. This makes Nanite well-suited for game projects targeting 60 FPS.
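The combined 4.5 ms figure and the 60 FPS claim can be checked with some quick arithmetic. This is a minimal sketch using only the two timings quoted above. The variable names are made up for illustration.

```python
def frame_budget_ms(fps):
    """Time available per frame, in milliseconds, at a given frame rate."""
    return 1000.0 / fps

cull_raster_ms = 2.5   # cull and rasterize all Nanite meshes (quoted above)
material_ms = 2.0      # evaluate materials for all Nanite meshes (quoted above)

nanite_ms = cull_raster_ms + material_ms
budget_60_ms = frame_budget_ms(60)
share_of_frame = nanite_ms / budget_60_ms

print(f"Nanite total: {nanite_ms:.1f} ms")
print(f"60 FPS frame budget: {budget_60_ms:.2f} ms")
print(f"Share of a 60 FPS frame: {share_of_frame:.0%}")
```

Under these numbers, Nanite takes a bit over a quarter of a 60 FPS frame, which leaves the rest for lighting, post-processing, and everything else.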

Numbers like these should be expected from content that doesn't suffer from the aforementioned performance pitfalls in previous sections. Very high instance counts and large numbers of unique materials can also cause increased costs and is an area of Nanite development that is being actively worked on.

Data Size

Because of the micro detail that Nanite is able to achieve, it might be assumed that it means a large increase in geometry data resulting in larger game package sizes and downloads for players. However, the reality isn't that dire. In fact, Nanite's mesh format is significantly smaller than the standard Static Mesh format because of Nanite's specialized mesh encoding.

For example, using the Unreal Engine 5 sample Valley of the Ancients, Nanite meshes average 14.4 bytes per input triangle. This means an average one million triangle Nanite mesh will be ~13.8 megabytes (MB) on disk.
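As a rough check of that figure, the sketch below multiplies the quoted 14.4 bytes per triangle by one million triangles. The function name is made up. The reading that "MB" here means 1024 x 1024 bytes is an assumption, made because that is the conversion that lands near the quoted number.

```python
BYTES_PER_TRIANGLE = 14.4  # average quoted for Valley of the Ancients

def nanite_mesh_size_bytes(triangles, bytes_per_triangle=BYTES_PER_TRIANGLE):
    """Estimated on-disk size of a Nanite mesh from its input triangle count."""
    return triangles * bytes_per_triangle

size_bytes = nanite_mesh_size_bytes(1_000_000)
size_mb_decimal = size_bytes / 1_000_000   # 10^6 bytes per MB
size_mb_binary = size_bytes / (1024 ** 2)  # 2^20 bytes per MB

print(f"{size_mb_decimal:.2f} MB (decimal), {size_mb_binary:.2f} MB (binary)")
```

The binary conversion gives roughly 13.7, close to the ~13.8 MB in the docs. The decimal conversion gives 14.4.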

Comparing a traditional low poly mesh plus its Normal map to a high poly Nanite mesh, you would see something like:

Low Polygon Mesh

Static Mesh Compressed Packaged Size: 1.34MB

- Triangles: 19,066

- Vertices: 10,930

- Num LODs: 4

- Nanite: Disabled

Nanite Mesh

Static Mesh compressed package size: 19.64MB

- Triangles: 1,545,338

- Vertices: 793,330

- Num LODs: n/a

- Nanite: Enabled

Low Polygon Static Mesh with 4k Normal Map

High Poly Static Mesh with 4k Normal Map

The compressed package size isn't the entire size of the asset though. There are also unique textures only used by this mesh that have to be accounted for. Many of the materials used by meshes have their own unique textures made up of different Normal, BaseColor, Metallic, Specular, Roughness, and Mask textures.

This particular asset only uses two textures (BaseColor and Normal) and thus is not as costly on disk space as one with many other unique textures. For example, note that the Nanite mesh with ~1.5 million triangles is smaller (at 19.64MB) than a 4k normal map texture is.

| Texture Type | Texture Size | Size on Disk |
| --- | --- | --- |
| BaseColor | 4k x 4k | 8.2MB |
| Normal | 4k x 4k | 21.85MB |

The total compressed package size for this mesh and its textures is:

- Low Poly Mesh: 31.04MB

- High Poly Mesh: 49.69MB

Because the Nanite mesh is very detailed already we can try replacing the unique normal map with a tiling detail normal that is shared with other assets. Although this results in some loss in quality in this case, it is fairly small and certainly much smaller than the difference in quality between the low and high poly version. So a 1.5M triangle Nanite mesh can both look better and be smaller than a low poly mesh with 4k normal map.

Total compressed package size for the Nanite-enabled mesh and textures: 27.83MB
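The totals can be roughly reproduced by adding up the component sizes listed earlier. This is only a sketch. It assumes each total is just mesh plus unique textures, and that the shared tiling detail normal is not counted against this asset. Neither assumption is confirmed by the quoted text.

```python
# Component sizes in MB, as listed in the quoted documentation.
LOW_POLY_MESH = 1.34
NANITE_MESH = 19.64
BASECOLOR_4K = 8.2
NORMAL_4K = 21.85

low_poly_total = LOW_POLY_MESH + BASECOLOR_4K + NORMAL_4K
high_poly_total = NANITE_MESH + BASECOLOR_4K + NORMAL_4K
# Assumption: the shared detail normal is not charged to this asset.
nanite_detail_total = NANITE_MESH + BASECOLOR_4K

print(f"Low poly + unique textures:   {low_poly_total:.2f} MB")
print(f"High poly + unique textures:  {high_poly_total:.2f} MB")
print(f"Nanite + shared detail normal: {nanite_detail_total:.2f} MB")
```

The high poly sum matches the quoted 49.69MB, and the Nanite sum is within rounding of the quoted 27.83MB. The low poly sum comes out at 31.39MB rather than the quoted 31.04MB. The quoted text does not explain that gap.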

High Poly Static Mesh with 4k Normal Map

Nanite Mesh with 4k Detail Normal Map

There are plenty of experiments that can be done with texture resolution and detail normal maps, but this particular comparison is to demonstrate that the data sizes of Nanite meshes are not too dissimilar from data that artists are already familiar with.

Lastly, we can compare the Nanite compression to the standard Static Mesh format using the high poly, where both are identical at LOD0.

High Poly Static Mesh

Static Mesh Compressed Packaged Size: 148.95MB

- Triangles: 1,545,338

- Vertices: 793,330

- Num LODs: 4

- Nanite: Disabled

Nanite Mesh

Static Mesh compressed package size: 19.64MB

- Triangles: 1,545,338

- Vertices: 793,330

- Num LODs: n/a

- Nanite: Enabled

At 19.64MB, the Nanite mesh from earlier is 7.6x smaller than the same mesh in the standard Static Mesh format with 4 LODs (148.95MB).
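The 7.6x figure follows directly from the two package sizes quoted above. Here is a quick sketch of the arithmetic, with made-up variable names.

```python
static_mesh_mb = 148.95  # high poly Static Mesh, 4 LODs, Nanite disabled
nanite_mesh_mb = 19.64   # same mesh with Nanite enabled

ratio = static_mesh_mb / nanite_mesh_mb
saving = 1.0 - nanite_mesh_mb / static_mesh_mb

print(f"Nanite is {ratio:.1f}x smaller, a {saving:.0%} reduction on disk")
```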

Nanite compression and data sizes are a key area that will be improved in future releases of Unreal Engine.

The Raw source of the project is 100 GB without any compression. This is huge.

Any quick or dirty math on how compressed sizes would look using PS5/XBSX respective compression techniques?

This is the size of the demo in the editor. It has nothing to do with the size at runtime. These are the raw assets, not the format Nanite uses, and compression comes after that. The runtime size is probably much smaller.

Here you have the original multi-million-polygon assets.

Here they compare asset size against a low-poly asset with a high-quality 4k normal map and 4 LODs, and Nanite wins.

https://docs.unrealengine.com/5.0/en-US/RenderingFeatures/Nanite/

An example

For example, using the Unreal Engine 5 sample Valley of the Ancients, Nanite meshes average 14.4 bytes per input triangle. This means an average one million triangle Nanite mesh will be ~13.8 megabytes (MB) on disk.

EDIT: They will explore ways to improve data size on disk.

General Advice on Data Size and a Look to the Future

Nanite and Virtual Texturing systems, coupled with fast SSDs, have lessened concern over runtime budgets of geometry and textures. The biggest bottleneck now is how to deliver this data to the user.

Data size on disk is an important factor when considering how content is delivered — on physical media or downloaded over the internet — and compression technology can only do so much. Average end user's internet bandwidth, optical media sizes, and hard drive sizes have not scaled at the same rate as hard drive bandwidth and access latency, GPU compute power, and software technology like Nanite. Pushing that data to users is proving challenging.

Rendering highly detailed meshes efficiently is less of a concern with Nanite, but storage of its data on disk is now the key area that must be kept in check. Outside of compression, future releases of Unreal Engine should see tools to support more aggressive reuse of repeated detail, and tools to enable trimming data late in production to get package size in line, allowing art to safely overshoot their quality bar instead of undershoot it.

Looking to the future development of Nanite, many parallels can be drawn to how texture data is managed that has had decades more industry experience to build, such as:

- Texture tiling

- UV stacking and mirroring

- Detail textures

- Texture memory reports

- Dropping mip levels for final packaged data

Similar strategies are being explored and developed for geometry in Unreal Engine 5.

yea i'm struggling here with downloading it. For some reason it's trying to cache it on my C:\ before moving it to my desired directory. I can't install it because my C:\ is incredibly small compared to the rest of my drives. sigh... it's > 50% of my C:. Moving all my libraries and shit off just to download this. So frustrating, I want a new drive now.

I haven't had that problem, but Epic servers are somewhat slow today for obvious reasons. Usually, I can do 80-110MB/s easily any other day, but now...

https://www.resetera.com/threads/unreal-engine-5-dev-workflow-stream-today-5-26.431627/post-65781908

gofreak on Era packaged and built the demo, and the size is 24.8 GB.

Deleted member 86764

Guest

INTERESTING

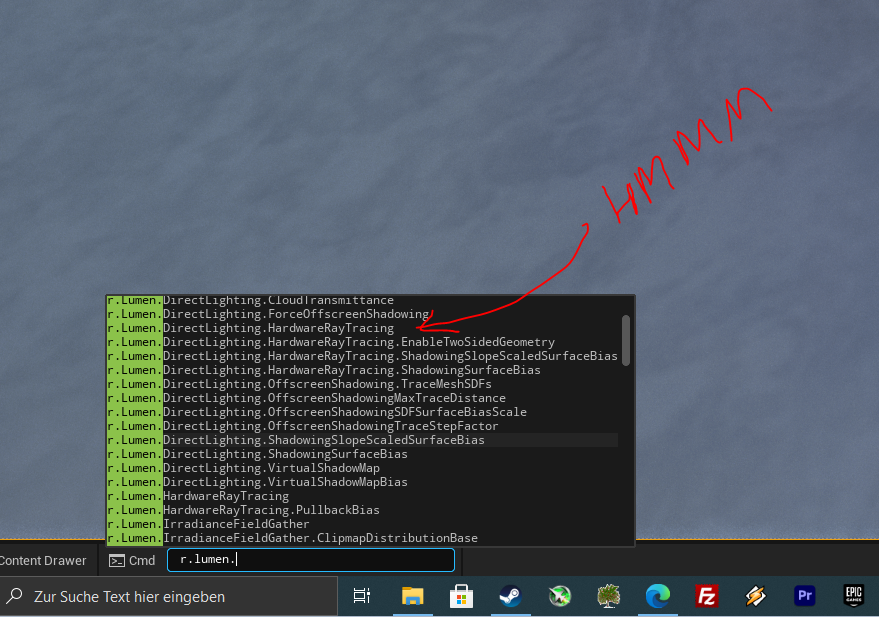

looking through all the r.lumen commands is interesting. The specular reflections are pretty rough as I am seeing right now, but pretty interesting!

*Waiting for your video analysis*

It's resolved: I moved the cache directory off C:\ and I can finally download. lol

I haven't had that problem, but Epic servers are somewhat slow today for obvious reasons. Usually, I can do 80-110MB/s easily any other day, but now...

https://www.resetera.com/threads/unreal-engine-5-dev-workflow-stream-today-5-26.431627/post-65781908

gofreak on Era packaged and built the demo, and the size is 24.8 GB.

Maybe that explains 64GB RAM needed for pc. PC could load the whole thing into ram and avoid using ssd/decompression... Interesting to see how ram requirement changes once directstorage is available and integrated into ue5.

https://docs.unrealengine.com/5.0/en-US/RenderingFeatures/Lumen/TechOverview/

Lumen details and performance on Xbox Series X and PS5. This is the costly part of the rendering, not Nanite.

Lumen's Global Illumination and Reflections primary shipping target is to support large, open worlds running at 60 frames per second (fps) on next-generation consoles. The engine's High scalability level contains settings for Lumen targeting 60 fps.

Lumen's secondary focus is on clean indoor lighting at 30 fps on next-generation consoles. The engine's Epic scalability level produces around 8 milliseconds (ms) on next-generation consoles for global illumination and reflections at 1080p internal resolution, relying on Temporal Super Resolution to output at quality approaching native 4k.
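To put the quoted ~8 ms in context, the sketch below compares it to a 30 fps frame budget and works out how many more pixels a 4K output has than a 1080p internal resolution. The pixel dimensions (1920x1080 and 3840x2160) are the usual definitions of those terms and are assumed here, since the quoted text does not spell them out.

```python
lumen_ms = 8.0                 # GI + reflections at Epic scalability (quoted above)
frame_budget_30_ms = 1000.0 / 30

lumen_share = lumen_ms / frame_budget_30_ms

# Assumed pixel dimensions for "1080p" and "4k".
internal_pixels = 1920 * 1080
output_pixels = 3840 * 2160
upscale_factor = output_pixels / internal_pixels

print(f"Lumen uses about {lumen_share:.0%} of a 30 fps frame")
print(f"TSR has to produce {upscale_factor:.0f}x the pixels it is given")
```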

I assume they mean 60 fps is also 1080p there?

I suppose so too.

They changed the technology: no voxels, just detailed SDFs for individual meshes for static geometry up to 200 m. Details at greater distances use screen space GI.

Screen space GI is used for the character too.

EDIT: they also use screen space for details lost in SDF tracing.

https://docs.unrealengine.com/5.0/en-US/RenderingFeatures/Lumen/TechOverview/ - this is probably the most interesting section of the documentation. Just finished reading it.

looking through all the r.lumen commands is interesting. The specular reflections are pretty rough as I am seeing right now, but pretty interesting!

Yes, they do support HW RT GI for all types of geometry via the low poly proxies. Moreover, they claim that it's a better quality solution overall because the quality of proxies can be adjusted and it works for all geometry. SDF based Lumen has a lot of limitations and simplifications

They make it sound like HW-RT is a lot slower than their software solution, which kind of defeats the purpose of having HW-RT at all.

https://docs.unrealengine.com/5.0/en-US/RenderingFeatures/Lumen/TechOverview/ - this is probably the most interesting section of the documentation. Just finished reading it.

Yes, they do support HW RT GI for all types of geometry via the low poly proxies. Moreover, they claim that it's a better quality solution overall because the quality of proxies can be adjusted and it works for all geometry. SDF based Lumen has a lot of limitations and simplifications

Sure it might look a bit better, but most people won't notice anyway, as Lumen looks more than good enough. They should focus on having HW-RT accelerate Lumen.
