Interesting. Wonder why it's so much better than 680-SLI. Maybe I'll get one of these to kick around until the real Kepler shows up.

Lower-latency inter-GPU communication?

Another micro-stutter investigation from HT4U.net:

http://ht4u.net/reviews/2012/nvidia_geforce_gtx_690_im_test/index3.php

Good to see sites investigate this.

Kaotik said: Hmh, 300W limit? It has 2x8-pin, so it's not following the limitations, and the real-life consumption (according to TPU) is about the same as the 6990. (Sure, it's under 300W, but TDP != consumption.)

So here's a card that, according to AnandTech, consumes 100 to 120W less than the GTX 590 at load, yet retains the same power supply capacity. IOW: a large safety margin over what's strictly required.

And somehow you consider that bad? What do you suggest they do instead? Remove those 2 GND pins to get 8+6-pin connectors and reduce the operating margin? Just to satisfy a footnote in the PCIe specification that's not even required to pass certification?
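To put rough numbers on the 2x8-pin vs 8+6-pin argument, here's a quick sketch. It assumes the commonly cited nominal ratings (75W from the x16 slot, 75W per 6-pin, 150W per 8-pin); these are nominal delivery limits, not measured draw.

```python
# Nominal PCIe power ratings in watts (assumed commonly cited values).
SLOT_W = 75
CONNECTOR_W = {"6-pin": 75, "8-pin": 150}


def board_power_budget(connectors: list[str]) -> int:
    """Nominal power available to a card: slot plus auxiliary connectors."""
    return SLOT_W + sum(CONNECTOR_W[c] for c in connectors)


print(board_power_budget(["8-pin", "8-pin"]))  # 2x8-pin, as on the card
print(board_power_budget(["8-pin", "6-pin"]))  # 8+6-pin, the 300W configuration
```

So dropping to 8+6 would trim the nominal budget from 375W to 300W without changing what the card actually draws; it only shrinks the headroom.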

http://www.newegg.ca/Product/Product.aspx?Item=N82E16814130781

Wonder if we'll ever see one for anything near $999. On the bright side, you can buy one.

Maybe I'll get one of these to kick around until the real Kepler shows up.

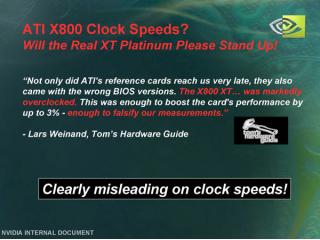

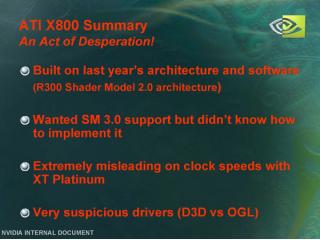

Tridam's just published an article about GPU Boost, revisited: http://www.hardware.fr/focus/65/gpu-boost-gtx-680-double-variabilite.html

Basically, his press sample was qualified up to 1110MHz while retail cards may be limited to 1097, 1084, 1071, or perhaps as low as 1058MHz. So he benched a random retail card against his press sample and measured a 1.5% difference on average, up to 5% in Anno 2070.

Reminds me of when Tom's Hardware got an X800XT-PE sample with memory clocked at 575MHz instead of 560MHz...
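A quick sanity check on the clock figures from the hardware.fr piece: the retail limits sit in even 13MHz steps below the press sample's 1110MHz, so the worst case listed is under 5% on clock alone. This is only the max-boost clock gap; the names below are made up for illustration, and actual boost behaviour also depends on load and power, so benchmark deltas won't track it exactly.

```python
# Max boost clocks quoted in the hardware.fr article (MHz).
PRESS_SAMPLE_MHZ = 1110
RETAIL_MAX_MHZ = [1097, 1084, 1071, 1058]


def clock_deficit_pct(press: int, retail: int) -> float:
    """Percentage by which a retail card's max boost clock trails the press sample."""
    return (press - retail) / press * 100


# Gap between each quoted clock and the one above it.
steps = [hi - lo for hi, lo in zip([PRESS_SAMPLE_MHZ] + RETAIL_MAX_MHZ, RETAIL_MAX_MHZ)]

for mhz in RETAIL_MAX_MHZ:
    pct = clock_deficit_pct(PRESS_SAMPLE_MHZ, mhz)
    print(f"{mhz}MHz: {pct:.1f}% below the press sample")
```

That prints 1.2%, 2.3%, 3.5% and 4.7% for the four retail limits.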