Xbox 360 Direct3D Performance Update *changed

- Thread starter Arctic Permafrost

Can anyone expand on these slides, please? GPU clipping twice as fast as before? AutoZ? A 3.2 GB/s (84 times) increase for the shader compiler? (Hope these slides are not too big.)

Nothing to see here, move along

Nice improvements, probably more to come. Nothing like "OMG teh hidden power is teh unl0cked, ph34r".

Clipping is twice as fast, yes, but it's far from being a bottleneck.

AutoZ is something that would make Z-only passes marginally faster by bundling normal and z-only shaders together, reusing things like shader constants between them.

The 84x faster slide is not about the shader compiler, but the normal C/C++ compiler. It shows how to avoid getting into a pathologically slow (84x slower) memory write pattern.

Here's some additional information. Master Disaster couldn't post because he's banned for some reason. Here you go:

Could some developers give us the low-down on what all this stuff means??

MasterDisaster said: http://www.larabie.net/xbox360/360PerformanceUpdate.jpg

http://www.larabie.net/xbox360/PredicatedTilingPerf.jpg

http://www.larabie.net/xbox360/ShaderPerfFix.jpg

Here's the text from *some* of the slides:

--------------------------------------

AutoZ Vertex Shaders

Compiles vertex shaders into 2 versions bundled together:

One version outputs position only

Other version does all outputs

D3D automatically chooses the appropriate version when loading shader

Results are guaranteed identical (no Z fighting)

2 versions are smaller than can be done manually since constants are shared

AutoZ & BeginZPass/EndZPass

This combination gets you:

An optimized GPU Z pre-pass

Double-speed fill

Optimized Z pre-pass vertex shaders

With no CPU cost

And free bounds determination for predicated tiling

Predicated Tiling Perf

D3D’s tiling perf has steadily improved:

Compiler makes more efficient vertex shaders

E.g., too many vfetches often made vertex shaders vfetch-bound, hurting tiling disproportionately

GPU’s clipping configured to be twice as fast

Support for D3DTILING_ONE_PASS_ZPASS

One Z pre-pass works for all tiles

AutoZ means BeginZPass/EndZPass is faster

Good because tile patching now done at end of first Z pre-pass

Predicated Tiling Perf

Before evaluating tiling perf, don’t forget to:

Use D3DCREATE_BUFFER_2_FRAMES

...and so also double size of secondary ring buffer

Check for bad CPU/GPU synchronization via

% Frame GPU Wasted counter in PIX

Note that Tiling can save memory:

E.g., 1280×720×32bpp at 4× multisampling

28 MB on traditional architecture

10 MB EDRAM + 3.5 MB extra front buffer + ≈2.5 MB extra secondary buffer = 16 MB with tiling
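The memory figures on this slide can be checked with a little arithmetic. The sketch below is only a sanity check under two assumptions that the slide does not state: that "28 MB" counts a 4× multisampled 32-bit colour buffer plus a 4× multisampled 32-bit depth/stencil buffer, and that "MB" means 2^20 bytes. The function and variable names are illustrative.

```python
MIB = 1024 * 1024  # assumption: the slide's "MB" means 2**20 bytes


def surface_mib(width, height, bytes_per_pixel, samples=1):
    """Size of one render surface in MiB."""
    return width * height * bytes_per_pixel * samples / MIB


# Assumed breakdown of the "traditional" figure:
# colour + depth/stencil, both 32 bits per sample at 4x multisampling.
color_4x = surface_mib(1280, 720, 4, samples=4)
depth_4x = surface_mib(1280, 720, 4, samples=4)
traditional = color_4x + depth_4x

# Tiled figure: the EDRAM and secondary-buffer sizes are taken from the slide,
# the front buffer is computed as a resolved, single-sample 32bpp surface.
front_buffer = surface_mib(1280, 720, 4)
tiled = 10.0 + front_buffer + 2.5

print(f"traditional:  {traditional:.1f} MiB")
print(f"front buffer: {front_buffer:.1f} MiB")
print(f"tiled:        {tiled:.1f} MiB")
```

Under those assumptions the totals come out at roughly 28 and 16, matching the slide.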

GpuLoadShaders

Allows shader loads to be predicated

Useful with BeginZPass/EndZPass:

GpuLoadShaders allows simpler shader substitution during Z pass

Useful with command buffers:

A single command buffer can potentially encode multiple different passes

Keep using SetVertex/PixelShader for normal rendering

Have to GpuOwn literal constants

Not faster than regular APIs

Memory Performance Moral

Write-combining tips:

__storewordupdate, __storefloatupdate ensure proper ordering

volatile does, too, but can introduce suboptimal code gen

Always look at generated assembly

Beware of memcpy

Consider switching to cacheable

WC was 41 MB/s before, 3.4 GB/s after

Cacheable was 310 MB/s before, 1.0 GB/s after

Cacheable is more forgiving
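For a sense of scale, the before/after bandwidth numbers on this slide can be turned into speedup ratios. This is a rough sketch: the slide does not say whether 1 GB/s means 1000 or 1024 MB/s, so both conventions are computed, and the names are illustrative.

```python
def speedup(before_mb_s, after_gb_s, mb_per_gb):
    """Ratio of the 'after' bandwidth to the 'before' bandwidth."""
    return (after_gb_s * mb_per_gb) / before_mb_s


# Figures quoted on the slide: (before in MB/s, after in GB/s).
figures = {
    "write-combined": (41, 3.4),
    "cacheable": (310, 1.0),
}

results = {}
for name, (before, after) in figures.items():
    # The unit convention is an assumption, so report both.
    results[name] = (speedup(before, after, 1000), speedup(before, after, 1024))
    low, high = results[name]
    print(f"{name}: {low:.1f}x to {high:.1f}x")
```

Either way the write-combined case lands in the low-to-mid 80s, which is consistent with the "84x" figure discussed earlier in the thread, while the cacheable case improves by a little over 3x.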

Saving Memory

Read white paper “Xbox 360 Texture Storage”

Then use new APIs when you bundle your textures:

XGAddress2D/3DTiledExtent to get true allocation size

XGGetTextureLayout to enumerate unused portions that can be used for other stuff

XGSet[Cube|Array]TextureHeaderPair to pack two 128×128 (or smaller) textures in the same space required for one

Good Swap Synchronization

Use D3DCREATE_BUFFER_2_FRAMES to allow CPU 1 frame ahead of GPU

And resize ring buffer appropriately via D3DPRESENT_PARAMETERS::RingBufferParameters

Let D3D do swap throttling

Double buffer all dynamic per-frame resources

--------------------------------------

I know this is going to hurt some people's feelings, but one of these days it needs to be said at least once.

If there needs to be a software update to tweak the load-balancing characteristics of Xenos, it can only mean that the thing is fundamentally broken.

Wow, so GPUs are always fundamentally broken.

Ditto consoles that get performance and feature updates to the OS and APIs years after release.

Fact: Xenos, from day 1, allowed developers to program threading. Streamlining the API and compilers to get better performance, especially out of chips with newer architectures, is not an indication of something being broken at all.

Unified shading's key benefit is automatic load balancing. Shuffling around of compute resources on the fly, at any time, which specifically has to include mid-batch. Software can't do this. The hardware must manage itself. If it doesn't do that, the effort is wasted. If Xenos doesn't do that, well, that's ... bad.

The hardware... does do that. And from day 1 it was noted developers can get their hands dirty with algorithms tailored to their specific criteria (wow, control is now bad?). But as a dev here said, he was guesstimating less than 5% gain from doing such.

The fact you constantly drive at these same fundamental points, with no evidence, really clears things up. Thanks.

EDIT: @ the OP: Can we stop with the sensational, misleading titles? Gamerfest was a while back, already discussed, and while the 360 will get updates, this is not uncommon for consoles. Things will become more efficient and streamlined, problems will be identified and some resolved, new features revealed, etc... This is normal. Nothing is gonna change, fundamentally, what the 360 is. The major gains in software in the next 2 years will be driven by games being designed with the platform in mind (or as target platform) with dev kits from the start and a working knowledge of the platform instead of blind stabbing.

"The hardware... does do that. And from day 1 it was noted developers can get their hands dirty with algorithms tailored to their specific criteria (wow, control is now bad?). But as a dev here said, he was guesstimating less than 5% gain from doing such."

Well, it was an advantage, if not the advantage, that load balancing is done automatically to always get 100% utilization. If you must constantly tweak it to get the best possible performance, then there really is no advantage over not having it at all imo, since you also have to tweak loads on non-US hardware ... but maybe I misunderstand that a bit here.

"Wow, so GPUs are always fundamentally broken."

You want to discuss or just be a venting jerk? If you want to discuss stuff, please don't pretend I've written stuff I haven't written. Then you'll get a proper reply.

Acert93 said: The fact you constantly drive at these same fundamental points, with no evidence, really clears things up. Thanks.

Wtf! Back off now. Whatever you think I've written, you have got the wrong man. Seriously. My posting history is open for searches.

"Now we're getting somewhere. We finally have official confirmation from MS themselves of unlocking the full power of the Xbox 360 GPU instead of some random forum post. Interesting...."

To be precise, they improved the performance of some elements of the D3D API and the HLSL compiler from the XeDK.

Nothing has been locked away from developers, per se.

It's just that now developers won't have to use low-level optimisations to get better performance in the aforementioned cases. In other words, it simplifies the life of X360 developers some more, which is good news for them.

That's one of the biggest differences between MS and some other console manufacturers: their tools are great, perform well and are constantly updated. No need to worry about having to do tons of optimisations to get the most out of the hardware. And if you want to optimise your code some more, MS provides powerful profiling tools to help you with the task.

On the other side of the spectrum, some developers have to deal with, let's say, slightly bugged compilers, which require code with a good chunk of ASM if you want any form of good performance. Add to that a still-WIP IDE integration... (That other console manufacturer is not Nintendo, in case someone wanted to point out that Nintendo's tools are great.)

MS definitely deserves some props for that.

"Well, it was an advantage, if not the advantage, that load balancing is done automatically to always get 100% utilization. If you must constantly tweak it to get the best possible performance, then there really is no advantage over not having it at all imo, since you also have to tweak loads on non-US hardware ... but maybe I misunderstand that a bit here."

1. ATI never claimed 100% ALU utilization. They actually claimed 95% ALU utilization for unified shaders and 50-70% ALU utilization for ATI's traditional designs.

2. You have to tweak your game code to get the best performance possible out of any GPU. Just because you have to do such doesn't mean that something new is "worthless" or broken. Hypothetically, tweaking gets you from 85% to 90% where another architecture requires tweaking to get from 50% to 70%, just because the later got a 40% increase doesn't mean it has better utilization.

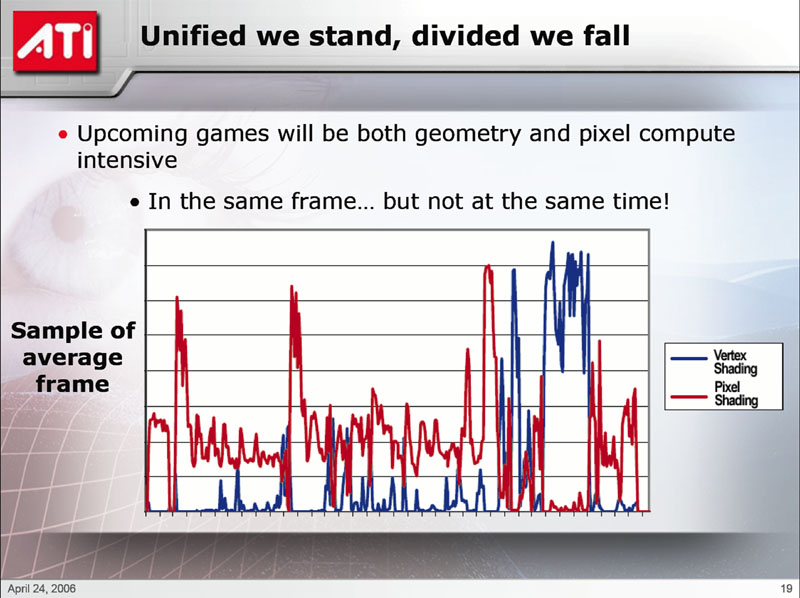

3. What maybe has not been understood through words, maybe a chart will suffice:

This graph was actually swiped from a B3D member who was doing some profiling (this is supposed to be a typical "frame" in a typical game). YMMV. Every game will be different (and every game will vary within itself from level to level, part of level to the next, frame to frame, and even within a frame itself) but the fact is it seems that you can never get 100% utilization of GPU ALUs. There will be times when you are VS bound and other times when you are PS bound.

Currently, when GPUs are VS bound it is a big hit to performance. E.g., take an R580. When VS bound, the 48 pixel shaders are idle while the 8 vertex shaders are pushed to the max. Now, we do know there is some threading overhead (one of the reasons ATI didn't claim 100% utilization), but from day 1 it was noted that developers have control. Even then it was noted that the overhead wasn't very bad and that gains from tweaking would most likely be in the 5% range in many cases.
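The R580 example can be put into a toy model. The sketch below is only an illustration, not a description of how any real GPU schedules work: it assumes vertex and pixel work are measured in the same abstract units, that every shader unit retires one unit of work per cycle, and that a hypothetical unified design pools the same 56 units with no scheduling overhead. The workload numbers and function names are made up for the example.

```python
def fixed_split_cycles(vertex_work, pixel_work, vs_units=8, ps_units=48):
    """Cycles needed when vertex and pixel work run on dedicated units."""
    return max(vertex_work / vs_units, pixel_work / ps_units)


def unified_cycles(vertex_work, pixel_work, units=56):
    """Cycles needed when any unit can take either kind of work (idealised)."""
    return (vertex_work + pixel_work) / units


def utilization(vertex_work, pixel_work, cycles, units=56):
    """Fraction of available unit-cycles that did useful work."""
    return (vertex_work + pixel_work) / (cycles * units)


# Hypothetical workloads: (vertex work, pixel work) in abstract units.
workloads = {
    "vertex-heavy": (800, 480),
    "matches the 8:48 split": (80, 480),
}

for name, (v, p) in workloads.items():
    fixed = fixed_split_cycles(v, p)
    unified = unified_cycles(v, p)
    print(f"{name}: fixed {fixed:.1f} cycles "
          f"({utilization(v, p, fixed):.0%} busy), "
          f"unified {unified:.1f} cycles "
          f"({utilization(v, p, unified):.0%} busy)")
```

In this idealised model the fixed split only keeps every unit busy when the workload happens to match its 8:48 ratio; any real unified design would fall short of the 100% shown here because of the threading overhead mentioned above.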

I still don't get where you are coming from when you say, "If you must constantly tweak it to get the best possible performance, than there really is no advantage over not having it at all imo, since you also have to tweak loads on a non US hardware". Mind explaining how, if tweaking is required anyway on traditional designs, tweaking is somehow bad on a USA design? As you say, you are already doing it... so what is the difference?
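The hypothetical percentages in point 2 above can be worked through directly. This is just the arithmetic behind that argument; the utilization figures are the hypothetical ones from the post, and the names are illustrative.

```python
def relative_gain(before, after):
    """Improvement expressed as a fraction of the starting utilization."""
    return (after - before) / before


# Hypothetical utilization before and after tweaking, from point 2.
unified_before, unified_after = 0.85, 0.90
traditional_before, traditional_after = 0.50, 0.70

unified_gain = relative_gain(unified_before, unified_after)
traditional_gain = relative_gain(traditional_before, traditional_after)

print(f"unified:     {unified_gain:.1%} gain, ends at {unified_after:.0%}")
print(f"traditional: {traditional_gain:.1%} gain, ends at {traditional_after:.0%}")
```

The traditional design shows the larger relative gain (40% against roughly 6%) yet still ends up with lower utilization, which is the point being made.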

Arctic Permafrost

Banned

"Right - because the primary/only objective of anything we implement in a game, is to advertise it in PR."

Because, on the one hand, I want to be aware of what's going on to some extent, and on the other hand, it's cool to extend my knowledge... To be clear, not to the point of getting saturated with info, because I like to mind my own business and don't like poking my nose into other folks'.

Some posts in this forum are truly cryptic to me, given that I don't understand a word of them. But concepts like HDR, tessellator, tiling, FSAA, AF, etc., are common knowledge and it's just neat to know if they are implemented in a game.

I'm not saying you should reveal secrets here; in real life I only do that with a select few people. Nor do I mean something like unveiling secrets and hidden levels of the game via microtransactions, like EA is going to do.

Or giving players 3 lives to start with and selling more lives if the need arises (microtransactions again). I prefer real, credible and easy-to-understand info.

________________________________________

"Unlucky in games, lucky in love"

Gamertag: Cyaneyes

Arctic Permafrost

Banned

"That doesn't mean there is anything that hasn't been 'yet revealed', as the topic subject line says. We've known about these features for a long time now, before the 360 was launched in fact; they're not new, and programmers have had access to them before now as well. Just because they're getting (better) integrated into Microsoft's software libraries doesn't mean it is suddenly new stuff. Quite the opposite."

X360 is something to take seriously; it isn't the old and crappy PS2. Yeah, PS2 games have improved since the debut of the console, but the PS2 was a dictatorial console. Xboxers got tired of PS2-based ports; we were suffering because of PS2 limitations (playability matters, but developers don't tend to take advantage of specific hardware when they create a multisystem game).

Limitations are unavoidable, software optimizations that take advantage of the hardware are not.

Thank god, we can now compare games fairly across similar systems. X360 is great, Sony is not charismatic enough for me but make no mistake about it, I am eternally grateful to PS3.

Xbox 360 is a very complex system; good software can improve performance by 40-50%. I guess the next XNA and dashboard update will improve performance of future X360 games by a 40-50% (or even 2 times) margin. Ideally, current XTS games should also be improved in some way by the update.
