So, why is he testing 4K?

He tests all 3 of the common resolutions, but 4K helps minimize CPU limitations.

Value of Hardware Unboxed benchmarking *spawn

- Thread starter Phantom88

The premise behind this thread is that HUB is biased towards AMD and possibly even puts out fake/altered benchmarks to make Nvidia look worse.

I never agreed to that. I said they are biased against ray tracing, which they are. They say it generally doesn't look improved enough to warrant the performance decrease. Digital Foundry looks at the same games and they generally conclude that it does. HUB biases their testing towards rasterization because they, and their audience, prefer the performance. Digital Foundry biases their testing towards ray tracing because they think the visuals are worth the performance, and are forward looking etc.

My stance is people need to stop trying to conclude that one of these perspectives is more correct than the other. People have different preferences and they're both fine. There's no objective way to settle the difference. Basically, find a reviewer that aligns with your subjective tastes and stick to that.

Or worse, choose a number of games that support RT but test them with RT disabled on $1000+ GPUs that are more than capable of playing them with RT enabled.

It's actually totally fine. Imagine a hypothetical reviewer that only wants maximum frame rate. They could review every game with ray tracing turned off. That's not an unfair test. They're just not testing ray tracing. If that's what they and their audience want, then they're actually doing their audience a service. Reviewers can have niches to cater to specific people. If you don't like it, don't read it/watch it. Find a reviewer that tests the things you want to see tested.

Edit: These GPUs aren't even strictly for gaming. Most reviews are heavily biased towards gaming, while video editing and Blender are only lightly covered. How many of the gaming sites do any kind of benchmarking with deep learning? It's totally reasonable to cater reviews towards personal interest and make recommendations based on how well something suits your own needs.

It's actually totally fine. Imagine a hypothetical reviewer that only wants maximum frame rate. They could review every game with ray tracing turned off. That's not an unfair test. They're just not testing ray tracing. If that's what they and their audience want, then they're actually doing their audience a service. Reviewers can have niches to cater to specific people. If you don't like it, don't read it/watch it. Find a reviewer that tests the things you want to see tested.

Edit: These GPUs aren't even strictly for gaming. Most reviews are heavily biased towards gaming, while video editing and Blender are only lightly covered. How many of the gaming sites do any kind of benchmarking with deep learning? It's totally reasonable to cater reviews towards personal interest and make recommendations based on how well something suits your own needs.

I do agree with this. But if this is the intention (and nothing wrong with that), it should be called out at the start. The problem with HUB is that they do mix in RT benchmarks - just generally ones that are more forgiving on AMD hardware, and they even call out the fact that they're using RT games in their suite as evidence that they're being balanced.

If the best possible performance was really their motivation (as opposed to trying to look balanced but choosing a very specific mix of games, tests, and settings which favour AMD) then RT would have been turned off in all games, and they wouldn't have run at otherwise maxed out settings, and 4K. In the end it's a matter of consistency. All RT on, or all RT off. Not pick'n'mix based on which AIB performs better.

HUB has done some dumb stuff. Like I think one of the 4090 reviews they did used a 5800X3D as the CPU, which isn't the fastest choice, and the 4090 can be CPU limited in a lot of games. Probably would have made more sense to use a 13900K to really see the GPU scaling. But overall I don't think they're really bad. Mostly I'd just like them to show their test runs so I could actually compare.

How can a selection of 30-50+ games with all the popular and AAA titles tested be chosen specifically to favor AMD?

Because where a large proportion of those games support RT, they choose to only turn it on in the games where the hit to AMD isn't that big compared to Nvidia. They also test some of the games multiple times at different settings but this duplication of testing more heavily favours AMD and seems to have little logic behind it.

Because where a large proportion of those games support RT, they choose to only turn it on in the games where the hit to AMD isn't that big compared to Nvidia. They also test some of the games multiple times at different settings but this duplication of testing more heavily favours AMD and seems to have little logic behind it.

Like Cyberpunk, Dying Light 2, Metro and Control? Are those titles that favor AMD’s lesser RT? They tested one title, MW 2, at two settings profiles. I would guess it’s because of how popular it is as well as the ultra settings still falling a bit short of a truly high framerate experience. Oh wait, he also tested Fortnite with and without the Nanite/Lumen features. So yeah, I really don't see this cherry picking. Watching HUB content doesn’t paint the disparity in RT performance between the vendors any differently from what we all already know.

HUB has done some dumb stuff. Like I think one of the 4090 reviews they did used a 5800X3D as the CPU, which isn't the fastest choice, and the 4090 can be CPU limited in a lot of games. Probably would have made more sense to use a 13900K to really see the GPU scaling. But overall I don't think they're really bad. Mostly I'd just like them to show their test runs so I could actually compare.

It takes time to migrate all your benches to a new CPU because you have to retest all GPUs you plan to have in your comparison.

Like Cyberpunk, Dying Light 2, Metro and Control? Are those titles that favor AMD’s lesser RT?

You've just perfectly illustrated my point. No, those titles don't favour AMD's lesser RT, which is exactly why, despite being included in the bench, they are tested without RT enabled:

Metro Exodus Enhanced runs fine on a Series S with RT enabled yet here he is testing the non RT version on a 7900XTX in a head to head with Nvidia. Can't imagine why...

They tested one title, MW 2, at two settings profiles. I would guess it’s because of how popular it is as well as the ultra settings still falling a bit short of a truly high framerate experience.

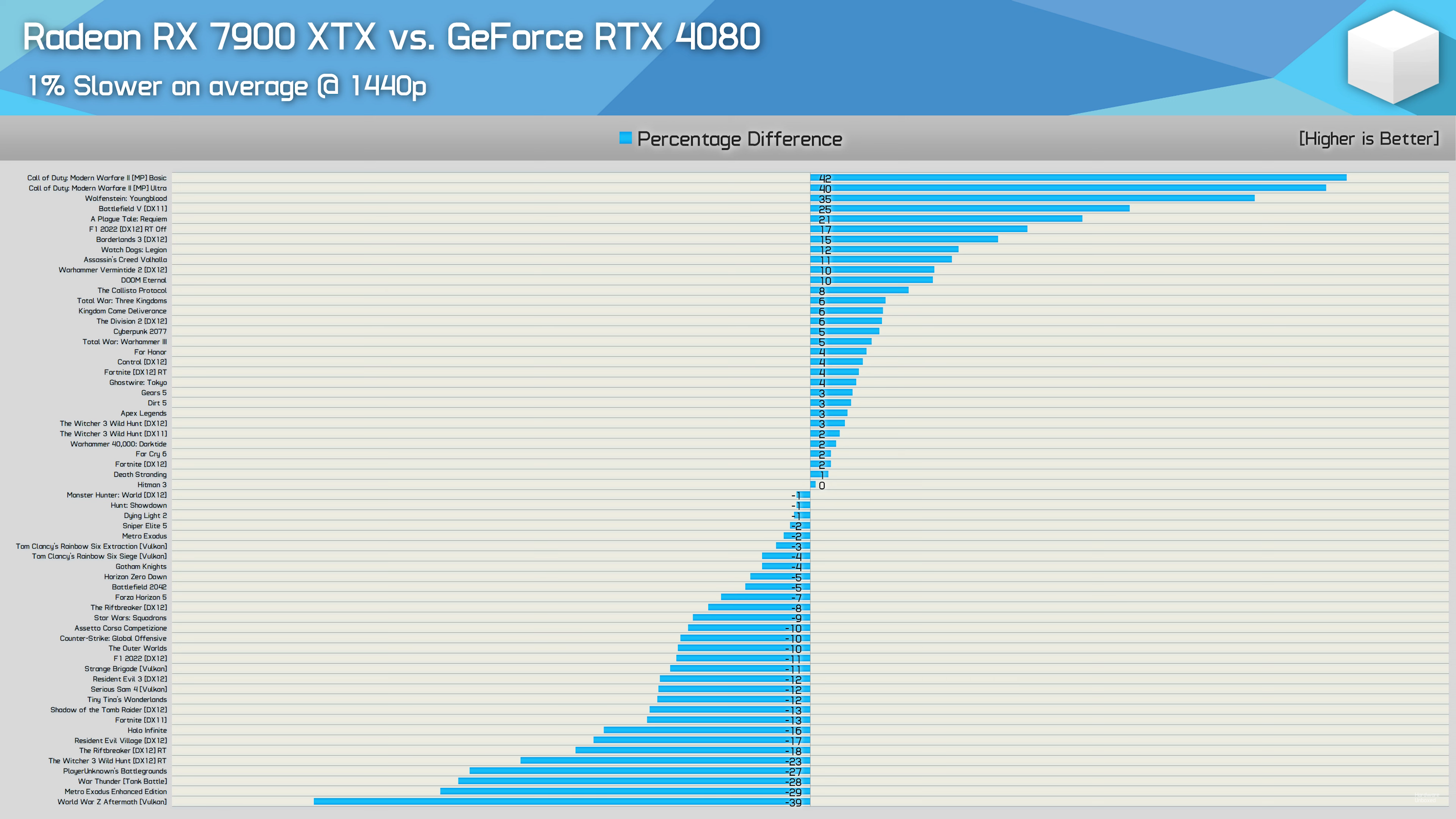

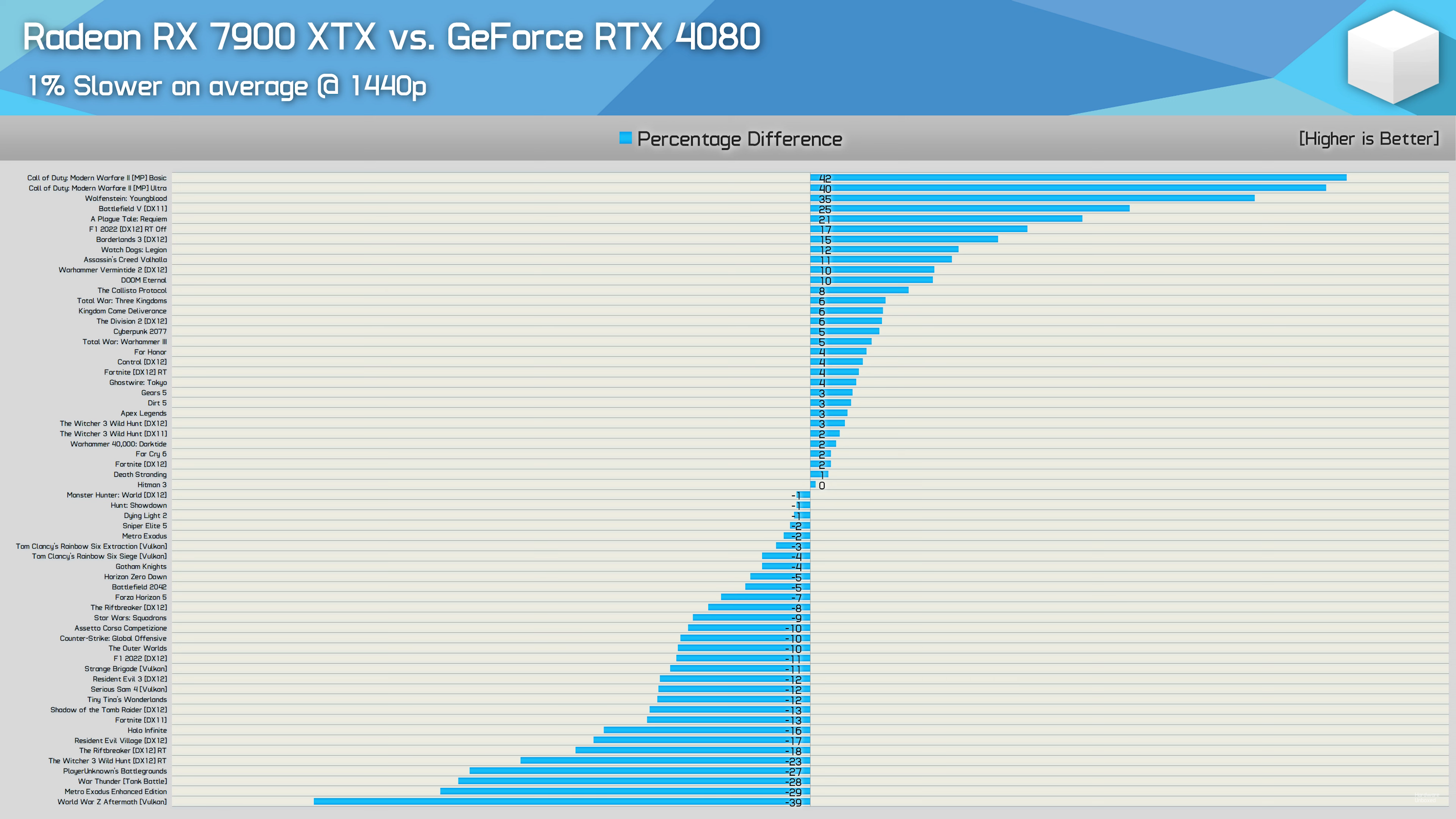

I'm sure that's the reasoning he'd give as well. And the fact that it's a massive performance outlier for AMD which he's now counting twice in an average performance score which just happens to beat the Nvidia GPU by the tiniest of margins (at 4K) has nothing at all to do with it.

Metro Exodus Enhanced runs fine on a Series S with RT enabled yet here he is testing the non RT version on a 7900XTX in a head to head with Nvidia.

HUB included both original Metro and Metro EE. RT was disabled in Control, Cyberpunk and DL2 though.

Obviously they’re not serious about evaluating RT performance or IQ impact when they enable it in Riftbreaker but not the games where it actually matters.

HUB has done some dumb stuff. Like I think one of the 4090 reviews they did used a 5800X3D as the CPU, which isn't the fastest choice, and the 4090 can be CPU limited in a lot of games. Probably would have made more sense to use a 13900K to really see the GPU scaling.

The 13900K launched after the 4090.

They have used the 5800X3D in more recent reviews too but I wouldn't call that dumb. Most reviewers don't update their GPU test system instantly after a new king of the hill gaming CPU is released.

You've just perfectly illustrated my point. No, those titles don't favour AMD's lesser RT, which is exactly why, despite being included in the bench, they are tested without RT enabled:

Metro Exodus Enhanced runs fine on a Series S with RT enabled yet here he is testing the non RT version on a 7900XTX in a head to head with Nvidia. Can't imagine why...

And the fact that this is all out in the open is fine -- it's exactly the point that @Scott_Arm is making.

If you want reviews that focus more on visual fidelity and less on "hardcore/performance gaming", then there are plenty of alternative review sources to use.

For example, look at any of HUB's monitor reviews. They put far more importance on refresh rate and latency performance than they do on the colour quality or calibration of a display panel. If you're a creative professional or a digital artist, that angle may not be particularly helpful. You can appreciate the other aspects in which HUB tests monitors, and you can always read alternative reviews on display quality elsewhere.

I'm sure that's the reasoning he'd give as well. And the fact that it's a massive performance outlier for AMD which he's now counting twice in an average performance score which just happens to beat the Nvidia GPU by the tiniest of margins (at 4K) has nothing at all to do with it.

More than two-thirds of the games tested feature single-digit differences between the two cards. Furthermore, due to how an average works, COD's contribution to the average is minuscule. I really don't see a big deal here.
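To put a rough number on how much one outlier can move a suite-wide average, here is a minimal sketch. Everything in it is an assumption for illustration: the 48 tied games, the 40% outlier lead, and the use of a geometric mean of per-game ratios are hypothetical and are not taken from HUB's data or stated methodology.

```python
from statistics import geometric_mean


def average_lead(ratios):
    """Geometric mean of per-game ratios (card A fps / card B fps)."""
    return geometric_mean(ratios)


# Hypothetical suite: 48 games where both cards tie, plus one outlier
# where card A leads by 40%. These are illustrative numbers only.
ties = [1.0] * 48
outlier = 1.40

once = average_lead(ties + [outlier])            # outlier tested at one setting
twice = average_lead(ties + [outlier, outlier])  # same game counted at two settings

print(f"outlier counted once:  {100 * (once - 1):.2f}% average lead")
print(f"outlier counted twice: {100 * (twice - 1):.2f}% average lead")
```

Under these assumptions the outlier moves the average by around one percent either way, and counting it twice roughly doubles that contribution. Whether that matters depends on how narrow the final margin between the two cards is.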

HUB included both original Metro and Metro EE. RT was disabled in Control, Cyberpunk and DL2 though.

Obviously they’re not serious about evaluating RT performance or IQ impact when they enable it in Riftbreaker but not the games where it actually matters.

In Riftbreaker you don't have to throw half your performance out the window for the RT effects it has.

Based on your past posts the 'games where it matters' are those where it hurts the performance most. For many, including HUB, in most cases that kind of sacrifice is not acceptable for the added effects it brings.

I don't understand how this simple concept is so hard for some to grasp:

It's not about RT or no RT, it's about the performance penalty it brings vs the gains in IQ.

For some it may be just fine to get accurate reflections while losing half your performance, but for others it's not. Everyone has their own scales for it.

People need to accept the fact that their own opinions, contrary to popular belief, are in fact not universal truths.

In Riftbreaker you don't have to throw half your performance out the window for the RT effects it has.

Based on your past posts the 'games where it matters' are those where it hurts the performance most. For many, including HUB, in most cases that kind of sacrifice is not acceptable for the added effects it brings.

I don't understand how this simple concept is so hard for some to grasp:

It's not about RT or no RT, it's about the performance penalty it brings vs the gains in IQ.

For some it may be just fine to get accurate reflections while losing half your performance, but for others it's not. Everyone has their own scales for it.

People need to accept the fact that their own opinions, contrary to popular belief, are in fact not universal truths.

Exactly.

Simple economics and the concept of marginal utility.

You've just perfectly illustrated my point. No, those titles don't favour AMD's lesser RT, which is exactly why, despite being included in the bench, they are tested without RT enabled:

Metro Exodus Enhanced runs fine on a Series S with RT enabled yet here he is testing the non RT version on a 7900XTX in a head to head with Nvidia. Can't imagine why...

I'm sure that's the reasoning he'd give as well. And the fact that it's a massive performance outlier for AMD which he's now counting twice in an average performance score which just happens to beat the Nvidia GPU by the tiniest of margins (at 4K) has nothing at all to do with it.

He tested Metro EE as well. It’s right there at the bottom, heavily weighted in Nvidia's favor. He tested the other titles I mentioned in the 7900 launch video. This witch hunt is really not very well thought out. He included World War Z, which is a disaster for AMD, with margins virtually equivalent to COD's, only in Nvidia’s favor.

In Riftbreaker you don't have to throw half your performance out the window for the RT effects it has.

Does that explain their decision to test Witcher 3 RT? It was universally panned as overly expensive.

It's not about RT or no RT, it's about the performance penalty it brings vs the gains in IQ.

HUB’s game selection doesn’t actually fit those criteria though. They clearly aren’t choosing RT tests based on performance hit, popularity or IQ benefit.

But let’s play devil’s advocate and assume HUB is philosophically against settings that cost a lot of performance for limited IQ benefit. Where is the same concern for ultra settings or 4K resolution? The idea that they’re making some sort of judgement call based on ROI doesn’t really hold up.

It would be a different story if they were doing subjective best playable settings like HardOCP back in the day and were actually thoughtful about all IQ settings not just RT.

Henry swagger

When is the architecture products section getting opened again, mods? The place is getting boring without hardware discussion.

Does that explain their decision to test Witcher 3 RT? It was universally panned as overly expensive.

No, it does not. Maybe they like to test high-profile new games at the lowest and highest settings. When the game isn't hot anymore they settle on one setting.

When is the architecture products section getting opened again, mods? The place is getting boring without hardware discussion.

Start an architecture thread somewhere else, maybe they won't notice.
