
Unreal Engine 5, [UE5 Developer Availability 2022-04-05]

- Thread starter mpg1

- Start date

Deleted member 2197

Guest

I did not read the papers myself, but if what you said is correct, wouldn't that put hardware that does not have primitive shaders at a disadvantage? From my understanding, only certain AMD GPUs and APUs have the hardware for primitive shaders. What about Nvidia's RTX GPUs, the GTX 16 series and, I'm guessing, the Xbox Series APUs?

The latest incarnation of primitive shaders is another term for mesh shaders.

Deleted member 86764

Guest

Good optimizations, or PC lacking optimizations? The engine is in preview, not final. According to the documentation, the console target is 1080p, with TSR then used to upscale to 4K. The PC build could also be enabling more features than the console versions use.

I guess detail is proportional to resolution. If they're planning on limiting the polygons to one per pixel, then increased resolution would be increased polygon counts.

DegustatoR

Legend

Excessive geometry is the new ray traced reflections in your face as a "cheap" effect to wow casuals. I hope artists don't go crazy, this makes no sense

I hope they do actually. Not necessarily through Nanite approach though.

The latest incarnation of primitive shaders is another term for mesh shaders.

Not sure that this is correct.

Note that PS5 doesn't support mesh shaders but does support primitive shaders.

Primitive shaders do run on RDNA2 but they are not the same thing as mesh shaders.

AFAIU primitive shaders are about geometry culling while mesh shaders are about geometry processing.
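To make "geometry culling" a bit more concrete, here is a minimal sketch of the kind of per-triangle test a culling stage might run before rasterization. It is only an illustration, not AMD's primitive shader implementation or anything from UE5: the function names are made up, counter-clockwise winding is assumed to mean front-facing, and pixel centers are assumed to sit at half-integer coordinates.

```python
import math

def signed_area2(a, b, c):
    """Twice the signed area of a screen-space triangle.
    Positive means counter-clockwise, which this sketch treats as front-facing."""
    return (b[0] - a[0]) * (c[1] - a[1]) - (c[0] - a[0]) * (b[1] - a[1])

def misses_all_pixel_centers(a, b, c):
    """True if the triangle's bounding box contains no pixel center
    (centers assumed at x + 0.5, y + 0.5), so it cannot produce any pixels."""
    xs = (a[0], b[0], c[0])
    ys = (a[1], b[1], c[1])
    no_column = math.ceil(min(xs) - 0.5) > math.floor(max(xs) - 0.5)
    no_row = math.ceil(min(ys) - 0.5) > math.floor(max(ys) - 0.5)
    return no_column or no_row

def cull(triangles):
    """Return only the triangles that survive both tests."""
    survivors = []
    for a, b, c in triangles:
        if signed_area2(a, b, c) <= 0:
            continue  # back-facing or degenerate
        if misses_all_pixel_centers(a, b, c):
            continue  # too small to hit a sample
        survivors.append((a, b, c))
    return survivors

triangles = [
    ((0, 0), (4, 0), (0, 4)),              # front-facing, large
    ((0, 0), (0, 4), (4, 0)),              # same triangle, reversed winding
    ((0.6, 0.6), (0.9, 0.6), (0.6, 0.9)),  # sub-pixel sliver
]
print(cull(triangles))
```

With very dense meshes, a large share of triangles fall into one of these two buckets, which is why doing this work programmatically before the rasterizer matters.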

Excessive geometry is the new ray traced reflections in your face as a "cheap" effect to wow casuals. I hope artists don't go crazy, this makes no sense

It's really a testament to how effective their compute shader setup is at culling triangles. Something that the hardware path cannot do.

This is really important for sure, to be able to do something like this, even on machines that don't support mesh shaders.

I guess detail is proportional to resolution. If they're planning on limiting the polygons to one per pixel, then increased resolution would be increased polygon counts.

That's exactly what they are trying to do. Another side effect of this is that the required SSD bandwidth is likely tied directly to the resolution used. 4K probably needs 4x the data from the SSD compared to 1080p.
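The 4x figure follows from the pixel counts if you assume roughly one polygon per pixel, so that the geometry (and streaming) budget scales linearly with the number of pixels. A quick sketch of that arithmetic; the resolution table and function names are mine, and the linear-scaling assumption is the thread's guess, not a measured number:

```python
# Common output resolutions (width, height).
RESOLUTIONS = {
    "1080p": (1920, 1080),
    "1440p": (2560, 1440),
    "4K": (3840, 2160),
}

def pixel_count(name):
    """Total pixels for a named resolution."""
    width, height = RESOLUTIONS[name]
    return width * height

def relative_budget(target, baseline="1080p"):
    """How many times more pixels (and, at one polygon per pixel,
    how many times more visible polygons) the target has than the baseline."""
    return pixel_count(target) / pixel_count(baseline)

for name in RESOLUTIONS:
    print(name, pixel_count(name), relative_budget(name))
```

Whether the SSD traffic really scales this cleanly depends on caching and on how much geometry is shared between frames, so treat the ratio as an upper-level estimate only.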

Deleted member 2197

Guest

Not sure that this is correct.

Note that PS5 doesn't support mesh shaders but does support primitive shaders.

Primitive shaders do run on RDNA2 but they are not the same thing as mesh shaders.

AFAIU primitive shaders are about geometry culling while mesh shaders are about geometry processing.

True. I did not mean they were synonymous, only that they have some similarities. Based on this read, mesh shaders seem to offer more flexibility regarding programmability.

Primitive Shader: AMD's Patent Deep Dive | ResetEra

So will PS5 also support mesh shader?

There are several noticeable differences between the two. With the primitive shader setup AMD described, you still have to "assemble" the input data of a predefined format (vertices and vertex indices) sequentially, whereas with a mesh shader the input is completely user defined, and the launching of the mesh shader is not bound by the input assembly stage. It's more like a compute shader that generates data to be consumed by the rasterizer. Also, with a primitive shader the tessellation stage is still optionally present, whereas for a mesh shader tessellation simply doesn't fit.

There are also overlaps between the two. Primitive culling is done programmatically and can be performed in a less restricted order; LDS is now (optionally) visible in user shader code, whereas in the traditional vertex shader based pipeline, each thread is unaware of the Local Data Store's existence.

...

Here's my take: To support mesh shaders in their purest form, you'll need the GPU's command processor to be able to launch shaders the mesh shader way. If the command processor somehow isn't able to do so, then as long as the API exposes a good level of hardware detail, developers should still be able to get most of the mesh shader's advantages. If it's like AMD's approach with in-driver shader transformation, then the advantage will be limited compared to full mesh shader support, as programmability will be greatly sacrificed.

I'm getting really noticeable artifacting around the character when in motion. Looks like ghosting from the TAA or motion blur implementation.

Has anyone been able to package the demo as a standalone executable so that it’s runnable outside the editor? I’m getting an error that the SDK isn’t installed properly whenever I try.

That propagation update delay is going to be noticeable all generation.

it's really a testament to how effective their compute shader setup is at culling triangles. Something that the hardware path cannot do.

It doesn't seem to be very effective at culling: culling time is the same with both r.NaniteComputeRasterization and r.NanitePrimShaderRasterization. Rather, the compute rasterization path is way faster at rasterizing triangles smaller than 4 pixels with r.NaniteComputeRasterization, and roughly equal to HW rasterization at r.Nanite.MaxPixelsPerEdge=4. It then starts losing to r.NanitePrimShaderRasterization at larger triangles, which is supposedly the hardware rasterization path.

That's all at a 2560x720 rendering resolution (slightly below 1080p in pixel count). The default setting for this resolution is r.Nanite.MaxPixelsPerEdge=2, which would translate into 8 pixels per edge in 4K, so the hardware rasterizer can still beat it at that resolution with such settings. I'm not sure whether the classic rasterizer path is optimized in UE5 to the same extent as the compute rasterizer. I recently tried NVIDIA's mesh shaders Asteroids demo (without some of the fancy optimizations), and it was capable of rasterizing 300 million polygons of overlapping asteroid meshes at 4K at 30 FPS. In wireframe mode there were a few meshes with holes, but otherwise the screen was mostly opaque (close to per-pixel density).
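The measurement above suggests a crossover point: small triangles favor the compute rasterizer, larger ones favor the hardware path. A rough sketch of what a size-based split could look like is below. The 4-pixel threshold is just the crossover reported in this post, the function names are invented for the example, and this is not a description of how Nanite actually makes the decision.

```python
import math

def longest_edge_px(a, b, c):
    """Length in pixels of the longest edge of a screen-space triangle."""
    return max(math.dist(a, b), math.dist(b, c), math.dist(c, a))

def pick_rasterizer(triangle, threshold_px=4.0):
    """Route small triangles to a compute rasterizer and the rest to hardware.
    threshold_px is an assumed crossover taken from the numbers in the post."""
    if longest_edge_px(*triangle) < threshold_px:
        return "compute"
    return "hardware"

def pixel_count(width, height):
    return width * height

small = ((0, 0), (1, 0), (0, 1))
large = ((0, 0), (3, 0), (0, 4))
print(pick_rasterizer(small), pick_rasterizer(large))

# Sanity check on the "slightly below 1080p" remark.
print(pixel_count(2560, 720), pixel_count(1920, 1080))
```

The interesting part is that the best threshold is an empirical number: it depends on how well each path is optimized on a given GPU, which is exactly what the cvar comparison is probing.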

Frenetic Pony

Veteran

That propagation update delay is going to be noticeable all generation.

It so annoys me I'm already trying to think of clever ways around it

Course in this case I haven't even figured out how Lumen does its updating, so...

I'm getting really noticeable artifacting around the character when in motion. Looks like ghosting from the TAA or motion blur implementation.

Has anyone been able to package the demo as a standalone executable so that it’s runnable outside the editor? I’m getting an error that the SDK isn’t installed properly whenever I try.

As a guess this could be the screenspace GI they use for filling in holes and detail. The same can happen in Days Gone when that's enabled. Little pools of darkness going along with moving objects maybe, or am I totally off?

I'm getting really noticeable artifacting around the character when in motion. Looks like ghosting from the TAA or motion blur implementation.

It's the Gen5TAA aka Temporal Super Resolution. If you switch to old TAA (r.TemporalAA.Algorithm 0) the ghosting stops.

It so annoys me I'm already trying to think of clever ways around it

I guess for those particles it's all screenspace, so to reduce lag you'd want to prefer spatial over temporal sampling.

AFAIK, this is from the Path of Exile dev, and he generally tries to do so:

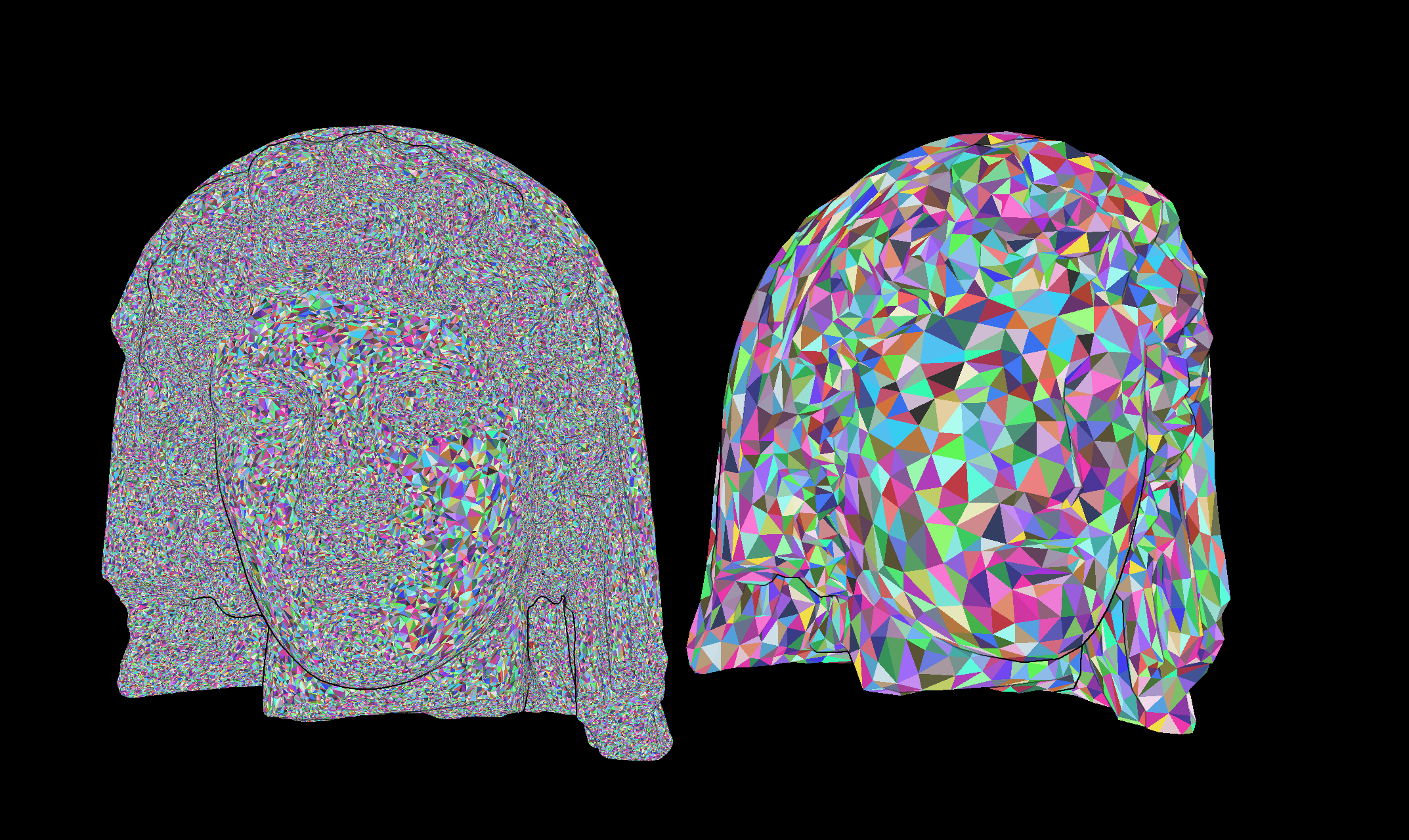

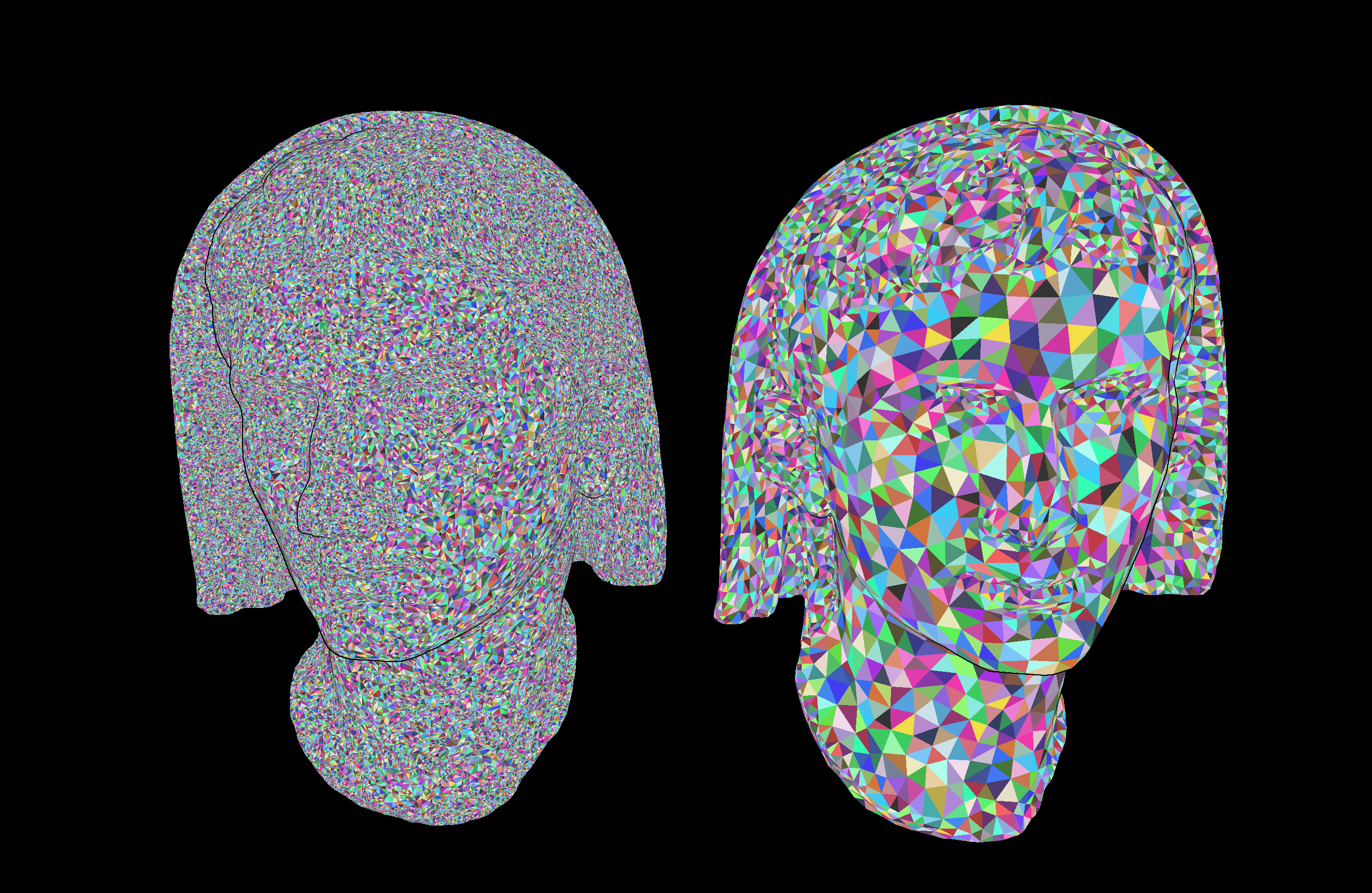

Not sure if this is of interest to anyone here or has been brought up before but I think the Nanite renderer uses a visibility buffer approach to deferred rendering ...
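For anyone unfamiliar with the term, the general idea of a visibility buffer is that the geometry pass writes only "which triangle is visible here" per pixel, and materials are evaluated afterwards from that ID. Below is a toy sketch of the concept, not Nanite's implementation: the data layout, the one-depth-per-triangle shortcut and the one-letter "materials" are all assumptions made to keep it short.

```python
def edge(a, b, p):
    """Edge function: sign tells which side of edge a->b the point p is on."""
    return (b[0] - a[0]) * (p[1] - a[1]) - (b[1] - a[1]) * (p[0] - a[0])

def rasterize_visibility(triangles, width, height):
    """Geometry pass: store only the ID of the nearest triangle per pixel.
    Each triangle is a dict with 'verts' (three (x, y) tuples) and a single
    'depth' value (smaller is closer), a simplification for this sketch."""
    depth = [[float("inf")] * width for _ in range(height)]
    vis = [[None] * width for _ in range(height)]
    for tri_id, tri in enumerate(triangles):
        a, b, c = tri["verts"]
        for y in range(height):
            for x in range(width):
                p = (x + 0.5, y + 0.5)  # sample at the pixel center
                w0, w1, w2 = edge(a, b, p), edge(b, c, p), edge(c, a, p)
                inside = (w0 >= 0 and w1 >= 0 and w2 >= 0) or \
                         (w0 <= 0 and w1 <= 0 and w2 <= 0)
                if inside and tri["depth"] < depth[y][x]:
                    depth[y][x] = tri["depth"]
                    vis[y][x] = tri_id
    return vis

def shade(vis, triangles, background="."):
    """Shading pass: look the triangle up by ID and 'evaluate' its material."""
    return ["".join(background if t is None else triangles[t]["material"]
                    for t in row) for row in vis]

scene = [
    {"verts": ((0, 0), (8, 0), (0, 8)), "depth": 1.0, "material": "A"},
    {"verts": ((0, 0), (2.2, 0), (0, 2.2)), "depth": 0.5, "material": "B"},
]
for row in shade(rasterize_visibility(scene, 4, 4), scene):
    print(row)
```

The appeal for very dense geometry is that the expensive material work happens once per visible pixel, no matter how many tiny triangles were rasterized to get there.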

This engine does make me wish that CIG had gone with Unreal instead of CryEngine. This stuff would have worked great in space, giving a ton of detail to the ships, which is mostly what you see out there, and the ability to get really detailed on the planets too.

Oh well

I await the first games using this

cheapchips

Veteran

This engine does make me wish that CIG had gone with Unreal instead of CryEngine. This stuff would have worked great in space, giving a ton of detail to the ships, which is mostly what you see out there, and the ability to get really detailed on the planets too.

SC is all about wishes. ;-)

It does make me wonder how you'd build planetary tech to make the most of Nanite though.
