John Norum

Newcomer

That text, however, focuses mainly on hardware compression support and not necessarily on reduced latency, although that may of course come eventually.

I wouldn’t be surprised if we start seeing SSD connectors on GPUs again in the near future, though, as that could solve most of this for PC.

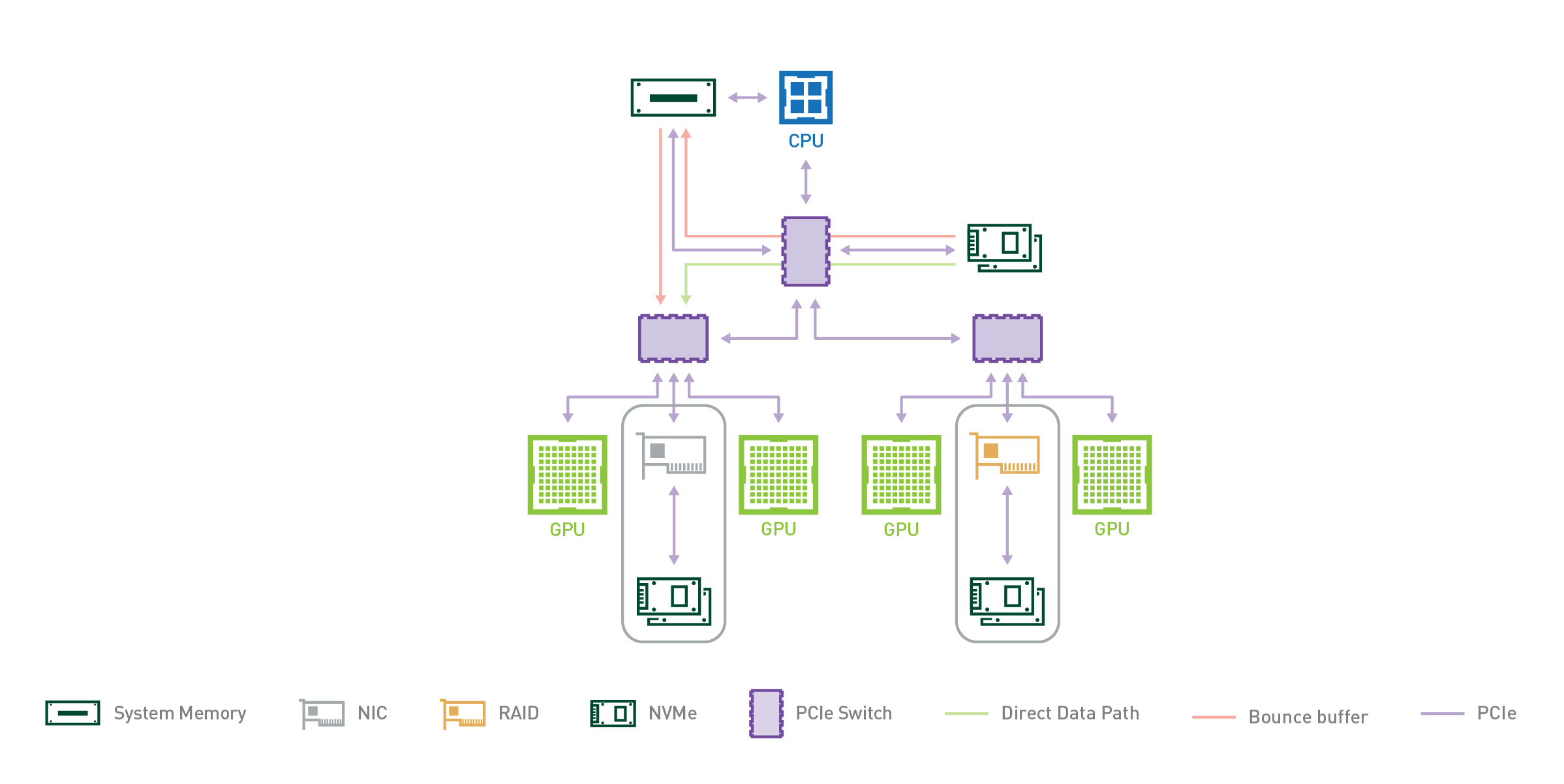

Just as GPUDirect RDMA (Remote Direct Memory Access) improved bandwidth and latency when moving data directly between a network interface card (NIC) and GPU memory, a new technology called GPUDirect Storage enables a direct data path between local or remote storage, like NVMe or NVMe over Fabrics (NVMe-oF), and GPU memory.

The bandwidth from SysMem, from many local drives and from many NICs can be combined to achieve an upper bandwidth limit of nearly 200 GB/s in a DGX-2.

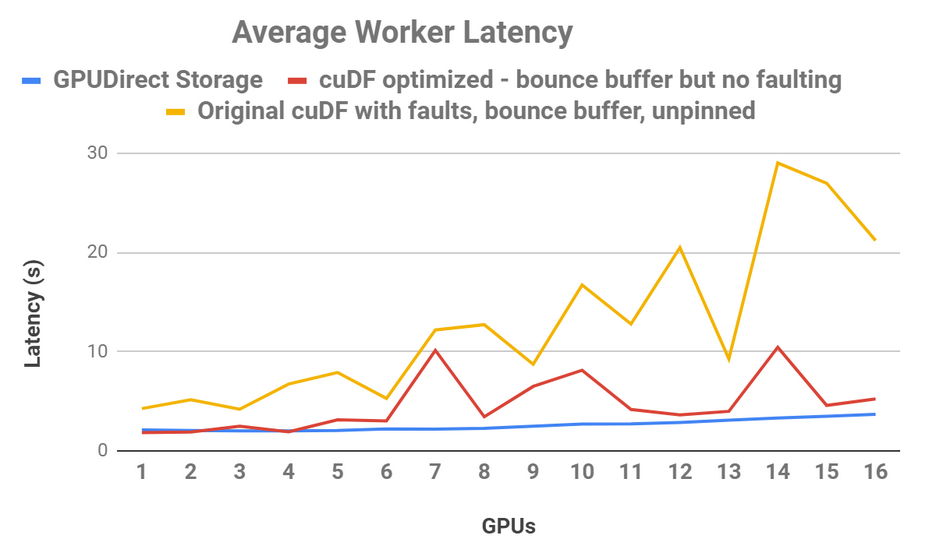

We demonstrate that direct memory access from storage to GPU relieves the CPU I/O bottleneck and enables increased I/O bandwidth and capacity. Further, we provide initial performance metrics presented at GTC19 in San Jose, based on the RAPIDS project’s GPU-accelerated CSV reader housed within the cuDF library. Lastly, we will provide suggestions on key applications that can make use of faster and increased bandwidth, lower latency, and increased capacity between storage and GPUs.

https://devblogs.nvidia.com/gpudirect-storage/