I know there aren't many benchmarks out there yet since there's no standard build or anything, but has anyone seen any perf data on this city demo for 5800X vs 5800X3D? Really interested to see how much effect the cache has on UE5 or whether it's just single core clock speed all the way.

Unreal Engine 5, [UE5 Developer Availability 2022-04-05]

- Thread starter mpg1

Silent_Buddha

Legend

> And check out that PS5 texture resolution compared to the others, isn't that linked to internal resolution?

If you look closely, on PC there are 4x sidewalk "squares" for each 1x PS5 sidewalk square. So they aren't equivalent. The PC and PS5 are doing something quite different in that area. If you instead look at the cement patch on the right side of the screen where it appears they are using the same resources, the PS5 texture detail appears to be somewhere between the 50% and 100% TSR PC sample.

Regards,

SB

> As based on what I am seeing on PC, PS5 VSM quality still lines up below what I am finding there even when pumping the internal pre-TSR resolution down quite a bit. Either implying we are quite sub-1080p on PS5 (under 30% screen percentage would be very unlikely) or perhaps it is not using Shadow Quality 3 on console (more likely)?

It's certainly possible it is using some custom setting on PS5... my memory is that it was -1.5 but honestly so much stuff gets tweaked in the last few weeks it very well could have been changed. The texture/sidewalk thing is a bit weird... I'm not sure why that would have changed, but maybe there's just some details of generating the city for the sample that made it different.

Apparent shadow resolution is always heavily based on the light angle as well, and the Matrix demo has a very low sun (lower than you could ever really get away with with conventional shadow maps to be honest). That said if this is the same geometry, light angle, shadow resolution and render resolution I would expect them to match; so one of those 4 must be different somewhere
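For anyone trying to put numbers on the screen percentage figures discussed above, here is a rough sketch. It assumes the percentage scales each axis linearly (so 50% halves both width and height) and assumes a 3840x2160 output target, which the thread does not confirm for PS5. `internal_resolution` is a made-up helper name, not an engine API.

```python
def internal_resolution(output_w, output_h, screen_percentage):
    """Return the assumed pre-upscale resolution for a given screen percentage.

    Assumption: the percentage is applied per axis, so the pixel count
    scales with the square of the percentage.
    """
    scale = screen_percentage / 100.0
    return round(output_w * scale), round(output_h * scale)


if __name__ == "__main__":
    # Assumed 4K output target; swap in your own output resolution.
    for pct in (100, 50, 30):
        w, h = internal_resolution(3840, 2160, pct)
        print(f"{pct}% of 3840x2160 -> {w}x{h}")
```

Under these assumptions 50% lands exactly on 1920x1080, so anything "quite sub-1080p" would mean a percentage well below 50% of a 4K target.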

Frenetic Pony

Veteran

With the entire thing so CPU bound I wonder if there are any plans at all to change UE5 to something even close to modern CPU scheduling (you know, like a decade after it should've been done). I know there's a slightly hacky parallelization of the physics and new animation system. But from the city demo that really doesn't look to have solved the problem.

> I was contemplating whether to swap my 5950x for a 5800x3D. Seems like a no brainer if even the biggest baddest engine can’t use more than 6 cores in 2022.

Seems like a mistake to me. Are you only gaming in 1080p, or 720p? If so, you're already getting extremely high framerates... who cares about the difference between 260fps vs 294fps? At 1440p there may be some improvement, but again nothing that's going to make the experience feel genuinely improved. At 4K there's likely no appreciable difference between most recent CPUs.

On top of that, a 5950x will absolutely chew through shader compilation processes designed to scale with cores at a much faster rate. Not to mention general Windows multi-tasking performance will be better.. and should you consider doing other tasks... your 5950x will undoubtedly be superior at the job.

And after all that... you'd be stupid to swap it out for a CPU which is literally going to be outdated later this year regardless. Now sure, the new CPUs will be on a different socket.. but you'd be better off just holding onto your current setup, and when you're ready make the full jump to a PCIe5 based next-gen AMD CPU.

> Seems like a mistake to me. Are you only gaming in 1080p, or 720p? If so, you're already getting extremely high framerates... who cares about the difference between 260fps vs 294fps? At 1440p there may be some improvement, but again nothing that's going to make the experience feel genuinely improved. At 4K there's likely no appreciable difference between most recent CPUs.

I game at 3840x1600 144Hz. It’s true that most games show no CPU difference at those resolutions on today’s GPUs. But I’m not thinking about today’s GPUs. Also, there are some games that already show a big difference, e.g. GTA V, StarCraft.

The 1080p and 1440p results are good indicators of what next generation GPUs will put out at 4K. And all indications are that the 5950x will bottleneck those cards much faster than a 5800x3D.
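The reasoning here can be sketched with a simple bottleneck model: the delivered frame rate is roughly the lower of what the CPU and the GPU can each sustain. Everything below is an assumption for illustration. The function name and all fps values are hypothetical placeholders, not benchmark results for any of the CPUs discussed.

```python
def delivered_fps(cpu_fps_cap, gpu_fps_cap):
    """Simplified model: the slower of the two stages sets the frame rate."""
    return min(cpu_fps_cap, gpu_fps_cap)


if __name__ == "__main__":
    # Hypothetical CPU-side caps (roughly what a low-resolution test exposes).
    cpu_caps = {"cpu_a": 120, "cpu_b": 150}
    # Hypothetical GPU-side caps at a high resolution, today vs. a faster future card.
    gpu_caps = {"current_gpu": 90, "future_gpu": 200}

    for gpu_name, gpu_cap in gpu_caps.items():
        for cpu_name, cpu_cap in cpu_caps.items():
            fps = delivered_fps(cpu_cap, gpu_cap)
            print(f"{gpu_name} + {cpu_name}: {fps} fps")
```

With the placeholder numbers, both CPUs look identical while the GPU is the limit, and the gap only appears once the GPU cap rises above the CPU caps.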

> On top of that, a 5950x will absolutely chew through shader compilation processes designed to scale with cores at a much faster rate. Not to mention general Windows multi-tasking performance will be better.. and should you consider doing other tasks... your 5950x will undoubtedly be superior at the job.

8 cores / 16 threads is way more than I need for the general purpose stuff I do on my PC. It’ll be fine.

> And after all that... you'd be stupid to swap it out for a CPU which is literally going to be outdated later this year regardless. Now sure, the new CPUs will be on a different socket.. but you'd be better off just holding onto your current setup, and when you're ready make the full jump to a PCIe5 based next-gen AMD CPU.

Not relevant in my case. I just got this AM4 setup less than a year ago and I keep my platforms for 5+ years. I upgrade at the end of every DDR generation. So I’ll probably be gaming on this same machine with an RTX 6xxxx.

Another option is to just stick with the 5950x until I actually need more CPU grunt and hope to find a 5800x3D on eBay a few years from now.

I guess I'm struggling to understand why you bought a 5950x in the first place then.

And UE4/5 isn't the only engine out there.

> I guess I'm struggling to understand why you bought a 5950x in the first place then.

That’s easy. It was the only thing available. I actually have only one of the CCDs enabled. So I’m basically running a 5800x that boosts to 4.9 GHz.

> And UE4/5 isn't the only engine out there.

Yeah and hopefully other engines make these 12 and 16 core CPUs worthwhile. Not counting on it though.

> With the entire thing so CPU bound I wonder if there are any plans at all to change UE5 to something even close to modern CPU scheduling (you know, like a decade after it should've been done). I know there's a slightly hacky parallelization of the physics and new animation system. But from the city demo that really doesn't look to have solved the problem.

Yeah, those darn lazy (Unreal Engine) devs!

> With the entire thing so CPU bound I wonder if there are any plans at all to change UE5 to something even close to modern CPU scheduling (you know, like a decade after it should've been done). I know there's a slightly hacky parallelization of the physics and new animation system. But from the city demo that really doesn't look to have solved the problem.

What makes you think it's all Unreal 5's fault and not something like poor memory or draw call management by devs?

> What makes you think it's all Unreal 5's fault and not something like poor memory or draw call management by devs?

UE5 does that for you, unless you rewrite the renderer or the underlying game object system.

> What makes you think it's all Unreal 5's fault and not something like poor memory or draw call management by devs?

Well, UE has been notoriously single-thread limited for a long time and the hope was that UE5 would break out of that aging paradigm.

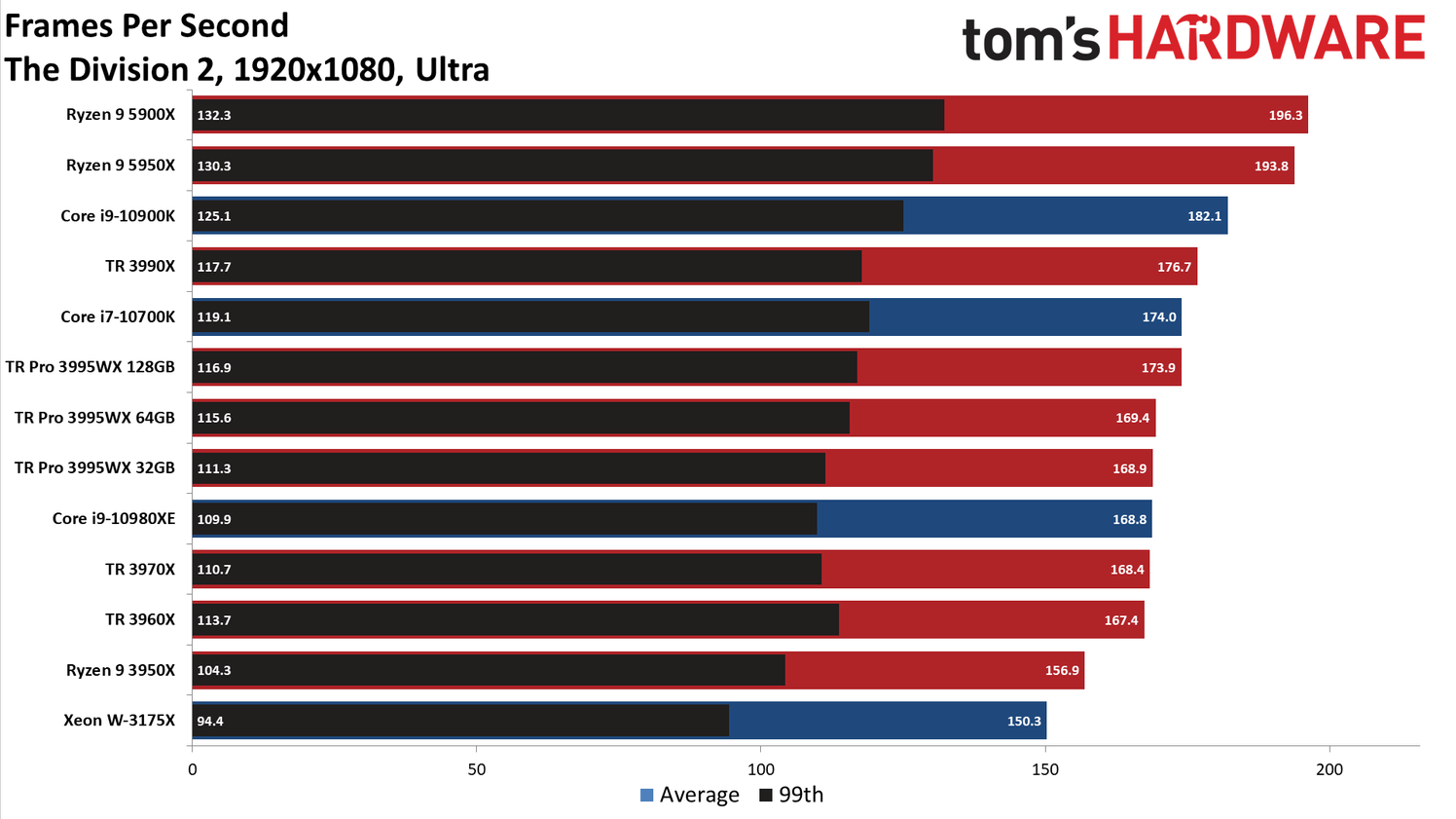

Game engines in general do not scale with the number of cores and threads; there is a reason Threadripper is not the best CPU for gaming. I suppose this generation's devs will design games around an 8-core, 16-thread CPU. If next-generation consoles have 16-core, 32-thread CPUs, this will double.

Single core performance was hurting AMD on the gaming side until they improved it.

Try taking a look at Snowdrop engine in Division 1/2, with significant CPU usage distributed across 8 or more cores during game play. There are many examples of engines that take advantage of multi core workloads.

> Try taking a look at Snowdrop engine in Division 1/2, with significant CPU usage distributed across 8 or more cores during game play. There are many examples of engines that take advantage of multi core workloads.

https://wccftech.com/the-division-2-pc-performance-explored/

https://www.guru3d.com/articles_pages/the_division_2_pc_graphics_performance_benchmark_review,7.html

Maybe that has changed, but everything from 4 cores and 8 threads up isn't far behind CPUs with more cores in performance. Doubling the number of cores or threads doesn't improve performance by much beyond a few fps.

Same here with the Threadripper 3995WX.

https://www.tomshardware.com/reviews/amd-threadripper-pro-3995wx-review/4
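One common way to reason about why doubling cores adds so little is Amdahl's law, which bounds the speedup by the share of frame work that can actually run in parallel. The sketch below is a generic model, not a measurement of any engine in this thread. The parallel fractions in the loop are assumed values chosen only to show the shape of the curve, and `amdahl_speedup` is an illustrative helper name.

```python
def amdahl_speedup(parallel_fraction, cores):
    """Upper bound on speedup when only part of the work scales with cores.

    speedup = 1 / ((1 - p) + p / n)
    """
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if cores < 1:
        raise ValueError("cores must be at least 1")
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / cores)


if __name__ == "__main__":
    # Assumed parallel fractions, purely for illustration.
    for p in (0.5, 0.8):
        for n in (4, 8, 16, 64):
            print(f"p={p}, cores={n}: at most {amdahl_speedup(p, n):.2f}x")
```

If a large share of each frame is stuck on one thread, going from 8 to 16 or 64 cores moves the bound only slightly, which fits the pattern of high-core-count parts gaining just a few fps.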
