I think that VFX_Veteran is arguing that some games run different screen-space shaders for different masked screen regions corresponding to different reflective objects, which *would* be doable (but is unusual or nonexistent for what he's claiming, as far as I know).

Screen-space shaders definitely can be made to care about the things that you're saying they can't. They are, in certain cases; for instance, in some TAA implementations, masking some dynamic objects out of the TAA blending is a viable hack to minimize ghosting.[/QUOTE]

It can be done, but it is costlier. The easiest and fastest AO is the global one, and to some extent the same goes for SSR. I see people talk about every dynamic object casting AO as if that were advanced tech.

Of course, as I said, there are some gotchas here and there. For example, some deferred lighting engines allow artists to flag objects to not receive AO, but that's very specific, and it sure isn't a performance-saving feature. The SSAO still runs for every pixel on screen*, considering every object on it; it's just that flagged objects choose not to apply that AO to themselves in the second rendering pass.

EDIT: Other ways of masking objects out of either receiving or casting AO could be devised as well, but they would make SSAO slower, not faster.
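To make the "flag only changes the second pass" point concrete, here is a toy CPU-side sketch in Python. It is not any engine's actual code: `ssao_pass`, `lighting_pass`, the 3x3 depth buffer and the crude "closer neighbour counts as an occluder" test are all made up for illustration. The only thing it is meant to show is that the AO pass does the same amount of work whether or not anything is flagged.

```python
# Toy model only: names, buffers and the occlusion test are hypothetical.

def ssao_pass(depth, radius=1):
    """Compute a crude AO term for EVERY pixel. Returns (ao_buffer, samples_taken)."""
    h, w = len(depth), len(depth[0])
    ao = [[1.0] * w for _ in range(h)]
    samples = 0
    for y in range(h):
        for x in range(w):
            occluders = 0
            taps = 0
            for dy in range(-radius, radius + 1):
                for dx in range(-radius, radius + 1):
                    if dx == 0 and dy == 0:
                        continue
                    ny, nx = y + dy, x + dx
                    if 0 <= ny < h and 0 <= nx < w:
                        taps += 1
                        samples += 1
                        # A neighbour closer to the camera counts as an occluder.
                        if depth[ny][nx] < depth[y][x]:
                            occluders += 1
            ao[y][x] = 1.0 - occluders / taps if taps else 1.0
    return ao, samples


def lighting_pass(albedo, ao, no_ao_flag):
    """Second pass: flagged pixels just ignore the AO that was already computed."""
    h, w = len(albedo), len(albedo[0])
    return [[albedo[y][x] * (1.0 if no_ao_flag[y][x] else ao[y][x])
             for x in range(w)] for y in range(h)]


depth = [[1.0, 1.0, 1.0],
         [1.0, 0.5, 1.0],
         [1.0, 1.0, 1.0]]  # one raised pixel in the middle
albedo = [[1.0] * 3 for _ in range(3)]
flagged = [[True] * 3 for _ in range(3)]     # every object opts out of AO
unflagged = [[False] * 3 for _ in range(3)]  # nothing opts out

ao, samples = ssao_pass(depth)               # cost is paid here, flags or not
lit_flagged = lighting_pass(albedo, ao, flagged)
lit_unflagged = lighting_pass(albedo, ao, unflagged)
```

Note that `ssao_pass` never even sees the flags, so `samples` is identical in both cases; the flag only decides whether the result gets multiplied in afterwards.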

SSR is a bit trickier, because there are single-surface-orientation implementations out there, which simplify the ray calculation and save you from reading the normal buffer.

But for the robust implementations that support multiple surfaces with varying orientations and positions simultaneously, there is literally nothing impressive about "applying it liberally to many objects on-screen". Just like SSAO, those types of SSR algos run for every pixel on screen, regardless of whether the pixel ends up using the result. For a consistent framerate, the algo has to be optimised for the worst case, and any free-camera game can end up with the screen completely covered by a reflective material, even if the artists only put a single small puddle in their level. At that point masking things in or out just adds extra complexity and makes the shader run slower. With a shader like that in place, the only thing keeping artists from making every single surface a damn mirror is their artistic vision. Framerate is unaffected. This applies to QB, and it also applies to the PS4 launch titles KKSF and InfamousSS.
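The worst-case argument can be put as a back-of-the-envelope cost model. This is only a sketch of the reasoning, with made-up numbers: `MARCH_COST` and `MASK_COST` are arbitrary units I picked for illustration, not measurements from any game, and real GPUs add effects (branch divergence, early-outs) that this ignores.

```python
# Hypothetical per-pixel costs in arbitrary units; not measured from anything.
MARCH_COST = 16  # ray-marching the depth buffer for one pixel
MASK_COST = 1    # reading and testing a "is this pixel reflective?" mask


def ssr_cost_unmasked(total_pixels):
    """Every pixel is marched, no questions asked."""
    return total_pixels * MARCH_COST


def ssr_cost_masked(total_pixels, reflective_pixels):
    """Every pixel pays for the mask test; only reflective ones are marched."""
    return total_pixels * MASK_COST + reflective_pixels * MARCH_COST


pixels = 1280 * 720
flat = ssr_cost_unmasked(pixels)
puddle = ssr_cost_masked(pixels, pixels // 100)  # 1% of the screen is reflective
worst = ssr_cost_masked(pixels, pixels)          # camera pushed right into the puddle

print(flat, puddle, worst)
```

Under these assumptions the masked version is cheaper while the puddle is small, but it is the more expensive one once the puddle fills the screen, and the worst case is what the frame budget has to be sized for.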

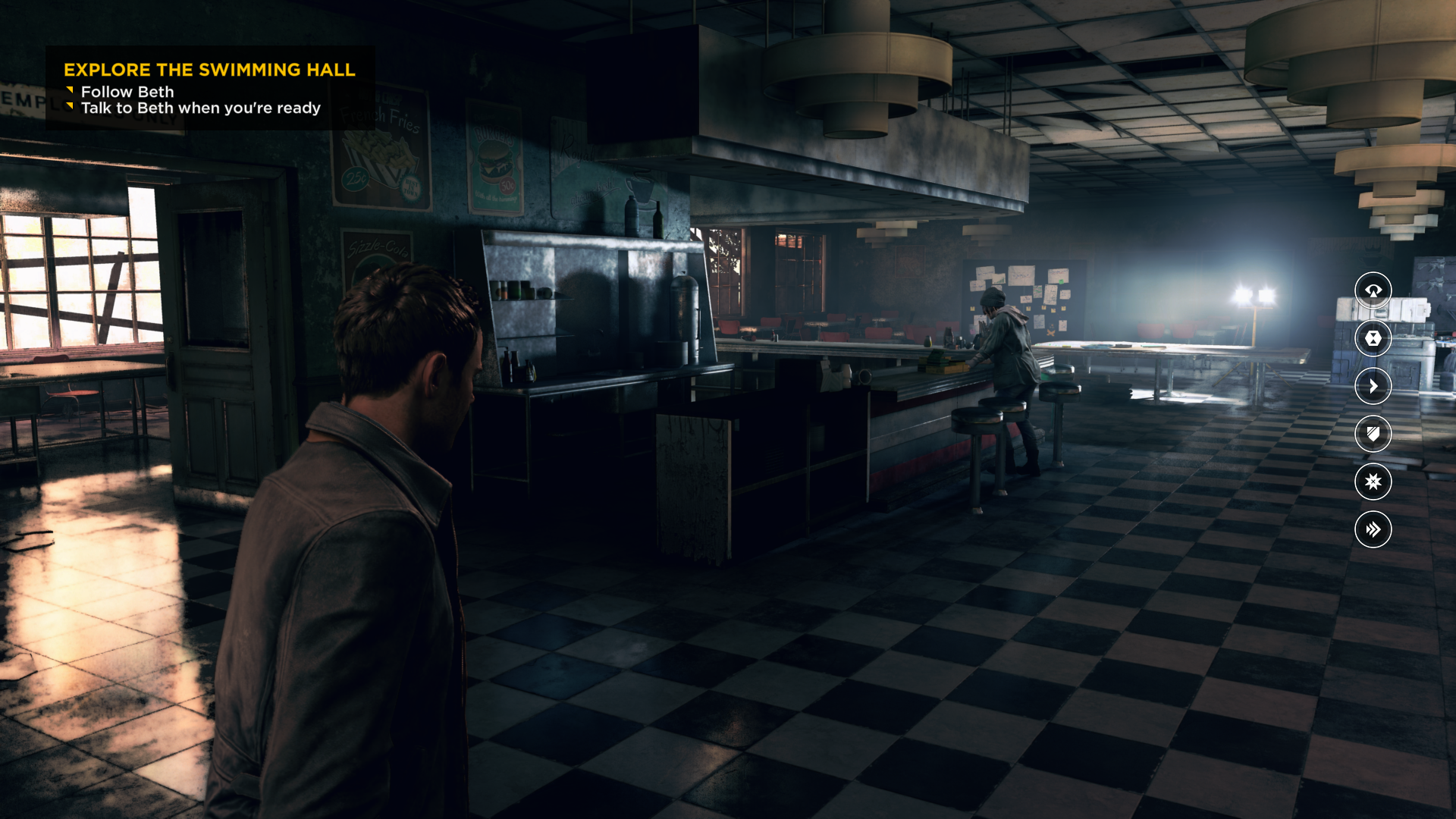

What QB does do better than most in the SSR department, though, is how they handle reflections on rough surfaces. KKSF calculates reflections for every surface as if it were mirror-like, and blurs them after the fact, again in screen space, with a blur radius that varies with how rough the surface is. There are several reasons why this doesn't look very good or realistic, and it's far from PBR-esque. QB seems to do some sort of stochastic distribution of rays across rough surfaces, which gets them those nice sharp contact reflections that grow blurrier with distance from the reflected object. They also seem to not do even the slightest bit of blurring on that, which keeps them sharp, at the cost of making them very noisy. You can even spot their jitter pattern on large flat rough surfaces.
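Here is a small sketch of why jittered rays give you contact-sharp reflections while a roughness-only blur does not. I don't know what QB actually does internally, so this is an assumption-laden toy: it works in 2D, jitters the reflection angle uniformly inside a cone whose half-angle scales with roughness (a real implementation would more likely importance-sample the BRDF), and the 30 degree cone and 8 px blur radius are invented numbers.

```python
import math
import random


def stochastic_hit_offsets(roughness, distance, n_rays=256, seed=0):
    """Lateral offsets at which jittered reflection rays land, at a given
    distance between the reflecting surface and the reflected object."""
    rng = random.Random(seed)
    max_angle = roughness * math.radians(30.0)  # hypothetical cone half-angle
    return [distance * math.tan(rng.uniform(-max_angle, max_angle))
            for _ in range(n_rays)]


def spread(offsets):
    """Width of the footprint covered by the rays."""
    return max(offsets) - min(offsets)


def post_blur_radius(roughness, max_radius_px=8.0):
    """Mirror-then-blur approach: the radius depends on roughness only,
    so it is the same at the contact point and far away from it."""
    return roughness * max_radius_px


near = spread(stochastic_hit_offsets(roughness=0.5, distance=0.1))
far = spread(stochastic_hit_offsets(roughness=0.5, distance=2.0))
mirror = spread(stochastic_hit_offsets(roughness=0.0, distance=2.0))
```

With the jittered rays, `near` is a small fraction of `far` for the same roughness, which is the "sharp at contact, blurrier with distance" behaviour, and `mirror` collapses to zero. `post_blur_radius(0.5)` returns the same value no matter how far away the reflected object is. The per-pixel randomness is also where the noise comes from if nothing filters it afterwards.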

*Usually at quarter res or lower. QB does it at 720p, which makes their SSAO and SSR higher res than even those of 1080p games, and also has them match the native g-buffer resolution, which is unusual, but also made easier by the fact that their native resolution is simply that low...
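The arithmetic behind that footnote, assuming "quarter res" means half the width and half the height (so a quarter of the pixel count), which is the usual meaning but still an assumption on my part:

```python
def quarter_res(width, height):
    """Half width and half height, i.e. a quarter of the pixels."""
    return width // 2, height // 2


def pixel_count(width, height):
    return width * height


q_w, q_h = quarter_res(1920, 1080)       # effects buffer of a typical 1080p game
quarter_1080p = pixel_count(q_w, q_h)
full_720p = pixel_count(1280, 720)       # effects at full 720p

print(q_w, q_h, quarter_1080p, full_720p)
```

That works out to a 960x540 buffer with 518,400 pixels versus 921,600 pixels at full 720p, so roughly 1.8x as many samples for the screen-space effects.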

EDIT: Mirror's Edge Catalyst will ship with the new SSR implementation from EA's Frostbite, which is one of the most robust and high-quality ones right now. I recommend you guys check out their SIGGRAPH presentation.