DavidGraham

Veteran

Obvious fake is a fake !


*snip*

Don't ask.. I have no idea where this is coming from.


I have this great idea for a website: write a whole bunch of nonsense breaking news about company XYZ. Then a bit later, I write more breaking news that my earlier breaking news is probably too hard for company XYZ because of whatever other nonsense reason. Then even later, I write that my first breaking news won't happen after all, because, wouldn't you know it, it was indeed too hard for company XYZ. And then I write "I told you so." And nobody will ever be able to prove me wrong.

Rinse, lather, repeat.

Indeed. Hence the alleged...

However, it does look as if it is small, at least smaller than we expected.

I'm sorry to break the magic number 555

http://diy.pconline.com.cn/graphics/news/1203/2702126.html?ad=6431

http://www.3dcenter.org/news/nvidia-gk104-354-milliarden-transistoren-auf-nur-294mm%C2%B2-chip-flaeche

Maybe someone who knows German can translate but I can see 294mm² die size, 3.54 billion transistors, TDP 195W and something about 185W.


I don't speak German either but the 185W seems like typical power consumption.

One of the early reports of a "boost" mentioned +5-7% I think. +52 MHz fits in with that at least.
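As a rough sanity check on that, here is a minimal sketch of the arithmetic. It only uses the +52 MHz and the 5-7% range from the post above; the 1000 MHz base clock in the last step is a made-up round number for illustration, not a leaked spec.

```python
# Which base clocks make a +52 MHz boost land in the rumoured 5-7% range?
boost_mhz = 52.0
low_fraction, high_fraction = 0.05, 0.07

# boost = base * fraction, so base = boost / fraction
base_max_mhz = boost_mhz / low_fraction    # boost is only 5% of this base
base_min_mhz = boost_mhz / high_fraction   # boost is a full 7% of this base

print(f"Base clock between {base_min_mhz:.0f} and {base_max_mhz:.0f} MHz")


def boost_fraction(base_mhz: float) -> float:
    """Boost expressed as a fraction of the base clock."""
    return boost_mhz / base_mhz


# Hypothetical 1000 MHz base clock (assumption, for illustration only).
example_fraction = boost_fraction(1000.0)
print(f"At 1000 MHz base: {example_fraction:.1%}")
```

So the two numbers only line up if the base clock sits somewhere in the upper 700s to about 1 GHz.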

What's 555?

The 185W are a guess of real-world consumption.

And the 3.54B and 294mm² are from Chiphell's Napoleon: http://www.chiphell.com/forum.php?mod=viewthread&tid=382049&page=2#pid11424899

My forum post count. Sorry I disappointed you :/

If true, a point to note is that GK104 beats Tahiti in density. Pitcairn is still 10% higher though.
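For anyone who wants to check the density claim, a quick sketch follows. The GK104 numbers are the rumoured 3.54B / 294mm² from this thread. The Tahiti (4.31B, 365mm²) and Pitcairn (2.8B, 212mm²) figures are my assumption based on commonly cited specs, not something posted here, so treat the result as approximate.

```python
# Transistor density in millions of transistors per mm^2 (MTr/mm^2).
# GK104: rumoured figures quoted in this thread.
# Tahiti, Pitcairn: assumed figures (commonly cited specs, not from this thread).
chips = {
    "GK104": (3.54e9, 294.0),
    "Tahiti": (4.31e9, 365.0),
    "Pitcairn": (2.80e9, 212.0),
}


def density_mtr_per_mm2(transistors: float, area_mm2: float) -> float:
    """Millions of transistors per square millimetre."""
    return transistors / area_mm2 / 1e6


densities = {
    name: density_mtr_per_mm2(transistors, area)
    for name, (transistors, area) in chips.items()
}

for name, density in densities.items():
    print(f"{name}: {density:.2f} MTr/mm^2")

# How much denser Pitcairn is than GK104, as a fraction.
pitcairn_vs_gk104 = densities["Pitcairn"] / densities["GK104"] - 1.0
print(f"Pitcairn vs GK104: {pitcairn_vs_gk104:+.1%}")
```

With those inputs GK104 edges out Tahiti and Pitcairn comes out roughly 10% denser than GK104, which matches the post. A different Tahiti die-size figure would shift the Tahiti comparison.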

Just a guess, but I've heard multiple times that IO doesn't shrink well, so this may just be down to having a 20% smaller die with 50% fewer pins to RAM.


I'm going to speculate the GTX680 will launch at $399, and the GTX670 at $299

I don't believe NV will launch with price/performance parity with AMD, since

1) They are 3-4 months behind AMD, so equal price-performance will seem lame, and not win any kudos...

2) The GK104 looks to be small & powerful, so when NV have a winner, they usually go after market...

http://fudzilla.com/home/item/26308-nvidia-gtx-680-pixellized-in-more-detail

That sounds pretty negative considering the source. Around the same speed as 7970 in the nvidia-selected benchmarks and drivers (IOW: slower), and nvidia feels the need to bang the perf/watt drum instead. (wonder if the 190W is tdp or just nvidia-tdp).

I see what he says but... how exactly would that happen? Are the guys at Apple too blind to see that Intel's graphics products are close to a tragedy in image quality, drivers, etc.?

If the low-end and mid-range video card segments are gone forever, then what exactly is nvidia's future, considering they lost the consoles too? And with these uber-high prices for high-end video cards, next to no one will buy them outside the professional segments...

So basically we're back to NV40 versus R420 (similar price, similar performance) and G70 versus R520 (again similar price, similar performance).

Then I want a model that is $200 less expensive and can be flashed to be the same as the fastest card. Otherwise your analogy just makes pandas sad.


There are three far more likely possibilities:

- ECC and DP make the die less dense

- Tahiti is intentionally less dense to increase performance

- Or, AMD had greater knowledge of the process and architecture when they designed Pitcairn

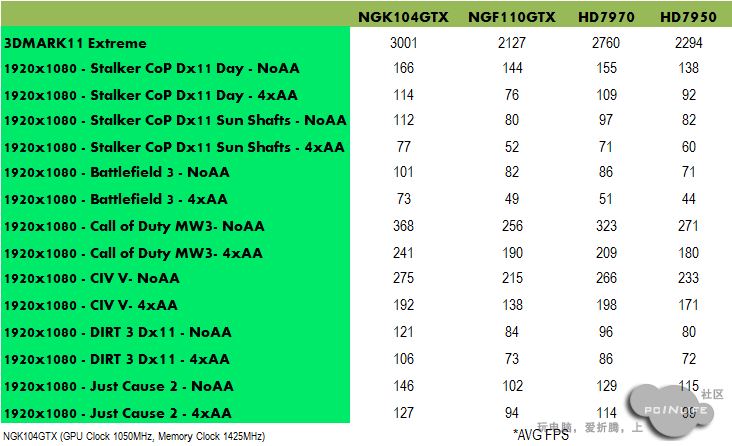

God how I hate graphs with no zero. Utter horseshit through and through.

The GTX680 has 3x the bar length in BF3!!! WOW!!!

Of course, I'm calling the data baloney too.

Only until you actually look at the values on the graph. Sure, it'll fool the average Joe, but anyone with half a brain shouldn't really be all that bothered about it.
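To put numbers on the no-zero complaint, here is a small sketch of how a truncated axis stretches the visual gap. The frame rates and the axis start are invented for illustration. They are not read off the slide.

```python
# Hypothetical frame rates, invented for illustration (not taken from the slide).
fps_long_bar = 70.0
fps_short_bar = 60.0


def bar_length(value: float, axis_start: float) -> float:
    """Drawn length of a bar when the axis begins at axis_start."""
    return value - axis_start


def visual_ratio(a: float, b: float, axis_start: float) -> float:
    """How many times longer bar a looks than bar b."""
    return bar_length(a, axis_start) / bar_length(b, axis_start)


# Axis starting at zero: bar lengths are proportional to the values.
honest_ratio = visual_ratio(fps_long_bar, fps_short_bar, axis_start=0.0)

# Axis starting at 55 (hypothetical): bars are 15 and 5 units long.
truncated_ratio = visual_ratio(fps_long_bar, fps_short_bar, axis_start=55.0)

print(f"Axis from 0:  {honest_ratio:.2f}x the bar length")
print(f"Axis from 55: {truncated_ratio:.2f}x the bar length")
```

In this made-up case a 17% lead gets drawn as a bar three times as long, which is why reading the actual values matters more than the bar lengths.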
