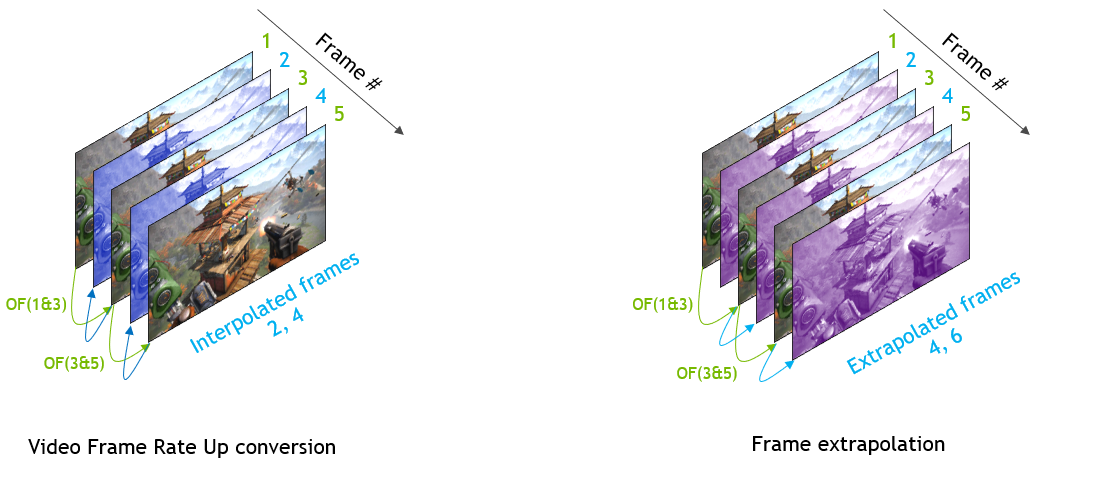

Yep, DLSS 3 adds latency and smooths motion, and the combination seems completely pointless to me. The benefit is smoother motion, and that benefit is most needed at lower frame rates. However, lower frame rates mean higher latency, so that is the worst time to add even more latency. Really looking forward to the DF analysis on this. Not sure what problem this is solving.
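To put rough numbers on the latency point, here is a back-of-the-envelope sketch. It assumes, as a simplification, that frame generation has to hold back about one rendered frame before it can show the in-between frame, so the extra latency is roughly one rendered frame time. The `held_frames` parameter and both function names are made up for illustration and are not measured DLSS 3 figures.

```python
def frame_time_ms(fps: float) -> float:
    """Time between rendered frames, in milliseconds."""
    return 1000.0 / fps


def added_latency_ms(rendered_fps: float, held_frames: float = 1.0) -> float:
    """Rough extra latency if `held_frames` rendered frames are held back.

    held_frames=1.0 is an assumption for illustration, not a measured value.
    """
    return held_frames * frame_time_ms(rendered_fps)


for fps in (30, 60, 120):
    print(
        f"{fps:>3} fps rendered: frame time {frame_time_ms(fps):5.1f} ms, "
        f"extra latency ~{added_latency_ms(fps):5.1f} ms"
    )
```

Under that assumption the penalty is about 33 ms at 30 fps rendered but only about 8 ms at 120 fps, which is why the cost is largest exactly where the smoothing would help most.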

Blur Busters Law: The Amazing Journey To Future 1000Hz Displays - Blur Busters

A Blur Busters Holiday 2017 Special Feature by Mark D. Rejhon 2020 Update: NVIDIA and ASUS now has a long-term road map to 1000 Hz displays by the 2030s! Contacts at NVIDIA and vendors has confirmed this article is scientifically accurate. Before I Explain Blur Busters Law for Display Motion...

There is a noticeable difference in motion sharpness between 240Hz and 120Hz, as well as an improvement in image smoothness. I've been using a 240Hz display for a while, and the difference between 240 fps and 120 fps is noticeable in first- or third-person games where you control the camera. The next big perceivable jump from 240Hz is probably 480Hz, and it's just unrealistic for CPU, memory and GPU to scale to reach that in the near future. With frame generation of sufficient quality you can get there with some trade-offs. 1080p 500Hz displays with G-Sync modules should be out before the end of the year.
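For a rough feel of why each doubling matters, the rule of thumb from the linked Blur Busters article is that 1 ms of persistence gives about 1 pixel of motion blur per 1000 pixels/sec of motion. The sketch below assumes a sample-and-hold display with no strobing, running at a frame rate equal to its refresh rate, so persistence is simply one refresh period. The function names and the 1000 px/s panning speed are just illustrative choices.

```python
def persistence_ms(refresh_hz: float) -> float:
    """Persistence of a sample-and-hold display at fps == Hz (assumed)."""
    return 1000.0 / refresh_hz


def motion_blur_px(refresh_hz: float, speed_px_per_s: float = 1000.0) -> float:
    """Approximate blur width: 1 ms persistence ~ 1 px per 1000 px/s."""
    return persistence_ms(refresh_hz) * speed_px_per_s / 1000.0


for hz in (120, 240, 480, 1000):
    print(f"{hz:>4} Hz: ~{motion_blur_px(hz):.1f} px of blur at 1000 px/s")
```

With those assumptions, a 1000 px/s pan smears about 8.3 px at 120Hz, 4.2 px at 240Hz and 2.1 px at 480Hz, so every doubling of frame rate halves the blur.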