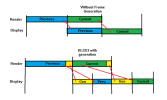

Kaotik: there is no frame extrapolation, which would be equivalent to predicting the future.

I've heard that's a pretty hard problem to solve.

Just listen to Jensen's words (the video starts playing right before the quote below):

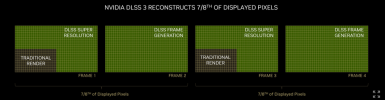

DLSS 3 processes the new frame and the prior frame to discover how the scene is changing.

The optical flow accelerator provides the neural network with the direction and velocity of pixels from frame to frame.

Pairs of frames from the game, along with the geometry and pixel motion vectors, are then fed into a neural network that generates the intermediate frame.
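To make the interpolation-vs-extrapolation point concrete: the generated frame sits *between* two frames that have already been rendered, so both endpoints are known. Below is a minimal sketch of classic motion-compensated interpolation. It assumes a single per-pixel flow field (in pixels, from the prior frame to the new one), grayscale frames, and nearest-neighbour sampling. This is not NVIDIA's method, which uses a neural network. `interpolate_midframe` and `sample` are hypothetical names chosen for illustration.

```python
import math
from typing import List, Tuple

Frame = List[List[float]]               # grayscale image, indexed frame[y][x]
Flow = List[List[Tuple[float, float]]]  # per-pixel (dx, dy) from prev to next (assumed)


def sample(frame: Frame, x: float, y: float) -> float:
    """Nearest-neighbour lookup, clamping coordinates to the frame edges."""
    h, w = len(frame), len(frame[0])
    xi = min(max(math.floor(x + 0.5), 0), w - 1)
    yi = min(max(math.floor(y + 0.5), 0), h - 1)
    return frame[yi][xi]


def interpolate_midframe(prev: Frame, nxt: Frame, flow: Flow, t: float = 0.5) -> Frame:
    """Build the frame at time t in [0, 1] between two already-rendered frames.

    Simplification: the flow stored at the output pixel is reused as the
    motion through that pixel; occlusions are ignored.
    """
    h, w = len(prev), len(prev[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            dx, dy = flow[y][x]
            # Where this content was in the prior frame...
            a = sample(prev, x - t * dx, y - t * dy)
            # ...and where it will be in the new frame.
            b = sample(nxt, x + (1 - t) * dx, y + (1 - t) * dy)
            # Blend, weighting the temporally closer frame more.
            out[y][x] = (1 - t) * a + t * b
    return out


# A bright pixel moves from x=1 to x=3; the midframe places it at x=2.
prev = [[0.0, 1.0, 0.0, 0.0, 0.0, 0.0]]
nxt = [[0.0, 0.0, 0.0, 1.0, 0.0, 0.0]]
flow = [[(2.0, 0.0)] * 6]
print(interpolate_midframe(prev, nxt, flow))  # → [[0.0, 0.0, 1.0, 0.0, 0.0, 0.0]]
```

Extrapolation would instead mean evaluating at t > 1 with no `nxt` frame available, which is exactly the "predicting the future" problem the quote rules out.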

.. and the DLSS 3 blog post also confirms the same notion:

For each pixel, the DLSS Frame Generation AI network decides how to use information from the game motion vectors, the optical flow field, and the sequential game frames to create intermediate frames.
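The blog post says a network makes a per-pixel decision about which motion source to trust. How that network decides isn't public, so the following is only a hand-written stand-in to illustrate the idea. For each pixel it keeps whichever candidate vector (game motion vector or optical flow) better predicts the new frame, measured by absolute intensity difference. The photometric-error rule and the name `choose_motion` are assumptions for illustration. One case where the two sources can disagree is a shadow moving across a static floor: the floor's game motion vector is zero, but its apparent motion is not.

```python
import math
from typing import List, Tuple

Frame = List[List[float]]             # grayscale image, indexed frame[y][x]
Field = List[List[Tuple[float, float]]]  # per-pixel (dx, dy), prev -> next


def sample(frame: Frame, x: float, y: float) -> float:
    """Nearest-neighbour lookup, clamping coordinates to the frame edges."""
    h, w = len(frame), len(frame[0])
    xi = min(max(math.floor(x + 0.5), 0), w - 1)
    yi = min(max(math.floor(y + 0.5), 0), h - 1)
    return frame[yi][xi]


def choose_motion(prev: Frame, nxt: Frame, game_mv: Field,
                  optical_flow: Field) -> Tuple[Field, List[List[str]]]:
    """Hypothetical per-pixel rule standing in for the learned decision.

    Keeps the candidate vector whose destination in `nxt` best matches the
    pixel's value in `prev`; ties go to the game motion vector.
    """
    h, w = len(prev), len(prev[0])
    chosen: Field = []
    source: List[List[str]] = []
    for y in range(h):
        row, src = [], []
        for x in range(w):
            best = None  # (error, source name, vector)
            for name, field in (("game_mv", game_mv), ("optical_flow", optical_flow)):
                dx, dy = field[y][x]
                err = abs(prev[y][x] - sample(nxt, x + dx, y + dy))
                if best is None or err < best[0]:
                    best = (err, name, (dx, dy))
            row.append(best[2])
            src.append(best[1])
        chosen.append(row)
        source.append(src)
    return chosen, source


# A shadow (0.5) slides from x=1 to x=2 over a static floor (1.0).
prev = [[1.0, 0.5, 1.0, 1.0]]
nxt = [[1.0, 1.0, 0.5, 1.0]]
game_mv = [[(0.0, 0.0)] * 4]  # geometry is static
optical_flow = [[(0.0, 0.0), (1.0, 0.0), (0.0, 0.0), (0.0, 0.0)]]
_, source = choose_motion(prev, nxt, game_mv, optical_flow)
print(source)  # → [['game_mv', 'optical_flow', 'game_mv', 'game_mv']]
```

A fixed rule like this breaks down on noise, occlusions and lighting changes, which is presumably why the per-pixel choice is learned rather than hard-coded.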