Where the frame ends up is hard to say; you're right on that point. The only point I wanted to bring forward is that it doesn't need both the current and the prior frame to generate the frame in between them. It refers to a prior frame, so that would be current - 1, but it doesn't refer to a current one. So where that frame is injected, or which frame it's generating, is not crystal clear to me. Ordering is certainly up for discussion; what the inputs are is where I have a firmer stance.

If it's extrapolating, it could perhaps order the frames so that the tensor cores are busy generating the current frame (using previous-frame information) while the rest of the GPU works on the next frame, and the half-frame latency comes down to presenting, processing, etc.


Nvidia DLSS 3 antialiasing discussion

- Thread starter TopSpoiler


There's no way this tech can exist if it only works on the final frame buffer. I assume it works at the same stage as DLSS, before the final overlays are added and the frame is sent to the display.

I could stomach seeing the health bar wiggle in response to movement on the screen from time to time, but the idea of the crosshairs shifting away in response to an enemy moving towards them is kind of amusing. Something like that I'd think you would want to explicitly train and/or mask out, because something that's pixel-thick could easily be overpowered by large moving objects?

But 1/2 frame sort of still tells me that Optical Flow is being used for extrapolation, not interpolation. If it were interpolation, latency would be 1.5 frames behind, not 0.5 frames behind.

Look at what scott_arm posted before to see how interpolation produces a 0.5-frame delay when going from 60 FPS to 120 FPS. Instead of displaying Frame 2 at 16.6 ms, it displays Frame 1.5 at 16.6 ms and Frame 2 at 24.9 ms (16.6 + 8.3 ms).
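The timeline in that post can be reproduced with a few lines. This is a minimal sketch of a simplified 2x interpolation model, not Nvidia's actual presentation logic: it assumes each generated frame k - 0.5 needs rendered frame k as an input, so it takes frame k's native display slot and frame k slips by half a frame time. The function name `interpolated_schedule` is hypothetical.

```python
def interpolated_schedule(frame_time_ms, n_rendered):
    """Display times for 2x frame generation by interpolation (simplified model).

    Natively, rendered frame k is shown at (k - 1) * frame_time_ms. With
    interpolation, generated frame k - 0.5 needs frame k as an input, so it takes
    frame k's native slot and frame k itself slips by half a frame time.
    Frame 1 is treated as the starting point and is not delayed here.
    """
    half = frame_time_ms / 2
    schedule = [("1", 0.0)]
    for k in range(2, n_rendered + 1):
        native = (k - 1) * frame_time_ms
        schedule.append((f"{k - 0.5:g}", native))  # generated in-between frame
        schedule.append((str(k), native + half))   # real frame, delayed by T/2
    return schedule


for label, t in interpolated_schedule(16.6, 3):
    print(f"frame {label}: {t:.1f} ms")
# frame 1: 0.0 ms
# frame 1.5: 16.6 ms
# frame 2: 24.9 ms
# frame 2.5: 33.2 ms
# frame 3: 41.5 ms
```

Displayed frames arrive every 8.3 ms (120 FPS), while every real frame after the first reaches the screen half a rendered frame later than it would natively.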

In terms of "presentation", if we only consider whatever is displayed, yes. In terms of "input latency", this is not true if interpolation is actually what's happening here, because ultimately our rendered frame is the one that truly polls for user input. Our generated frame does NOT poll for user input, so we have a full rendered frame's worth of delay added when it comes to input latency ...

For DLSS to ever truly have only half a frame of additional input latency, it would have to be able to run the game logic/user input polling twice as fast, but I doubt this happens if one of their goals is to improve frame rate in "CPU bound" situations ...

I would draw that differently, only because the entire DLSS frame is generated outside of the graphics pipeline. It's not sequential: the standard graphics pipeline stays as it is, and the only difference is at buffer switching. You switch to the intermediate frame earlier, giving your GPU as much time as possible to finish, and then at the 1/2-frame mark you switch the buffer to the end product; that's how you get 1/2 frame of latency. If you reorder it so the generated frame comes after the current, still-in-progress frame, you have no effective latency loss, but you can run into some weird frame pacing errors if the next rendered frame doesn't show up quickly enough.

The model could technically be trained in either direction. Generating in between the current frame and the prior frame should be more accurate, but you eat some latency. If you go the other direction, you remove the latency, but you lose accuracy and your frame pacing may be all over the place.

Exactly. In the end it's going to be more performant and smoother looking, with less input latency than native.

Yea it's definitely worse than DLSS 2.0 + Reflex, it's not free. But it is better than native, which wouldn't be possible with traditional interpolation.

The-Nastiest


As a tech head, I'm super excited to see how DLSS 3 frame-gen translates on screen, but I don't know when that will be, since everyone I know (myself included) upgraded in the last 2 gens and no one I know plans on getting an RTX 40 card. I don't think DF's slow motion videos will be able to translate the actual experience.


To be honest, while curious, I'm not too hopeful. I can't imagine "fake frames" that are injected into the pipeline will look natural in motion. Even with DLSS 2.X, if I can hit my target framerate I never use it since I think it looks a bit weird in motion.

I don't have the correct technical terms to describe it, but despite its superior reconstruction and AA coverage over TAA, it looks unnatural when moving the camera, since it attempts to keep details sharp (overly sharp if you ask me) by continually referencing the 16K image it's trained on.



Deleted member 2197


MSN (www.msn.com)

The new part is frame generation. DLSS 3 will generate an entirely unique frame every other frame, essentially generating seven out of every eight pixels you see. You can see an illustration of that in the flow chart below. In the case of 4K, your GPU only renders the pixels for 1080p and uses that information for not only the current frame but also the next frame.
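The article's "seven out of every eight pixels" figure is the product of two factors, assuming 1080p-to-4K upscaling (a quarter of the output pixels rendered) and one generated frame for every rendered frame (half of the displayed frames rendered). A minimal sketch of that arithmetic; the function name `rendered_pixel_fraction` is hypothetical:

```python
from fractions import Fraction


def rendered_pixel_fraction(render_res, output_res, generated_per_rendered=1):
    """Fraction of displayed pixels that were actually rendered (simplified model).

    render_res, output_res: (width, height) tuples.
    generated_per_rendered: generated frames per rendered frame (1 = every other frame).
    """
    spatial = Fraction(render_res[0] * render_res[1], output_res[0] * output_res[1])
    temporal = Fraction(1, 1 + generated_per_rendered)
    return spatial * temporal


f = rendered_pixel_fraction((1920, 1080), (3840, 2160))
print(f, 1 - f)  # 1/8 7/8
```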

...

Frame generation isn’t just some AI secret sauce, though. In DLSS 2 and tools like FSR, motion vectors are a key input for the upscaling. They describe where objects are moving from one frame to the next, but motion vectors only apply to geometry in a scene. Elements that don’t have 3D geometry, like shadows, reflections, and particles, have traditionally been masked out of the upscaling process to avoid visual artifacts.

Masking isn’t an option when an AI is generating an entirely unique frame, which is where the Optical Flow Accelerator in RTX 40-series GPUs comes into play. It’s like a motion vector, except the graphics card is tracking the movement of individual pixels from one frame to the next. This optical flow field, along with motion vectors, depth, and color, contributes to the AI-generated frame.
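To make "tracking the movement of individual pixels" concrete, here is a minimal sketch of classic block-matching motion estimation in pure Python. This is a textbook technique swapped in for illustration, not how Nvidia's Optical Flow Accelerator works; the function name `block_match`, the block size and the search radius are hypothetical choices. It estimates one vector per small block rather than per pixel, picking the offset with the lowest sum of absolute differences (SAD).

```python
def block_match(prev, curr, block=2, search=2):
    """Estimate one (dy, dx) motion vector per block of `prev` by exhaustive
    search in `curr`, minimising the sum of absolute differences (SAD).

    prev, curr: equally sized 2D lists of grayscale values.
    Returns {(block_top, block_left): (dy, dx)}.
    """
    h, w = len(prev), len(prev[0])
    flow = {}
    for by in range(0, h - block + 1, block):
        for bx in range(0, w - block + 1, block):
            best, best_key = (0, 0), (float("inf"), 0)
            for dy in range(-search, search + 1):
                for dx in range(-search, search + 1):
                    y0, x0 = by + dy, bx + dx
                    if not (0 <= y0 <= h - block and 0 <= x0 <= w - block):
                        continue  # candidate block would fall outside the frame
                    sad = sum(
                        abs(prev[by + i][bx + j] - curr[y0 + i][x0 + j])
                        for i in range(block)
                        for j in range(block)
                    )
                    key = (sad, abs(dy) + abs(dx))  # on ties, prefer the smallest motion
                    if key < best_key:
                        best, best_key = (dy, dx), key
            flow[(by, bx)] = best
    return flow


# Hypothetical 8x8 frames: a bright 2x2 square moves one pixel to the right.
prev = [[0] * 8 for _ in range(8)]
curr = [[0] * 8 for _ in range(8)]
for y in (2, 3):
    for x in (2, 3):
        prev[y][x] = 255
        curr[y][x + 1] = 255

flow = block_match(prev, curr)
print(flow[(2, 2)])  # (0, 1)
```

Textureless blocks match equally well at many offsets; the tie-break toward the smallest displacement keeps them at (0, 0), which hints at why raw matching alone is not enough for a high-quality flow field.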

It sounds like all upsides, but there’s a big problem with frames generated by the AI: they increase latency. The frame generated by the AI never passes through your PC — it’s a “fake” frame, so you won’t see it on traditional fps readouts in games or tools like FRAPS. So, latency doesn’t go down despite having so many extra frames, and due to the computational overhead of optical flow, the latency actually goes up. Because of that, DLSS 3 requires Nvidia Reflex to offset the higher latency.

...

DLSS is executing at runtime. It’s possible to develop an algorithm, free of machine learning, to estimate how each pixel moves from one frame to the next, but it’s computationally expensive, which runs counter to the whole point of supersampling in the first place. With an AI model that doesn’t require a lot of horsepower and enough training data — and rest assured, Nvidia has plenty of training data to work with — you can achieve optical flow that is high quality and can execute at runtime.

That leads to an improvement in frame rate even in games that are CPU limited. Supersampling only applies to your resolution, which is almost exclusively dependent on your GPU. With a new frame that bypasses CPU processing, DLSS 3 can double frame rates in games even if you have a complete CPU bottleneck. That’s impressive and currently only possible with AI.

I for one am not a fan of these fake frames found in DLSS 3 and I certainly will be turning it off where possible. Instead of Nvidia advancing their architecture and hardware design, they’re looking for shortcuts to cheat. DF hasn’t even released their full video and I’ve already spotted tons of artifacts in Nvidia’s video. The whole point of increasing frame rate is to reduce latency. This doesn’t reduce latency and it inserts frames not tied to the game logic. In essence, it should be brandished as a scam because that’s exactly what it is. This way, Nvidia gets to advertise higher FPS on their GPUs while not providing the benefits of increased fps.

Edit: Now we can’t even trust FPS metrics, because Nvidia is determined to obfuscate the data, similar to monitor manufacturers and dynamic contrast. It’s been a while since I’ve come across a scam this big, and anyone endorsing this needs to get their heads checked.


What the hell? That's just simply not true at all. Why would you possibly think like that?


If you want to be real, engineers have been "looking for shortcuts to cheat" since the beginning of 3D computer rendering, and that's exactly what has allowed us to get to the point we're at. Literally every aspect of rendering is "cheating" in some respect.

Nvidia are the only ones actually ADVANCING rendering. You could have your favorite GPU company design a completely new gaming-focused hardware design with, let's say, a 20x more efficient architecture..... and they're STILL going to look for ways to be more efficient... render less... compute less... and add that on top of the other improvements. Rendering smarter is entirely separate from everything else. If you can produce something which for all intents and purposes looks the same while rendering 1/4 (or 1/8th) of the pixels... you're going to do it. That will never change.

Trusting FPS metrics has been useless for a long time. We already have better ways to measure performance and consistency.

You're misguided in your hatred towards this type of advancement. It's absolutely necessary for the future, and as with anything new, people hate what they don't understand or don't want to understand.

And of course, certain people won't understand until AMD comes out with some bootleg implementation that's not quite as good, but good enough.

gamervivek


G-Sync/FreeSync double (or even triple/quadruple) the monitor's refresh rate if the fps drops below the lower bound of the monitor/TV's VRR range. When I first played Cyberpunk, I manually increased the lower limit using CRU so that a fixed 60fps would be displayed at 120Hz on the monitor. The game felt smoother, and I think DLSS3 should make it even better since it's not simply duplicating the frame. It should also get rid of the judder you'd get when panning across a scene.
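The frame-multiplying behaviour described above (often called low framerate compensation, LFC) can be modelled in a few lines. This is a simplified sketch under stated assumptions, not the actual G-Sync/FreeSync driver logic: it picks the smallest integer repeat count that lifts the frame rate into the VRR window. The 100 Hz lower limit in the second call is a hypothetical stand-in for a CRU-raised minimum; the post does not say which value was used. The function name `lfc_refresh` is also hypothetical.

```python
import math


def lfc_refresh(fps, vrr_min, vrr_max):
    """Simplified low-framerate-compensation model (an assumption, not the
    actual driver logic): show each frame n times, with n the smallest integer
    that lifts fps * n into the VRR window [vrr_min, vrr_max].

    Returns (n, refresh_hz), or None if no integer multiple fits.
    """
    if fps <= 0:
        raise ValueError("fps must be positive")
    if fps > vrr_max:
        return None  # above the window: not modelled here
    n = max(1, math.ceil(vrr_min / fps))
    refresh = fps * n
    return (n, refresh) if refresh <= vrr_max else None


print(lfc_refresh(60, 48, 144))   # (1, 60): 60 fps already sits inside the window
print(lfc_refresh(60, 100, 144))  # (2, 120): a raised lower limit forces frame doubling
print(lfc_refresh(20, 48, 144))   # (3, 60)
```

Repeating a frame only raises the refresh rate; the image content still changes 60 times per second, which is the gap a generated in-between frame is meant to fill.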

The high-refresh-rate monitors currently available are mostly IPS, and while they work well at lower refresh rates, the overdrive is tuned for the highest refresh rate and most don't have variable OD. Even OLEDs, which don't require OD, have gamma shift issues.


@Remij I'm not sure if a lot of these people just haven't experienced high refresh displays, so they don't understand the benefits of having a 240 or 360Hz display, and how difficult it is to find GPUs, CPUs and RAM that can drive them.

CPU scaling is still pretty slow if you want a consistent 500 fps, let alone the 1000 fps that would be needed for really lifelike motion and images. Gen-over-gen CPU gains are decent, but it's clearly a long road to get a lot of games hitting high frame rates. There are plenty of CPU-limited games, especially when ray tracing is enabled. 240Hz is pretty doable for a lot of games with a mid- to high-end PC, but if you want 360 or 480Hz displays there's a pretty small group of games you could actually play at that rate. Lots of games have internal caps that don't go that high, and most others just require absurd CPUs and RAM to try. It's really only old games and a few competitive games like CS and Valorant where it's pretty achievable.

RAM technology is advancing way slower than CPUs, and to get really high 1% lows a lot of games will require overclocked DDR4 Samsung B-die memory. DDR5 is expensive and loses to DDR4 in most games until you get into the high 6000s, which is hard to do.

GPUs are the most likely to scale, but even there we're clearly hitting a wall with 300W GPUs. How do we get to 500+ fps while also adding ray tracing and increasing resolution? There's basically one answer, and that's to drastically reduce the amount of rendering done per frame. Generating frames between rendered frames is probably one of the keys to getting there. It's not "cheating." If you can get a game to render at 250+ fps and then amplify that to 500 or even 1000, you'll still have low input lag but a way more lifelike image.
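The time budgets behind those numbers are simple arithmetic. A minimal sketch, assuming (as the post argues) that responsiveness tracks the rendered rate while motion clarity tracks the displayed rate; the helper names are hypothetical:

```python
def frame_budget_ms(fps):
    """Time available per frame at a given frame rate."""
    return 1000.0 / fps


def amplification_plan(render_fps, output_fps):
    """Compare the rendered-frame budget with the displayed-frame interval."""
    return {
        "amplification": output_fps / render_fps,
        "render_budget_ms": frame_budget_ms(render_fps),
        "output_interval_ms": frame_budget_ms(output_fps),
    }


for out in (500, 1000):
    p = amplification_plan(250, out)
    print(f"250 -> {out} fps: x{p['amplification']:g}, "
          f"render {p['render_budget_ms']:g} ms, display every {p['output_interval_ms']:g} ms")
# 250 -> 500 fps: x2, render 4 ms, display every 2 ms
# 250 -> 1000 fps: x4, render 4 ms, display every 1 ms
```

The renderer keeps a 4 ms budget in both cases; only the generated frames have to fit into the shorter display interval.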

The question is whether RTX 40 series can do that with good quality, and holy shit it's not even released yet. First attempt may be good or bad, but at least wait and see. Maybe in two years DLSS 3.1 or DLSS 4 comes out and fixes a lot of the problems like DLSS 2 did. Maybe not. Who knows. These people should at least wait and see before they "brandish as a scam" or say the frames are "fake." Are the frames any less fake than DLSS or FSR? Are checkerboard frames "fake"? I don't get it.


Your first mistake was assuming I stan for AMD. I don’t care about either company. All I see here is a long-winded post justifying Nvidia cheating by creating fake frames. This new lazy cheat by Nvidia doesn’t fall under the standard “cheating” you’re describing. They are creating frames that have no impact on, and nothing to do with, the game logic. It’s just garbage frames that mean nothing. It does absolutely nothing to improve the feel of the game, the responsiveness of the game, etc. It’s by definition fake data. They then count this fake data and claim a 2x - 4x improvement, which is essentially tales/frames from their ass. They’re being dishonest and your argument is also dishonest.


This isn’t looking for ways to be more efficient, it’s just garbage frames that have nothing to do with the game. Cheap interpolation tricks. Rubbish.


Honestly, one of the worst and least charitable sets of posts I've seen on this forum in a while. I wrote up a bit of an explanation of the potential benefits and drawbacks. I'd actually amend this into five potential positives and three negatives. Honestly, some of the positives are all but guaranteed. The three negatives we don't really know yet. It seems obvious there will be compromises to image quality and input latency, but until we see it in action the subjective difference is not known.

On top of that there's frame pacing. It could actually really help with 1% lows and make games seem incredibly smooth. The higher you push fps, the more deviation in frame times you tend to get, especially if you have slow RAM. If this generation works well, you could have very good frametimes for half your frames, and then much higher 1% lows. But it could also magnify dips, or have other issues that lead to odd frame pacing that causes more stutter. We don't know yet.
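Since the argument leans on 1% lows, here is a minimal sketch of one common way that metric is computed from a frame-time log. Tools differ (some report the 99th-percentile frame time instead), so treat this definition as an assumption; the trace is hypothetical and the function names are illustrative.

```python
def one_percent_low_fps(frame_times_ms):
    """One common definition of the "1% low": the average frame rate over the
    slowest 1% of frames. (Tools differ; some use the 99th-percentile frame time.)"""
    slowest = sorted(frame_times_ms, reverse=True)
    n = max(1, len(slowest) // 100)
    return 1000.0 / (sum(slowest[:n]) / n)


def average_fps(frame_times_ms):
    """Frames delivered divided by total elapsed time."""
    return 1000.0 * len(frame_times_ms) / sum(frame_times_ms)


# Hypothetical trace: 99 frames at 5 ms plus one 20 ms hitch.
trace = [5.0] * 99 + [20.0]
print(round(average_fps(trace), 1))          # 194.2
print(round(one_percent_low_fps(trace), 1))  # 50.0
```

A single long frame barely moves the average but sets the 1% low, which is why frame pacing matters as much as raw fps here.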

Potential positives:

- judder reduction
- animation smoothness
- motion blur reduction
- CPU, memory, GPU scaling
- frame pacing

Potential negatives:

- input latency
- image quality (artifacts etc.)
- frame pacing

My personal take is you have a number of different factors that make DLSS3 very interesting.

- CPU + memory scaling
- motion improvement/judder reduction
- motion blur reduction
- input latency
- image quality

DLSS3 basically addresses the first three at the expense of the last two. I'll use the example of COD Warzone, because it's popular and incredibly demanding. To get 200+ fps consistently at 1080p you need something like an overclocked 12900K, with the ring bus and DDR4 (Samsung B-die only) pushed to the absolute limit, and probably an overclocked 3090. On top of that, you need pretty much every in-game setting set to low. The cost of that computer is absolutely out of reach for a ton of people, and it requires the skill, time and patience to deal with all of the overclocking. On top of that, DDR5 is the next spec for gaming, and so far it's very expensive and actually not as good for gaming overall because it has higher latency.

There is push to get to 1000Hz over time, because motion blur on sample and hold displays will effectively be eliminated. On top of that the reduction of judder when panning will create an incredibly lifelike image. There is no practical way to get there by drawing "native" frames. It's not practical with how hardware scales over time, especially as rendering requirements increase with ray tracing etc. What's going to get there is some form of frame construction that doesn't require rendering complete frames or rendering every frame.

The compromise is image quality and input latency. We'll have to see how bad the compromises are, and it's clear there are compromises, but overall this is the direction that gaming hardware has to take. RTX 40 series may not be the best example, and there will probably be good reasons to turn DLSS3 off with particular games, or even all of them. My guess is the intent is 240 fps with 120fps input lag, or 480 fps with 240fps input lag to match the current top of the line displays. 60 to 120 could be pretty good, but I think 30 to 60 is probably going to be trash. I also kind of wonder if games that are being made to support DLSS3.0 from the ground up will have higher quality than the ones that have the support added after release. Really don't know how involved or complex the integration of the new optical frame generation component is.

They have to start somewhere. You need D3D2 to get to D3D7. You need D3D7 to get to D3D12. You need TAA to get to DLSS/FSR2. DXR1 will lead to DXR2. DLSS3 is a first step to get to a better version of the same thing.

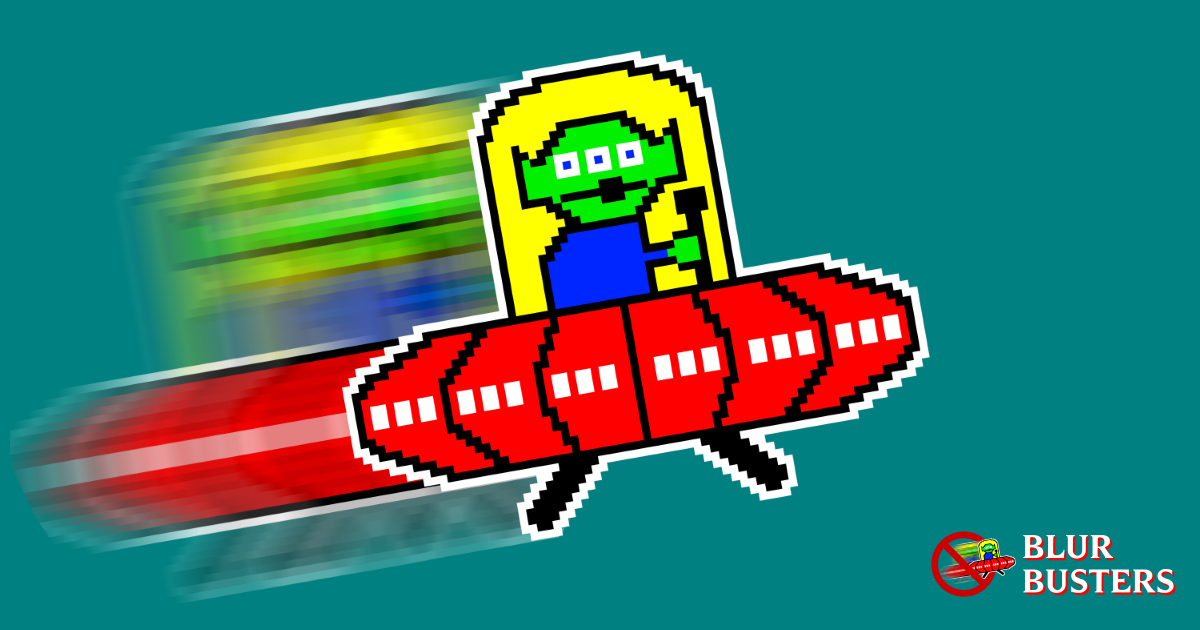

Blur Busters Law: The Amazing Journey To Future 1000Hz Displays - Blur Busters

A Blur Busters Holiday 2017 Special Feature by Mark D. Rejhon. 2020 Update: NVIDIA and ASUS now has a long-term road map to 1000 Hz displays by the 2030s! Contacts at NVIDIA and vendors has confirmed this article is scientifically accurate. Before I Explain Blur Busters Law for Display Motion...

blurbusters.com

I'd recommend going to Blur Busters to understand motion picture response time (MPRT), the benefits of 1000Hz displays and the potential benefits of frame amplification. The TestUFO pattern is also worth a look.


Frame Rate Amplification Tech (FRAT) — More Frame Rate With Better Graphics

Tomorrow, 1000fps for free. Not your grandfather's Classic Interpolation. Oculus has Asynchronous Time Warp. Cambridge has Temporal Resolution Multiplexing. NVIDIA has Deep Learning Super Sampling. Have cake and eat it too with high-detail high-framerates!

GtG versus MPRT: Frequently Asked Questions About Pixel Response On Displays

GtG and MPRT are two different pixel response benchmarks for displays. We explain the difference.

Blur Busters TestUFO Motion Tests. Benchmark for monitors & displays.

Blur Busters UFO Motion Tests with ghosting test, 30fps vs 60fps vs 120hz vs 144hz vs 240hz, PWM test, motion blur test, judder test, benchmarks, and more.

The generated frame polls input from neighboring frames though, so it should have input from the latest actual rendered frame, no?


The camera has been panning left for eternity by x per real rendered frame. Frame 1 is the last frame where it pans left; after that the direction switches and the camera pans x to the right for frame 2. The change in panning should already affect generated frame 1.5, as it uses both frames 1 and 2 as input.
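A one-dimensional sketch of that panning reversal, assuming simple linear motion models. DLSS 3's actual generator is a trained network, and which frames it takes as input is exactly what the thread is debating; `x` and the helper names are hypothetical.

```python
def interpolate(p_prev, p_next, t=0.5):
    """Linear interpolation between two rendered positions (needs the next frame)."""
    return p_prev + (p_next - p_prev) * t


def extrapolate(p_older, p_prev, t=0.5):
    """Linear extrapolation past the latest rendered frame (needs only past frames)."""
    return p_prev + (p_prev - p_older) * t


x = 10.0  # hypothetical pan distance per rendered frame
# Camera position: panning left until frame 1, then right by x for frame 2.
pos = {0: 0.0, 1: -x, 2: 0.0}

print(interpolate(pos[1], pos[2]))  # -5.0: frame 1.5 already reflects the reversal
print(extrapolate(pos[0], pos[1]))  # -15.0: keeps panning left and overshoots
```

Interpolation sees the reversal only because frame 2 is one of its inputs, so the new input still reaches the screen through frame 2's simulation; extrapolation avoids waiting for frame 2 but guesses wrong at the turn.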


Yah, I don't get this. As far as I know a lot of games decouple input from rendering. Games like Overwatch have decoupled mouse input from rendering so you can aim between frames.

New Feature – High Precision Mouse Input (Gameplay Option)

Hi everyone! I’m Derek, a gameplay engineer on Overwatch and wanted to run through this new feature in version 1.42, and exactly what it does and how it works. First, a quick summary on how aiming and shooting happens in Overwatch. Overwatch simulates (or ticks) every 16 milliseconds, or at...

us.forums.blizzard.com


I really don't see how input would be affected by frame generation besides some additional display lag.
