seeing rtx2060 struggle with stable 60fps with dlss on ps5 performance is quite good in this game

The PS 5 does not render a fixed 2160p...it drops down to 1944p.

So apples and oranges...once again.

seeing rtx2060 struggle with stable 60fps with dlss on ps5 performance is quite good in this game

Keep in mind that DLSS has a cost, and that cost is fixed.

The fewer tensor cores a GPU has, the longer that fixed cost will be, so it doesn't really matter how little or how much is happening on screen; the scene has little influence over that portion. To meet 16.6ms, the rest of the frame needs to come in significantly under that to make up for the DLSS time.

If we assume DLSS has a fixed time of 5-6ms (it is likely less), the rest of the frame time needs to be around 9ms or below to make it.

I'm just not sure the 2060 is the card to do that; it's nearly like asking it to make 100+ fps, which is already tough for most cards in the 6TF range.
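As a rough check on that budget, here is a minimal Python sketch of the arithmetic. The 5-6ms DLSS cost is the assumption from the post, not a measurement, and the function names are made up for illustration. Plain subtraction leaves a bit more than 9ms, so treat the 9ms figure as the more conservative target.

```python
# Frame budget sketch: how much time is left for rendering once a
# fixed DLSS cost is subtracted from the target frame time.
# The DLSS costs used here are assumed values, not measurements.

def render_budget_ms(target_fps: float, dlss_cost_ms: float) -> float:
    """Time left for everything except DLSS, in milliseconds."""
    frame_ms = 1000.0 / target_fps
    return frame_ms - dlss_cost_ms


def equivalent_fps(budget_ms: float) -> float:
    """Frame rate the GPU would have to reach if DLSS were free."""
    return 1000.0 / budget_ms


for cost in (5.0, 6.0):  # assumed fixed DLSS cost in ms
    budget = render_budget_ms(60, cost)
    print(f"DLSS {cost:.1f} ms -> {budget:.2f} ms left "
          f"(~{equivalent_fps(budget):.0f} fps before DLSS)")
```

With a 6ms cost the remaining work has to run at roughly 94 fps, which is in the same ballpark as the "100+ fps" comparison.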

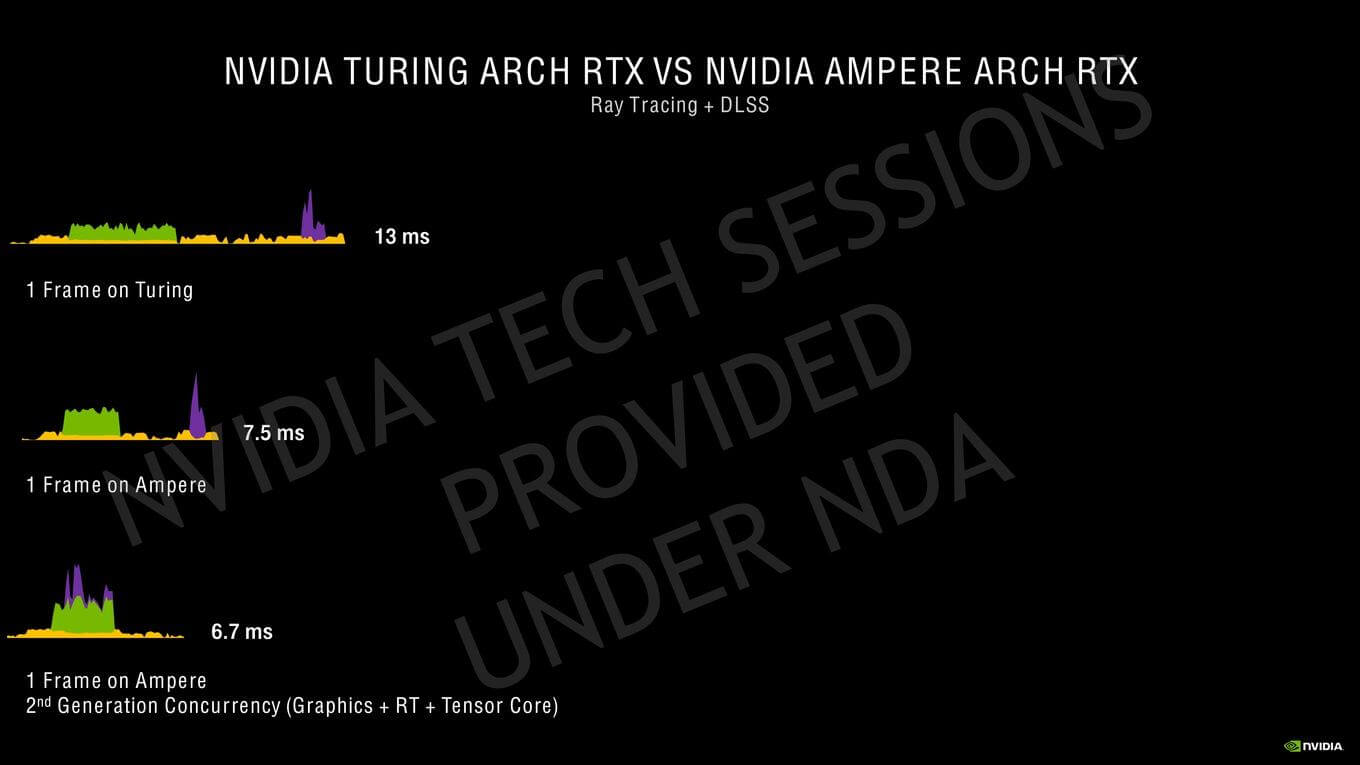

DLSS in its 2.0 iteration is a bit different to think about. When I say in the video that it is rendering internally at XXXX by XXXX resolution, I am simplifying a bit. Post processing like motion blur, depth of field, bloom, colour correction, etc. is actually all done at native resolution in DLSS 2.0, so it is more expensive by default than just rendering at the internal resolution. Then you have the DLSS run time on top, which differs between GPUs and also between Ampere and Turing (Ampere has a level of concurrency with its Tensor cores, I believe, that Turing does not have).

The PS 5 does not render a fixed 2160p...it drops down to 1944p.

So apples and oranges...once again.

I know, I wrote in an earlier post what resolutions VGTech found in quality mode.

Btw, Wolfenstein is the only game where this Ampere concurrency is actually in use afaik.

It's possible to see the DLSS processing cost by using the SDK version of nvngx_dlss.dll and enabling an on-screen indicator from the Windows registry. Looks like this:

View attachment 5317

Wolfenstein is the only game where I've seen this show 0.00ms.

Maybe the concurrency is difficult to implement or something, otherwise you'd think it would be in UE4 etc already.

It only works in combination with raytracing. Can you try Call of Duty Cold War? Performance impact is very small for DLSS.

It should work in combination with any rendering. But I assume the renderer must be crafted in a way that allows this, since it means that DLSS and everything after it must be done asynchronously with graphics.

Post processing like motion blur, depth of field, bloom, colour correction, etc. is all done at native resolution actually in DLSS 2.0

That's not always the case. It's just a recommendation from NVIDIA for DLSS, and there are many games which don't follow it: Control, Death Stranding, CP2077 and even Nioh. This usually appears either as aliased edges during motion blur (CP2077, Control) or as flickering DOF bokeh shapes (Death Stranding, Nioh). I wish at least the depth buffer were rendered at full resolution (it should be virtually free, since rasterization speed is 4x for depth-only anyway), so that devs could do depth-aware upsampling for effects such as MB, DOF, etc. This would eliminate the resolution loss from low-res post processing, but would probably require additional implementation effort from game devs (and we know they regularly forget even to set LOD bias levels). Luckily, this resolution loss due to low-res PP is nowhere near as severe as the fucked up jittering or resolve in GoW - https://forum.beyond3d.com/threads/god-of-war-ps4.58133/page-38#post-2193708

Maybe the concurrency is difficult to implement or something, otherwise you'd think it would be in UE4 etc already.

In order to implement this concurrency, additional profiling is required and inter-frame async execution is likely a requirement, so yes, it does make things harder.

Can you try Call of Duty Cold War?

Nope, don't happen to own that game.

Windows Registry Editor Version 5.00

[HKEY_LOCAL_MACHINE\SOFTWARE\NVIDIA Corporation\Global\NGXCore]

"FullPath"="C:\\Program Files\\NVIDIA Corporation\\NVIDIA NGX"

"ShowDlssIndicator"=dword:00000001

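For anyone who would rather generate that registry file than type it, here is a small Python sketch that builds the same text. The key path and both values are copied from the post above; the function name is made up for illustration, and whether the indicator actually appears still depends on having the SDK build of nvngx_dlss.dll, as described earlier in the thread. The sketch only builds the text and leaves saving it as a .reg file and importing it to you.

```python
# Build the text of a .reg file from a key path and a dict of values.
# Integers become dword entries, strings become quoted string entries
# with backslashes and quotes escaped the way .reg files expect.

def build_reg_text(key_path: str, values: dict) -> str:
    lines = ["Windows Registry Editor Version 5.00", "", f"[{key_path}]"]
    for name, value in values.items():
        if isinstance(value, int):
            lines.append(f'"{name}"=dword:{value:08x}')
        else:
            escaped = value.replace("\\", "\\\\").replace('"', '\\"')
            lines.append(f'"{name}"="{escaped}"')
    return "\r\n".join(lines) + "\r\n"


# Values taken from the post above.
reg_text = build_reg_text(
    r"HKEY_LOCAL_MACHINE\SOFTWARE\NVIDIA Corporation\Global\NGXCore",
    {
        "FullPath": r"C:\Program Files\NVIDIA Corporation\NVIDIA NGX",
        "ShowDlssIndicator": 1,
    },
)
print(reg_text)
```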
Any idea what happens when you use this OSD and Marvel's Avengers + DLSS? Does it show the internal DRS res constantly switching I wonder...

but I don't understand the claim about the PS5 punching above its weight

No one made that claim in this thread.

Nioh 2 isn't a good comparison point anyway because the game is rather badly optimized for NV h/w as it is and DLSS in this case is just a band aid slapped on top of the badly optimized code to make things somewhat better.

Any idea what happens when you use this OSD and Marvels Avengers + DLSS? Does it show the internal DRS res constantly switching I wonder...

Don't have that game either. I would assume the render resolution indicator updates in real time as the render resolution changes.

The new UE 4.26 editor DLSS plugin has a slider which allows you to use any render resolution between 50% and 66%.
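To put that 50%-66% range into pixels, here is a quick Python sketch. It assumes the percentage applies to each axis (as screen percentage normally does in UE4) and that the result is simply rounded; the plugin's actual rounding may differ, and the function names are hypothetical.

```python
# Internal render resolution for a given output resolution and
# per-axis render percentage. The per-axis scaling and the rounding
# are assumptions, not taken from the plugin itself.

def internal_resolution(out_w: int, out_h: int, percent: float) -> tuple:
    if not 50 <= percent <= 66:
        raise ValueError("slider range mentioned in the post is 50-66%")
    scale = percent / 100.0
    return round(out_w * scale), round(out_h * scale)


def pixel_fraction(percent: float) -> float:
    """Share of output pixels actually rendered before upscaling."""
    return (percent / 100.0) ** 2


for pct in (50, 58, 66):
    w, h = internal_resolution(3840, 2160, pct)
    print(f"{pct}% -> {w}x{h} ({pixel_fraction(pct):.0%} of the output pixels)")
```

For a 3840x2160 output, 50% comes out at 1920x1080, a quarter of the output pixels.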