Deleted member 2197

Guest

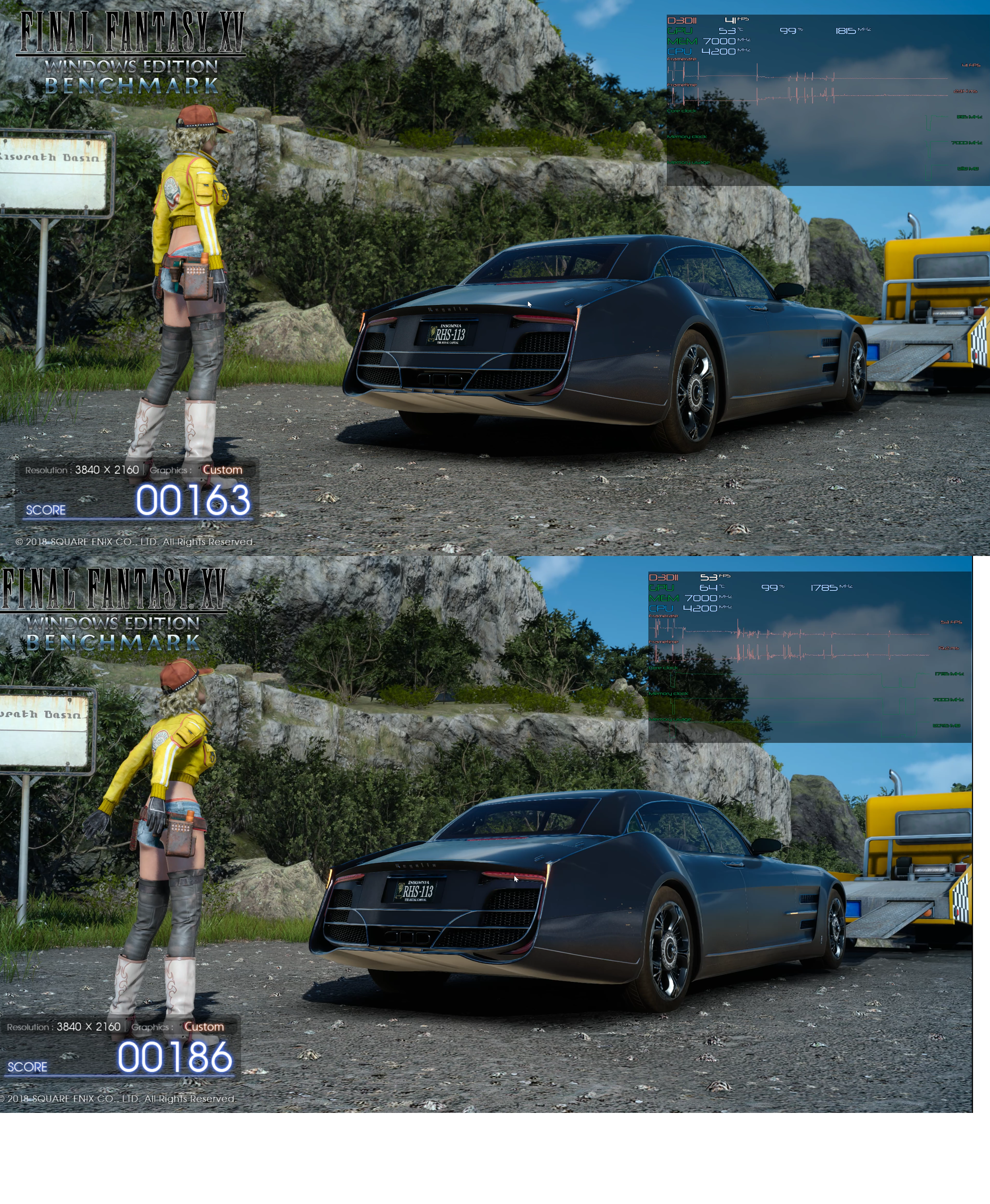

Here's an image posted by Hilbert @ Guru3D, who asks people to compare.

Obviously an image without motion...

Yes, apparently one of the few artifacts. In the TAA image there is more blurring in the bushes on the cliff, the lettering on the sign, and the sign frame, and the wheel rims look less defined.

Edit: Hilbert's point is no AA technique is perfect. There are trade-offs and each person has to decide which is best based on their gaming needs.

So DLSS handles transparency much better, is overall sharper, and avoids ghosting artifacts. It's also far better than checkerboarding.

Note: This assumes that the public version and the DLSS version of the FFXV benchmark are supposed to look the same.

In the public version the bushes, rocks, etc. are blurred even without AA, which suggests that they're supposed to be blurred (DoF) and that DLSS either completely breaks it or guesses the amount of blurring completely wrong.

--

Also, Digital Foundry's DLSS video is up.

But it's also sometimes sharper when it shouldn't be sharper (still assuming they didn't completely change the DoF in the DLSS build of the benchmark, since it looks the same with TAA on the public and DLSS builds).

Other resolutions could be added later, but the AI has to be retrained for that specific resolution.

Are you 100% sure about this?

It makes it a bit useless to anyone without a 4K monitor/TV. Unless it works with downsampling, I guess.

But would, for example, 1440p DLSS'd to 4K and then downsampled to 1080p or 1440p even be better than native 1440p, or 1440p scaled to 1080p? Especially considering the chance of artifacts and whatnot.
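
For a sense of scale, here is a rough pixel-count comparison of the resolutions being discussed. It is plain arithmetic on the standard 16:9 resolutions and nothing more: the helper names are made up for illustration, and it deliberately ignores the cost of the DLSS pass and of the downsampling step, neither of which is known from this thread.

```python
# Standard 16:9 resolutions referred to in the thread.
RESOLUTIONS = {
    "1080p": (1920, 1080),
    "1440p": (2560, 1440),
    "4K": (3840, 2160),
}


def pixels(name: str) -> int:
    """Total pixels per frame at the named resolution."""
    width, height = RESOLUTIONS[name]
    return width * height


def ratio(a: str, b: str) -> float:
    """How many times more pixels resolution `a` has than resolution `b`."""
    return pixels(a) / pixels(b)


if __name__ == "__main__":
    for name in RESOLUTIONS:
        print(f"{name}: {pixels(name):,} pixels")
    # Native 4K shades 2.25x the pixels of 1440p ...
    print("4K vs 1440p:", ratio("4K", "1440p"))
    # ... and 4x the pixels of 1080p.
    print("4K vs 1080p:", ratio("4K", "1080p"))
```

So a 1440p render that is reconstructed to 4K shades well under half the pixels of a true 4K supersample; whether the end result looks better than native 1440p is a separate question that pixel counts alone can't answer.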

At the very least, 1440p DLSS'd to 4K then downsampled to 1080p/1440p should behave as a form of antialiasing that's noticeably cheaper than regular supersampling.

I see it needs training on a game-by-game basis.

How likely is it for Nvidia to put the training data behind the GeForce Experience subscription, like they already do with the regular automatic game settings profiles, video recording, game streaming, Game Ready drivers, etc.?

They actually backed down on the Game Ready drivers part; they're available just as quickly and as often from their website.

The quickest method to distribute any training data or updates would be to store that data on the cloud. I imagine having a GeForce Experience ID would facilitate access to that data.

DLSS is just another reconstruction technique, like checkerboarding. Insomniac already has a stellar reconstruction technique, though we don't know the specifics of it, running on a 1.8 TF PS4 in compute, not even relying on the PS4 Pro's ID buffer, which can improve things. DLSS isn't bringing anything new to the possibilities on offer for lower-than-native ray tracing.

DLSS is a byproduct of the AI/ML-based OptiX denoising for offline rendering. Nvidia wants to "pimp" its Tensor core chops to differentiate its Turing GPUs, and DLSS is one of the many ways to hype the hell out of its overpriced consumer RTX boards and justify the fact that they are not that much faster than the Pascal GPUs at conventional rasterization (but this is in part because they are still stuck on the 12nm node).

We have yet to see DLSS used in real gameplay situations, because all they have shown publicly so far are on-rails benchmarks (which are the best-case scenarios for machine learning), and even in those benchmarks it exhibits heavy blurring in some non-static situations... As shifty said, there are already really great reconstruction techniques right now that work on any low/mid/high-end GPU.

So basically Nvidia are getting all the plaudits and slaps on the back for all these great new things that have been in real use on consoles/PC for a while, then?