currently you have to use an IOMMU that sits on the motherboard (Intel calls it VT-d) and pass the whole graphics card through to a VM. the hypervisor, such as Xen or VMware (the ESXi variant), has to support it. the same tech lets you give a VM a real network card, storage controller or USB controller.

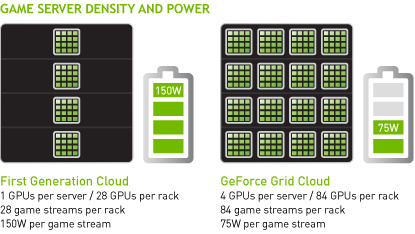

but doing that, it's one GPU per VM and one VM per GPU, and implementation limitations can kick in. it's tricky because of the special nature of the VGA BIOS, so how many cards will this work with? that depends on the hypervisor, the graphics card and the motherboard.
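to make the "one GPU per VM, one VM per GPU" constraint concrete, here's a toy model of a passthrough assignment table. the `PassthroughTable` class, the PCI addresses and the VM names are all made up for illustration; it's not how Xen or ESXi actually track assignments internally.

```python
# Toy model of PCI passthrough as described above: each physical GPU can be
# handed to at most one VM, and each VM gets at most one GPU.
# PassthroughTable, the PCI addresses and VM names are hypothetical.

class PassthroughTable:
    def __init__(self):
        self.gpu_to_vm = {}  # physical GPU (PCI address) -> VM name
        self.vm_to_gpu = {}  # VM name -> physical GPU (PCI address)

    def assign(self, gpu, vm):
        # refuse to hand a card that is already passed through to a second VM
        if gpu in self.gpu_to_vm:
            raise ValueError(f"{gpu} already passed through to {self.gpu_to_vm[gpu]}")
        # refuse to give a VM a second card
        if vm in self.vm_to_gpu:
            raise ValueError(f"{vm} already owns {self.vm_to_gpu[vm]}")
        self.gpu_to_vm[gpu] = vm
        self.vm_to_gpu[vm] = gpu


table = PassthroughTable()
table.assign("0000:01:00.0", "vm-a")
table.assign("0000:02:00.0", "vm-b")
try:
    table.assign("0000:01:00.0", "vm-c")  # same card, second VM: refused
except ValueError as e:
    print(e)
```

so with N cards you get at most N VMs with their own GPU, no matter how many VMs the box could otherwise run.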

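the IOMMU translates addresses: the VM programs the card with addresses from its own address space, and the IOMMU remaps the card's memory accesses to the real physical addresses on the host, so the card, driven by the VM, touches only that VM's memory.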
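a rough sketch of that translation step, assuming 4 KiB pages and a single flat lookup table per device (real IOMMUs use multi-level page tables, and the `ToyIommu` class and all addresses here are hypothetical):

```python
# Toy model of IOMMU DMA remapping: the VM programs the card with addresses
# from its own (guest-physical) address space, and the IOMMU looks each one up
# in a per-device table to find the real host-physical address.
# Page size, ToyIommu and all addresses below are made up for illustration.

PAGE_SIZE = 4096

class ToyIommu:
    def __init__(self):
        self.page_table = {}  # guest page frame number -> host page frame number

    def map_page(self, guest_pfn, host_pfn):
        self.page_table[guest_pfn] = host_pfn

    def translate(self, guest_addr):
        pfn, offset = divmod(guest_addr, PAGE_SIZE)
        if pfn not in self.page_table:
            # a real IOMMU would block the access and report a fault
            raise PermissionError(f"DMA fault at guest address {guest_addr:#x}")
        return self.page_table[pfn] * PAGE_SIZE + offset


iommu = ToyIommu()
iommu.map_page(0x10, 0x8A3)          # guest page 0x10 lives in host page 0x8a3
print(hex(iommu.translate(0x10123)))  # -> 0x8a3123
```

the offset inside the page is kept, only the page number gets swapped; an address the hypervisor never mapped for that device simply faults instead of reaching someone else's memory.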

also, if you want to beam the framebuffer over the network, you'll have to do that yourself; it's not covered by this technique.

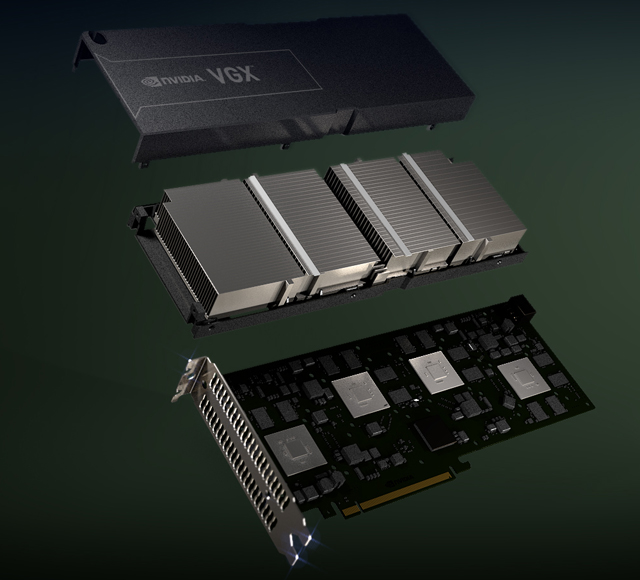

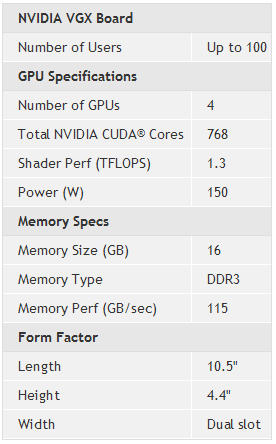

here (and I wondered before whether I understood this correctly from the presentation), an MMU sits in the GPU and communicates with the hypervisor, so the translation from virtual to real addressing is done there. so apparently you can use as many physical GPUs as you want, and on top of that as many virtual (i.e. as seen by the VM) GPUs as you want.
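here's how I picture it, as a toy model only (an assumption, not the vendor's actual design): one physical GPU keeps a separate translation context per virtual GPU, so several VMs share the card while each sees only its own slice of VRAM. `PhysicalGpu`, `create_vgpu` and the sizes are hypothetical.

```python
# Toy model of a GPU-side MMU with one translation context per virtual GPU.
# Several VMs share one physical card; each vGPU only reaches its own pages.
# In this model the number of vGPUs is bounded by the card's memory, not by
# the number of physical cards. All names and sizes are hypothetical.

PAGE_SIZE = 4096

class PhysicalGpu:
    def __init__(self, total_pages):
        self.free_pages = list(range(total_pages))  # physical VRAM page numbers
        self.contexts = {}  # vGPU id -> {virtual page: physical page}

    def create_vgpu(self, vgpu_id, pages):
        if pages > len(self.free_pages):
            raise MemoryError("not enough VRAM left for another vGPU")
        # give the new vGPU its own table, backed by pages nobody else holds
        self.contexts[vgpu_id] = {vpage: self.free_pages.pop(0) for vpage in range(pages)}

    def translate(self, vgpu_id, vaddr):
        vpage, offset = divmod(vaddr, PAGE_SIZE)
        table = self.contexts[vgpu_id]
        if vpage not in table:
            raise PermissionError(f"{vgpu_id}: access outside its own memory")
        return table[vpage] * PAGE_SIZE + offset


gpu = PhysicalGpu(total_pages=8)
gpu.create_vgpu("vm-a", pages=4)
gpu.create_vgpu("vm-b", pages=4)
# both VMs use virtual address 0, but land on different physical pages
print(gpu.translate("vm-a", 0), gpu.translate("vm-b", 0))  # -> 0 16384
```

compared to passthrough, the card isn't handed to one VM as a whole; each VM gets a context on it, and the hypervisor only has to set up the tables.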

it's aimed at the VDI scenario, i.e. one VM per user. I find this a needless waste (unless it's about being allowed to use Windows 7 Professional or Enterprise, which saves a lot on licensing). why not have 20 users per VM? but maybe you can have multiple virtual GPUs per VM, I don't know.

[nice that you find something like Xen to be "big iron"; I know of a nice installation of it on a Pentium E2200 with 2 GB of RAM, running 5 VMs with almost no downtime for a few years.]