Deleted member 2197

Guest

Yes. From the post, it seems to be currently used only for the ray tracing calculation portion of the benchmark.

Does this benchmark even use RT cores?

Looking at the OctaneBench results page, the 1080 Ti is tied with the 2080 and the Titan V is easily beating the Titan RTX.

https://render.otoy.com/octanebench/results.php?v=4.00&sort_by=avg&filter=&singleGPU=1

I'm pretty sure the official benchmark doesn't support RTX.

We are happy to announce the release of a new preview version of OctaneBench with experimental support to leverage ray tracing acceleration hardware using the NVIDIA RTX platform introduced by the new NVIDIA Turing architecture.

That makes more sense as far as the RT acceleration effect goes. What confused me is that support for RT acceleration was included in April for OctaneRender 2020.

In contrast to the previous experimental builds we have decided to switch our ray tracing backend to make use of OptiX due to some issues affecting VulkanRT that still need to be solved by third parties. This change increases overall render performance for most scenes but specially for complex ones and reduces VRAM usage to almost half to what it was required before.

We are pleased to release the first build of OctaneStandalone 2019 with experimental support for ray tracing acceleration hardware using the NVIDIA RTX platform introduced by the new NVIDIA Turing architecture.

This release is a follow up to the earlier RTX benchmark which you can also download here.

This standalone version comes with a built-in script that allows you to measure the RTX on/off speedup for any render target that you can load in this version of the Octane V7 standalone engine with Vulkan and RTX backend that can be swapped in for CUDA in the device properties. In order to do this, select any render target and go to Script > RTX performance test.

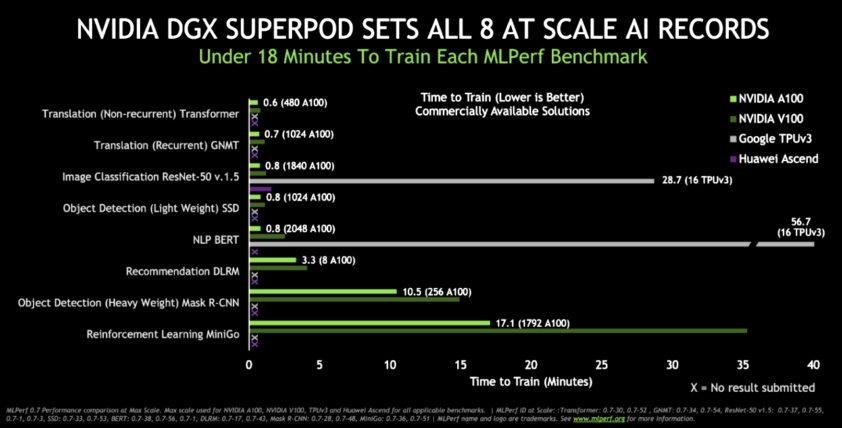

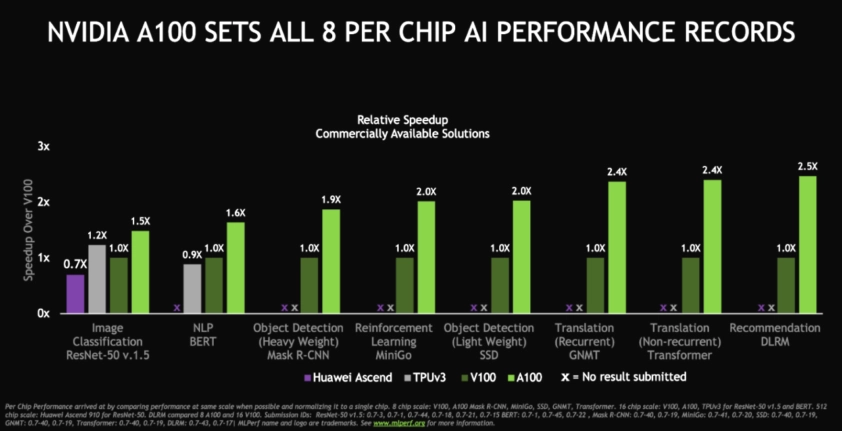

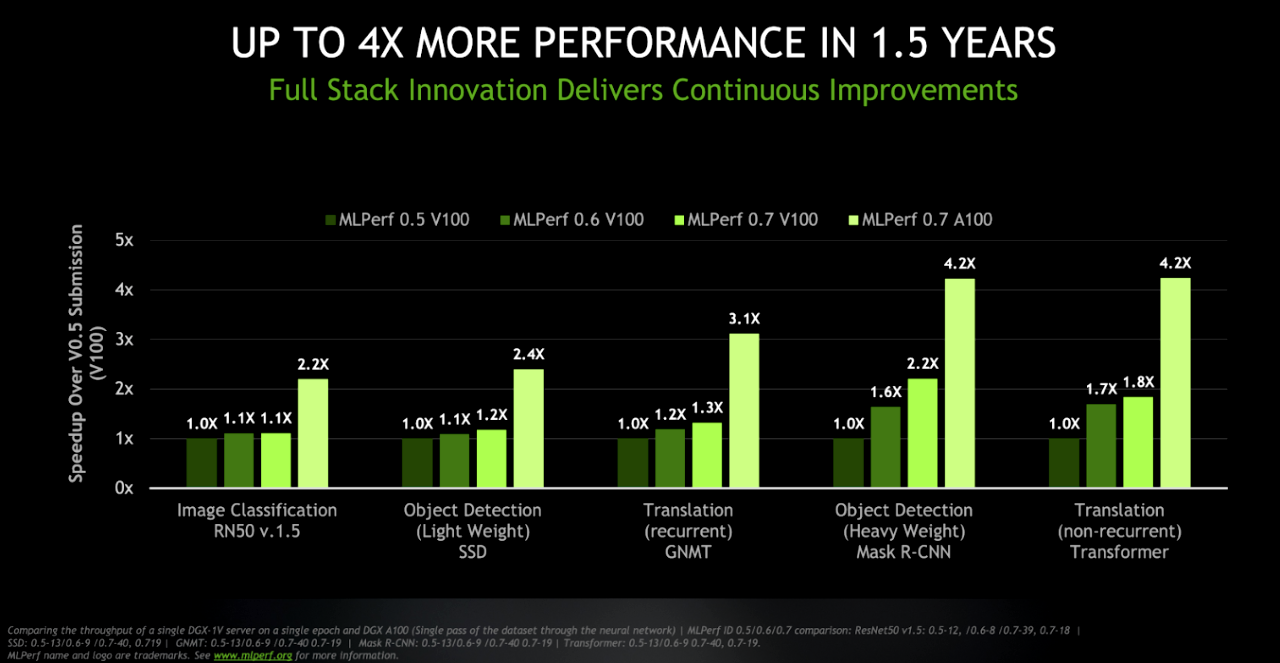

NVIDIA delivers the world’s fastest AI training performance among commercially available products, according to MLPerf benchmarks released today.

The A100 Tensor Core GPU demonstrated the fastest performance per accelerator on all eight MLPerf benchmarks. For overall fastest time to solution at scale, the DGX SuperPOD system, a massive cluster of DGX A100 systems connected with HDR InfiniBand, also set eight new performance milestones. The real winners are customers applying this performance today to transform their businesses faster and more cost effectively with AI.

https://syncedreview.com/2020/07/29...results-released-google-nvidia-lead-the-race/

Google broke performance records in DLRM, Transformer, BERT, SSD, ResNet-50, and Mask R-CNN using its new ML supercomputer and latest Tensor Processing Unit (TPU) chip. Google disclosed in a blog post that the supercomputer includes 4096 TPU v3 chips and hundreds of CPU host machines and delivers over 430 PFLOPs of peak performance.

...

While Google ramped up their cloud TPUs and the new supercomputer to deliver faster-than-ever training speeds, NVIDIA’s new A100 Tensor Core GPU also showcased its capabilities with the fastest performance per accelerator on all eight MLPerf benchmarks.
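As a rough sanity check on the TPU figures quoted above (4096 TPU v3 chips, over 430 PFLOPs of peak performance), the average peak per chip can be worked out directly. This is only a back-of-the-envelope sketch: the function name is made up for illustration, and since the article says "over 430", the result is a lower bound.

```python
# Figures taken from the article quoted above.
TPU_V3_CHIPS = 4096
PEAK_PFLOPS = 430  # quoted as "over 430 PFLOPs", so treat as a lower bound


def peak_tflops_per_chip(total_pflops: float, chips: int) -> float:
    """Average peak throughput per chip in TFLOPs (1 PFLOP = 1000 TFLOPs)."""
    return total_pflops * 1000 / chips


per_chip = peak_tflops_per_chip(PEAK_PFLOPS, TPU_V3_CHIPS)
print(f"{per_chip:.1f} TFLOPs per chip (lower bound)")
```

That works out to roughly 105 TFLOPs of peak throughput per chip, which says nothing about sustained throughput on the MLPerf workloads themselves.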

https://www.enterpriseai.news/2020/07/29/nvidia-dominates-latest-mlperf-training-benchmark-results/

Freund seemed less impressed with Google's TPU 4.0 showing – “it is only marginally better on 3 of the eight benchmarks. And it won’t be available for some time yet” – but Google struck a distinctly upbeat tone.

“Google’s fourth-generation TPU ASIC offers more than double the matrix multiplication TFLOPs of TPU v3, a significant boost in memory bandwidth, and advances in interconnect technology. Google’s TPU v4 MLPerf submissions take advantage of these new hardware features with complementary compiler and modeling advances. The results demonstrate an average improvement of 2.7 times over TPU v3 performance at a similar scale in the last MLPerf Training competition.

21 days from now is August 31st. NVIDIA revealed the original GeForce on August 31st, 1999, 21 years ago (though the cards actually went on sale over a month later, on October 11th).

Signs of life...

And 3 more YouTube videos.

One billion % performance increase! Perfect for a WCCFTech article!

Drawing comparisons to the original GeForce implies significant performance or feature advancements.

Anandtech said: But held back by a lack of support for its true potential and maturing drivers may taint the introduction of this part.

Hard to imagine that nowadays people complain about the comparatively smaller performance jumps from gen to gen.

Look, don't get me started about the GeForce. I remember getting that, and then the DDR versions came out and destroyed that card. I was a very, very sad teenager who spent all summer cutting grass to get that card... also, damn, I'm old.

Or it could be an appeal for patience while half the chip's hardware remains idle. Just like the original GeForce.

https://www.anandtech.com/show/391

I remember that card being such a disappointment. Nice demos that came with it, but uninspired in games at the time with T&L largely being unsupported. It didn't last long in my machine.

Regards,

SB

Hard to imagine that nowadays people complain about the comparatively smaller performance jumps from gen to gen.

With today's transistor density, you could make (per CEO math) a whole GF256 (or R100) with a die size of less than 1 mm².
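For anyone who wants to check the "CEO math", here is a quick sketch. The inputs are assumptions, not numbers from this thread: NVIDIA's stated figures for the A100 die (about 54.2 billion transistors on 826 mm²) stand in for "today's density", and the GF256 is taken at about 23 million transistors (commonly cited figures range from roughly 17 to 23 million).

```python
# Assumed inputs (not from the thread itself):
A100_TRANSISTORS = 54.2e9   # NVIDIA's stated transistor count for the A100 die
A100_DIE_MM2 = 826.0        # NVIDIA's stated die area in mm^2
GF256_TRANSISTORS = 23e6    # assumption: upper end of commonly cited figures


def area_at_density(transistors: float, density_per_mm2: float) -> float:
    """Die area in mm^2 needed for a transistor count at a given average density."""
    return transistors / density_per_mm2


# Average density over the whole die, in transistors per mm^2.
density = A100_TRANSISTORS / A100_DIE_MM2
gf256_area = area_at_density(GF256_TRANSISTORS, density)

print(f"Average density: {density / 1e6:.1f} million transistors per mm^2")
print(f"GF256 at that density: {gf256_area:.2f} mm^2")
```

With these inputs the result comes out well under 1 mm². It is a whole-die average, so it ignores that logic, SRAM, and I/O scale very differently, which is presumably the point of calling it CEO math.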