Fact 1 was ever in contention?

Probably depends on what you consider contention. There hasn't really been any mainstream reporting that discusses the specifics of the business side of graphics cards and their pricing over the last 2 years. So I would say plenty of people with at least a cursory interest in the industry are likely not fully aware of the intricacies. If you go on broader discussion forums, for instance, you can see people arguing from various impressions of what caused the high prices and who the money went to.

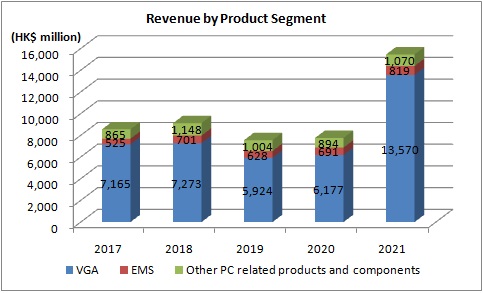

The likely reality, though, is that all parties, including the manufacturers' suppliers, saw increased revenue and profits. It also shouldn't be overlooked that graphics card shipments actually increased in a supply-constrained environment. The higher prices likely helped Nvidia/AIBs get some priority on supply and logistics in such an environment. The consoles, by contrast, had to operate at a tighter fixed price: not only were they unable to increase shipments in the face of higher demand, they had to continually revise shipment numbers downward.

Well, listening to some rumors, you would believe that AIBs are struggling with so much stock on hand that they're asking to delay the Ada launch, when in fact it's just going back to the normal pre-pandemic world with 3-4 months of inventory in the channel. Inventory that AIBs ordered when everything was immediately flying off the shelves...

I feel a lot of the public sentiment regarding inventories likely stems from anecdotal impressions after acclimating to the situation over the last two months. The fact that graphics cards are sitting on so-called "shelves" (real or virtual) at MSRP with ample inventory feels to many people like there is essentially overstock. (MSRP is a bit of a loose term here; some cards, e.g. the 3060 Ti, are not sitting on shelves at MSRP.) However, that was the normal situation prior to 2020: you could basically always walk in or click in and buy a graphics card. The public view is too surface-level to really know the actual inventory situation.

Not specific to graphics cards, though, the idea does seem to be gaining general traction that the broader consumer tech industry is facing falling demand tied to macro conditions, leading manufacturers and suppliers to start cutting orders and expansion plans.

They're Sapphire's contract manufacturer. They're also (or at least have been in recent history) the contract manufacturer for a crapload of other big companies, starting with AMD.

That's what I thought. I'm guessing it's included in their non-branded revenue numbers.

Another interesting data point would be TUL Corporation (PowerColor), which I believe is also graphics focused. They only seem to publish detailed breakdowns in their Chinese-language financial reports, though. In terms of general company revenue and profit:

https://finance.yahoo.com/quote/6150.TWO/financials?p=6150.TWO

(all numbers in thousands)

Revenue:

2020 - 3,776,428

2021 - 8,790,649

Gross profit:

2020 - 270,852

2021 - 1,866,279
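For a rough sense of scale, here is a minimal Python sketch that works out the year-over-year change and the gross margin from the four figures quoted above. The variable and function names are just illustrative, and it only uses the numbers as listed (in thousands), nothing from the detailed reports.

```python
# TUL Corporation figures as quoted above (all numbers in thousands).
revenue = {2020: 3_776_428, 2021: 8_790_649}
gross_profit = {2020: 270_852, 2021: 1_866_279}


def pct_growth(old, new):
    """Percentage change from old to new."""
    return (new - old) / old * 100


def gross_margin_pct(profit, sales):
    """Gross profit as a percentage of revenue."""
    return profit / sales * 100


revenue_growth = pct_growth(revenue[2020], revenue[2021])
profit_growth = pct_growth(gross_profit[2020], gross_profit[2021])
margin_2020 = gross_margin_pct(gross_profit[2020], revenue[2020])
margin_2021 = gross_margin_pct(gross_profit[2021], revenue[2021])

print(f"Revenue growth 2020 -> 2021:      {revenue_growth:.1f}%")
print(f"Gross profit growth 2020 -> 2021: {profit_growth:.1f}%")
print(f"Gross margin 2020: {margin_2020:.1f}%")
print(f"Gross margin 2021: {margin_2021:.1f}%")
```

By this arithmetic revenue a bit more than doubled (roughly +133%), gross profit grew to nearly seven times its 2020 level, and gross margin went from about 7% to about 21%.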

Asus, Gigabyte, and MSI also had very good 2021s, but they are much more diversified companies, so it would be harder to separate out the graphics component (which I couldn't find them reporting specifically). They did not have the same relative growth in revenue and profits as PC Partner or TUL Corporation, which is understandable given that graphics is likely a much smaller part of their business.