> Honestly, with the size and power of GPUs and CPUs, I think the PC case and motherboard need a redesign for better thermal performance.

BTX has had you covered since 2005. Those hot NetBursts...

- Status

- Not open for further replies.

Partially because Android is still playing catch-up with A14. Apple has zero incentive to use the latest and greatest on their normal lineup. And a yearly release cycle means it won’t take too long to update either.

Not on the GPU side though, and it's not really a massive difference CPU-wise either. Defending Apple charging 1600 USD for a base model Pro Max but bashing NV for the same thing is just weird. Apple literally increased the price by over 500 USD for the Pro models.

Everyone seems to have issues with the 4080 12

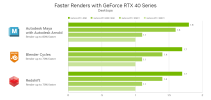

Nvidia's Justin Walker, Senior Director of Product Management, said, "The 4080 12GB is a really high performance GPU. It delivers performance considerably faster than a 3080 12GB... it's faster than a 3090 Ti, and we really think it's deserving of an 80-class product."

Putting aside the fact that the 4080(70) is almost certainly not faster than the 3090 Ti in the overwhelming majority of games, x070s have always been around the same performance as the previous generation's highest-end GPU. But they just had to call this one a 4080 because... reasons.

DegustatoR

Legend

> Putting aside the fact that the 4080(70) is almost certainly not faster than the 3090 Ti in the overwhelming majority of games, x070s have always been around the same performance as the previous generation's highest-end GPU. But they just had to call this one a 4080 because... reasons.

Because it's $900.

> Because it's $900.

Yeah, that's pretty much it.

You said that there's an extension in the NVIDIA API developers can use to optimize Shader Execution Reordering for their games. Does that mean SER will need to be optimized to give any performance advantage, or is there still an algorithm to slightly improve shader execution reordering automatically as well?

SER must be enabled specifically by the developer. These changes are usually quite modest, sometimes just a few lines of code. We expect this feature to be easily adopted.

NVIDIA Ada Lovelace Follow-Up Q&A - DLSS 3, SER, OMM, DMM and More

We followed up with NVIDIA for a brief Q&A on the newly announced DLSS 3, SER, OMM, and DMM features available with the new RTX 4000 GPUs.

> Not on the GPU side though, and it's not really a massive difference CPU-wise either. Defending Apple charging 1600 USD for a base model Pro Max but bashing NV for the same thing is just weird. Apple literally increased the price by over 500 USD for the Pro models.

Actually the GPU is the biggest difference (don’t look at synthetics, where everyone cheats by allowing different power states, but at the games shipping on Android vs iOS). Apple kept the same MSRP in the US, but European prices are a bit stupid admittedly. That’s one thing Nvidia will never get right: no matter how much they want to be Apple, they keep changing the price points for the same tier of GPUs, and PC gamers are rightfully pissed about it.

To the end consumer it doesn’t matter that TSMC 4N is more expensive to produce than Samsung 8N, but that the price of the xx80 GPU doubled. Of course the volume Nvidia is pushing is crickets compared to Apple so maybe they get worse deals, but again that doesn’t matter to the end consumer.

Henry swagger

Newcomer

> Actually the GPU is the biggest difference (don’t look at synthetics but at the games shipping on Android vs iOS). Apple kept the same MSRP in the US, but European prices are a bit stupid admittedly. That’s one thing Nvidia will never get right: no matter how much they want to be Apple, they keep changing the price points for the same tier of GPUs, and PC gamers are rightfully pissed about it.
>
> To the end consumer it doesn’t matter that TSMC 4N is more expensive to produce than Samsung 8N, but that the price of the xx80 GPU doubled. Of course the volume Nvidia is pushing is crickets compared to Apple so maybe they get worse deals, but again that doesn’t matter to the end consumer.

Plus Apple has millions of loyal fans who buy Apple at whatever price. Nvidia is not Apple. Nvidia's margins last quarter were below 50%, and they'll be worse with this grotesque pricing.

> Plus Apple has millions of loyal fans who buy Apple at whatever price. Nvidia is not Apple. Nvidia's margins last quarter were below 50%, and they'll be worse with this grotesque pricing.

Nvidia is no Apple, but they have their share of loyal fans, to say the least.

> Unless the fewer square millimeters in 4N cost more than the larger number of square millimeters in 8N. We need to reset our mental calibration of Moore’s Law.

There's the perf increase as well, which you (and @DegustatoR) forgot to factor in. The quote went on with "...and will use the shrink to increase clock speeds by ~50-60% and increase performance that way."

So you cannot just compare mm² to mm², but rather SKU-mm² vs. 1- or even 2-higher-SKU-mm².
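To put the mm² argument into numbers, here is a rough sketch of the arithmetic. Everything in it is an assumption for illustration: the wafer costs are made-up relative units (not real 4N or 8N prices), the die sizes are placeholders, and the die count ignores edge loss and yield. It only shows how a die half the size can still cost more if the wafer is expensive enough, and how much extra performance it then needs to break even on perf per cost.

```python
import math

# All numbers are hypothetical placeholders, not real foundry prices.
WAFER_DIAMETER_MM = 300.0


def cost_per_die(wafer_cost: float, die_area_mm2: float) -> float:
    """Crude cost per die: wafer cost divided by whole dies per wafer.

    Ignores edge loss, scribe lines and yield.
    """
    wafer_area = math.pi * (WAFER_DIAMETER_MM / 2) ** 2
    dies_per_wafer = int(wafer_area // die_area_mm2)
    return wafer_cost / dies_per_wafer


def perf_per_cost(relative_perf: float, wafer_cost: float, die_area_mm2: float) -> float:
    """Relative performance divided by the crude die cost."""
    return relative_perf / cost_per_die(wafer_cost, die_area_mm2)


# Big die on the cheaper old node vs. half-size die on a 2.5x pricier wafer.
old_die = cost_per_die(wafer_cost=1.0, die_area_mm2=600.0)
new_die = cost_per_die(wafer_cost=2.5, die_area_mm2=300.0)

# Performance uplift the small die needs to match the big die's perf per cost.
break_even_uplift = new_die / old_die
print(f"small new-node die costs {break_even_uplift:.2f}x the big old-node die")
```

With these placeholder inputs the smaller die is the more expensive one, so the clock speed gain has to cover that gap before the shrink pays off.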

> I don't understand your chain of logic. I said costs don't scale at <1x any more (when they used to scale at 0.5x every 2 years), and you conclude that that means costs are scaling at 10x every 2 years? It's not *that* bad! Otherwise the entire industry would have shut down.
>
> Take a look at this chart. A truckload of caveats apply -- it's a rough academic projection, actual costs are different from foundry-to-foundry and depend on vendor-specific volume contracts, which are closely guarded. Also the costs change as a tech node matures and yields improve. But it shows the general trend.
>
> Source: https://www.eetimes.com/fd-soi-benefits-rise-at-14nm/
>
> You can see this has been happening for a while, and if you ignore instantaneous noise from demand spikes you can see how this has been reflected in ASPs over the past few generations that have scaled faster than inflation.

That's interesting. In the 65nm generation we had large-die high-end cards for 200 Euros/Dollars: the GTX 280 at 300 and the GTX 260 as cheaper harvest parts around the 200 mark. According to this chart and the mentioned correlation, that should not have been possible. Yet it was possible. And why? Because of competition and the fight for market share. That's the thing we're missing: roughly equal performance from at least two GPU vendors with no blatant weaknesses in certain areas that the opposing marketing team can capitalize on.

That’s a narrow view though. Lots of operating costs have gone up since those days, not just chip manufacturing. You can argue that profit margins have also increased in that time which would be an interesting debate. With greater competition Nvidia would most likely never have seen the kind of margins it has enjoyed for years.

DegustatoR

Legend

> There's the perf increase as well, which you (and @DegustatoR) forgot to factor in. The quote went on with "...and will use the shrink to increase clock speeds by ~50-60% and increase performance that way."
>
> So you cannot just compare mm² to mm², but rather SKU-mm² vs. 1- or even 2-higher-SKU-mm².

Well, sure, but will this performance increase be enough for such chips to get higher perf/cost than bigger chips made on 8N? Especially considering that you'd need to amortize the costs of making these chips from scratch on the TSMC process.

I find it odd that the 4080 16GB is using a cut-down AD103. Considering the drop from AD102 and the crazy price, you'd expect it to at least use the full die. Maybe they are keeping it for a 4080 Super next year.

Also I'm not sure where the eventual 4080 ti would fit in the current lineup, the 4090 is heavily cut down already.

DegustatoR

Legend

> I find it odd that the 4080 16GB is using a cut-down AD103. Considering the drop from AD102 and the crazy price, you'd expect it to at least use the full die. Maybe they are keeping it for a 4080 Super next year.
>
> Also I'm not sure where the eventual 4080 ti would fit in the current lineup, the 4090 is heavily cut down already.

RTX L5000 seems a more likely candidate.

Nvidia’s RTX 4090 Launch: A Strong Ray-Tracing Focus

Recently, Nvidia announced their RTX 4000 series at GTC 2022. At a high level, Nvidia took advantage of a process node shrink to evolve their architecture while also scaling it up and clocking it m…

chipsandcheese.com

> I don't see the "strong Ray-Tracing Focus" above the usual improvement. With "SER", Nvidia updated their shader execution model, so this is bigger than any other change.

But even that is up to developers to implement; it's not automatic, like at least Intel is apparently doing.

> That’s a narrow view though. Lots of operating costs have gone up since those days, not just chip manufacturing. You can argue that profit margins have also increased in that time which would be an interesting debate. With greater competition Nvidia would most likely never have seen the kind of margins it has enjoyed for years.

Yeah, exactly. That is one additional aspect to the one-dimensional chart with Dollars per Gate, right?
