
MS leak illustrates new console development cycle

- Thread starter Shifty Geezer

- Start date

Yes, MS has been doing that for home consoles. But I think a Zen 6 processor on a new process node would be more than up to the task of improving previous-generation games that are CPU limited. The GPU will likely be the driving force behind better versions of Series games, and that would mostly be due to (hopefully) much better raytracing performance. A handheld can get away with BC performance that's sufficient for play as good as last gen home consoles, especially if it releases a bit before next gen.

So far MS has been the one to drive improved versions of last gen games with no developer input, using lots of clever interceptions of API calls from precompiled binaries and somehow also separating logic and rendering post release. It's nice, but it's not been a game changer when their two most successful competitors haven't given a shit.

The PS5 Pro rumors paint it as using a 3.8 GHz Zen 2, right? I wouldn't bet on next gen home consoles being able to reliably run a single thread twice as fast as this gen either, regardless of x86 or Arm. Sony aren't exactly pushing clocks on the PS5 Pro, and they are again balancing CPU clocks and power draw against GPU clocks.

Edit: and to do what MS have done with Xbox One to Series consoles with 30 to 60 fps wizardry in (very selected) games, you'd need to bank on having your most framerate limiting thread reasonably reliably able to double up on performance.

Zen 5 offers a huge jump in IPC. Gains over each previous generation:

- Zen3: 19%

- Zen4: 13%

- Zen5: 16%

Frenetic Pony

Veteran

Considering those are gains on each previous generation, with Zen 6 you could see close to a doubling of IPC vs Zen 2. 3D cache can increase that further.

Yeah, averaged out, Zen 6 should have twice the IPC of Zen 2 in cumulative terms. Even if specific game instructions were somehow biased against that, an increase in clock speed would easily make up for it.
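To put rough numbers on the cumulative claim, here is a minimal sketch that compounds the per-generation IPC figures quoted above. It takes those percentages at face value as multiplicative uplifts; the variable and function names are purely illustrative, and the Zen 6 figure it prints is only the uplift that would be needed to reach 2x, not a prediction.

```python
# Per-generation IPC uplifts as quoted in the thread,
# each relative to the generation before it (assumed multiplicative).
ipc_gains = {"Zen 3": 0.19, "Zen 4": 0.13, "Zen 5": 0.16}


def cumulative_ipc(gains):
    """Compound a sequence of fractional uplifts into one multiplier."""
    total = 1.0
    for gain in gains:
        total *= 1.0 + gain
    return total


# Zen 5 relative to Zen 2 under the quoted figures.
zen5_vs_zen2 = cumulative_ipc(ipc_gains.values())

# Uplift Zen 6 would need over Zen 5 to land at exactly 2x Zen 2.
zen6_gain_needed = 2.0 / zen5_vs_zen2 - 1.0

print(f"Zen 5 vs Zen 2: {zen5_vs_zen2:.2f}x")
print(f"Zen 6 uplift needed for 2x: {zen6_gain_needed:.1%}")
```

Under these assumptions Zen 5 lands at roughly 1.56x Zen 2, so a full doubling from IPC alone would need Zen 6 to add about 28% on top, which is more than any single step in the list. That is where higher clocks or 3D cache would have to make up the difference.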

cheapchips

Veteran

Benchmarks of native Arm applications, or of x86 emulated apps? One will be faster and more power efficient than the other.

Both?

If the new Xboxes let you run PC games, what's its performance equivalent to?

Also, potentially we end up with ARM in the handheld and x86 in other devices. Developers could have the option to never even bother compiling for ARM. It might not be much work, but it's still work, especially for indies.

A '10%' CPU performance loss/gain isn't going to make a difference in many cases.

Go tell that to the Series S.

cheapchips

Veteran


Are there titles on S that suffer from CPU related issues?

There’s virtually no difference in CPU between X and S.

A lot of woes around Series S are being blown out of proportion. Games that are designed for larger footprints have a bigger problem when porting downwards if Series S is an afterthought.

Games designed from the ground up with Series S in mind can make it work.

We’ve seen games ported all the way down to Switch, which is significantly weaker. But developers are time bound, and Xbox has a rule that both X and S must release together and have the same experience. Many of these developers, where they didn’t put Series S upfront, would rather ditch Xbox if they could, but they can’t. So they are frustrated that the game needs to be re-optimized to work on it.

Much easier to publicize an excuse and point at the hardware to justify that the studio did not have the labour resources to release on several platforms at once.


There have never been any reports about CPU issues (for obvious reasons).

Even the GPU isn't moaned about that much. They can just make it look really bad if they can't find development resources to optimise more.

The issue devs really have to work around is available memory.

Saying the XSS CPU is an issue can be thrown into the bucket of blaming everything, including climate change, on XSS.


First, there's no proof of a lack of correlation between XSS and climate change.

Second, from what I gather, the problem often starts when they brute-force PS5-optimized code onto Series X, where it barely keeps up with the frame rate. Then they run it on Series S, it falls below the minimum, and they are forced to spend time and resources optimizing it.

Of course, if you plan ahead and develop a game with both consoles in mind, it's a lesser problem. But how often does that happen?

On top of that, and this is my speculation, if you have some task that could be run on the GPU, on the S you don't have that option and must rely more on the CPU.

Anyway, writing for high-end x86 and running under emulation on an Arm handset with just a minimal performance drop will never happen.


Pretty sure the XSS CPU is the same as or faster than the PS5's. Possibly beefier if you take the AVX implementation into account.

But there have been no reports of CPU issues, which was the point.

CPU clocks, SMT on / SMT off:

- Series S: 3.4 / 3.6 GHz

- Series X: 3.6 / 3.8 GHz

- PS5: variable, up to 3.5 GHz

So there really shouldn't be an issue on the Series S with the CPU.
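For a sense of scale, here is a quick sketch of how far each Xbox clock listed above sits from the PS5 figure. It treats the PS5's variable clock as a flat 3.5 GHz, which is a simplifying assumption, and it compares clocks only, ignoring any architectural differences. The names are illustrative.

```python
# Clocks in GHz as quoted in the thread: (SMT on, SMT off).
xbox_clocks_ghz = {
    "Series S": (3.4, 3.6),
    "Series X": (3.6, 3.8),
}

# Assumption: treat the PS5's variable clock as its quoted 3.5 GHz figure.
PS5_CLOCK_GHZ = 3.5


def pct_vs_ps5(clock_ghz):
    """Percentage clock difference relative to the assumed PS5 clock."""
    return (clock_ghz / PS5_CLOCK_GHZ - 1.0) * 100.0


for console, (smt_on, smt_off) in xbox_clocks_ghz.items():
    print(
        f"{console}: SMT on {pct_vs_ps5(smt_on):+.1f}%, "
        f"SMT off {pct_vs_ps5(smt_off):+.1f}%"
    )
```

On these figures the Series S is within about 3% of the PS5 either way, well inside the "10%" margin discussed earlier in the thread.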

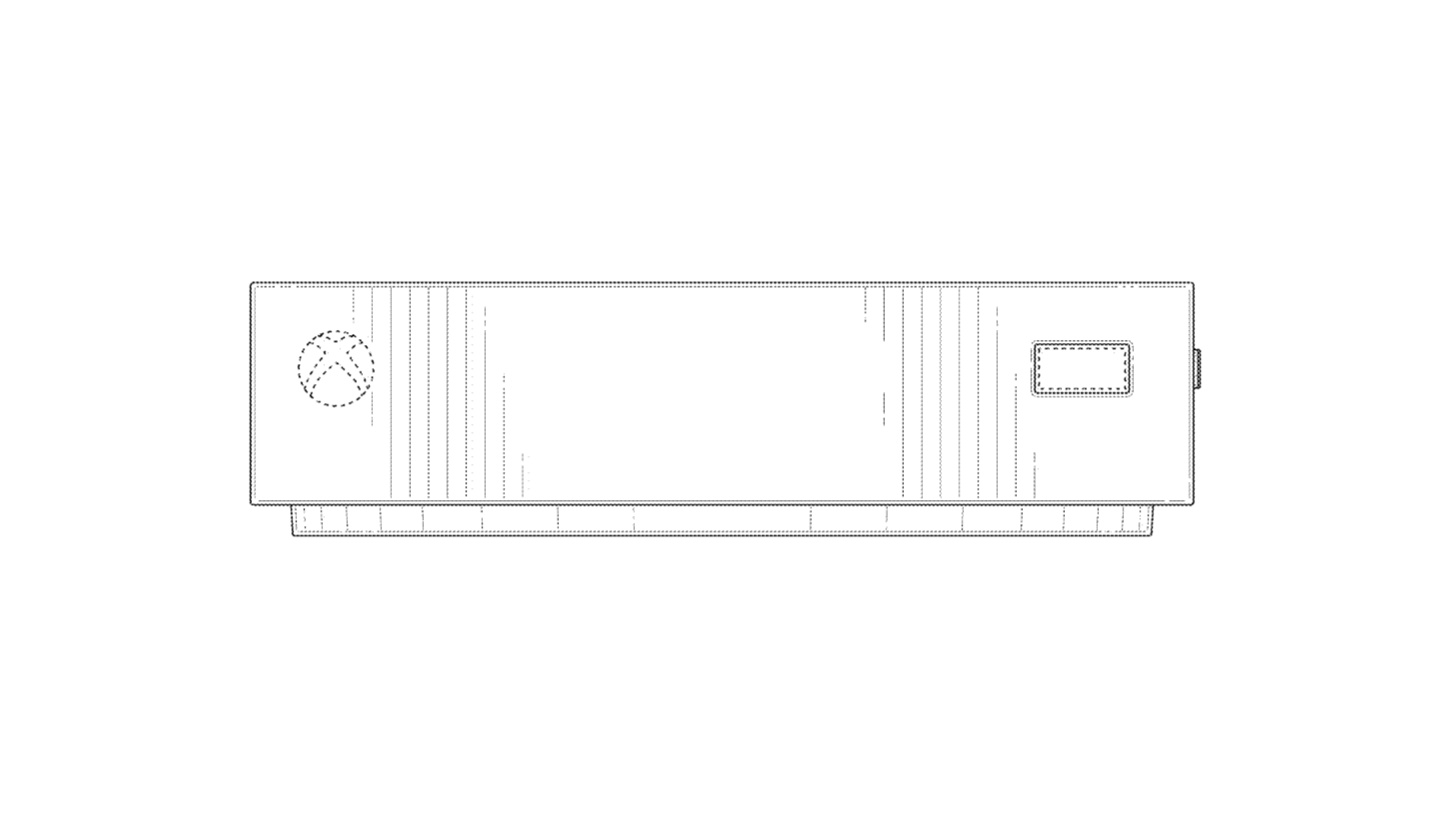

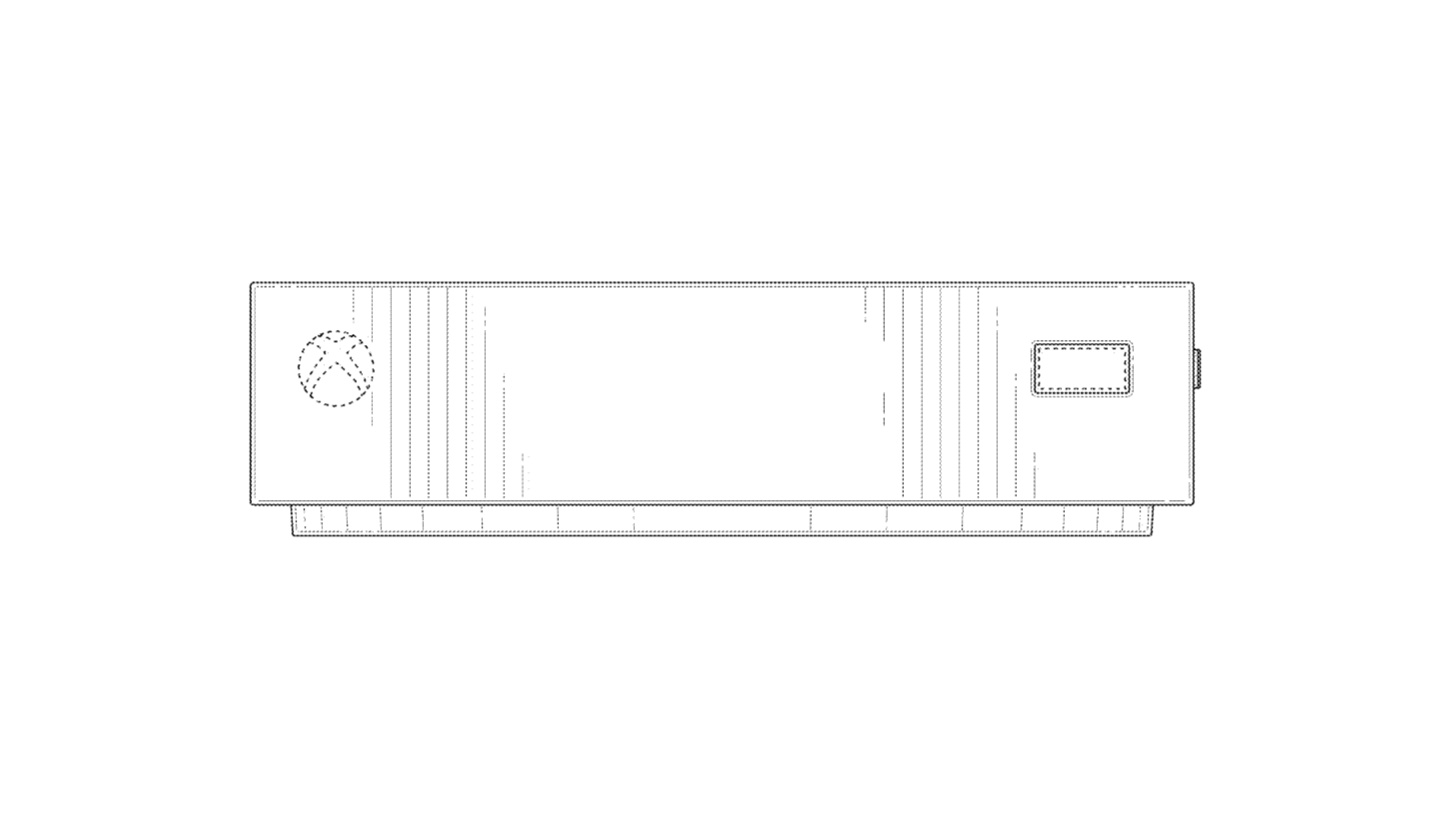

The Xbox that never was: Our first detailed look at the 'Keystone' cloud streaming console design

www.windowscentral.com


Ever wondered what Xbox Keystone was going to look like? A new patent gives us an idea!

