Intel XeSS anti-aliasing discussion

- Thread starter BRiT

- Start date

I feel like death looking at that thumbnail.

Imagine being a PhD computer scientist putting your day's work into an implementation for years and you see the word "fail" next to it on YouTube for some minor visual artefacting. Clearly, there are better ways to describe visual artefacts that require less hyperbole and "shitting on".

DegustatoR

Legend

I'm starting to wonder what other words HUB even know.


I know, right? This is just soooo disrespectful to the engineers at Intel, and it makes me pretty angry, I admit. This is how HUB gets their clicks: they want you to feel strong emotions instead of reviewing the hardware in a neutral and objective manner. They always use words like terrible, fail, unusable, shitty, etc. in their wording.

It seems a bit strange to leave Arc GPUs out of the comparison; having all three vendors with their own solutions in the same video would make it comprehensive (from a performance standpoint at least). Granted, they did say they'll do Intel in another video, and it is already 30+ mins. Maybe a performance comparison video followed by an image quality comparison video would've been more cohesive? I guess we'll see what they do later.

Here is the title for the FSR 1.0 video: AMD FidelityFX Super Resolution Analysis, Should Nvidia be Worried?

Their thumbnail for that video:

No fail there, I guess.

Also, in the conclusion section it's stated that FSR 1.0 is at times competitive with DLSS2.

In fact, FSR2 is almost the same as DLSS, again, according to them.

Well, whenever I see one of their videos recommended, I am going to click on "Don't recommend this channel". I did the same with the JayzTwoCents channel back in the day, when he accused Nvidia of using cheap capacitors that were allegedly causing the RTX 3000 GPUs to freeze at launch. He said it just because another person, an actually knowledgeable guy, had theorized about that. That guy was wrong, but at least he came up with the theory himself, and he usually knows what he's talking about even when he is mistaken.

Imagine Dr Cutress, who spent 3 years doing a PhD in chemistry, but now he makes money on YouTube testing computers.

List of games that support Intel XeSS (list updated September 20th):

Intel details XeSS tech: Arc A770 GPU benched, 20+ games support XeSS

Intel details its XeSS technology, benchmarking its new Arc A770 with XeSS enabled: 2x performance improvement, 20+ games will support XeSS upscaling tech.

www.tweaktown.com

As long as your GPU has DP4a support, it can use XeSS in any game that offers it. This is XeSS running on a GTX 1630:

It doesn't even need hardware DP4a support, just Shader Model 6.4. DP4a accelerates it though. Meaning it should work on Navi 10 (RX 5700 etc.) too (the only Navi without DP4a, I think?)

AFAIK, the RX 5700 doesn't have DP4a support, yeah. As for what you mention, I've seen videos of XeSS running natively on a GTX 1060. The overall speed is better than native, although it doesn't perform like it does on DP4a-compatible or native Intel GPUs.
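For anyone wondering what DP4a actually does: it is a GPU instruction that takes two 32-bit registers, treats each one as four packed 8-bit integers, multiplies them lane by lane and adds the four products to a 32-bit accumulator. Below is a minimal Python sketch of the signed variant. It assumes little-endian packing and wraparound on overflow, and the helper names `pack_i8x4`, `unpack_i8x4` and `dp4a` are made up for illustration; they are not part of XeSS or any driver API.

```python
import struct

def pack_i8x4(values):
    """Pack four signed 8-bit integers into one 32-bit word (little-endian)."""
    if len(values) != 4:
        raise ValueError("expected exactly four values")
    return struct.unpack("<I", struct.pack("<4b", *values))[0]

def unpack_i8x4(word):
    """Split a 32-bit word back into four signed 8-bit integers."""
    return struct.unpack("<4b", struct.pack("<I", word & 0xFFFFFFFF))

def dp4a(a_word, b_word, acc):
    """Emulate a signed DP4a: acc + dot(a, b) over four int8 lanes."""
    a = unpack_i8x4(a_word)
    b = unpack_i8x4(b_word)
    total = acc + sum(x * y for x, y in zip(a, b))
    # Wrap to a signed 32-bit value (assumed overflow behaviour).
    total &= 0xFFFFFFFF
    return total - (1 << 32) if total & 0x80000000 else total

a = pack_i8x4([1, -2, 3, 4])
b = pack_i8x4([5, 6, -7, 8])
result = dp4a(a, b, 10)  # 5 - 12 - 21 + 32 + 10
```

Without the instruction, the same arithmetic takes several separate integer operations per lane, which is consistent with the point above that DP4a accelerates XeSS rather than being strictly required.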

In this video, they mention making XeSS part of the GPU drivers (the video should start playing at that very moment). I wonder if they could make XeSS work in all games by forcing it via the drivers. I'd certainly enable it most of the time.

On another note:

FARMING SIMULATOR 22 Platinum-Ready, XeSS Support, Improved AI: Patch 1.8.1 Now Available! - news on pcgameabout.com

We're only days away from releasing the Platinum Expansion for Farming Simulator 22. Patch 1.8.1 is now available to download and makes the game ready for all those new features, machines, and improvements.

DavidGraham

Veteran

So XeSS XMX (on Arc GPUs) is far superior to FSR2.1, and close to DLSS2. It's funny that some people here still doubt the efficacy of AI upscaling compared to traditional methods.

For those in doubt, this is the ranking of the 3 upscaling methods:

DLSS2

XeSS XMX

FSR2

When upscaling from 1080p to 4K, both DLSS2 and XeSS XMX are even further ahead of FSR2.
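To put numbers on the 1080p to 4K case: that is a 2x scale on each axis, so the upscaler has to reconstruct four output pixels for every rendered one. A rough sketch follows. The per-axis factors in `SCALE_FACTORS` are the ones commonly quoted for the XeSS 1.0 presets and are an assumption here, not something stated in this thread; individual games and later XeSS versions may use different values.

```python
# Assumed per-axis scale factors for each quality preset (not from this thread).
SCALE_FACTORS = {
    "Ultra Quality": 1.3,
    "Quality": 1.5,
    "Balanced": 1.7,
    "Performance": 2.0,
}

def render_resolution(out_w, out_h, mode):
    """Internal render resolution for a given output resolution and preset."""
    scale = SCALE_FACTORS[mode]
    return round(out_w / scale), round(out_h / scale)

def pixel_ratio(out_w, out_h, mode):
    """How many output pixels are produced per rendered pixel."""
    in_w, in_h = render_resolution(out_w, out_h, mode)
    return (out_w * out_h) / (in_w * in_h)

perf_4k = render_resolution(3840, 2160, "Performance")
quality_4k = render_resolution(3840, 2160, "Quality")
perf_ratio_4k = pixel_ratio(3840, 2160, "Performance")
```

Under these assumptions, Performance mode at 4K renders at 1920x1080, a quarter of the output pixels, while Quality mode renders at 2560x1440.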

Just enabled XeSS in Shadow of the Tomb Raider, and if I could use it in every game I wouldn't use anything else (save DLSS, if I could). Now I can quite understand why DF recommends these reconstruction techniques over native.

I have NEVER seen a game so free of jaggies; it feels like watching an animated movie in the cinema at times.

I'm not talking about graphics realism, and there are more graphically impressive games than Tomb Raider, but I have not seen an image this clean even in the best games I've played, except CoD Modern Warfare 2, which also uses XeSS.

I remember when I saw Digital Foundry's XeSS video, I was wondering what the trick was, because they were upscaling a native 720p image (which is all blur and jaggies) with XeSS, and the upscaled image showed not a single jaggie, even on super thin elements. Specifically this image (and that's in Performance mode):

That being said, some not so good news: I reported in the Intel support forums that XeSS doesn't work with Shadow of the Tomb Raider in the most recent beta version of the drivers, for whatever reason. I had to install the "old" last stable version from October so I could use XeSS in Shadow of the Tomb Raider.

I also noticed that at 4K, using XeSS Performance or Balanced, Lara's hair disappeared at times when running the benchmarks. Oddly enough, that didn't happen the first time I benchmarked the game. I am playing the game using XeSS Quality and raytraced shadows at ultra. The framerate is good.

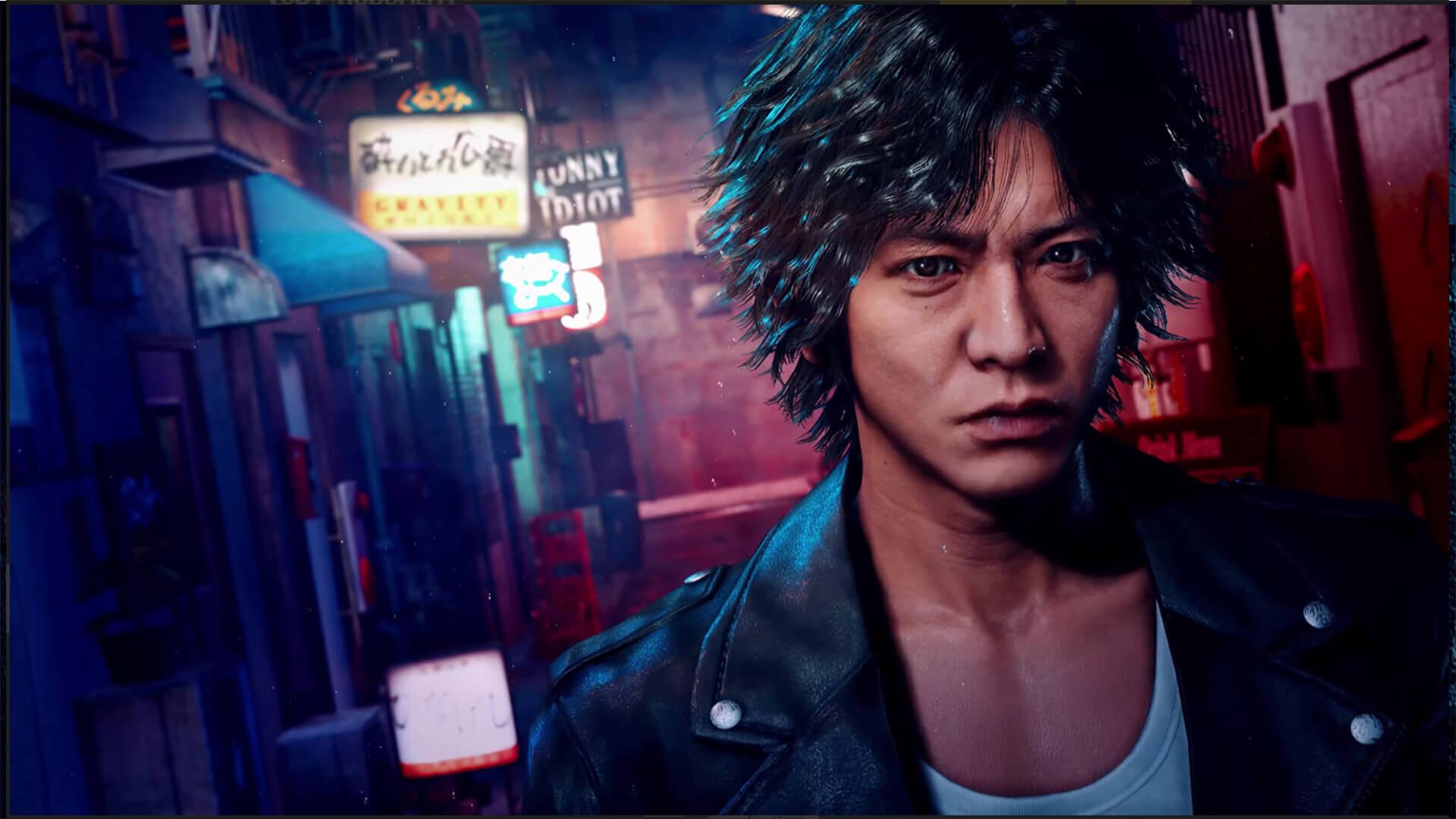

Judgment & Lost Judgment now support AMD FSR 2.1 & Intel XeSS 1.0.1

Ryu Ga Gotoku Studio has released a new update for Judgment and Lost Judgment that adds support for AMD FSR 2.1 and Intel XeSS 1.0.1.

www.dsogaming.com


And most importantly, XeSS support for one of my favourite games ever, Resident Evil 2 Remake.

Resident Evil 2 Remake DLSS / FSR 2.0 / XeSS Mod Showcased in New Video

A Resident Evil 2 Remake mod that is currently in development will add DLSS, FSR and XeSS support to the game

I got both Judgment and Lost Judgment by Sega, which feature XeSS.

The character's hair has a strange halo around it, but I guess it's an Intel A770 artifact, not an issue with the game. It happens whether the game is running at native 4K or with XeSS.

This is a comparison between native 4K and XeSS Quality. The hair's aliasing is gone with XeSS Quality. Native 4K shows crisper textures, especially on the leather jacket, but that's to be expected. XeSS Quality is usually my favourite setting, but any setting is OK as long as I don't have to play at native 4K, which uses excessive resources with no clear IQ gain compared to XeSS Quality and Ultra Quality, plus worse AA.

The comparison features the same camera angle and position; only the lighting changes slightly.

XeSS Quality

Native 4K

Does anyone know if that issue with the hair happens with other GPUs?


Dying Light 2 adds XeSS support in a new patch.

dyinglightgame.com


Update 1.7.2 - Dying Light 2 – Official website

Modders marked Skyrim's 11th anniversary on 11-11-2022 by creating a mod that adds FSR 2, DLSS and XeSS support to Skyrim.

Skyrim Gets Unofficial DLSS/XeSS/FSR Support

Yesterday marked Skyrim's eleventh anniversary, and in celebration, modders have created something new for the game.

appuals.com

More detailed info with a video from an articulate guy in the DLSS thread.

Nvidia DLSS 1 and 2 antialiasing discussion *spawn*

I just tried Detroit Become Human on my 2080Ti, max settings, native 4K, and I can't stand the amount of blur on the image, it's so disgustingly blurry to the point it becomes unbearable sometimes, which reminded me that so many games have terrible TAA implementations indeed, and how gamers had...

forum.beyond3d.com
