^^ yeah

Sony also calls the primitive shader NGG stuff on PS5 "mesh shaders" in their documentation but they also clearly line out the areas where it differs/falls short of the DX spec.

Direct3D Mesh Shaders

- Thread starter DmitryKo

> ^^ yeah
>
> Sony also calls the primitive shader NGG stuff on PS5 "mesh shaders" in their documentation but they also clearly line out the areas where it differs/falls short of the DX spec.

That's interesting, good find. I wonder how PS5 "mesh shaders" compare to RDNA1's implementation of primitive shaders.

> Can I see the documentation?

No. Documentation from Sony is watermarked. The developer in question would be liable.

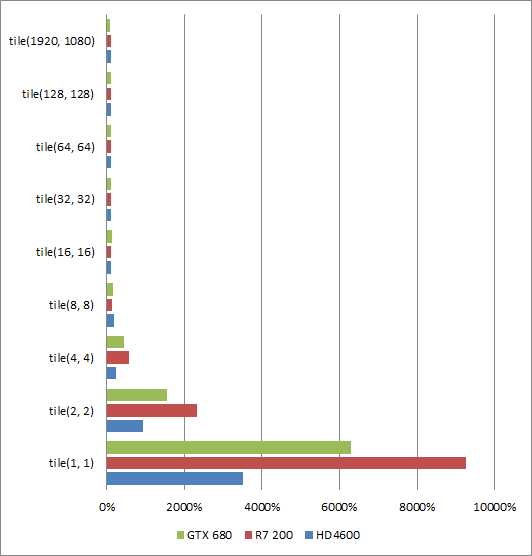

Here are some tests of mesh shaders versus compute shaders for the front end. Looks like compute shaders are much faster than mesh shaders ^^

- Mesh Shader Emulation - Tellusim Technologies Inc. (tellusim.com)
- Mesh Shader Performance - Tellusim Technologies Inc. (tellusim.com)
- Mesh Shader versus MultiDrawIndirect - Tellusim Technologies Inc. (tellusim.com)
- Compute versus Hardware - Tellusim Technologies Inc. (tellusim.com)

> Here are some tests of mesh shaders versus compute shaders for the front end. Looks like compute shaders are much faster than mesh shaders ^^

Hmpf. First Nanite and now this. Why are they even a thing if mesh shaders are so useless? Is that why nobody uses them?

I don't understand. Mesh shaders are supposed to be a revolution in the geometry pipeline.

> Hmpf. First Nanite and now this. Why are they even a thing if mesh shaders are so useless?
>
> I don't understand. Mesh shaders are supposed to be a revolution in the geometry pipeline

3D pipeline.

Mesh shaders effectively use the GPU's ALUs for the front end of geometry processing, versus the legacy fixed-function (FF) front-end hardware on the 3D pipeline. Both still output to the ROPs in the end, after the unified shader stages.

Compute shaders, by contrast, use the ALUs on the compute pipeline. The key is understanding when you need one or the other or, in the case of Nanite, both.

As games continue to get more geometrically rich, some older methods of processing geometry may not be as scalable as compute or Mesh.

> I wonder why nobody implements a new fixed-function hardware stage for micropolygons?

You'd have to redo the way ROPs are done.

> You'd have to redo the way ROPs are done.

Why? ROPs are putting out pixels, but the rasterizer is turning polygons into pixels. You only need something for culling a lot of polygons and deciding which polygons should not be culled.

> Why? ROPs are putting out pixels, but the rasterizer is turning polygons into pixels. You only need something for culling a lot of polygons and deciding which polygons should not be culled.

ROPs write pixels per second per triangle. Right now 16 pixels per triangle is optimal, which is 4x4 IIRC on AMD cards. At 8 pixels it's still good. Once you get to 4-pixel triangles it starts to choke. Anything less than 4 pixels really starts to have an impact.

An old article, but still good and useful today. It fills a 1080p screen with triangles of varying size. The top is just one huge triangle, the bottom are 1x1 pixel triangles.

How bad are small triangles on GPU and why? (www.g-truc.net)

I believe there is some issue with scanning. But the ROPs can only output 2 triangles per clock in a 16-pixel space. 1x1 pixel triangles will never fill it, so the fill rate falls off a cliff. I think it makes sense with the diagrams in the article. This is why Nanite uses compute shaders for tiny triangles: you can still complete full blocks all at once because each individual thread represents a triangle.
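To put rough numbers on that argument, here is a minimal Python sketch of the mental model in the post above. It assumes (this is the post's simplification, not a documented hardware figure) that each triangle occupies one 16-pixel (4x4) slot regardless of how many pixels it actually covers; `TILE_PIXELS` and `tile_utilization` are names made up for this illustration.

```python
# Simplified model from the post: one triangle claims a whole 4x4 slot,
# so small triangles leave most of that slot empty.
TILE_PIXELS = 16  # assumption: 4x4 pixel block, as stated in the post


def tile_utilization(pixels_per_triangle: int, tile_pixels: int = TILE_PIXELS) -> float:
    """Fraction of a tile slot that carries useful pixels for one triangle."""
    if pixels_per_triangle <= 0:
        raise ValueError("a triangle must cover at least one pixel")
    # A triangle larger than the tile simply fills the tile completely.
    return min(pixels_per_triangle, tile_pixels) / tile_pixels


for px in (16, 8, 4, 1):
    print(f"{px:>2} px/triangle -> {tile_utilization(px):.1%} of the slot used")
```

Under this model a 1-pixel triangle uses 1/16 of the slot, which is the "falls off a cliff" effect described above. Real hardware has more moving parts, so treat this only as arithmetic for the argument, not as a performance model.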

I don't think we have a ROPs issue. The ROPs will not get filled when the rasterizer is not putting out enough pixels?

I don't get it: how can a frame buffer be filled when there is no triangle to fill it? With smaller triangles you get a smaller rasterizer output with fewer pixels. So why is the frame buffer stalling? If the rasterizer is at max output you get 16 pixels for 1 polygon, so you have more pixels per polygon to store. So the pixel buffer must fill up faster than with a small polygon. What do I understand wrong?

> Hmpf. First Nanite and now this. Why are they even a thing if mesh shaders are so useless? Is that why nobody uses them?
>
> I don't understand. Mesh shaders are supposed to be a revolution in the geometry pipeline

Not all ideas pan out in practice. Although I think the jury is still out on whether or not mesh shaders are useful. The extended cross-gen period could be the issue, as no developer is going to write 2 entirely different geometry solutions. Over the next few years we should have some tech papers from devs explaining their benefits or why they were not used.

> I don't think we have a ROPs issue. The ROPs will not get filled when the rasterizer is not putting out enough pixels?
>
> I don't get it: how can a frame buffer be filled when there is no triangle to fill it? With smaller triangles you get a smaller rasterizer output with fewer pixels. So why is the frame buffer stalling? If the rasterizer is at max output you get 16 pixels for 1 polygon, so you have more pixels per polygon to store. So the pixel buffer must fill up faster than with a small polygon. What do I understand wrong?

Yea, so this answer isn't going to be perfect, but I'm sure someone here can correct me.

There are barely enough pixels per second to be able to fill 1-pixel triangles if you are looking at the raw numbers. But that is sort of the gotcha, because if this was the only challenge, we'd have been doing 1px triangles for some time now.

I believe the real issue comes down to the ROPs, because they are responsible for depth, stencil, blending, and AA. My understanding is that a lot of these 16-pixel triangles can be blended and the final answer rapidly converged. But what happens in that 4x4 render backend (RB) grid when everything is nearly empty? How does one blend, test, and stencil, as well as AA, blank space? I think the RBs fall into a bad space for calculation, which causes performance there to drop off entirely. For these functions to occur, the hardware grabs a sample of nearby pixels and attempts to blend them together. When what is provided is all blank apart from 1 or 2 pixels, it suddenly can't converge to a final answer.

Think about any of our sampling algos, they take 4 pixels in a block and average them, and that's the sample in the middle. And then you work off that sample to determine how that will affect the other surrounding pixels. What happens when everything is blank, except for that 1 pixel, or even worse, a sub pixel? I think the FF hardware falls into algorithm hell because those are use cases it doesn't know how to deal with, whereas software rendering can dispatch those cases quickly.

I really want to understand how the algorithm works. Say you have 4 polygons which are all in one pixel. The polygons are red, blue, green and yellow. What color does the pixel get? I think that only 1 polygon is alive after culling?

This pixel is then transferred to the shaders, and after this there are, for example, 32 ROPs waiting for this 1 pixel. I think this is the ratio of rasterizers to ROPs? This means 31 ROPs are idling and one is doing the rendering work. That's why I wonder why we have such a small front end. Would it not be more efficient to have 8 rasterizers and 16 ROPs for each rasterizer when you want to render small polygons?

> Hmpf. First Nanite and now this. Why are they even a thing if mesh shaders are so useless? Is that why nobody uses them?
>
> I don't understand. Mesh shaders are supposed to be a revolution in the geometry pipeline

Because they are standard as long as the GPU supports them. You are free to roll your own solution, but it's up to you to ensure it works by design across the GPUs you plan or want to support.

> I wonder why nobody implements a new fixed-function hardware stage for micropolygons?

There are several reasons why IHVs won't do so ...

Primitive assembly and scan conversion hardware would need significant increases to be able to handle more geometry. GPUs shade 2x2 quads to provide easy access to derivatives. Introducing hardware to compute analytic derivatives for 1x1 pixels wouldn't be a trivial implementation. If a hardware vendor cares about occupancy, they won't let the issue of helper lanes keep persisting. The HW blending unit would also need a redesign to be optimized for blending micropolygons in primitive order as is the case with the traditional graphics pipeline ...
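The 2x2 quad and helper-lane point can be illustrated with a small Python sketch. It assumes only what the post says, that pixels are shaded in aligned 2x2 quads so every touched quad costs 4 lanes; `quad_occupancy` and its return layout are made up for this example and do not model any particular GPU.

```python
def quad_occupancy(covered):
    """Count active and helper lanes for the pixels one triangle covers.

    covered: iterable of (x, y) integer pixel coordinates.
    Returns (active_lanes, helper_lanes, occupancy).
    """
    pixels = set(covered)
    if not pixels:
        raise ValueError("triangle covers no pixels")
    # Each pixel belongs to the aligned 2x2 quad at (x // 2, y // 2).
    quads = {(x // 2, y // 2) for x, y in pixels}
    total_lanes = 4 * len(quads)      # every touched quad runs all 4 lanes
    active = len(pixels)              # lanes that produce a visible pixel
    helpers = total_lanes - active    # lanes run only to provide derivatives
    return active, helpers, active / total_lanes


# A single-pixel triangle: 1 active lane, 3 helper lanes.
print(quad_occupancy({(5, 3)}))
# A quad-aligned 4x4 block: every lane is active.
print(quad_occupancy({(x, y) for x in range(4) for y in range(4)}))
```

With one covered pixel, three of the four lanes in the quad do work that never reaches the screen, which is the occupancy cost of micropolygons that the post refers to.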

Also trying to make micropolygon rendering more flexible than what we already have with Nanite such as supporting deformable topologies would work against the interests of some IHVs trying to pivot the future into ray tracing. RT performance would be abysmal on many dynamic scenes if micropolygon rendering was made more accessible ...

For a general view of GPUs and ROPs, the good old "A trip through the Graphics Pipeline 2011" should give some answers.

AMD fixed mesh shaders on N31. Can somebody test it and maybe compare it to a 4090?

RDNA 3, Driver 23.1.2 fixes AMD Mesh Shader performance issue, 7900 XT/XTX (Update: Maybe Not) (hardforum.com)

There was a concern about a possible hardware issue with Mesh Shaders with the 7900 XT/XTX due to rather poor results in 3DMark Mesh Shader feature test. With driver 23.1.1 with Mesh Shader on, it was only 11.9% faster then with it off. With 23.1.2, look at the difference between the two: AMD...
