DegustatoR

Legend

What's memory usage like when using mesh shaders compared to fancy texture implementations to fake geometric detail?

I know we don't have any talks etc on what has been learned from implementing mesh shaders, so hopefully they'll do one.

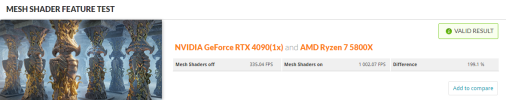

AMD fixed the Mesh Shader on N31. Can somebody test it, maybe compare it to a 4090?

RDNA 3, Driver 23.1.2 fixes AMD Mesh Shader performance issue, 7900 XT/XTX (Update: Maybe Not)

There was a concern about a possible hardware issue with Mesh Shaders on the 7900 XT/XTX due to rather poor results in the 3DMark Mesh Shader feature test. With driver 23.1.1 with Mesh Shader on, it was only 11.9% faster than with it off. With 23.1.2, look at the difference between the two: AMD...hardforum.com

I wonder how "unsupported" h/w would run something it can't, unless there is an effort put into supporting it...

Most likely a separate compute shader path for meshlet rendering.

Which would mean that they are explicitly "supporting" such h/w.

It's in the vid. I think older HW will struggle.

"Disadvantages:

1. Unsupported hardware

- Imitate effect with Vertex Shaders

- Hard to get performance without new GPU"

Which again implies that they are supporting the "unsupported" h/w for some reason, despite it not being capable of running at good enough performance.

I'm just wondering if there is really any benefit in spending resources on such support if all you will get out of it is a bunch of old h/w owners unhappy with the performance.
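For anyone wondering what "supporting unsupported h/w" might look like in practice, here is a minimal sketch of a capability check that picks between a native mesh shader path and a fallback. Everything in it is hypothetical (the `GpuCaps` flag, the `RenderPath` names, the `select_render_path` helper); it is not taken from Capcom's engine or from any graphics API, and a real renderer would query the driver for mesh shader support instead of hardcoding a flag.

```python
from dataclasses import dataclass
from enum import Enum


class RenderPath(Enum):
    # Native meshlet pipeline on GPUs that expose mesh shaders.
    MESH_SHADER = "mesh_shader"
    # Emulated path (vertex shaders and/or compute); expected to be slower.
    FALLBACK = "fallback"


@dataclass(frozen=True)
class GpuCaps:
    # Hypothetical capability record; the flag is set by hand here,
    # whereas a real engine would ask the graphics API.
    name: str
    supports_mesh_shaders: bool


def select_render_path(caps: GpuCaps) -> RenderPath:
    """Pick the native path when available, otherwise the emulated one."""
    if caps.supports_mesh_shaders:
        return RenderPath.MESH_SHADER
    return RenderPath.FALLBACK
```

With this sketch, `select_render_path(GpuCaps("RX 5700 XT", False))` returns `RenderPath.FALLBACK`. The check itself is trivial; the cost being debated in the thread is writing and maintaining the whole second path behind it.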

Future Capcom games will support mesh shaders. Unsupported hardware will run, but it's gonna struggle.

Awesome.

www.dsogaming.com

Hopefully someone will bench a Pascal GPU. Curious if the drop-off is as severe as for RDNA 1.

Have my answer. 5700xt 65-85% faster.

With Pascal being superior at geometry, I thought it might have fared better than RDNA 1.

RDNA1 still benefits from the majority of optimizations done for RDNA2. Pascal obviously wasn't even considered as a target for development, so such a difference isn't very surprising.

Does make the point about questionable waste of resources on that support even stronger though.