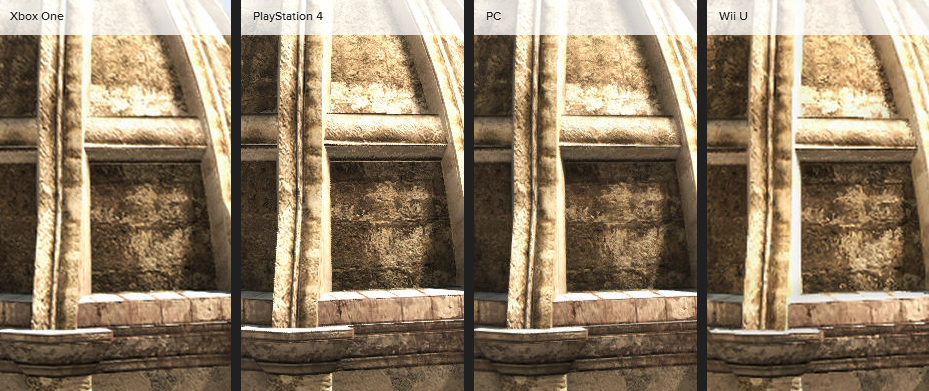

Here's an AC4 PS4/PC comparison. Notice the textures are blurrier on the PC version than on the PS4. That's because cheap FXAA is on by default with every PC AA option. And the PC version is using 4xMSAA, by the way.

All AA modes on the PC version except SMAA are combined with FXAA. The reasoning behind this is that FXAA provides the transparency AA element that MSAA and the other methods don't.

I wouldn't describe it as cheap, though. The blurring effect is extremely subtle, and in combination with just 2x TXAA the image quality is completely sublime. It's well in excess of what's achievable with SMAA alone, despite the slightly sharper image the SMAA option produces.