Alex, is it worth playing around with DLSS versions to get a better image when using DLSS Performance mode at 4K?

I'm happy with the image I'm getting, but is there much improvement in image quality from changing DLSS versions?

In my opinion, try out 2.5.1 from TechPowerUp. It is the "model" that works best among the 3.1.x versions, without the ghosting. Or you could change the submode (preset) of 3.1.x if you want it to behave the same as the 2.5.1 version. There are posts on the web from people testing this; a Google search will find them.

Current Generation Games Analysis Technical Discussion [2023] [XBSX|S, PS5, PC]

- Thread starter: BRiT

- Status: Not open for further replies.

Preset "f" in 3.1.x is the 2.5.1 model.
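For anyone who wants to try the version swap discussed here, a minimal sketch of the file handling follows. It assumes the game ships its DLSS library as `nvngx_dlss.dll` in the game folder and that you have already downloaded the replacement DLL yourself; the function name and the `.bak` backup convention are made up for illustration, and this is not an official tool.

```python
import shutil
from pathlib import Path

# Assumption: the DLSS library is shipped under this file name.
DLL_NAME = "nvngx_dlss.dll"


def swap_dlss_dll(game_dir: Path, new_dll: Path) -> Path:
    """Back up the game's DLSS DLL, then copy new_dll over it.

    Returns the path of the backup so the swap can be undone by hand.
    """
    target = game_dir / DLL_NAME
    if not target.is_file():
        raise FileNotFoundError(f"{DLL_NAME} not found in {game_dir}")

    backup = target.with_name(target.name + ".bak")
    # Only back up once, so repeated swaps never overwrite the shipped DLL.
    if not backup.exists():
        shutil.copy2(target, backup)

    shutil.copy2(new_dll, target)
    return backup
```

Keeping the first backup untouched means you can always go back to the version the game shipped with, whichever versions you try in between.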

Not really related to any game, but I cannot find any thread about upscaling technology comparisons. Very interesting video that shows the weaknesses and strengths of different upscaling techs.

Surprised to see that DLSS and FSR2 fall apart so badly during motion; XeSS looks really, really good.

Great test example to very clearly demonstrate the difference in motion clarity.

snc

Veteran

Also interesting response in comments.

I am not surprised, and this is what I have been saying here since the first FSR2 games. Compared to, say, Insomniac's or Guerrilla's latest solutions, FSR2 is simply not a good reconstruction / TAA tech. I also easily prefer UE's TAAU tech to FSR2.

Deleted member 2197

Guest

I'm not sure if this is the right topic, but... is it possible for Sony to unlock 2 more CUs on the PS5's GPU? 4 are deactivated. I'm curious whether there actually are chips in retail PS5 consoles with more than 2 defective CUs. If not... it means that in theory Sony can unlock 2 more CUs (if I'm not wrong).

davis.anthony

Veteran

And they would get such a small performance increase that it wouldn't be worth it. In fact, they might even get a performance decrease due to how their GPU boost works.

Absolutely not for retail consoles, because of yields. Some devkits could theoretically use all 40 CUs for testing / debugging purposes. Microsoft is already doing this for their devkits, but we have no definitive info for Sony.

That's actually a really clever stress test, and I'm also shocked to see how poorly DLSS fared compared to XeSS.

Yeah, this is a great test. It could be improved heavily by also having something that transforms with motion vectors on screen, like a skinned mesh rapidly switching shapes -- quality without motion vectors and quality with motion vectors are separate variables, and (based on all of our anecdotal experiences with DLSS) I suspect DLSS is by far the best when provided motion vectors.

This is something I think deserves more attention -- we've gotten past the weird backlash against temporal upscaling where everyone thought it was a bad idea, but we've seemingly swung too far the other way and totally lost scrutiny of motion stability -- DF videos for example frequently highlight the upscaled target resolution and don't thoroughly investigate ghosting or other motion artifacts.

Interesting that Jedi Survivor lists 8 GB of VRAM as a minimum. I'm pretty sure Series S, at its best, can allocate around 5.5-6 GB for the GPU to use. Are minimum specs supposed to look better than Series S? I thought at least the S would be the min. spec. So an 8 GB VRAM "min requirement" is baffling. Wouldn't Series S memory optimizations translate well to PC?

Flappy Pannus

Veteran

Potentially a case of utilizing the hardware texture decompression to swap out more frequently vs. what is possible on the PC without DS 1.1?

Also a little curious that it's 8GB VRAM for minimum, but also recommended.

Frankly, this is a good thing. Hopefully we can move on from 8 GB. The Series consoles only reserve 2 GB of memory for the OS; however, Microsoft freed up some more memory for the Series S due to dev complaints. I've linked the article below. As a result, I think the Series S probably has access to a max of 6.5 GB instead of the standard 6 GB. Again, the Series S will never be the base. It will always be the PS5, because it has sold more units and it sells significantly more software. The S will just get whatever Frankenstein port they can shove on it. In the past, we've seen console games use settings that are lower than PC's low settings, and that's what the Series S will get.

Article ref: Tom Warren Confirmation of Increased Ram allocation for Series S

The entire 8GB of Game memory on Series S can be accessed by the GPU. The OS use is outside of that memory (2GB of slower access memory from the 10GB machine total). Yes, there will be base memory use from that 8GB for the game executable and objects that will drop that down for what is usable for VRAM. However this is a unified memory pool architecture so you don't need to copy from CPU memory space to GPU memory space. On the PC you might need to keep shuffling items between GPU and CPU, so there is some loss due to inefficiency of split pool.

Or they looked at performance of the game on PC and found anything suitable had at least 8GB VRAM.

Thanks for the correction. For some reason I forgot that the Series S shipped with 8 GB and not 10 GB. My previous post should say max 8.5 GB.

I know. I was talking about regular CPU data that can make do with residing only in CPU memory on PC, whereas on consoles it has to come out of the total budget: game logic, physics, sound, etc. I don't know how much the consoles would be using, however, as PC RAM usage is overly inflated compared to consoles.

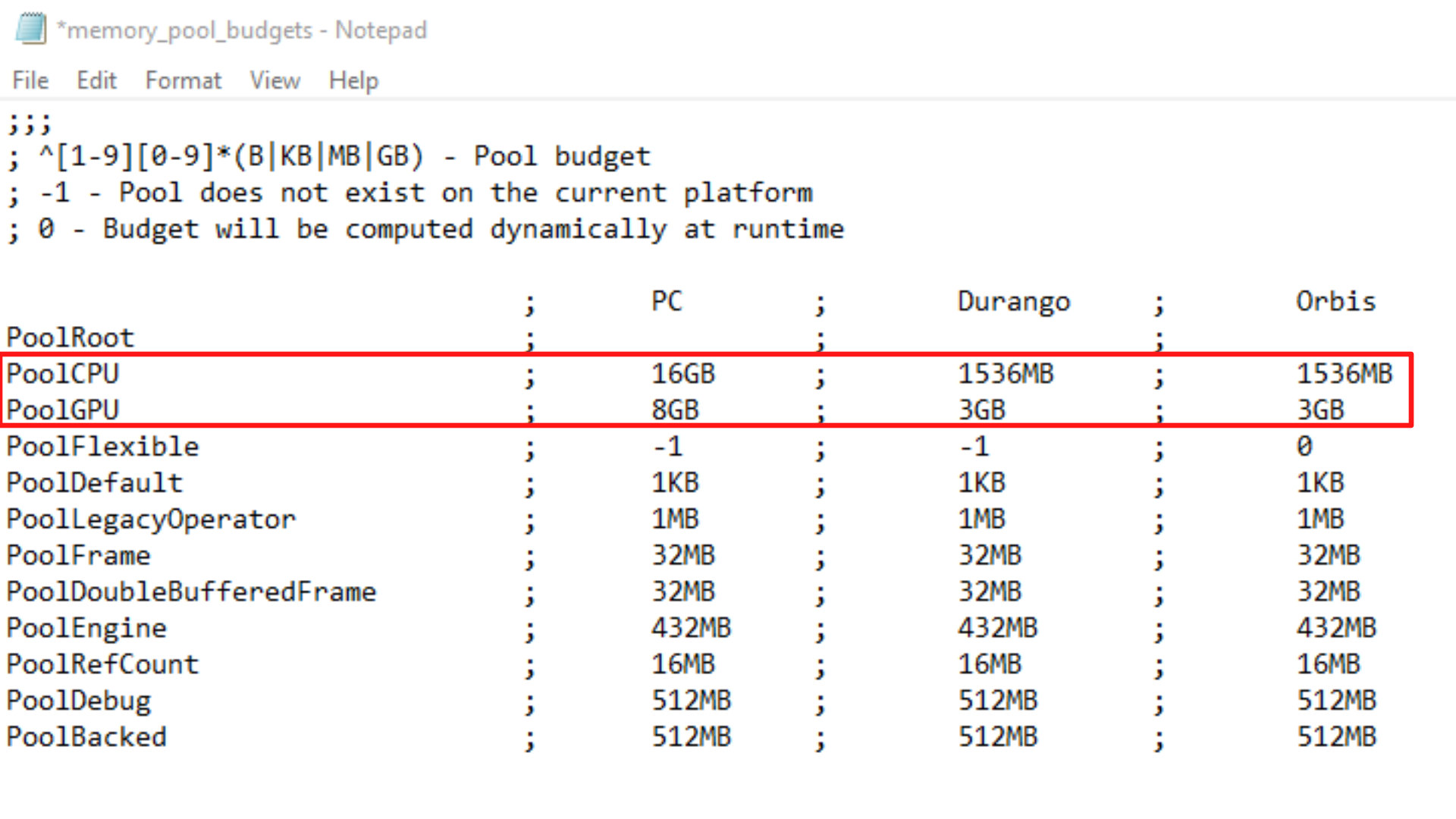

I always remember the memory pool budgets file found in Cyberpunk's files, and I kind of assumed most games would follow a similar trend: 1.5-2 GB of CPU memory and 3.5-4 GB of GPU memory, for a total of 5.5 GB of allocatable memory. For next-gen games, CPU memory usage would increase a bit, I'd guess. But to where exactly, I don't know.
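To put rough numbers on the budgets discussed above, here is a back-of-the-envelope sketch. It takes the 8 GB Series S game memory figure from earlier in the thread and subtracts the 1.5-2 GB CPU-side range quoted for Cyberpunk. Applying a last-gen CPU budget to a Series S title is purely an assumption for illustration, and the helper name is made up.

```python
def gpu_budget_gb(game_memory_gb: float, cpu_side_gb: float) -> float:
    """Memory left for GPU resources after CPU-side data, in a unified pool."""
    if cpu_side_gb < 0 or cpu_side_gb > game_memory_gb:
        raise ValueError("CPU-side usage must fit inside the game memory budget")
    return game_memory_gb - cpu_side_gb


# Figure quoted in the thread: 8 GB of game memory, with the OS living in a
# separate 2 GB out of the 10 GB machine total.
SERIES_S_GAME_MEMORY_GB = 8.0

# Assumption: CPU-side usage similar to the Cyberpunk budget file (1.5-2 GB).
for cpu_side in (1.5, 2.0):
    left = gpu_budget_gb(SERIES_S_GAME_MEMORY_GB, cpu_side)
    print(f"CPU-side {cpu_side} GB -> about {left} GB left for GPU resources")
```

If next-gen games really do use more CPU-side memory than that, the GPU share shrinks accordingly, which is the open question in the post above.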

Silent_Buddha

Legend

Oh one other surprising thing about the RE4 demo on PS5: It's one of the few games that has some fine-grained control of graphical settings beyond just performance/quality/RT, specifically you can toggle Depth of Field and 'Hair Strands', which provides for more detailed hair + fur.

Default hair looks good enough, but this setting seems to up the number of individually animated strands and just provides a slightly more detailed mesh. It does have a performance cost though: in framerate mode without RT, you cannot get a stable 60 in the opening forest area with it on. The drop may be minimal enough throughout the campaign that it will make sense for VRR users, although the drop may be higher in other scenes with more hair/fur than just the main character. Glad it's an option at least.

Very interesting to see these kinds of settings usually reserved for the PC version exposed though.

Considering the fixed hardware nature of consoles, it would make sense that as time goes on, developers expose more and more settings, PC style so that users have more fine grained choices of how they wish to achieve 60/40/30 FPS.

For console purists, you can still have the "default" resolution/framerate options that are now generally provided. Just put the more fine grained options in an "advanced" menu so people that don't want to mess with it don't even have to see it without explicitly going there.

IMO, it makes less and less sense to me to not provide settings like those to console players as long as they still provide the historical "console defaults". Even better is that on console, they can do a better job of explaining the impact of each change if they wanted to as it's a fixed platform so performance impacts of each change should be consistent across like consoles.

Regards,

SB

Flappy Pannus

Veteran

Apparently some RE4 patches just dropped, and I'm seeing reports that they improved the PS5's image quality. Can any owners confirm?

From what I read on ResetEra, it seems sharper, but vegetation still has the same broken rendering issue. On the other hand, the dead zone on XSX is now improved, but IQ takes a huge hit, with the same shitty vegetation as the PS5, which is ridiculous. It seems stable vegetation rendering causes worse control responsiveness, which is such a weird thing.

Flappy Pannus

Veteran

Hardware Unboxed Looks at DLSS vs. FSR in recent games

tl;dw: Just a whitewash for DLSS, unfortunately. I say unfortunately, as I really would not like to be tied to one architecture in order to get competent reconstruction, but it is what it is. FSR 2.1 Quality at 4K can be 'ok' in some spots, but once you go below that it falls apart quickly. Even at 4K/Quality there can still be some egregious shimmering issues, which really stand out to me and which I hate when I see them in DLSS.

What it ultimately means is that effectively, for low to midrange cards, you really don't have an effective reconstruction technique on Radeon, which really has to be factored into their price/performance IMO. Better than before...I guess? Certainly not as much as I would have hoped. I mean if you're comparing cards and one is with DLSS at 1440P and another is FSR 2, are you really comparing the same thing if one image is flickering in and out of existence? Unreal 4's TAAU looks to be far superior, it may not be as crisp but it's far more stable - there's probably a reason you're not seeing FSR 2 take the console world by storm compared to other reconstruction techniques, albeit on a closed platform I reckon it will receive more attention from devs on tailoring it to games to avoid these poor edge cases.

I mean, yikes man:

(Also this means if a PC publisher adds just FSR 2 because it's 'good enough' or (more likely) due to AMD sponsorship, fuck off. You deserve to be critiqued as harshly as not including any reconstruction at all.)
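For context on why things fall apart below 4K/Quality, it helps to look at how few pixels the upscaler actually starts from. The sketch below uses FSR 2's documented per-axis scale factors (1.5x, 1.7x, 2.0x, 3.0x); DLSS modes use similar ratios, but treating them as identical is an assumption here, as is the rounding, since real implementations may truncate instead.

```python
# Per-axis scale factors documented for FSR 2 quality modes.
SCALE_FACTORS = {
    "quality": 1.5,
    "balanced": 1.7,
    "performance": 2.0,
    "ultra_performance": 3.0,
}


def internal_resolution(out_w: int, out_h: int, mode: str) -> tuple[int, int]:
    """Approximate render resolution before upscaling to out_w x out_h."""
    factor = SCALE_FACTORS[mode]
    # Assumption: round to nearest pixel; actual SDKs may round differently.
    return round(out_w / factor), round(out_h / factor)


for name, (w, h) in {"4K": (3840, 2160), "1440p": (2560, 1440)}.items():
    for mode in SCALE_FACTORS:
        iw, ih = internal_resolution(w, h, mode)
        print(f"{name} {mode}: renders at about {iw}x{ih}")
```

So 4K Quality starts from a 1440p image, while 1440p Performance starts from 720p, which goes some way to explaining why the lower modes have so much less to work with.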
