davis.anthony




I don't know about ray tracing and the 7700X, but my 2700 at 3.7 GHz holds up pretty well in this title without all that stuff. The 4090 is sitting on its laurels, I guess.

I wish Eurogamer had also done a technical review. I'm gonna miss that a lot, 'cos I like their comparisons of native rendering vs. pixel reconstruction techniques and so on, and I wanted to know how this game behaves with XeSS and stuff like that.

I have a good ol' 3700X, and I wonder why AMD didn't improve single-thread performance more with the new 7700X. I also wish there were a demo so I could test performance myself. According to this guy, the game runs perfectly on a CPU very similar to mine (the 3900X) paired with the A770 (which is listed in the recommended settings).

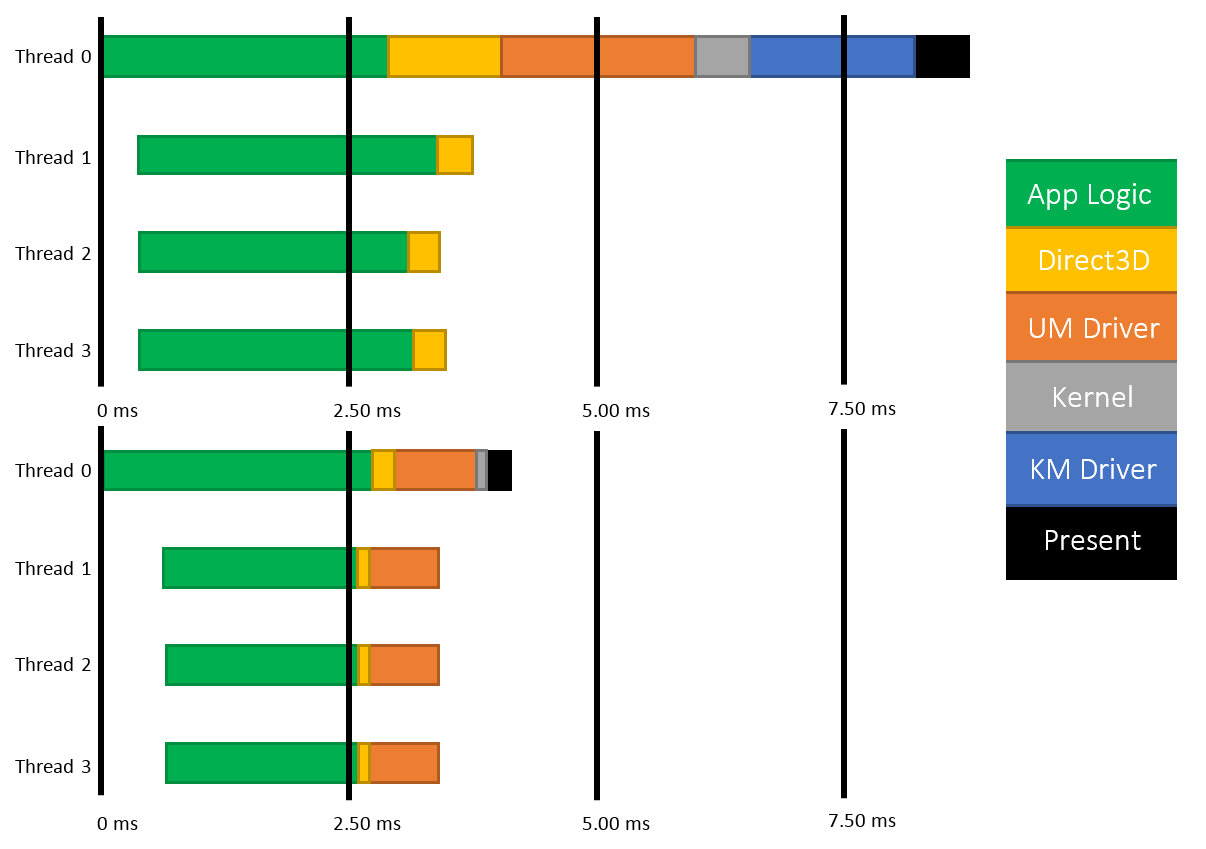

The 3700X ... only 20% slower than the 7700X or a monster like the 13900K? Something doesn't compute here; the difference should be much bigger.

Guru3D ran an IPC test with all CPUs locked at 3.5 GHz.


The 7700X and 13900K clock higher: 5 GHz and above, IIRC. Yours runs at around 4-4.2 GHz, IIRC. IPC improvements on the CPU side have indeed been pathetic for the last 10 years. Even a mere 20% improvement is treated as "holy shit, 20%???". While GPUs get 2x improvements in just 2 years, we can't even get a 2x IPC improvement over 5-7 years. I guess this is why NVIDIA eventually said "damn it" and came up with frame generation. They're aware that CPUs won't be able to keep up with their GPUs, especially on such a convoluted platform where CPU-bound optimizations are nonexistent.
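As a rough rule of thumb, single-thread performance scales with IPC × clock frequency. This is a simplification that ignores memory latency, cache size and boost behaviour, and it is also why a clock-locked test like Guru3D's isolates IPC: with equal clocks, the clock terms cancel. The sketch below uses purely hypothetical IPC and clock figures, not measured values for any of the CPUs mentioned here.

```python
def relative_perf(ipc_a: float, clock_a_ghz: float,
                  ipc_b: float, clock_b_ghz: float) -> float:
    """Return CPU A's single-thread performance relative to CPU B
    under the simplified model perf ~ IPC x clock."""
    return (ipc_a * clock_a_ghz) / (ipc_b * clock_b_ghz)


# Hypothetical numbers: A has 1.3x the IPC of B and runs at 5.0 GHz vs 4.2 GHz.
speedup = relative_perf(ipc_a=1.3, clock_a_ghz=5.0, ipc_b=1.0, clock_b_ghz=4.2)
print(f"A is {speedup:.2f}x as fast as B")  # A is 1.55x as fast as B

# With both CPUs locked to the same clock (as in a clock-normalized IPC test),
# only the IPC ratio remains.
locked = relative_perf(ipc_a=1.3, clock_a_ghz=3.5, ipc_b=1.0, clock_b_ghz=3.5)
print(f"At equal clocks: {locked:.2f}x")  # At equal clocks: 1.30x
```

If a game benchmark shows a much smaller gap than this product predicts, the game is probably limited by something other than raw per-core speed, such as the GPU, memory, or how the engine spreads its work across threads.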

The real improvement here is also multi-core performance.

I only wish more games exploited the vast power of multi-core CPUs. It seems we've almost regressed in that regard, with Gotham Knights and Hogwarts Legacy being heavily single-threaded and scaling poorly across multiple threads. The argument that it is hard to make an engine heavily multithreaded always pops up in such discussions, so I'm aware of the limitations as well. I wish whatever Doom Eternal is doing were the standard. It's one of the rare AAA games where my 2700 can average above 200 fps at low resolutions while gracefully utilizing tons of threads. Then again, I'd like to see how that engine fares in an actual open-world game too.

The X3D performance is a bit odd. I really expected it to be much higher, but maybe that will also only show up in multithreaded applications.

Well, let's just say Doom has almost no AI. Doom is filled with "random" enemies that have just one goal: to kill the player. There is nothing in the world much more complex than that, so the developers can concentrate purely on the graphics pipeline. In games like Hogwarts Legacy it is a bit more complex, even if most NPCs and the like are just scripted. The open-world setting of that game doesn't make it any easier either. But in the end, it has a lot to do with development time and how experienced the developers are.

Making something single-threaded is much, much easier and less bug-prone than making it multithreaded. This is also one reason why Node.js, with its single-threaded event loop, is so successful: in multithreaded code it is just too easy to forget that other threads can change a resource you are working with. I only develop business logic, but even there multithreading can be a nightmare when you need to analyze buggy behavior.
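A minimal illustration of the "another thread changed my resource" problem is a shared counter. The sketch below is Python purely for illustration: `counter.value += 1` is a read-modify-write, so two threads can read the same old value and one update gets lost. Under CPython's GIL the unsafe version may or may not lose updates on any given run, which is exactly what makes this kind of bug so hard to reproduce and analyze. The class and function names are hypothetical.

```python
import threading

ITERATIONS = 100_000
NUM_THREADS = 4


class Counter:
    def __init__(self) -> None:
        self.value = 0
        self._lock = threading.Lock()

    def add_unsafe(self) -> None:
        # Read-modify-write without a lock: another thread can interleave
        # between the read and the write, so an increment may be lost.
        self.value += 1

    def add_safe(self) -> None:
        # Holding the lock makes the read-modify-write exclusive.
        with self._lock:
            self.value += 1


def hammer(method_name: str) -> int:
    """Run NUM_THREADS threads that each call the given Counter method
    ITERATIONS times, then return the final counter value."""
    counter = Counter()
    method = getattr(counter, method_name)

    def worker() -> None:
        for _ in range(ITERATIONS):
            method()

    threads = [threading.Thread(target=worker) for _ in range(NUM_THREADS)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return counter.value


print("unsafe:", hammer("add_unsafe"))  # may come out below 400000
print("safe:  ", hammer("add_safe"))    # always 400000
```

The locked version is always correct, but every increment now serializes on one lock, which is a small-scale version of why "just add threads" rarely scales for free.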

So it's not only the i7-9900 that is limiting the 4090; a 7700X is just as bad.

It's great to be a PC gamer. Maybe Microsoft should use those $69 billion to design an API that isn't as broken as DX12...

Surely it's ultimately up to the engine, not the API, to schedule work appropriately?


I blame the mid-2000s, when quad cores had just hit the market and the PR was all about spreading everything across 'x' CPU cores. It gave people the wrong expectations about what can and can't scale across multiple cores, which is why people now expect everything to fully load their 24-core CPU.

I think it's also worth mentioning that certain things just do not scale very well over multiple cores.
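The usual way to quantify this is Amdahl's law: if a fraction p of a workload can run in parallel, the best-case speedup on n cores is S(n) = 1 / ((1 - p) + p/n). The minimal sketch below assumes the parallel part scales perfectly with zero synchronization overhead, so real engines will do worse than these numbers.

```python
def amdahl_speedup(parallel_fraction: float, cores: int) -> float:
    """Best-case speedup on `cores` cores when only `parallel_fraction`
    of the work can run in parallel (Amdahl's law)."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be in [0, 1]")
    if cores < 1:
        raise ValueError("cores must be >= 1")
    serial = 1.0 - parallel_fraction
    return 1.0 / (serial + parallel_fraction / cores)


# Speedup on 4, 8 and 24 cores for increasingly parallel workloads.
for p in (0.5, 0.8, 0.95):
    print(p, [round(amdahl_speedup(p, n), 2) for n in (4, 8, 24)])
```

Even with 95% of the work parallelized, 24 cores give only about an 11x speedup, and with half of it serial the ceiling is 2x no matter how many cores you add.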

devblogs.microsoft.com


Because DX12 demands optimization for every architecture and requires developers to build a proper multithreaded renderer on their own, without the help of the DX11 driver. This is the job of the developer. But this should never be an issue on the PC platform. What we are seeing now are unoptimized console ports that run worse than Battlefield 5 from 2018, which I can play at over 170 FPS with ray tracing on my 13600K. So there is obviously something very wrong with these games: they can't even do proper ray tracing, yet they run so much worse than a four-year-old game that was the first to implement real-time ray tracing.

Why do people keep blaming DX12? It's just an API.

Have they ever considered that it's the developers who are responsible for making sure it works as it should?

We do, after all, have some good DX12 implementations, so it can't be down to DX12 alone.



No, it is a DX12 problem, because this is enforced by DX12 itself.

What you've described is a developer issue and not DX12 itself.
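On the "MT renderer" point: in D3D12 the application, not the driver, decides how command recording is spread across threads. A common pattern is that each worker thread records its own command list (with its own command allocator), and the finished lists are then submitted in a fixed order on a queue via `ExecuteCommandLists`. The Python sketch below only mirrors that structure; `CommandList`, `record_chunk`, `record_frame` and `submit` are hypothetical stand-ins, not a real D3D12 binding, and under CPython's GIL these threads won't give true CPU parallelism.

```python
from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass, field


@dataclass
class CommandList:
    """Hypothetical stand-in for a per-thread command list (NOT real D3D12)."""
    name: str
    commands: list[str] = field(default_factory=list)

    def draw(self, obj: str) -> None:
        self.commands.append(f"draw {obj}")


def record_chunk(index: int, objects: list[str]) -> CommandList:
    # Each worker records into its own list, so no locking is needed here.
    cl = CommandList(name=f"chunk{index}")
    for obj in objects:
        cl.draw(obj)
    return cl


def record_frame(objects: list[str], workers: int) -> list[CommandList]:
    # Split the scene into contiguous chunks, roughly one per worker.
    size = max(1, -(-len(objects) // workers))  # ceiling division
    chunks = [objects[i:i + size] for i in range(0, len(objects), size)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # map() yields results in input order, keeping submission deterministic.
        return list(pool.map(record_chunk, range(len(chunks)), chunks))


def submit(queue: list[str], lists: list[CommandList]) -> None:
    # Stand-in for one in-order submission of all recorded lists.
    for cl in lists:
        queue.extend(cl.commands)


scene = [f"mesh{i}" for i in range(10)]
gpu_queue: list[str] = []
submit(gpu_queue, record_frame(scene, workers=4))
print(gpu_queue[:3])  # ['draw mesh0', 'draw mesh1', 'draw mesh2']
```

The design choice that matters is that recording is parallel while submission stays ordered and single-threaded; getting that split right (plus synchronization and resource lifetimes) is the work DX11 drivers used to do behind the scenes.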