Current Generation Games Analysis Technical Discussion [2023] [XBSX|S, PS5, PC]

- Thread starter: BRiT

- Status: Not open for further replies.

Flappy Pannus

Veteran

@defghik They should probably have options to turn off vignette, depth of field and lens distortion. I can see why Remedy would not want those changed, as they're artistic choices for the game, but they do allow you to turn off film grain, so I'm not sure why it's inconsistent. Field of view is probably narrow as it's a horror game of sorts. Maybe performance too, but I think they probably wanted a more up-close view to add to the jump scares and "scary" elements. Seems like they should have offered an in-game sharpening slider too.

They should, but those also shouldn't cause severe artifacting with reconstruction if they're implemented properly, unless it's a limitation of the engine preventing this. The severe aliasing you get around specular edges moving through the world is exactly what you see in older games that got DLSS patches when you enable motion blur/DoF; later games, though, work fine with those when they're implemented post-reconstruction. Put the post in postprocess, people.
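A toy way to see why the ordering matters: temporal upscalers such as DLSS and FSR 2 blend each new frame into an accumulated history, so anything applied before that step gets treated as scene content and filtered along with it. The sketch below is deliberately crude and rests on assumptions: a single static grey pixel, per-frame film grain as the "post" effect, and a plain exponential moving average standing in for the upscaler's history blend (real upscalers also use jitter, motion vectors and history rejection, none of which is modelled). `simulate` and its parameters are hypothetical names.

```python
import random
import statistics


def simulate(grain_before_reconstruction: bool, frames: int = 5000,
             alpha: float = 0.1, grain_strength: float = 1.0, seed: int = 0) -> float:
    """Return the variance over time of one output pixel.

    Toy model (assumption): the scene is a constant 0.5 grey pixel, the post
    effect is per-frame Gaussian film grain, and temporal reconstruction is an
    exponential moving average with blend factor `alpha`.
    """
    rng = random.Random(seed)
    history = 0.5
    outputs = []
    for _ in range(frames):
        scene = 0.5
        grain = rng.gauss(0.0, grain_strength)
        if grain_before_reconstruction:
            # Grain enters the accumulator as if it were real scene detail.
            history = (1 - alpha) * history + alpha * (scene + grain)
            outputs.append(history)
        else:
            # Reconstruct the clean scene first, then add grain on top.
            history = (1 - alpha) * history + alpha * scene
            outputs.append(history + grain)
    return statistics.pvariance(outputs)


pre = simulate(grain_before_reconstruction=True)
post = simulate(grain_before_reconstruction=False)
print(f"grain before reconstruction: variance {pre:.3f}")
print(f"grain after reconstruction:  variance {post:.3f}")
```

With `alpha = 0.1`, grain fed through the accumulator should come out with roughly alpha / (2 - alpha), about 5%, of its original variance, i.e. it gets smeared away, while grain added after reconstruction keeps its full strength.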

From the quote, it sounded more like they made the image blurrier. The lens distortion could do that for sure. I don't know how sophisticated lens distortion normally is, whether it's just warping or whether they're actually simulating a type of film lens. There are film lenses that are intentionally kind of weird looking.

This is one of the lenses that was used in the filming of The Batman... I think it was mounted to the car during the chase scenes, but they had other anamorphic lenses with the same character.
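On the question of how sophisticated lens distortion is: the simplest form is a screen-space warp that resamples the image at distorted coordinates, and resampling at fractional positions blends neighbouring pixels. A minimal sketch, assuming a single-coefficient radial model (x_src = x * (1 + k1 * x²)) applied to one row of pixels with linear interpolation; how Remedy actually implements it isn't known from this thread, and `radial_warp_row` and `k1` are illustrative names.

```python
from typing import List


def radial_warp_row(row: List[float], k1: float) -> List[float]:
    """Resample one row of pixels through a radial distortion x_src = x * (1 + k1 * x**2).

    Coordinates are normalised to [-1, 1] around the row's centre; source
    positions are clamped to the row and sampled with linear interpolation.
    """
    n = len(row)
    if n < 2:
        return list(row)
    out = []
    for i in range(n):
        x = 2 * i / (n - 1) - 1            # normalised output coordinate
        xs = x * (1 + k1 * x * x)          # distorted source coordinate
        fs = (xs + 1) * (n - 1) / 2        # back to (fractional) pixel units
        fs = min(max(fs, 0.0), n - 1)      # clamp to the row
        i0 = int(fs)                       # floor, since fs >= 0
        i1 = min(i0 + 1, n - 1)
        t = fs - i0
        # Linear interpolation: any fractional t mixes two neighbouring pixels.
        out.append((1 - t) * row[i0] + t * row[i1])
    return out


stripes = [0.0, 1.0] * 8                   # one-pixel black/white detail
print([round(v, 2) for v in radial_warp_row(stripes, k1=0.1)])
```

On one-pixel stripes, a non-zero `k1` produces intermediate grey values where there used to be pure black and white. That is one plausible mechanism for a warp softening fine detail, though a real implementation may use better filtering.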

gamervivek

Regular

FWIW, TechPowerUp's conclusion supports this theory.

"The settings menu of Alan Wake 2 has a long list of options for performance tuning, but there will be a ton of drama around the forced upscaling. Yup, you can't render at native. There's only options for "DLSS" and "FSR"—nothing else. Both upscalers come with the option to render at native resolution, but will still use the image enhancement techniques of the upscaler. Not sure why Remedy made such a choice—it will just antagonize players. What makes things worse is that the sharpening filter that's part of both upscalers is disabled, but can be enabled manually with a config file edit. Still, we want native, just as an option, even if it comes with a performance hit. The good thing is that you can enable this in settings manually, by changing m_eSSAAMethod to 0 or 1. During gaming, even with DLAA enabled, and Motion Blur and Film Grain disabled, I noticed that at sub-4K resolutions the game looks quite blurry, like there was a hidden upscaler at work. I played with all the settings options—no improvement. After digging through the config file I noticed that there's several important settings that aren't exposed in the settings menu, no idea why. Once I set m_bVignette, m_bDepthOfField and m_bLensDistortion to "false," the game suddenly looked much clearer. If you plan on playing Alan Wake 2 definitely make those INI tweaks manually. You can also change the field of view here (m_fFieldOfViewMultiplier). I found the default too narrow and prefer to play with a setting of 1.3."

Alan Wake 2 Performance Benchmark Review - 30 GPUs Tested

Alan Wake 2 is out soon, with incredible graphics, but demanding hardware requirements, too. There's forced DLSS/FSR, but we show you how to tweak the config files to get native back. In our performance review, we're taking a closer look at image quality, VRAM usage, and performance on a wide… (www.techpowerup.com)

Had to do this with Far Cry 6 using a mod. It's not as bad at 4K, but at 1080p the image looked drastically cleaner with all these 'enhancements' turned off.
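For anyone who'd rather script the TechPowerUp tweaks than edit by hand, here is a minimal sketch. It assumes the config file is plain JSON (an assumption; check your own file first) and only touches the keys named in the quote, using the reviewer's values. The file location is left as a parameter, and `TWEAKS`, `apply_tweaks` and `patch_file` are hypothetical names. `m_eSSAAMethod` is left out because the quote doesn't say which of 0 or 1 maps to which mode.

```python
import json
import sys
from pathlib import Path

# Keys named in the TechPowerUp quote, with the values the reviewer used.
# Assumption: these keys already exist in the config under exactly these names.
TWEAKS = {
    "m_bVignette": False,
    "m_bDepthOfField": False,
    "m_bLensDistortion": False,
    "m_fFieldOfViewMultiplier": 1.3,
}


def apply_tweaks(config: dict, tweaks: dict = TWEAKS) -> dict:
    """Return a copy of `config` with `tweaks` applied; keys not already present are skipped."""
    updated = dict(config)
    for key, value in tweaks.items():
        if key in updated:
            updated[key] = value
        else:
            print(f"warning: {key} not found, skipping it", file=sys.stderr)
    return updated


def patch_file(path: Path) -> None:
    """Back up `path` to `<name>.bak`, then rewrite it with the tweaks applied (assumes plain JSON)."""
    original = path.read_text(encoding="utf-8")
    path.with_name(path.name + ".bak").write_text(original, encoding="utf-8")
    patched = apply_tweaks(json.loads(original))
    path.write_text(json.dumps(patched, indent=4), encoding="utf-8")
```

Call `patch_file(Path("<your config path>"))` with your own config location. Missing keys are skipped with a warning rather than added, so a wrong guess about key names can't silently create new entries.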

DavidGraham

Veteran

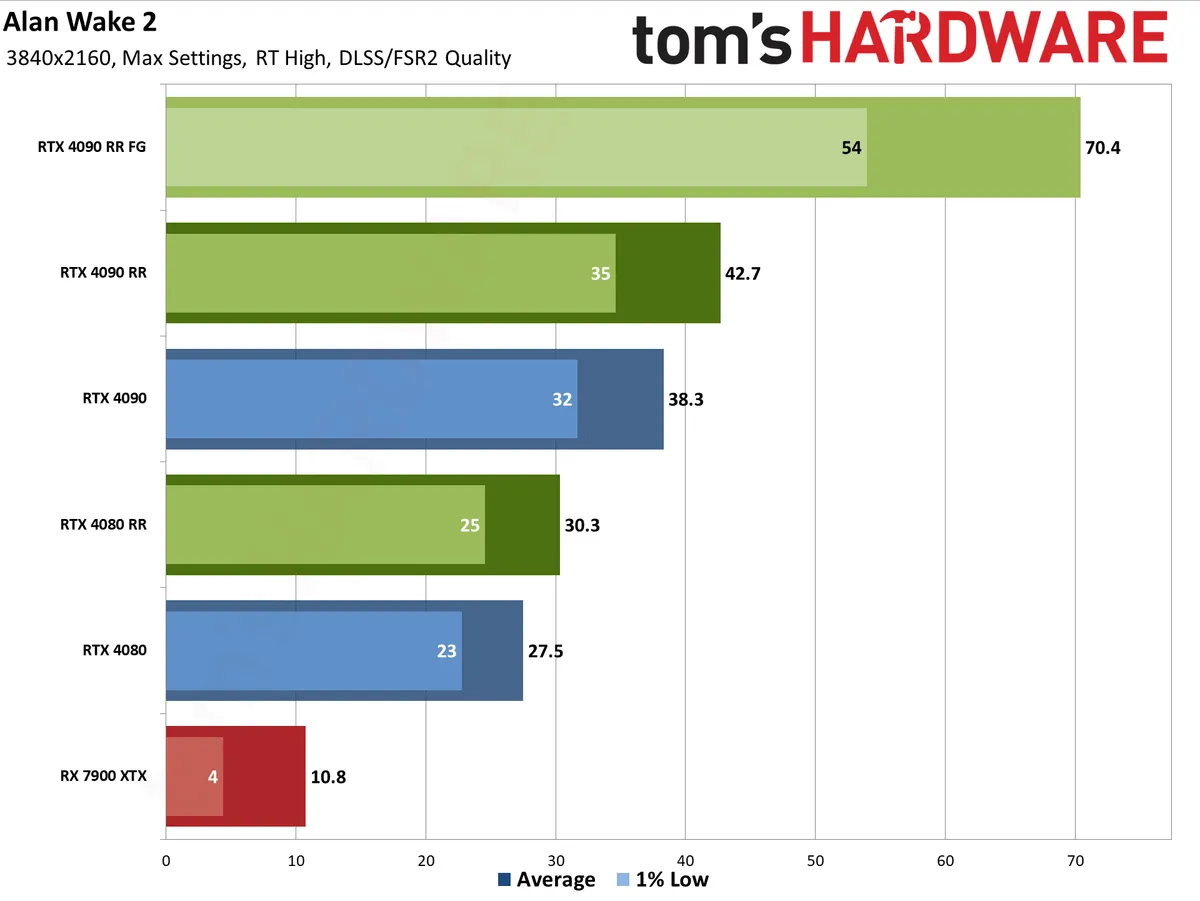

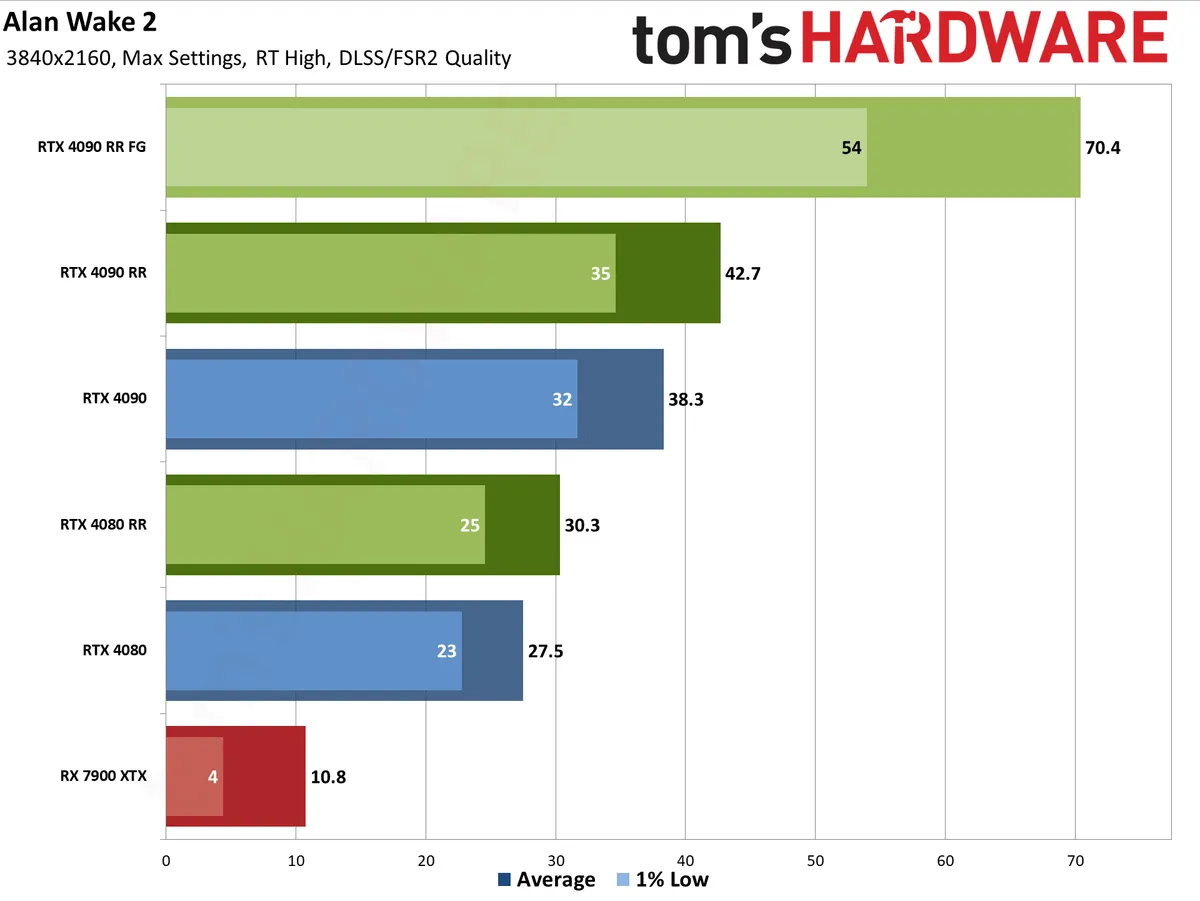

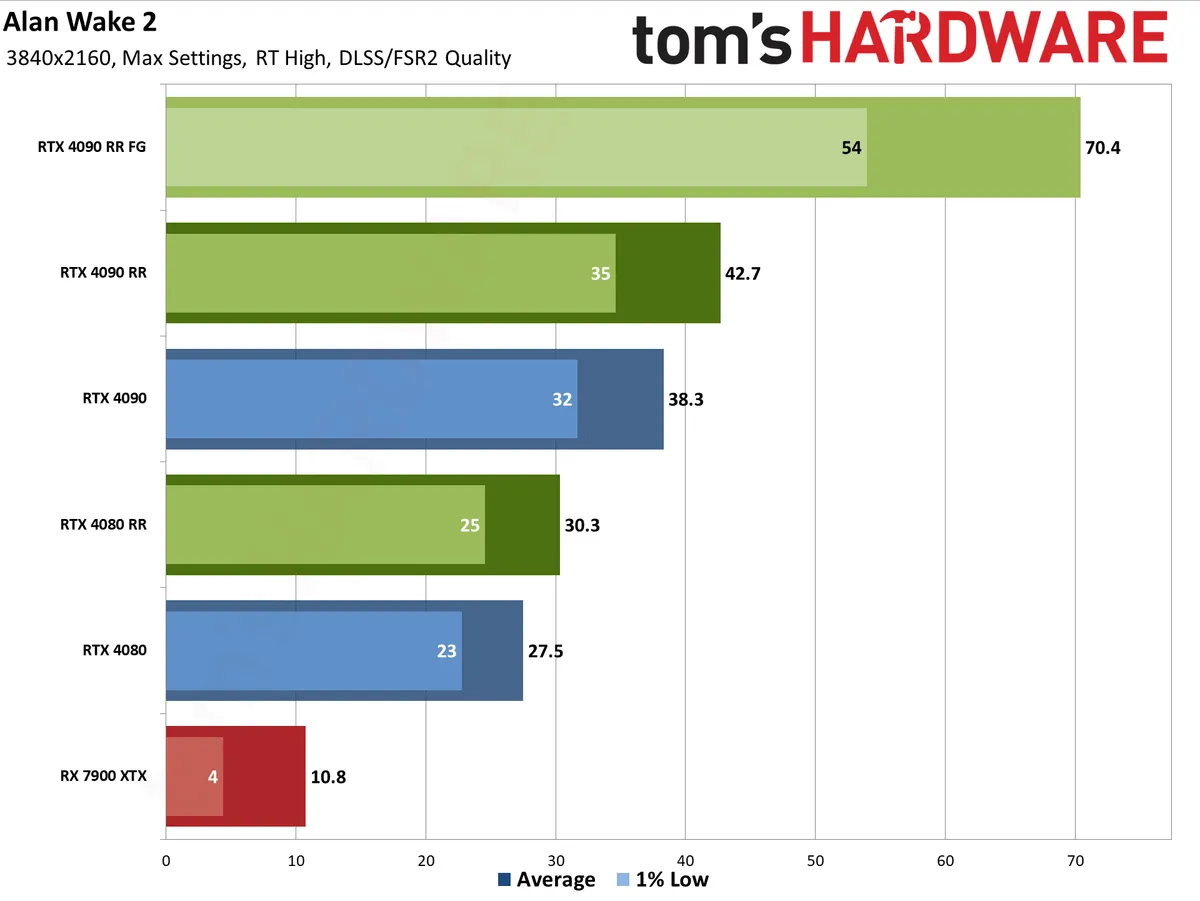

New Alan Wake 2 tests from GameGPU using a foliage-heavy area and path tracing. The 4080 alone is 3x faster than the 7900 XTX at 2160p and 1440p.


Alan Wake 2: PC Performance Benchmarks for Graphics Cards and Processors | Action / FPS / TPS | GPU TEST

We tested Alan Wake 2 at the highest graphics settings, on video cards from the GEFORCE RTX and RADEON RX series. Also, during the tests we conducted, we assessed the performance and quality of the… (gamegpu.tech)

Edit: same results from TomsHardware too.

Testing Alan Wake 2: Full Path Tracing and Ray Reconstruction Will Punish Your GPU

The first game to launch with full ray tracing support. (www.tomshardware.com)

Series X performance mode is far more stable than PS5. Visually, as far as I can tell on my tablet, they both look identical.

Flappy Pannus

Veteran

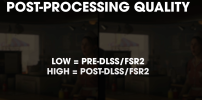

This is Quality mode:

Man alive. It almost looks like they're not doing post-processing after reconstruction

And indeed they aren't. From Alex's video:

I can always recognize when post processing isn't really post.

Welp. It looks like unless AMD adds some tensor-like hardware and more performant RT cores while adopting ML-based super resolution and frame generation, its discrete PC GPU business is going to be non-existent.

This is bound to get worse as more and more AW2-like titles are released.

Flappy Pannus

Veteran

So, I nabbed a copy of AW2 just to see how it would run/walk on my 3060, and for the most part, without hardware RT and using PS5 settings, I could probably get 60fps at 1440p with DLSS Performance mode... except it has wonky vsync, at least with a 60Hz display. What happens is that GPU usage bounces between the ~80% range and 95%, losing 5-15fps compared to vsync off, depending on the scene. The only way to get a locked 60fps would be to choose graphics settings that keep me from ever going much above 80% GPU usage, in other words, make it look awful.

It's especially annoying when DX12 games mess up their vsync, as with DX12 there's really no way to fix it; not even Special K with forced Reflex works here, and that's about the only way you can mess with frame pacing in DX12 games. Control had two issues with vsync on fixed-refresh displays as well. In DX11 it has a hard double-buffer implementation, so it drops to 30 the millisecond you go below 60, so Fast Sync to the rescue (also annoying how often that needs to be used, but at least it works)! But... the game's loading speed is tied to the framerate in DX11 and is far worse with any kind of cap (which you need with Fast Sync), so you get 3x slower loading. DX12 mode didn't have this deficiency, but instead has a microstutter problem in the corners of rooms at 60Hz. So hopefully this gets fixed, but frankly I'm a little pessimistic that Remedy devotes much time to testing these on fixed-refresh displays.
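The "drops to 30 the millisecond you go below 60" behaviour is frame-time quantization. Here is a simplified model that assumes strict double buffering on a fixed-refresh display and ignores CPU queueing and render-ahead. A frame that misses a refresh has to wait for the next one, so every displayed frame lasts a whole number of refresh intervals. `double_buffered_fps` is a hypothetical helper name.

```python
import math


def double_buffered_fps(render_ms: float, refresh_hz: float = 60.0) -> float:
    """Effective frame rate when each finished frame must wait for the next vblank.

    Simplified model of strict double-buffered vsync: the GPU can't start the
    next frame until the current one has been flipped, so a frame that misses
    a refresh is held until the following one.
    """
    interval_ms = 1000.0 / refresh_hz
    refreshes_per_frame = max(1, math.ceil(render_ms / interval_ms))
    return refresh_hz / refreshes_per_frame


for render_ms in (15.0, 16.0, 17.0, 25.0, 34.0):
    print(f"{render_ms:5.1f} ms render -> {double_buffered_fps(render_ms):.0f} fps")
```

A 17 ms frame, barely over the ~16.7 ms budget, therefore shows up at 30fps rather than ~59fps. Fast Sync avoids the halving by letting the GPU keep rendering and presenting the newest completed frame at each refresh, at the cost of less even frame pacing.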

Yeah, I know, I'm in a minority of PC users that still use non-VRR displays, so it's not surprising this isn't a priority. But considering that technical reviews compare the PC versions against their console counterparts, often in the context of seeing what settings get you that comparable 60fps target, it seems vsync implementations could get at least a cursory glance; potential frame pacing issues with it seem to have fallen by the wayside compared to several years ago. Death Stranding was another one: I only found out after purchase that it has an unfixable, fucked up intermittent frame pacing issue at 60Hz too (albeit I think that affects VRR displays as well). It's rare that I ever find out about these deficiencies other than through first-hand experience.

So I guess it's the PS5 version, or Linux (thankfully, Vulkan under Proton does fix Control's DX12 microstutter; dual booting to get frame pacing working properly is a hassle, but at least it's possible. Alas, Death Stranding remains my white microstuttering whale).

Path tracing in AAA games is not going to become some critical thing anytime soon. It'll be an optional setting for those with ultra high-end rigs. It's largely unusable, or at least certainly not worth the performance cost, for the large majority of RTX users as well.

This isn't bound to get worse as more and more AW2-like titles are released.

Considering what the Lead Lighting Artist said about the Fable trailer ("This is the game captured on a Series X."), I still say that with a properly written engine, much more can be achieved on the current consoles. It probably requires a lot of manual code, but the results speak for themselves. The graphics shown in the Fable trailer are better than anything currently on the market, and the Series X is enough for that... Thought provoking.

How about we wait until the game is out?

What's the point of that comparison? Planar reflections, done by rendering a second viewport or doubling geometry, are an effective hack for particular situations, but they're limited like that.
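For reference, the "second viewport" approach boils down to rendering the scene again from a camera mirrored across the reflective plane. Below is a minimal sketch of just the mirroring step, with the plane written as dot(n, x) + d = 0 for a unit normal n. The helper names are hypothetical. A real renderer would also need an oblique near plane (or a user clip plane) to cut away geometry on the far side of the mirror, plus flipped triangle winding, because a reflection inverts handedness.

```python
from typing import Tuple

Vec3 = Tuple[float, float, float]


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def reflect_point(p: Vec3, n: Vec3, d: float) -> Vec3:
    """Mirror a position across the plane dot(n, x) + d = 0 (n must be unit length)."""
    dist = dot(n, p) + d  # signed distance from p to the plane
    return (p[0] - 2 * dist * n[0], p[1] - 2 * dist * n[1], p[2] - 2 * dist * n[2])


def reflect_direction(v: Vec3, n: Vec3) -> Vec3:
    """Mirror a direction (e.g. forward/up vectors); no plane offset applies."""
    k = 2 * dot(n, v)
    return (v[0] - k * n[0], v[1] - k * n[1], v[2] - k * n[2])


def mirrored_camera(eye: Vec3, forward: Vec3, up: Vec3, n: Vec3, d: float):
    """Return (eye, forward, up) for the reflection pass's camera."""
    return reflect_point(eye, n, d), reflect_direction(forward, n), reflect_direction(up, n)


# Example: a floor/water plane at y = 0 (normal +Y, d = 0).
eye, fwd, up = mirrored_camera((1.0, 2.0, -5.0), (0.0, -0.6, 0.8), (0.0, 1.0, 0.0),
                               (0.0, 1.0, 0.0), 0.0)
print(eye, fwd, up)
```

Every extra mirror plane means another full scene render from its own mirrored camera. That is where the cost comes from, and why the trick only covers flat surfaces.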

Flappy Pannus

Veteran


There was a cheap and easy way to add accurate reflections to every surface before, modern devs just want their games to look worse. That of course is the only logical conclusion.


But it's not cheap? That's why it was abandoned.

Flappy Pannus

Veteran


I'd make the sarcasm heavier but I'd pull a muscle

The end result, though, ranged from pretty satisfactory to just perfect. It's been so long that I can't remember if they ever managed to replicate a transparent reflection using this technique (like seeing your reflection on a window and, at the same time, what's behind it). I am checking MGS2's tanker section where Snake is fighting Olga, and it is just outstanding how well it mimics the result of RT and looks so clean at the same time.

But I guess RT is much easier to apply in a heavily reflective environment, and it gives full control over how surfaces of different materials and attributes should display their reflections. I can't imagine the artists going through every puddle or reflective surface and trying to manually duplicate certain sections with transparencies, with all the unpredictable particles and effects showing up in the pseudo-reflection. But in principle it's kind of the same thing that RT does, without the crazy manual work and with better handling of materials.

But in principle RT does the same thing as the old school technique without the manual work.
