HBRU

Regular

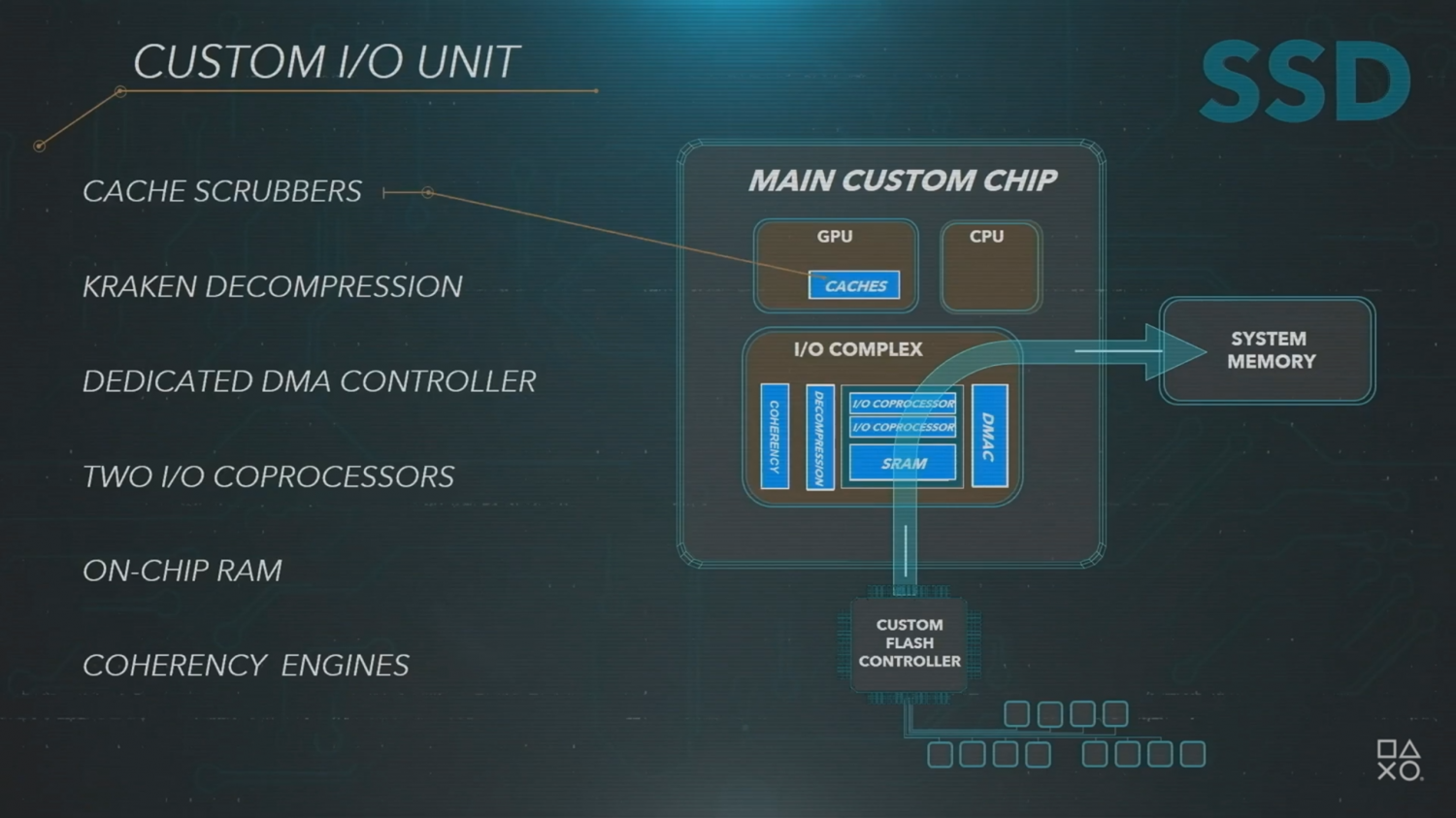

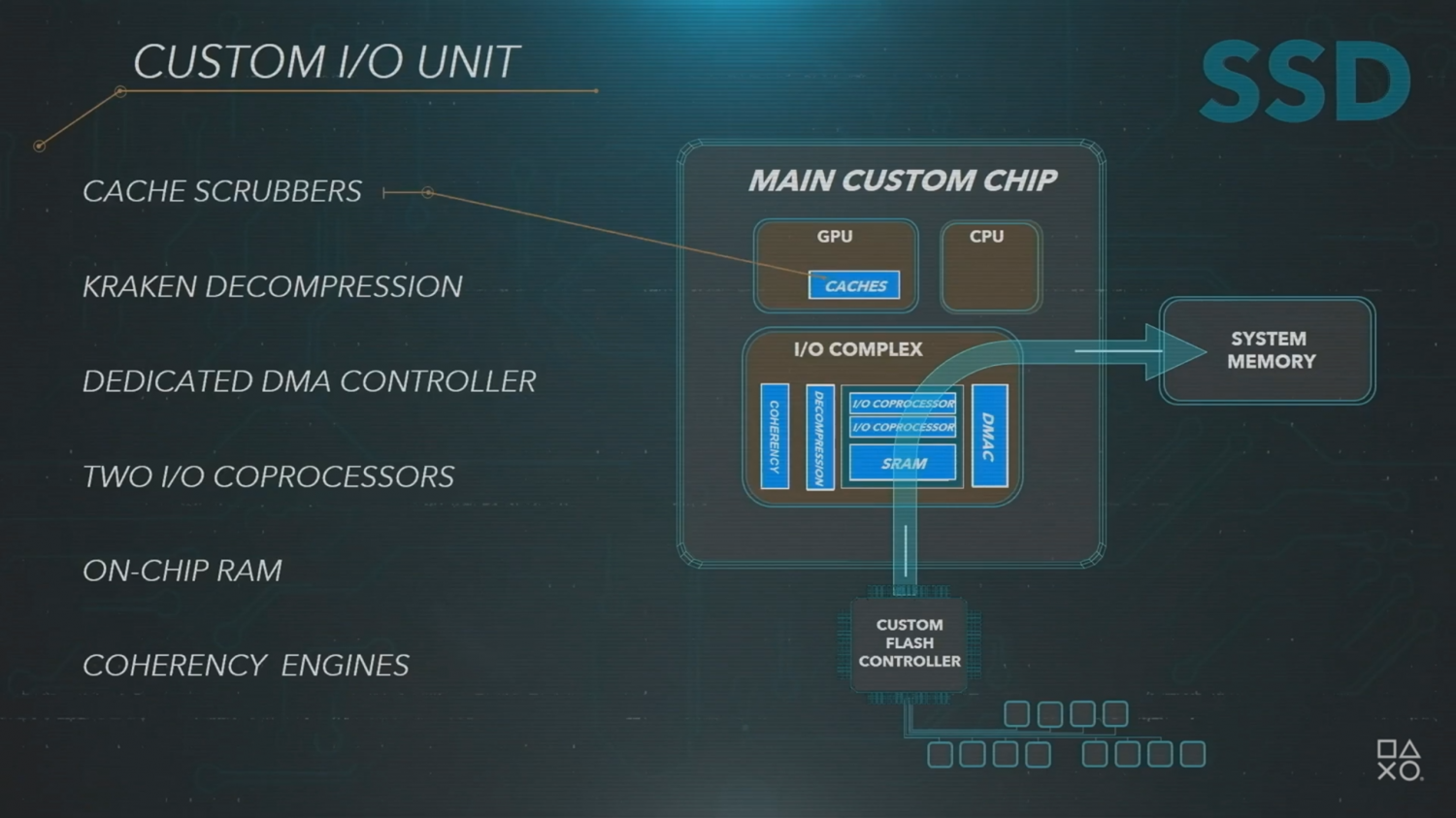

So the CPU and GPU on PS5 are discrete chips? I thought it was an APU.

This is interesting: the IO die is much bigger than the GPU + CPU.


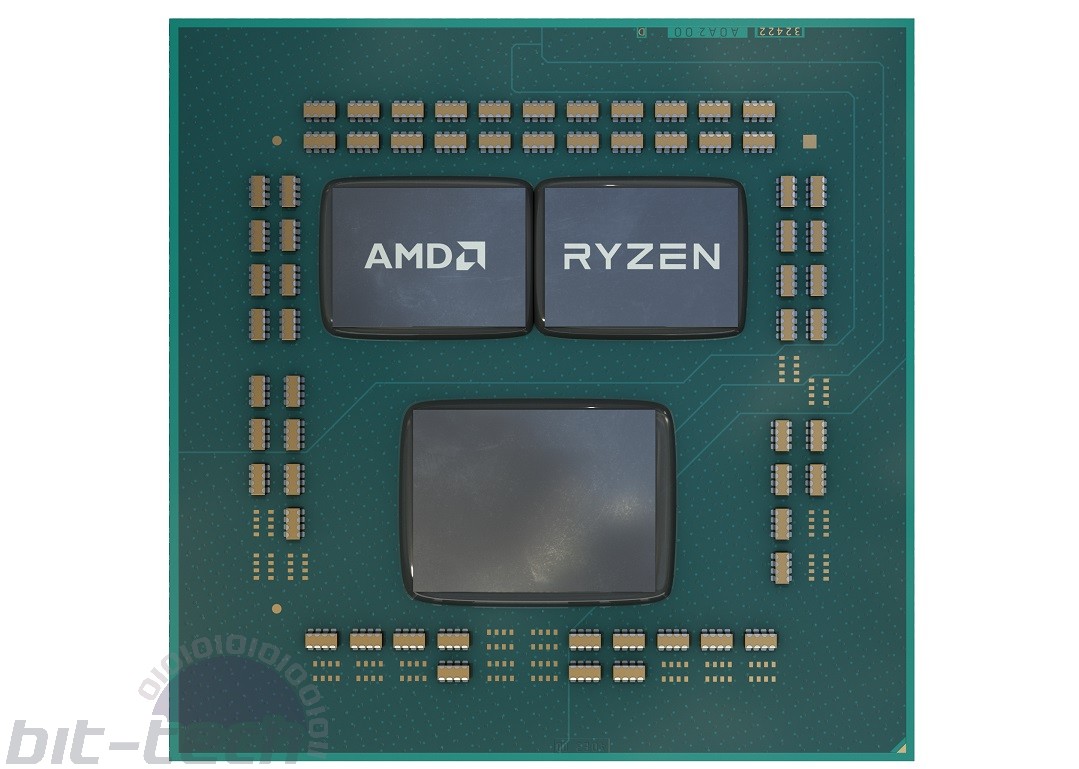

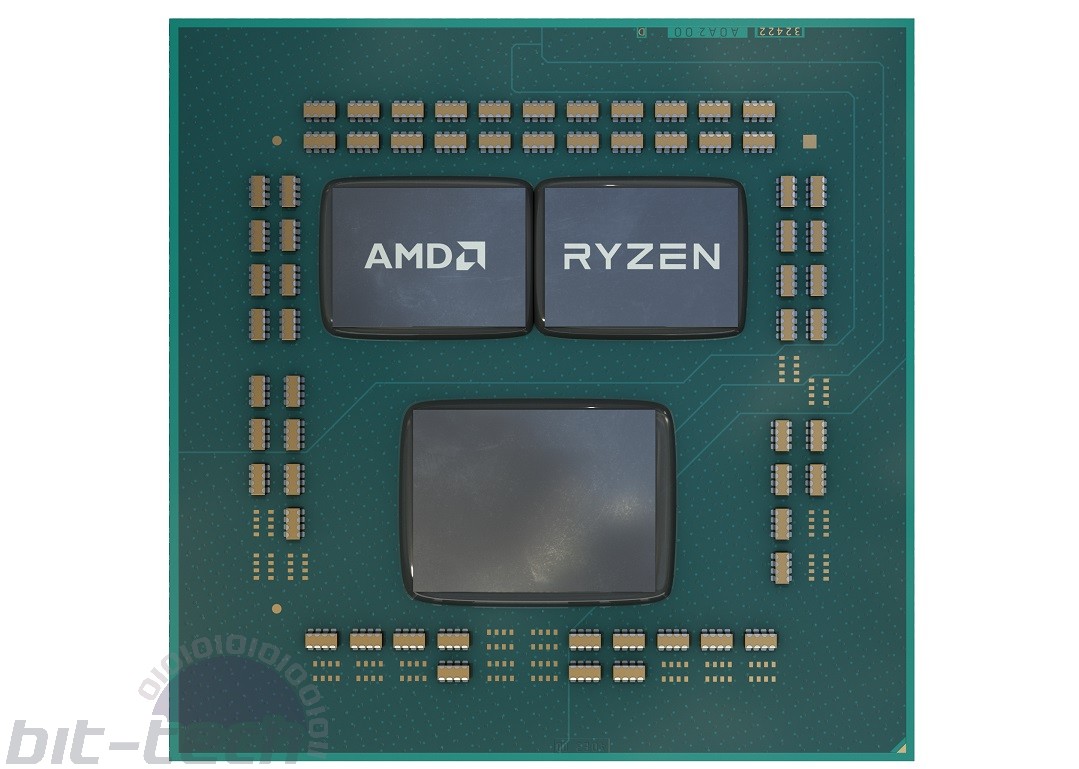


AMD chiplet design.

They probably would have had better results (and an easier life) with 18 x 3 = 54 CUs at a fixed max frequency of 2 x 911 ghz... I really don't understand why they went so narrow and fast.
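For reference, peak FP32 throughput on an RDNA-style GPU is commonly computed as CUs × 64 shader lanes × 2 FLOPs per clock (one fused multiply-add) × clock. A minimal sketch, assuming that formula and the publicly quoted PS5 configuration of 36 CUs at 2.23 GHz, of the clock a hypothetical 54 CU part would need to reach the same peak:

```python
# Peak FP32 throughput for an RDNA-style GPU, assuming 64 shader lanes
# per CU and 2 FLOPs per lane per clock (one fused multiply-add).
LANES_PER_CU = 64
FLOPS_PER_LANE_PER_CLOCK = 2


def peak_tflops(compute_units: int, clock_ghz: float) -> float:
    """Peak FP32 TFLOPS for a CU count and a clock in GHz."""
    return compute_units * LANES_PER_CU * FLOPS_PER_LANE_PER_CLOCK * clock_ghz / 1000.0


def clock_for_target(compute_units: int, target_tflops: float) -> float:
    """Clock in GHz needed for compute_units CUs to reach target_tflops."""
    return target_tflops * 1000.0 / (compute_units * LANES_PER_CU * FLOPS_PER_LANE_PER_CLOCK)


if __name__ == "__main__":
    narrow = peak_tflops(36, 2.23)                 # PS5's quoted configuration
    wide_clock = clock_for_target(18 * 3, narrow)  # hypothetical 54 CU part
    print(f"36 CU @ 2.23 GHz: {narrow:.2f} TF")
    print(f"54 CU needs about {wide_clock:.2f} GHz for the same peak")
```

Under these assumptions a 54 CU part reaches the same ~10.28 TF at roughly 1.49 GHz. The arithmetic says nothing about die area, cost or yield, which also weigh on the wide-versus-narrow choice.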

PC gamers welcome these techniques.

Yes, but on PS5 it's the other way around: they state max clocks instead of min. A 2080 Ti is listed as a 13.5TF part, but if conditions allow, it will boost higher, not lower.

With PS5, they state 10.2TF, but it can be lower. This wouldn't work out well with PC gamers.
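The asymmetry can be made concrete with the same peak-throughput arithmetic (lanes × 2 FLOPs per clock × clock). A sketch, assuming the RTX 2080 Ti reference figures of 4,352 CUDA cores, 1,350 MHz base and 1,545 MHz boost, the PS5's quoted 36 CUs at a 2.23 GHz cap, and a purely hypothetical 2% clock reduction:

```python
def peak_tflops(shader_lanes: int, clock_ghz: float) -> float:
    """Peak FP32 TFLOPS, assuming 2 FLOPs (one FMA) per lane per clock."""
    return shader_lanes * 2 * clock_ghz / 1000.0


RTX_2080_TI_LANES = 4352  # CUDA cores
PS5_LANES = 36 * 64       # 36 CUs x 64 lanes per CU

# 2080 Ti: the rated boost clock is a typical value that GPU Boost may exceed.
ti_base = peak_tflops(RTX_2080_TI_LANES, 1.350)
ti_boost = peak_tflops(RTX_2080_TI_LANES, 1.545)

# PS5: 2.23 GHz is a cap, so the quoted figure is the most it can deliver.
ps5_cap = peak_tflops(PS5_LANES, 2.23)
ps5_reduced = peak_tflops(PS5_LANES, 2.23 * 0.98)  # hypothetical 2% clock drop

if __name__ == "__main__":
    print(f"2080 Ti: {ti_base:.2f} TF at base, {ti_boost:.2f} TF at rated boost (can go higher)")
    print(f"PS5: {ps5_cap:.2f} TF at the cap, {ps5_reduced:.2f} TF if the clock drops 2%")
```

The 2080 Ti computes to about 13.45 TF at its rated boost and can run above that, while the PS5 figure is the most its cap allows, so any clock reduction moves it down (to about 10.07 TF in the hypothetical 2% case).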

When did this place become ‘rumours presented as fact’ central?

We have threads for this shyte

Same opinion. The upclocking of the GPU is obviously a reaction to the XSX's 12 teraflops: together with the variable frequency, Sony did everything to get close to that mark and not look weak in comparison. Even Cerny let slip that if you drop power consumption by 10%, you only lose about 1-2% of performance. Flip that around and adding 10% power only gains you 1-2% of performance, which is a horrible performance-per-watt ratio. There were also leaks that RDNA 2 did not scale very well with upclocking. In short, Sony designed a GPU, they are stuck with it, and they are now upclocking it to hell to look comparable in power to the XSX. I personally think they should drop the act, downclock to normal levels of about 2.0 GHz, and call it a day.
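A common first-order model for why the top of the clock range is so costly: dynamic power scales roughly as P ∝ C·V²·f, and if voltage has to rise about in proportion to frequency, P ∝ f³. A minimal sketch under that simplifying assumption (the exponent is a parameter because real voltage/frequency curves get steeper near the top, and performance does not track clock 1:1):

```python
def clock_ratio_for_power_ratio(power_ratio: float, exponent: float = 3.0) -> float:
    """Clock ratio implied by a power ratio, assuming power ~ clock ** exponent.

    exponent=3 corresponds to P ~ C * V**2 * f with V rising in proportion to f.
    """
    return power_ratio ** (1.0 / exponent)


if __name__ == "__main__":
    for exponent in (3.0, 5.0):
        for label, power_ratio in (("-10% power", 0.90), ("+10% power", 1.10)):
            ratio = clock_ratio_for_power_ratio(power_ratio, exponent)
            print(f"exponent {exponent:.0f}, {label}: clock {(ratio - 1) * 100:+.1f}%")
```

With the cubic model, a 10% power cut costs about 3.5% of clock and a 10% power increase buys about 3.2%. Raising the exponent to 5 shrinks the cut to about 2.1%, closer to the 1-2% quoted above; either way, under this model the last few percent of clock cost a disproportionate amount of power.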


I got the idea that the PS5 runs at boosted clocks by default and downclocks the CPU and GPU according to requirements.

I never understood how it works for the PS5, to be honest.

There is an abstract model of the GPU and CPU in which each point on the curve of power drawn from the socket (measured through a lot of sensors that are actually instruction counters) corresponds to a certain manageable frequency. This abstract model is then mapped onto the real silicon through adjustments. To explain: the real silicon's curve is stored, and the gaps are recognized but not exposed to the software. In the end, the silicon is effectively "abstracted", and we get predictable behaviour across all chips, or at least all chips of sufficient quality. So we have a variable frequency but consistent performance across all the chips. This is how I understood it.
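The behaviour described above, where power is estimated from activity counters through one fixed model instead of being measured on each chip, so that every console picks the same clock for the same workload, can be sketched as follows. The counter names, per-event energies, power budget, step size and the V ∝ f scaling are all hypothetical illustration values, not Sony's or AMD's actual model:

```python
# Hypothetical energy per counted event (nJ) for a fixed *reference* chip model.
# Real chips differ; the model deliberately ignores that, so every unit agrees.
ENERGY_PER_EVENT_NJ = {"alu": 0.02, "memory": 0.30, "texture": 0.08}

MAX_CLOCK_GHZ = 2.23    # cap, as quoted for PS5
POWER_BUDGET_W = 200.0  # hypothetical budget
STEP_GHZ = 0.01         # hypothetical clock step


def modeled_power_w(events_per_cycle: dict[str, float], clock_ghz: float) -> float:
    """Estimate power from activity counters using the reference model only.

    Energy per cycle is assumed to scale with V**2 and V with clock, hence the
    (clock / MAX_CLOCK_GHZ) ** 2 factor. nJ per cycle x GHz gives watts.
    """
    nj_per_cycle = sum(ENERGY_PER_EVENT_NJ[name] * count for name, count in events_per_cycle.items())
    return nj_per_cycle * (clock_ghz / MAX_CLOCK_GHZ) ** 2 * clock_ghz


def pick_clock_ghz(events_per_cycle: dict[str, float]) -> float:
    """Highest clock (stepping down from the cap) whose modeled power fits the budget.

    No temperature or per-chip measurement is used, so the result is the same
    on every console for the same workload.
    """
    clock = MAX_CLOCK_GHZ
    while clock > STEP_GHZ and modeled_power_w(events_per_cycle, clock) > POWER_BUDGET_W:
        clock -= STEP_GHZ
    return round(clock, 2)


if __name__ == "__main__":
    light = {"alu": 2000, "memory": 50, "texture": 200}
    heavy = {"alu": 2500, "memory": 100, "texture": 300}
    print("light workload:", pick_clock_ghz(light), "GHz")
    print("heavy workload:", pick_clock_ghz(heavy), "GHz")
```

With these made-up numbers the light workload stays at the 2.23 GHz cap and the heavy one settles at 2.12 GHz. The point of the design is that the inputs are counters and a shared model, so silicon quality and room temperature do not change the answer.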

Yes, but don't you see the different situation?

So we're suddenly spitting out FUD in technical discussions, using PowerPoint slides as sources for die size?

Keep in mind that 11 million is actually roughly what the PS4 managed in the same time frame.

As in: by the end of March of the respective year, they had sold 7.5 million units to retail.

I'm not sure if this comparison is strictly useful. With a fixed console platform looking for uniformity you'd want a fixed upper bound....

The practical effect on the end user experience is effectively negligible.

Neither Nvidia nor Intel uses an adaptive clocking system like the one AMD has used since around 2013, starting with Steamroller. It's a very common technique for PC gamers, especially if they use Ryzen CPUs.
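Assuming "adaptive clocking" here refers to stretching the clock when a supply-voltage droop is detected, rather than designing for the worst-case droop all the time, the idea can be sketched as a toy model. The clock values, voltage threshold and sample trace are hypothetical:

```python
NOMINAL_GHZ = 4.0          # hypothetical target clock
STRETCHED_GHZ = 3.6        # hypothetical slowed clock while a droop lasts
DROOP_THRESHOLD_V = 1.15   # hypothetical: below this, the nominal clock is unsafe


def adaptive_clocks(voltage_samples: list[float]) -> list[float]:
    """Clock per sample: stretch (slow) the clock only while voltage is below threshold."""
    return [STRETCHED_GHZ if v < DROOP_THRESHOLD_V else NOMINAL_GHZ for v in voltage_samples]


def worst_case_clock(voltage_samples: list[float]) -> float:
    """Without droop detection, the clock has to be safe at the worst dip all the time."""
    return STRETCHED_GHZ if min(voltage_samples) < DROOP_THRESHOLD_V else NOMINAL_GHZ


if __name__ == "__main__":
    trace = [1.20, 1.20, 1.12, 1.18, 1.20, 1.20]  # hypothetical supply samples (V)
    clocks = adaptive_clocks(trace)
    print("adaptive average:", round(sum(clocks) / len(clocks), 2), "GHz")
    print("worst-case design:", worst_case_clock(trace), "GHz")
```

In this toy trace the adaptive version slows down only for the one drooping sample, averaging about 3.93 GHz, while the worst-case design is pinned at 3.6 GHz. Real designs usually pay for droop margin with extra voltage rather than a lower clock; the sketch expresses the margin as clock only to keep it short.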

IIRC, the voltage of an ideal processor wouldn't fluctuate when activity occurs. In practice, though, it fluctuates when current flows, because of the supply's resistance and inductance: voltage drops when current is drawn and rises when the current stops. The drops and rises are bigger when these events happen faster and smaller when they happen slower (the inductor relation, V = L·di/dt).
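To first order, the droop described above is a resistive term plus an inductive term, ΔV ≈ I·R + L·di/dt. A sketch with hypothetical power-delivery values (1 mΩ, 50 pH) and a 50 A load step, comparing a fast and a slow ramp:

```python
RESISTANCE_OHM = 1e-3    # hypothetical power-delivery resistance (1 mOhm)
INDUCTANCE_H = 50e-12    # hypothetical power-delivery inductance (50 pH)


def droop_v(current_step_a: float, ramp_time_s: float) -> float:
    """First-order supply droop for a load step: I*R plus L*dI/dt."""
    resistive = current_step_a * RESISTANCE_OHM
    inductive = INDUCTANCE_H * current_step_a / ramp_time_s
    return resistive + inductive


def overshoot_v(current_release_a: float, ramp_time_s: float) -> float:
    """When the load drops, the L*dI/dt term reverses and the voltage rises."""
    return INDUCTANCE_H * current_release_a / ramp_time_s


if __name__ == "__main__":
    for ramp_ns in (10, 100):
        ramp_s = ramp_ns * 1e-9
        print(f"50 A step over {ramp_ns} ns: droop {droop_v(50, ramp_s):.3f} V, "
              f"overshoot on release {overshoot_v(50, ramp_s):.3f} V")
```

With these values the 10 ns ramp droops about 0.30 V and the 100 ns ramp about 0.075 V; the resistive part is the same 0.05 V in both cases, so the difference comes entirely from how fast the current changes.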
