It was more than that (albeit not 512 MB), because in the beginning the plan was to use 256 MB of RAM.

Are PS3 devs using the two mem pools for textures?

- Thread starter Love_In_Rio

8. The PS3 has 256MB of fast video RAM and 256MB of normal RAM. But I’ve heard that all memory can be used either by the Cell or the GPU anyway. Is this split an issue? Does it hinder or help? Would a unified memory architecture have been better? Do you have any clarifying comments about that?

Al Hastings, Chief Technology Officer: In practice, the split memory architecture hasn’t caused us many problems. The GPU can access main RAM at high speed and with very few restrictions. And while there are some restrictions when you want the CPU or SPUs to access video RAM at high speed, so far they’ve been easy enough to work with.

For the PS3, I think the split memory architecture was the right way to go. It allows the Cell and the GPU to both do heavy work on their local buses without contending with each other. It should really pay dividends a few years down the road in the PS3’s lifecycle, when everyone’s code has gotten more efficient and bus bandwidth emerges as one of the most important resources.

So the latency issue regarding RSX going through the Cell ring bus, which people were arguing about a while back, is a non-issue?

Latency is always an issue, an issue that GPU can address very well if designed to do so.

Does that mean it is or isn't? You probably can't say, huh?

nAo meant exactly what he said. There are lots of issues in a console, all of which matter. Still, the answer you're after is 'no', as the devs would work around it. And I suppose 'yes' when they don't!

Perhaps nAo's reference to the GPU being 'designed' to offset that relates to changes reportedly made in the cache system. I'm not sure what the hit rate was like with the original cache, but perhaps, for data accessed over FlexIO, they improved it further (via size or otherwise) so that more subsequent requests could be served from the cache rather than going back over FlexIO.

That aside, as for what programmers can do separately to mitigate differences in latency between different data accesses, I guess whether it matters at all, or how much it matters, depends on the workload.

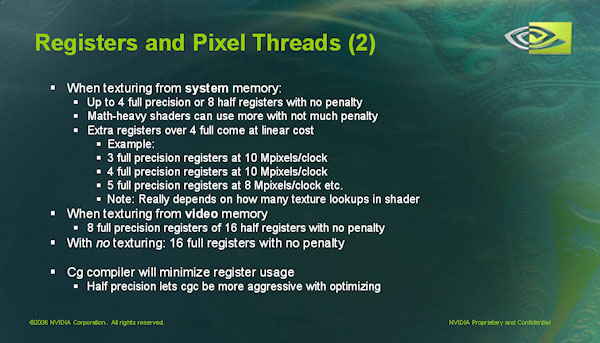

RSX suffers double the latency when texturing from system RAM compared with texturing from GDDR.

No NDA, simple.

Now, what programmers do with that fact is a different kettle of fish.

Jawed

Yep, it's very clear that you didn't sign any NDA.

Texture caches can only help you when the chip is reusing texels, but they don't address double (or ten times more) latency per se: every texture cache miss is going to stall or starve your chip if you did not design it to cover your average latency.
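A rough way to see why a texture cache alone does not cancel a doubled miss latency is the standard average-memory-access-time formula: every fetch pays the hit cost, and the fraction that misses also pays the full memory latency. The sketch below is only an illustration; the cycle counts and the 90% hit rate are hypothetical placeholders, not measured RSX figures, and the 2x "remote" miss cost simply mirrors the "double the latency" claim above.

```python
def average_fetch_latency(hit_cycles: float, miss_cycles: float, hit_rate: float) -> float:
    """Average memory access time: hit cost plus miss rate times miss latency."""
    if not 0.0 <= hit_rate <= 1.0:
        raise ValueError("hit_rate must be between 0 and 1")
    return hit_cycles + (1.0 - hit_rate) * miss_cycles


def hit_rate_for_target(hit_cycles: float, miss_cycles: float, target_cycles: float) -> float:
    """Hit rate needed for the average latency to drop to target_cycles (clamped to [0, 1])."""
    miss_rate = (target_cycles - hit_cycles) / miss_cycles
    return max(0.0, min(1.0, 1.0 - miss_rate))


# Hypothetical figures, NOT real RSX numbers:
HIT = 10            # cycles for a texture cache hit
LOCAL_MISS = 300    # cycles for a miss served from local video memory
REMOTE_MISS = 600   # assumed "doubled" latency for a miss served from system memory

for name, miss in (("local", LOCAL_MISS), ("remote", REMOTE_MISS)):
    avg = average_fetch_latency(HIT, miss, hit_rate=0.90)
    print(f"{name}: {avg:.1f} cycles on average at a 90% hit rate")
# local: 40.0 cycles on average at a 90% hit rate
# remote: 70.0 cycles on average at a 90% hit rate

# Hit rate the remote pool would need to match the local average:
print(f"{hit_rate_for_target(HIT, REMOTE_MISS, 40.0):.2f}")  # 0.95
```

Under these assumptions the cache has to halve the miss rate just to break even, and it can only do that if texels are actually reused. An average like this only tells you how much latency there is to cover; whether the chip stalls depends on whether it keeps enough work in flight.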

Does that figure also include grabbing geometry?

How might one deal with the extra latency in a game situation? Perhaps streaming objects not in a player's field of view?

GPUs have been able to fetch vertices from system memory for ages; nothing really new here.

You shouldn't see it as "extra latency"; it's just latency. It's not like GPUs started handling latencies of hundreds of cycles yesterday.

No need to be rude, bro. Are the specific NDAs still in place, and if so, is there anything you can tell us about them?

I get the feeling that there should have been a smilie at the end of that. I certainly didn't see it as rude.

*nudge* I didn't say it but I'm just going to point out the person who said something *nudge* but I'm not going to confirm anything *wink*.

Leave it to AlStrong to ask the really newbie question.

I suppose I'm having a hard time seeing the implications of the higher latency of accessing the XDR versus the GDDR3. It is a developer thing, but I'm curious to see a specific example (one out of the eleventy billion that might be used).

Unlike press NDAs, which are used to control the timing of information release, developer NDAs never expire.

If the GPU can't hide the latency, it will stall fairly continuously, resulting in slower rendering.

I was not rude; I was simply stating the obvious, given that he was making a blunt statement about stuff he can't possibly know.

As I said, you should look at the implications of latency, not of higher latency. GPUs hide latency all the time; it does not seem a miracle to me that a GPU can hide it.

If you are a good GPU architect, you will design a GPU that copes with your whole system's latency. If you don't... well... they should fire you!
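The idea of a GPU "designed to cope with the whole system latency" can be sketched with Little's law: to sustain a given fetch rate, the number of outstanding fetches must equal rate times latency. The model below is a deliberate simplification (it ignores bandwidth limits and cache hits), and the fetch rate and in-flight capacity are hypothetical numbers chosen for illustration, not a description of RSX or any real GPU.

```python
def fetches_in_flight_needed(fetches_per_cycle: float, latency_cycles: float) -> float:
    """Little's law: outstanding requests = throughput * latency."""
    return fetches_per_cycle * latency_cycles


def sustained_utilization(max_in_flight: float, fetches_per_cycle: float,
                          latency_cycles: float) -> float:
    """Fraction of the peak fetch rate a chip can sustain if it can only track
    max_in_flight outstanding fetches (e.g. pixels waiting on texels)."""
    needed = fetches_in_flight_needed(fetches_per_cycle, latency_cycles)
    return min(1.0, max_in_flight / needed)


# Hypothetical design: 4 texture fetches per cycle and enough on-chip state
# to keep 1200 fetches outstanding. None of these numbers describe a real GPU.
RATE = 4
CAPACITY = 1200

for latency in (300, 600):
    need = fetches_in_flight_needed(RATE, latency)
    util = sustained_utilization(CAPACITY, RATE, latency)
    print(f"latency {latency}: need {need:.0f} in flight, utilization {util:.0%}")
# latency 300: need 1200 in flight, utilization 100%
# latency 600: need 2400 in flight, utilization 50%
```

In this toy model, a chip sized for the shorter latency runs at half speed when every fetch takes twice as long, while one sized for the longer latency loses nothing. That is the sense in which latency is a design parameter rather than a fixed penalty.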
