davis.anthony


> So, different clocks (as advertised) means it's broken?

I'm confused by this comment. Can you clarify, please, as I assume it's directed at me?


> Do you know how many clock domains there actually are?

At least five in any given GPU, probably.

> So, different clocks (as advertised) means it's broken?

The speculation is that they separated these clock domains in response to a bug that is preventing it from reaching clock-speed targets, and then marketed the separate clocks as a performance-enhancing feature (which is technically true). It is a baseless but reasonable theory.


> So is it balancing power between chiplets? The shader core chiplets are different from the chiplet with the memory interfaces? Does the cache also have its own clock? I haven't looked at a diagram for RDNA3.

The shader core isn't on chiplets. Everything else is in one GCD, except for the Infinity Cache and memory controllers, which live on 6 chiplets, each with 16MB of cache and a 64-bit memory controller (4x16-bit).

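For reference, the per-chiplet figures above multiply out as follows. This is a quick arithmetic sketch that assumes six identical chiplets exactly as described; the constant names are made up for illustration.

```python
# Totals for the chiplet layout described above (assumes 6 identical chiplets,
# each with 16MB of Infinity Cache and a 64-bit controller built from 4x16-bit channels).
CHIPLETS = 6
CACHE_MB_PER_CHIPLET = 16
CHANNELS_PER_CHIPLET = 4
CHANNEL_WIDTH_BITS = 16

bus_bits_per_chiplet = CHANNELS_PER_CHIPLET * CHANNEL_WIDTH_BITS  # 64-bit per chiplet
total_cache_mb = CHIPLETS * CACHE_MB_PER_CHIPLET                  # whole-GPU cache
total_bus_bits = CHIPLETS * bus_bits_per_chiplet                  # whole-GPU bus width
total_channels = CHIPLETS * CHANNELS_PER_CHIPLET                  # number of 16-bit channels

print(f"Infinity Cache: {total_cache_mb}MB")
print(f"Memory bus: {total_bus_bits}-bit ({total_channels} x {CHANNEL_WIDTH_BITS}-bit)")
```

So the six chiplets together carry 96MB of Infinity Cache and a 384-bit memory bus.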

> Do you know how many clock domains there actually are?

No, IANEE, but let me guess:

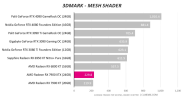

6900XT OC vs 7900XTX OC

> 460W lol

Add another 30W to 50W on top of this, as software doesn't read AMD's TBP in its entirety, unlike NVIDIA's.

> they separated these clock domains in response to a bug

You know that has to be built into the hardware, right?

> I mean, maybe they intended it to be a 1:1 ratio across the clock boundary, or maybe it was supposed to be closer to 2:1 (1:2?), but I don't imagine they built that capability into it just for shits & giggles.

AMD said exactly this: decoupled clocks, with the front-end running at 2.5GHz and the shaders at 2.3GHz. That gets a 15% frequency improvement and 25% power savings.

> The fact it can achieve very high clocks on irregular workloads (Blender?), where the CUs might be stalling a lot (i.e. not consuming as much power), could suggest that it is using more power than expected.

In Blender, it could also be downclocking memory to achieve sustained high core clocks.

> AMD said exactly this: decoupled clocks, with the front-end running at 2.5GHz and the shaders at 2.3GHz. That gets a 15% frequency improvement and 25% power savings.

So a mere 200MHz difference can save 25% of power? That's a HUGE difference. I think the key to understanding the power characteristics of RDNA3 is understanding how this difference actually materialized. It wasn't necessary in RDNA1/RDNA2, or even in the power-hungry Vega, so why now?
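A rough way to see why a small clock drop can matter so much: CMOS dynamic power goes roughly as P = C·V²·f, and a lower clock usually allows a lower voltage. The sketch below assumes, purely for illustration, that voltage scales linearly with frequency (so P scales with f³). Real voltage/frequency curves are not linear, and this only covers the shader clock domain, not the whole board. The function name and flag are hypothetical.

```python
# Back-of-the-envelope CMOS dynamic power estimate: P = C * V^2 * f.
# ASSUMPTION: voltage scales linearly with frequency over this small range,
# which makes P roughly proportional to f^3. Illustrative only.

def relative_dynamic_power(f_new_ghz: float, f_ref_ghz: float,
                           voltage_tracks_frequency: bool = True) -> float:
    """Return P_new / P_ref for dynamic switching power (C held constant)."""
    ratio = f_new_ghz / f_ref_ghz
    if voltage_tracks_frequency:
        # V scales with f, so V^2 * f scales with f^3.
        return ratio ** 3
    # Fixed voltage: power scales only linearly with f.
    return ratio


FRONT_END_GHZ = 2.5  # front-end clock quoted in the thread
SHADER_GHZ = 2.3     # shader clock quoted in the thread

cubic = relative_dynamic_power(SHADER_GHZ, FRONT_END_GHZ)
linear = relative_dynamic_power(SHADER_GHZ, FRONT_END_GHZ,
                                voltage_tracks_frequency=False)

print(f"clock reduction:      {1 - SHADER_GHZ / FRONT_END_GHZ:.0%}")
print(f"saving if V tracks f: {1 - cubic:.1%}")
print(f"saving at fixed V:    {1 - linear:.1%}")
```

Under the cubic assumption, the 8% clock reduction gives roughly a 22% dynamic-power saving, in the same ballpark as the quoted 25%; at fixed voltage it would only be about 8%. That doesn't confirm AMD's figure, it just shows the order of magnitude is plausible if voltage drops along with the clock.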

> AMD said exactly this: decoupled clocks, with the front-end running at 2.5GHz and the shaders at 2.3GHz. That gets a 15% frequency improvement and 25% power savings.

This statement is not logical. It makes sense when the front-end is not delivering enough work to the shaders; of course you then see big efficiency gains when the shaders are downclocked. But we do not know how well the shaders scale with power.
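The point about the front-end starving the shaders can be illustrated with a toy bottleneck model: delivered throughput is the minimum of what the front-end issues and what the shaders can process. Everything here is hypothetical: the per-cycle work rates are invented, the functions are not anything AMD has published, and shader power uses a rough f³ assumption (voltage tracking frequency).

```python
# Toy bottleneck model (hypothetical, not AMD's design): the GPU delivers work
# at the rate of its slower stage, front-end or shader array.
# ASSUMPTIONS: per-cycle work rates are invented for illustration, and shader
# power is taken as ~ f^3 (voltage tracking frequency), in arbitrary units.

def throughput(frontend_ghz, shader_ghz, fe_work_per_cycle, sh_work_per_cycle):
    """Work per ns, capped by whichever stage is the bottleneck."""
    return min(frontend_ghz * fe_work_per_cycle, shader_ghz * sh_work_per_cycle)


def shader_power(shader_ghz):
    """Relative shader power under the f^3 assumption."""
    return shader_ghz ** 3


def compare(fe_rate, sh_rate, frontend_ghz=2.5, fast=2.5, slow=2.3):
    """Return (throughput change, perf/W change) when shaders drop fast -> slow."""
    t_fast = throughput(frontend_ghz, fast, fe_rate, sh_rate)
    t_slow = throughput(frontend_ghz, slow, fe_rate, sh_rate)
    ppw_fast = t_fast / shader_power(fast)
    ppw_slow = t_slow / shader_power(slow)
    return t_slow / t_fast - 1, ppw_slow / ppw_fast - 1


# Front-end-starved case: shaders could do 1.2 units/cycle but only get 1.0.
fe_limited = compare(fe_rate=1.0, sh_rate=1.2)
# Shader-bound case: front-end could feed 1.2 units/cycle, shaders do 1.0.
sh_limited = compare(fe_rate=1.2, sh_rate=1.0)

print(f"front-end-limited: throughput {fe_limited[0]:+.1%}, perf/W {fe_limited[1]:+.1%}")
print(f"shader-limited:    throughput {sh_limited[0]:+.1%}, perf/W {sh_limited[1]:+.1%}")
```

In the front-end-limited case, dropping the shader clock from 2.5GHz to 2.3GHz costs no throughput, so the whole power saving shows up as efficiency; in the shader-bound case, throughput falls by the full 8%. Which case dominates in real workloads is exactly what we don't know.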

> 460W lol

Poor AMD. With Zen 4, they lost the ability to make fun of Intel for making hot chips, and with RDNA3, they lost the efficiency bragging rights.

Intel remains the best for gaming CPUs, especially with Alder Lake and beyond.

The architecture is not efficient; it has been exposed.

> Add another 30W to 50W on top of this, as software doesn't read AMD's TBP in its entirety, unlike NVIDIA's.

See here:

Power consumption for GPUs (forum.beyond3d.com)

Igorlab posted some major information on power consumption values for AMD and NVIDIA GPUs. Evidently they differ quite substantially in how they do their measurements. Igor used special equipment (shunt measurements/oscilloscope) to measure power consumption from the cards externally to...

> That's why AMD kept the TDP low; it's too embarrassing.

Another AMD thread derailed and bombed by the usual suspects.