Then why did you make a mention of OpenCL ?

It’s an example of crowdsourced tech.

I don't think that's correct. The OpenCL specs are open, but most driver implementations are proprietary and closed-source; ROCm is the exact opposite of that.

The OpenCL 1.x C-language spec was originally developed by Apple and then submitted to the Khronos Group, so it was not 'crowdsourced'.

The C++ language in OpenCL 2.x and 3.x was indeed developed by the Khronos consortium, but it didn't take off, and most OEMs stuck with OpenCL 1.x and C. (NVidia obviously wanted to promote its own C++ dialect from CUDA, and other vendors probably didn't have the resources to rework their proprietary OpenCL C compilers into full-blown C++ compilers.)

That's why AMD decided to start ROCm (Radeon Open Compute), which is essentially an open-source implementation of the AMD Common Language Runtime that hosts both the OpenCL 2.x C-language dialect and the HIP C++ dialect, which is a subset of the CUDA API and related C++ language extensions. There were also additional C++-based languages/compilers/runtimes like HCC and C++ AMP, which have since been dropped.

ROCm does use open-source implementations of the graphics driver and runtime (on Linux) and a fork of the open-source Clang compiler (maintained as a branch within the main repository), and most roc* / hip* libraries are ports of established open-source frameworks to HIP C++ that mirror the cu* versions shipped with CUDA.

So most (but not all) of the critical code is indeed open-source. In reality, however, ROCm is primarily maintained by full-time AMD employees and is hosted in private repositories, so it's still far from being 'crowdsourced' (though AMD plans to open-source more parts). But at least it does make finding and fixing trivial compiler / library bugs a lot easier compared to purely proprietary code.

Also, ROCm isn't really a 'specification', just a set of APIs, C++ language extensions and libraries that roughly mirror those supported by CUDA (although it's still not 100% equivalent), so it's not 'crowdsourced' either (and its maintainers routinely make breaking changes in each minor release).

[Edit] ROCm is also 'open' and not proprietary because the HIP runtime can run on CUDA hardware, so if you convert your code to HIP, you can still run it on both AMD and NVidia hardware.
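To show how closely HIP names follow CUDA names, here is a toy Python sketch of the kind of source-to-source renaming that a CUDA-to-HIP conversion involves. It is not the real hipify tooling: the mapping table is a small hand-picked subset, the `hipify` function name is just chosen for illustration, and the `saxpy` kernel and the `d_x` / `h_x` variables in the sample are hypothetical.

```python
import re

# Small hand-picked subset of CUDA runtime names and their HIP counterparts.
# The real conversion tools cover far more of the API; this is illustrative only.
CUDA_TO_HIP = {
    "cuda_runtime.h": "hip/hip_runtime.h",
    "cudaMalloc": "hipMalloc",
    "cudaMemcpy": "hipMemcpy",
    "cudaMemcpyHostToDevice": "hipMemcpyHostToDevice",
    "cudaMemcpyDeviceToHost": "hipMemcpyDeviceToHost",
    "cudaDeviceSynchronize": "hipDeviceSynchronize",
    "cudaFree": "hipFree",
}

# Word boundaries ensure only whole identifiers are renamed, so a name that is
# not in the table (e.g. cudaMemcpyAsync) is left untouched.
_PATTERN = re.compile(
    r"\b(?:" + "|".join(re.escape(name) for name in CUDA_TO_HIP) + r")\b"
)


def hipify(source: str) -> str:
    """Rename the known CUDA identifiers in `source` to their HIP equivalents."""
    return _PATTERN.sub(lambda m: CUDA_TO_HIP[m.group(0)], source)


# Hypothetical CUDA host code; the kernel launch syntax stays as it is.
cuda_snippet = """#include <cuda_runtime.h>
cudaMalloc(&d_x, n * sizeof(float));
cudaMemcpy(d_x, h_x, n * sizeof(float), cudaMemcpyHostToDevice);
saxpy<<<blocks, threads>>>(n, 2.0f, d_x, d_y);
cudaDeviceSynchronize();
cudaFree(d_x);
"""

print(hipify(cuda_snippet))
```

The point is only that, for the common runtime calls, the conversion is mostly a mechanical prefix change; anything outside the supported subset still has to be ported by hand.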

So I don't really see many parallels with OpenCL and its C-language dialect, except that the ROCm runtime and drivers were forked from the OpenCL runtime and driver (on Linux) and implemented on top of a common foundation.

trini only attributed the crowdsourcing problem to OpenCL; ROCm wasn't even mentioned in any context.

But the HPCWire article above never mentions OpenCL either; it only talks about ROCm. However, much of that talk seems like a misunderstanding on their part, because AMD's "Unified AI Software Stack" effort, started in 2022, has never been about unifying all of their proprietary APIs under the ROCm umbrella.

The plan was to continue supporting major AI frameworks through existing APIs/runtimes/compilers developed by the Radeon and Xilinx teams, like ROCm / HIP and Vitis / AIE, but merge common parts of the stack into a unified middle layer based on the ONNX Runtime and the MLIR intermediate representation (which was specifically designed for graph-based workflows typical in ML frameworks).

This would enable real-time cross-compilation for specific accelerator hardware that's present on the end system (and may even potentially allow the stack to run on non-AMD hardware with a SPIR-V / Vulkan target).
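As a rough illustration of "target whatever accelerator is present on the end system", here is a minimal Python sketch in the style of ONNX Runtime execution providers. It is not AMD's unified stack: the `pick_providers` helper and the preference order are assumptions made up for this example, and only the provider name strings are ones ONNX Runtime itself uses.

```python
from typing import Iterable, List

# Assumed preference order for illustration only: AMD / Xilinx back ends first,
# then other accelerators, with the CPU as the last resort.
PREFERRED: List[str] = [
    "VitisAIExecutionProvider",
    "MIGraphXExecutionProvider",
    "ROCMExecutionProvider",
    "CUDAExecutionProvider",
    "CPUExecutionProvider",
]


def pick_providers(available: Iterable[str],
                   preferred: List[str] = PREFERRED) -> List[str]:
    """Return the preferred providers that are present, in priority order."""
    present = set(available)
    return [name for name in preferred if name in present]


# Hypothetical machine exposing an AMD GPU back end plus the CPU.
print(pick_providers(["CPUExecutionProvider", "ROCMExecutionProvider"]))

# With onnxruntime installed, the list could come from the runtime instead
# ("model.onnx" is a placeholder path):
#   import onnxruntime as ort
#   session = ort.InferenceSession(
#       "model.onnx", providers=pick_providers(ort.get_available_providers()))
```

The same model file is then handed to whichever back end the machine actually has, which is the part of the plan that a shared middle layer is meant to make possible.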

www.phoronix.com

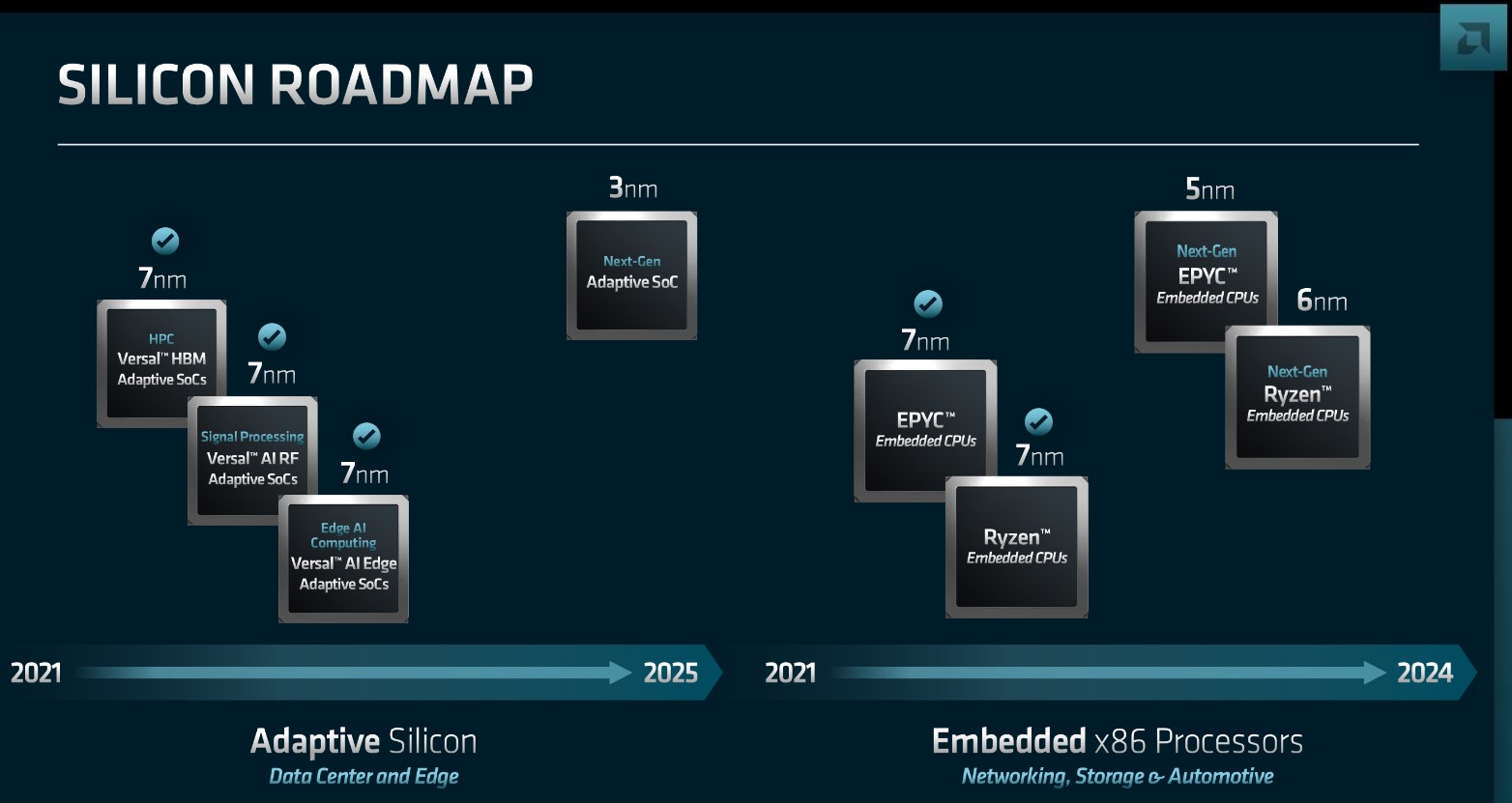

At AMD FAD 2022, the company discussed its embedded and AI strategy including AI Engine accelerator proliferation and unifying software

www.servethehome.com

www.hardwareluxx.de