I'm sorry but it's a bit confusing, most likely because you're not using the industry standard names like blendshapes or bones/skinning or mocap markers. Perhaps try to read back in this thread? I think I've made a few long posts trying to explain how the various techniques work.

Accurate human rendering in game [2014-2016]

- Thread starter babcat

- Start date

- Status

- Not open for further replies.

Anyway, I'm going to try a short summary...

Basically the human face can have skin deformations that range in scale from a few centimeters down to the sub-millimeter level at certain facial wrinkles. Creating a completely realistic CG representation would require some sort of control over details as small as this size.

So one aspect is the capture of the human performance (assuming there is one to drive the CG character). Traditional optical motion capture operates with reflective markers recorded by infrared cameras; the smallest practical marker size is about 4mm and only a few dozen can be realistically tracked. This does not give enough resolution for even the larger facial wrinkles, so a direct remapping of this marker motion to skin deformation will be lacking.

The other approach would be to abandon a direct remapping of skin movement to the CG face and aim for capturing the elemental facial expressions instead.

So with a direct remapping you'd try to find out how many millimeters the various points on the face have been moved; with this indirect capture you'd want to get a result like "the actor has raised his left eyebrow by 40%". You'd then use that intensity on the CG facial rig and make it execute an eyebrow raise expression at 40% intensity.
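To make the "raised his left eyebrow by 40%" idea a bit more concrete, here's a minimal sketch of the indirect approach. It assumes a single tracked point per expression and a simple linear calibration between a neutral and an extreme pose; the function name, the pixel values and the `brow_raise_left` control are all made up for illustration, and a real solver would be far more involved.

```python
def expression_intensity(tracked, neutral, extreme):
    """Map a tracked landmark coordinate to a 0..1 expression intensity.

    neutral: coordinate on the relaxed face; extreme: coordinate at the
    fully performed expression (both from a hypothetical calibration pass).
    """
    span = extreme - neutral
    if span == 0:
        raise ValueError("neutral and extreme poses must differ")
    t = (tracked - neutral) / span
    return max(0.0, min(1.0, t))  # clamp so tracking noise can't overshoot


# Hypothetical calibration for the left eyebrow, in face-camera pixels
# (image y grows downward, so a raised brow has a smaller y).
NEUTRAL_Y = 120.0
EXTREME_Y = 100.0

intensity = expression_intensity(112.0, NEUTRAL_Y, EXTREME_Y)

# The rig never sees pixels or millimeters, only named expression weights.
rig_controls = {"brow_raise_left": intensity}
print(rig_controls)
```

With these numbers the brow has travelled 8 of the 20 calibrated pixels, so the rig gets an intensity of about 0.4. The point is that only this normalized value crosses over to the CG character, which is why the actor and the character don't need to share proportions.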

The upside is that you can keep sampling facial movement at just a few distinct points on the face, instead of trying to track every millimeter. The downside is that you need to figure out what elemental expressions the actor was making at any point; this means a lot of software development and/or some very talented people with a good eye to see what's happening with the face.

This method can also use 3D data, but since the markers are very small and far away from the motion capture cameras, that data is usually not precise enough. So the preferred approach is to put a helmet on the actor that has a face camera fixed to it, and use the 2D video feed from that camera to analyze the facial movement. Some studios use 2 or even 4 cameras to get more precise results.

Another advantage of this method is that since there's no direct remapping, you can work with an actor who looks different from your CG character. So this is the more appropriate approach for fantasy creatures, aliens, apes, aged or de-aged people and so on; not only can you get finer deformations on the CG face, but you can also translate a performance to practically anything, allowing the use of, for example, a talented actor for the performance and a good-looking but not so good actor for the facial likeness at the same time. Actors that look good and act well are usually big Hollywood stars, and there are no aliens or apes to act.

The other aspect is how you make the CG face move.

Skinning with bones basically means that you have a limited number of points anchored to the face model that you can move; these are your bones, typically a few hundred per face on current consoles. You'll probably have a lot more vertices in the face model, so you need to drive a couple of dozen vertices with each bone; but in practice you want smooth movement, so the bones each have a smooth falloff and move some vertices with 100% strength, others at 66%, 50% and so on. This also means bone influences will overlap. So the level of control that you can have will be a few millimeters at best, but usually closer to the centimeter range. It can also be very hard to control the motion of individual vertices as they're moved by multiple bones; and some of the skin deformations on human faces are so complex that it's very hard to reproduce the surface with just a coarse level of control. This is particularly true for the lips, and to some extent for the eyelids.
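Here's a rough sketch of what the overlapping, weighted bone influences look like in code. It's plain linear blend skinning, simplified so that bones only translate (real rigs use full transform matrices per bone); the bone names, weights and millimeter values are hypothetical.

```python
def skin_vertex(rest_pos, influences, bone_offsets):
    """Blend the positions each bone would give this vertex, by weight.

    rest_pos: (x, y, z) of the vertex on the neutral face, in mm.
    influences: {bone_name: weight}, weights expected to sum to 1.
    bone_offsets: {bone_name: (dx, dy, dz)} translation of each bone, in mm.
    """
    total = sum(influences.values())
    if abs(total - 1.0) > 1e-6:
        raise ValueError("skin weights must sum to 1")
    out = [0.0, 0.0, 0.0]
    for bone, weight in influences.items():
        offset = bone_offsets[bone]
        for axis in range(3):
            out[axis] += weight * (rest_pos[axis] + offset[axis])
    return tuple(out)


# Hypothetical brow vertex shared by two bones with overlapping falloff.
influences = {"brow_inner": 0.66, "brow_outer": 0.34}
# Only the inner brow bone moves: 5 mm straight up.
bone_offsets = {"brow_inner": (0.0, 5.0, 0.0), "brow_outer": (0.0, 0.0, 0.0)}

moved = skin_vertex((10.0, 40.0, 0.0), influences, bone_offsets)
print(moved)
```

The vertex only follows 66% of the inner bone's 5 mm move, ending up roughly 3.3 mm higher. You can't place this one vertex without also affecting every other vertex that shares those bones, which is the coarse-control problem described above.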

This is not enough to have control over how wrinkles (like the crow's feet around the eyes) and larger skin folds (like the smile line) are deforming, so most game developers prefer to keep vertex density at a few millimeters and add the sub-mm deformations using texture maps. This is usually applied to the normal maps where the game engine can blend between the neutral, smooth face and the wrinkled face, separately in each major area, depending on the elemental facial expressions that are active. These blended normal maps are usually referred to as "wrinkle maps" and almost every advanced game since Uncharted 1 and Mass Effect 1 has been using them.
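As a sketch of how wrinkle map blending might work for a single texel: each face region gets a blend weight from the expressions that drive it, and the normal is interpolated between the smooth and the wrinkled map and then renormalized. In an actual engine this runs in a pixel shader with per-region masks; the region names, driver lists and the "strongest driver wins" rule here are my own assumptions, not any particular engine's implementation.

```python
import math


def region_weight(expression_weights, drivers):
    """Strength of a region's wrinkle map: its strongest active driver."""
    strongest = max(
        (expression_weights.get(name, 0.0) for name in drivers), default=0.0
    )
    return max(0.0, min(1.0, strongest))


def blend_normal(neutral, wrinkled, weight):
    """Lerp two unit normals for one texel, then renormalize."""
    mixed = [n + (w - n) * weight for n, w in zip(neutral, wrinkled)]
    length = math.sqrt(sum(c * c for c in mixed))
    if length == 0.0:
        return tuple(neutral)  # degenerate case: fall back to the smooth normal
    return tuple(c / length for c in mixed)


# Hypothetical mapping from face regions to the expressions that wrinkle them.
REGION_DRIVERS = {
    "forehead": ["brow_raise_left", "brow_raise_right"],
    "cheeks": ["smile"],
}

expression_weights = {"brow_raise_left": 0.4, "smile": 0.9}

forehead = region_weight(expression_weights, REGION_DRIVERS["forehead"])
texel = blend_normal((0.0, 0.0, 1.0), (0.6, 0.0, 0.8), forehead)
print(forehead, texel)
```

Note that the same elemental expression weights that animate the rig are reused to pick how much of each wrinkle map shows, so the fine detail stays in sync with the coarse deformation.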

The other approach to deforming the facial model is to use blendshapes - this basically means that you store multiple versions of the face model and you can control where any of the vertices should be moved to. This obviously gives the finest level of control over the model itself, and so it's the preferred method for movie VFX and realistic CG animation. You can also create a face model with varied vertex density and add extra detail where sub-mm deformations are required, so you don't have to rely on texture maps operating on top of the model itself.
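A minimal sketch of blendshape evaluation, assuming the shapes are stored as per-vertex offsets (deltas) from the neutral face rather than as full copies of the mesh, which is a common but not universal choice. The two-vertex "mesh" and the shape names are obviously made up.

```python
def apply_blendshapes(base, shape_deltas, weights):
    """Return base vertices plus the weighted sum of each shape's deltas.

    base: list of (x, y, z) neutral positions.
    shape_deltas: {shape_name: list of (dx, dy, dz), one per vertex}.
    weights: {shape_name: intensity}, usually in the 0..1 range.
    """
    result = [list(v) for v in base]
    for name, weight in weights.items():
        for vertex, delta in zip(result, shape_deltas[name]):
            for axis in range(3):
                vertex[axis] += weight * delta[axis]
    return [tuple(v) for v in result]


# Hypothetical two-vertex face; every vertex gets its own hand-sculpted delta.
base = [(0.0, 0.0, 0.0), (10.0, 0.0, 0.0)]
shape_deltas = {
    "brow_raise_left": [(0.0, 5.0, 0.0), (0.0, 1.0, 0.0)],
    "smile": [(0.0, 0.0, 0.0), (2.0, 0.5, 0.0)],
}

posed = apply_blendshapes(base, shape_deltas, {"brow_raise_left": 0.4, "smile": 1.0})
print(posed)
```

Unlike skinning, every vertex in every shape has its own target, so the artist decides exactly where each one ends up. That's where the fine level of control comes from, and also the memory cost.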

However, GPUs aren't good at blendshapes: they require a lot of extra memory (storing a 3D vector for each vertex in each blendshape) and the math isn't performed as fast as skinning either. Bones, on the other hand, are very, very fast, almost free, as the GPU can easily store all the data in local memory and just use its ALUs to do the math.
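Some back-of-the-envelope arithmetic on the memory difference. The 300 bones are in line with the "few hundred per face" figure; the vertex count, the number of blendshapes, and the uncompressed 32-bit float storage are assumptions picked purely for illustration.

```python
BYTES_PER_FLOAT = 4


def blendshape_bytes(vertex_count, shape_count):
    """One 3D vector (3 floats) for each vertex in each blendshape."""
    return vertex_count * shape_count * 3 * BYTES_PER_FLOAT


def bone_bytes(bone_count):
    """One 3x4 transform matrix (12 floats) per bone."""
    return bone_count * 12 * BYTES_PER_FLOAT


# Hypothetical face asset; none of these figures come from a real game.
VERTEX_COUNT = 20_000
SHAPE_COUNT = 100
BONE_COUNT = 300

shapes_total = blendshape_bytes(VERTEX_COUNT, SHAPE_COUNT)
bones_total = bone_bytes(BONE_COUNT)
print(f"blendshapes: {shapes_total / 1e6:.1f} MB, bones: {bones_total / 1e3:.1f} KB")
```

Under these assumptions the blendshape data comes to 24 MB against about 14 KB of bone matrices. Skinning also needs per-vertex bone indices and weights, but those are stored once per mesh rather than once per shape, so they don't change the picture much.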

So, most game devs use bones and wrinkle maps. The more advanced technologies are also using elemental facial expressions to drive the face, instead of trying to remap 3D motion capture marker movement data - as far as I'm aware, only Quantic Dream is still working with direct remapping, based on their Sorcerer demo.

Hopefully this will help you to describe your idea a bit better.

http://youtu.be/ot0MpgIaMC4

From 8min45: the expression of the guy agonising.

With facial expressions getting more and more realistic, it may make us feel uncomfortable to "kill" people in games.

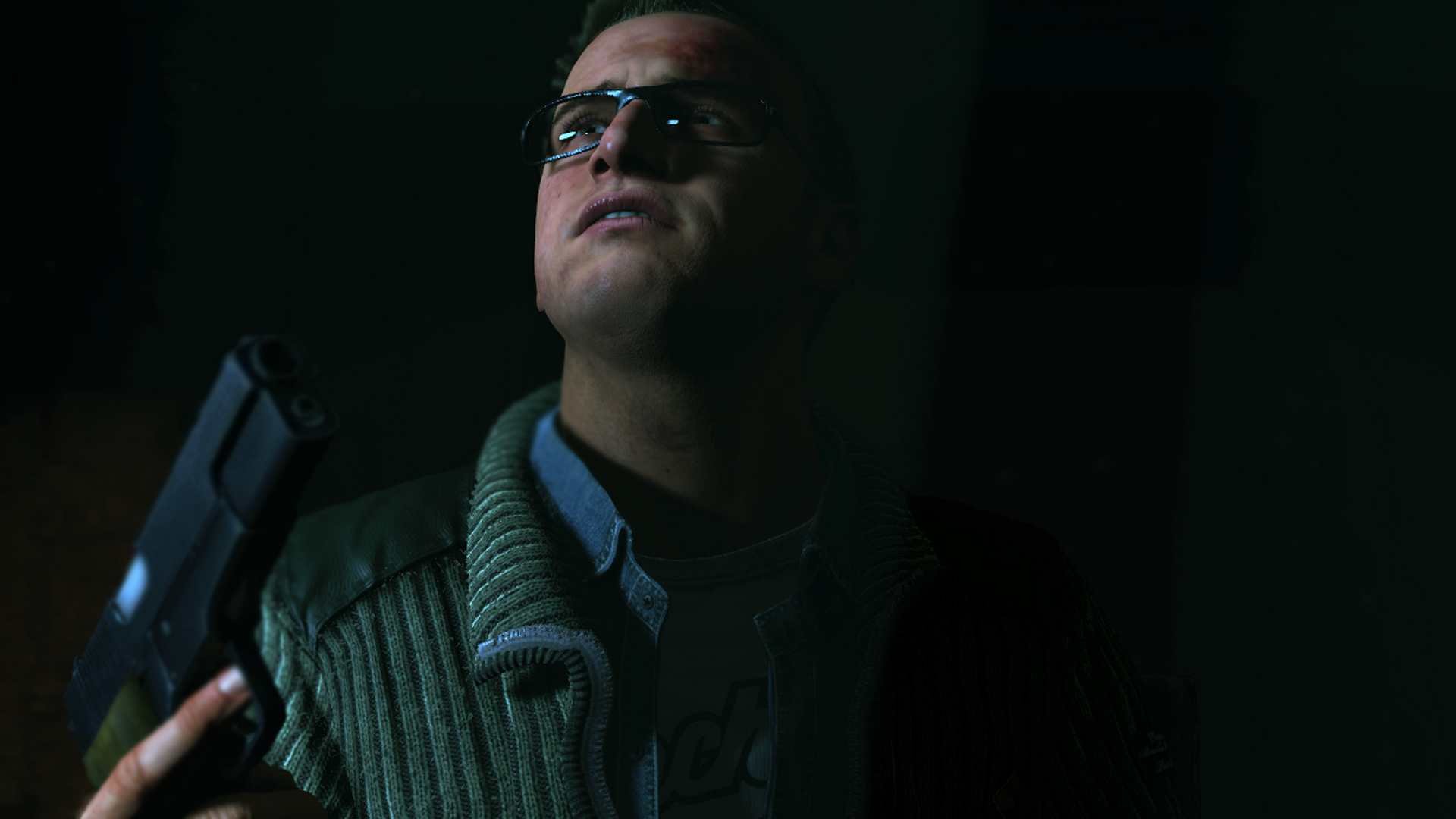

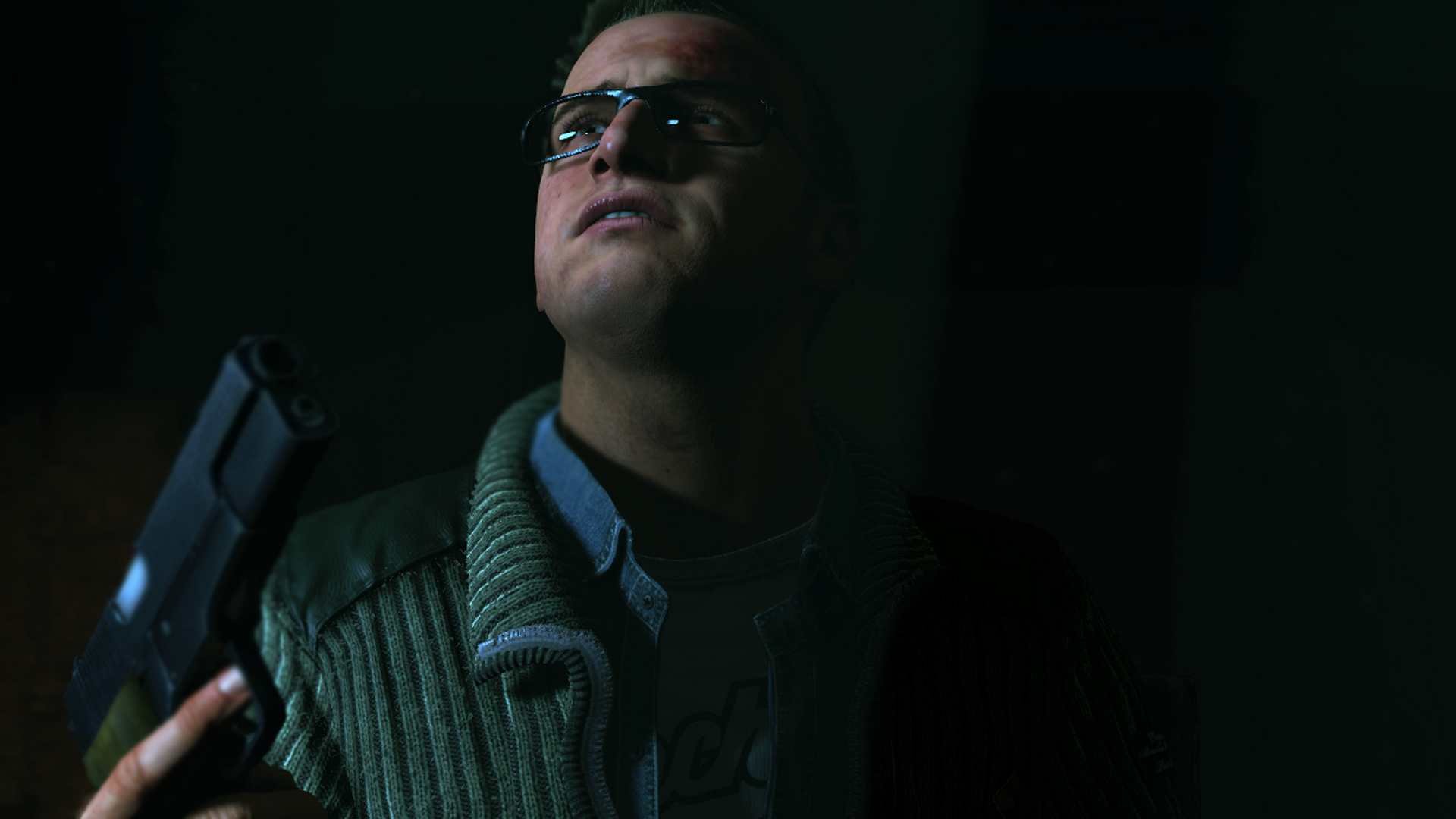

Until Dawn

and Hayden Panettiere:

http://www.supermassivegames.com/images/games/UntilDawnPS4/website_video5.webmhd.webm

steveOrino

Regular

Pretty convincing, especially the lips

I wouldn't call such a smooth surfaced, fully symmetrical, stylized-faced creature a detailed or accurate human. Only thing looking truly good is the skin shading, and there's a closer than usual approximation of anatomy in the shapes.

I don't mean to say that it looks bad or anything, it's just pretty damn far from a human, but that has never been their goal anyway.

A real living woman's hand has far more detail. Depending on body fat levels, even veins should be pretty visible and not only under harsh lighting.

We just did full body scanning for a girl today, I'm pretty interested in the results. Human bodies look very different with a simple shader, without translucency and such.

I thought this was really impressive. I'm guessing this is the original Sinclair model (millions of polygons) before being transferred to in-game?

Was this model made entirely in ZBrush?

Yes, although the render is probably something else, most likely Mental Ray.

You can easily export a multi-million polygon mesh from Zbrush, add a SSS skin shader and the existing color map and get a render like that. Problem is, games have to resort to approximation - low poly mesh with normal maps and crude realtime shaders.

These are just separate sculpts based on the same head. You can save different versions or you can just use the layer system in Zbrush for each of the expressions.

The way the tech is implemented in the game engine is that they then generate different normal maps for each of the expression sculpts, and blend them over the original normal map depending on what facial expressions are dialed in with the animation. This tech is actually quite old, the first Mass Effect and Uncharted games were already using it. It's usually referred to as "wrinkle maps", and current engines are usually able to use a somewhat more complex approach where maps can be assigned to regions of the face and so on. So the whole thing is basically an engine feature, there's no off-the-shelf software for that.

Still, you need good base deformations on the face model itself for them to work well, and that's where KZ4 didn't really shine... But new games like Ryse and Last of Us, or UC4 and QB are looking quite good.

Dominik D

Regular

True. This seems to be the case for every single Quantic Dream demo too: looks great in isolation, breaks when they try to achieve real-time results in actual environments.
