That's amazing for 720p. Is the ghosting under control?

At 1440p DLSS Performance I think you could reasonably run path tracing on a 4070. Add FG on top, which I've found works fine at a 45-50 fps base, and you could have a pretty decent experience. It wouldn't be good for competitive shooters, but I don't think those games have PT anyway.
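
For anyone wondering where the 720p figure comes from, here's a rough sketch of the arithmetic. The scale factors are the commonly cited per-axis DLSS presets, and the 2x frame generation multiplier is an idealized assumption (real FG has some overhead, so actual output is usually a bit lower). The function names are made up for illustration.

```python
# Commonly cited per-axis DLSS render scales (assumed; games can override them).
DLSS_SCALE = {
    "quality": 2 / 3,
    "balanced": 0.58,
    "performance": 0.5,
    "ultra_performance": 1 / 3,
}


def internal_resolution(width, height, mode):
    """Return the approximate internal render resolution for a DLSS mode."""
    scale = DLSS_SCALE[mode]
    return round(width * scale), round(height * scale)


def fg_output_fps(base_fps, multiplier=2.0):
    """Idealized displayed fps with frame generation (ignores FG overhead)."""
    return base_fps * multiplier


# 1440p output with DLSS Performance renders internally at 720p.
print(internal_resolution(2560, 1440, "performance"))

# A 45-50 fps base would land somewhere around 90-100 fps displayed, at best.
print(fg_output_fps(45), fg_output_fps(50))
```

Keep in mind that input latency still tracks the base frame rate, not the generated one, which is why this is fine for single-player games but not for competitive shooters.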

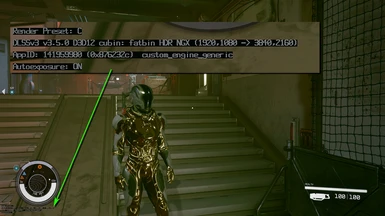

I've only had a really quick look, so I'm not sure about ghosting. There wasn't any noticeable ghosting on those particles around the gemstone in Remnant 2, and those are constantly moving. I didn't see anything that stood out to me in Remnant, but I wasn't looking for it. I do generally adjust my settings to get 120 fps or more, and that really helps minimize issues with DLSS anyway.