I am sorry, but this is simply not true. That's not true. The cost per transistor stopped dropping, that's not the same thing as an increase. It has remained stable for 4 generations now and you didn't have the sort of prices you have now on 20nm and 16nm. What you have is AMD not able to compete. Drop it, this argument has already led to the forum being closed and you are bringing it again.

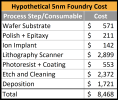

These numbers are vendor agnostic:

Big Trouble At 3nm

Costs of developing a complex chip could run as high as $1.5B, while power/performance benefits are likely to decrease.

Even wafers are getting more expensive.

TSMC 3 nm Wafer Pricing to Reach $20,000; Next-Gen CPUs/GPUs to be More Expensive

Semiconductor manufacturing is a significant investment that requires long lead times and constant improvement. According to the latest DigiTimes report, the pricing of a 3 nm wafer is expected to reach $20,000, which is a 25% increase in price over a 5 nm wafer. For 7 nm, TSMC managed to...
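As a quick sanity check on the figures quoted above, here is a minimal sketch that backs out the 5 nm wafer price implied by "$20,000 after a 25% increase". The function and variable names are my own and purely illustrative; the only inputs are the two numbers from the report.

```python
def implied_previous_price(new_price: float, pct_increase: float) -> float:
    """Return the earlier price, given the new price and the percentage increase."""
    return new_price / (1 + pct_increase / 100)


# Figures taken from the DigiTimes report quoted above.
n3_wafer_usd = 20_000   # expected 3 nm wafer price
increase_pct = 25       # stated increase over a 5 nm wafer

n5_wafer_usd = implied_previous_price(n3_wafer_usd, increase_pct)
print(f"Implied 5 nm wafer price: ${n5_wafer_usd:,.0f}")
```

That works out to roughly $16,000 per 5 nm wafer, so the step to 3 nm adds about $4,000 per wafer before any yield or density effects are considered.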

I am sorry if these numbers offend you, but asking me to stop posting because you feel I should is not a valid request from my perspective.

Please counter with numbers, if you feel that the numbers are false.

(No offence meant