> What do you mean 'spent so much time'?

In marketing materials, during the unveil, etc.

It would make sense if DP 1.4 was all Nv had, but they also have HDMI 2.1, which kinda negates this in full.

> but they also have HDMI 2.1 which kinda negates this in

HDMI2.1 monitors are of mucho noexisto kind so...

> HDMI2.1 monitors are of mucho noexisto kind so...

Every new monitor supports HDMI2.1. Sure, don't buy one with the latest G-Sync module...

> HDMI2.1 monitors are of mucho noexisto kind so...

And DP 2.1 monitors are aplenty, apparently.

> And DP 2.1 monitors are aplenty, apparently.

That's not an excuse to ship outdated display cores.

> That's not an excuse to ship outdated display cores.

With zero DP 2.1 products on the market, there's nothing "outdated" about DP 1.4. It also provides enough bandwidth to hit 4K/240Hz - which is still enough for the next several years, as we currently have only one product capable of such refresh rates.
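For anyone who wants to sanity-check the 4K/240Hz bandwidth point, here is a rough back-of-the-envelope sketch in Python. The link figures (4 lanes of HBR3 at 8.1 Gbit/s with 8b/10b coding) are the usual DP 1.4 numbers as far as I know; the function names are made up for illustration, and blanking overhead is ignored, so real requirements are somewhat higher. Under those assumptions the uncompressed stream does not fit and the mode depends on DSC, which is commonly quoted as handling up to roughly 3:1.

```python
# Assumed DP 1.4 link figures: HBR3, 4 lanes, 8b/10b line coding.
HBR3_LANE_GBPS = 8.1
LANES = 4
CODING_EFFICIENCY = 8 / 10


def dp14_payload_gbps() -> float:
    """Usable payload rate of a 4-lane HBR3 link in Gbit/s."""
    return HBR3_LANE_GBPS * LANES * CODING_EFFICIENCY


def active_pixel_gbps(width: int, height: int, refresh_hz: int,
                      bits_per_pixel: int) -> float:
    """Uncompressed rate for the visible pixels only (blanking ignored)."""
    return width * height * refresh_hz * bits_per_pixel / 1e9


def required_compression(width: int, height: int, refresh_hz: int,
                         bits_per_pixel: int) -> float:
    """Compression ratio needed to fit the stream into the DP 1.4 payload."""
    stream = active_pixel_gbps(width, height, refresh_hz, bits_per_pixel)
    return stream / dp14_payload_gbps()


if __name__ == "__main__":
    # 24 bpp = 8 bits per channel RGB, 30 bpp = 10 bits per channel RGB.
    for bpp in (24, 30):
        ratio = required_compression(3840, 2160, 240, bpp)
        print(f"4K/240Hz at {bpp} bpp needs about {ratio:.2f}:1 compression")
```

A ratio above 1 means the mode only works with compression (or chroma subsampling); a ratio at or below 1 would fit uncompressed.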

On PC you need a higher framerate because a mouse causes much faster camera motion. 30fps often feels unplayable, while on console it's more acceptable.

That's generally true; however, it depends on the user, what you're used to, and what kind of display and control input you use. Those looking to play 30fps single-player games are more often using a controller and/or TV than, say, someone aiming to play CSGO or Fortnite at well above 144fps. PC-to-TV usage has drastically increased since the last generation started, and so has the usage of controllers. Though kb/m and a monitor are still the most common on the platform, most have the option to connect to a TV.

Also, with similar HW, you'll get worse performance because PC lacks some optimization options (both SW and HW). It is an open platform spanning variable HW, which requires compromised APIs and reduces both the options for and the value of low-level optimizations.

Thus you need a bit more powerful HW in general on PC to have a good experience with the same games, even if you're not an enthusiast aiming for high gfx settings.

I'm sure there's more overhead on the PC, but I'm also sure it's not as huge a deficit as was seen in previous generations. The mentioned 6600XT is actually somewhat more capable, and judging by comparisons on YT, it's at least a match, more often than not outperforming the PS5. Perhaps due to Infinity Cache, quite a bit higher clocks, etc., which help out in raster and RT?

Anyway, a 6600XT should suffice for most PC gamers looking to get ballpark premium console experiences. Some settings might have to be lowered, perhaps, while others allow for higher settings.

We're not talking about needing 6800, 3080 or 4080 class products, which aren't really for the mainstream, I think. I'd say a 3060Ti is a very good mainstream GPU at its current price: you get above-baseline raster and way more capable RT, as well as DLSS. The A770, if you aren't so much into older games, is just as good an alternative as well.

I REALLY like the design of that card. Looks sexy

I like the design as well; I hope we see more of that from NV too.

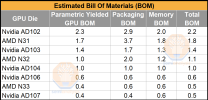

> *snip*

Are these the same guys that were suggesting AMD was paying ~$300 for HBM back in the day?

For what it's worth, here are Semianalysis's BOM estimates - https://www.semianalysis.com/p/ada-lovelace-gpus-shows-how-desperate

*snip*

> Are these the same guys that were suggesting AMD was paying ~$300 for HBM back in the day?

I don't think that was dolan, but yes, he's one helluva grifter.

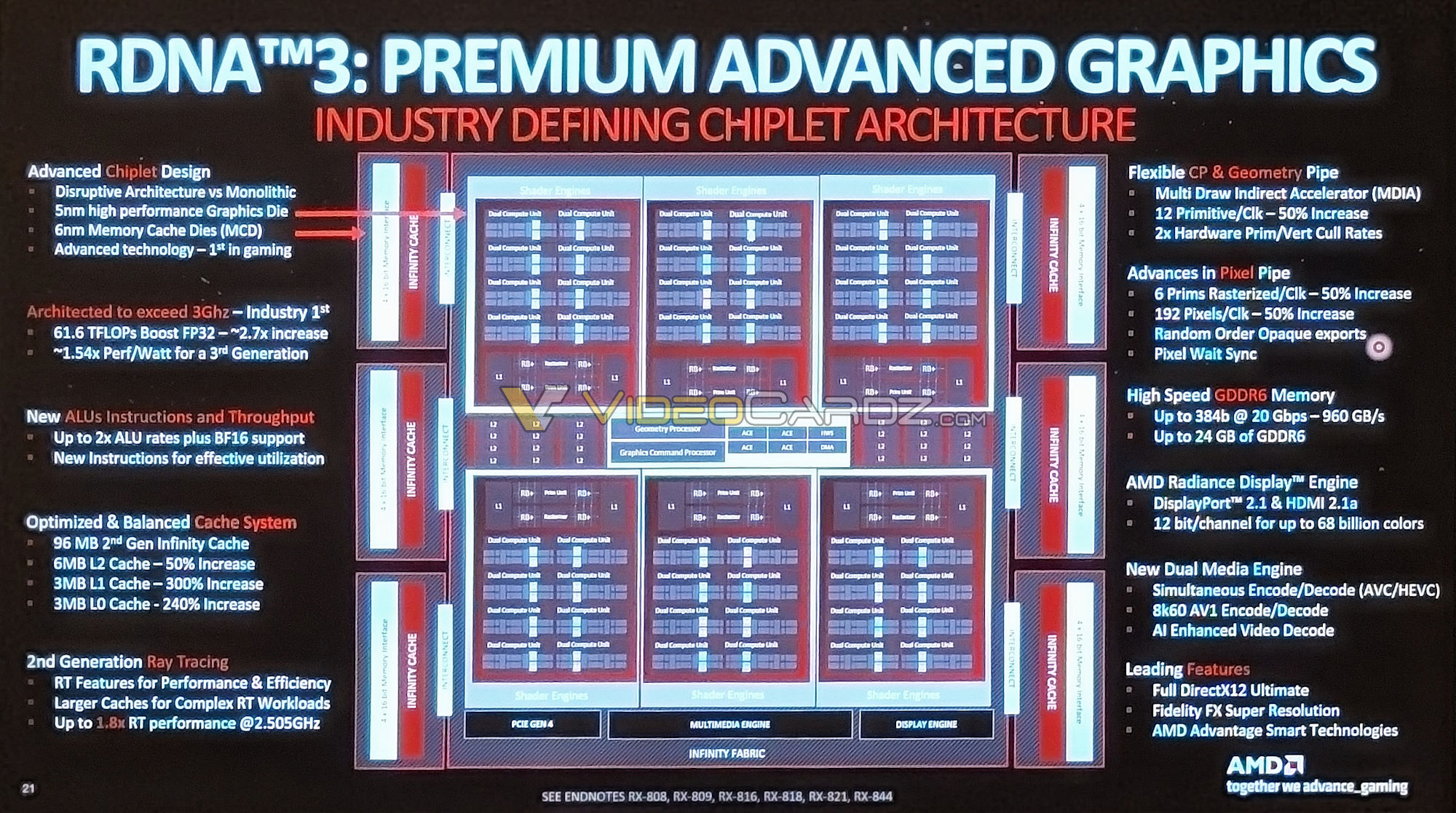

> What is the New Flexible CP & Geometry Pipe? What does CP mean?

Command Processor?

Source: https://videocardz.com/newz/alleged-amd-navi-31-gpu-block-diagram-leaks-out

> Command Processor?

Yeah, it's right there dead center in the middle of the chip.

If this is accurate, it means LDS has been practically doubled?

> Are these the same guys that were suggesting AMD was paying ~$300 for HBM back in the day?

Well, at least they accounted for MCDs and packaging costs, so it still looks way more believable in comparison with something like that "Nvidia would be lucky if AD102 is less than 3x Navi 31."

> Well, at least they accounted for MCDs and packaging costs, so still looks way more believable in comparison with something like that "Nvidia would be lucky if AD102 is less than 3x Navi 31."

Packaging costs are a small percentage of a product that has a "relatively" high BOM.

> AD106 is only 1.5x when they are paying at least 2x more a wafer? How?

Where did you get that? Any proof?

> Just look at N33 vs AD106... roughly the same size but one is on N6 and the other is on a custom 4nm.

Again, that's not confirmed info on N33, so it's still highly inaccurate and speculative stuff.

> Even if AMD's packaging costs are 3-4x a monolithic GPU, it doesn't change the math very much.

$5 turned into $20 would change a lot.
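Both posts can be right at once, depending on what the extra $15 is measured against. A tiny Python sketch of that arithmetic follows. It uses the $5 and $20 packaging figures from the exchange above; the total BOM values are purely hypothetical placeholders (no real BOM numbers are implied), and the function names are invented for illustration.

```python
def packaging_growth(old_usd: float, new_usd: float) -> float:
    """How many times larger the packaging cost becomes."""
    return new_usd / old_usd


def bom_increase_fraction(old_usd: float, new_usd: float,
                          total_bom_usd: float) -> float:
    """Packaging cost increase as a fraction of the original total BOM."""
    return (new_usd - old_usd) / total_bom_usd


if __name__ == "__main__":
    old_pkg, new_pkg = 5.0, 20.0  # figures quoted in the thread
    print(f"Packaging cost grows {packaging_growth(old_pkg, new_pkg):.0f}x")
    # Hypothetical total BOM values, chosen only to show the trend.
    for total_bom in (100.0, 200.0, 400.0):
        share = bom_increase_fraction(old_pkg, new_pkg, total_bom)
        print(f"Total BOM ${total_bom:.0f}: +{share:.1%} from packaging")
```

The higher the assumed total BOM, the less the same packaging increase moves the overall number, which is the point being argued on both sides.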

> Forgot to mention that GDDR6x still has ~20-30% price premium over GDDR6.

Is there any real info on prices rather than just rumors?

I guess I just struggle to relate to this hypothetical person that would always use the RT mode on console games (which in many titles, can mean you're locked at 30fps or an unlocked framerate below 60), yet will also never use it on a card that will give them ~3X the performance with the same settings.

Like sure, I haven't taken an extensive survey, and perhaps it's a very biased example as it's based on people that use Internet forums. But when the topic of RT comes up, far more often than not, the biggest pushback against its value that I see comes from console gamers. I expect more often than not, when given the choice, it's just not enabled due to the resolution/performance hit.

So in reality, I don't think this individual who thinks in this binary between "RT is worthless/RT means the best graphics" is really that common. I think most make their judgment on a title-by-title basis: for some, the more physically accurate lighting/shadows is worth the performance/resolution hit. For others, the scenes where these enhancements are actually noticeable are too rare to justify the drawbacks to the rest of the presentation, which are ever-present. The person that would disable RT on their 7900 is sure as hell not going to enable it on their console titles if given the choice, so it's not 'they're getting worse graphics' - they're getting far better graphics and performance vs. the mode they would also use on the consoles.

Don't forget, too, that where the Ampere/Ada architecture really shines with RT is when the higher precision settings are used. When the lower settings that consoles employ are also used on RT titles, RDNA naturally doesn't suffer quite as catastrophically by comparison. Whether those lowered settings make RT pointless will of course be a matter of debate - but if they do, then the "but consoles will use RT" argument makes even less sense.

I guess the contention here is always going to be what 'into play' means, as it's such a variable impact depending on the title and the particular precision level used. The main argument is that in the future, this impact will be far more significant, as much more of the rendering pipeline will be enhanced by, or fully based on, RT. Ok then, but you can't expect people to fully embrace a hypothetical future - they're going to buy the hardware they feel can enhance the games they're playing now.

I mean the extra outlay you spend on a PC over a console is justified for many reasons, but one of those is certainly that all of your existing library is enhanced immediately rather than hopes and prayers for it to be fully taken advantage of in an indeterminate future.

> pjbliverpool said:
> The main thrust of my argument was that if we are to say RT performance doesn't matter because we'll just turn it off anyway, then you are accepting that on your $900 GPU, you are getting a lesser experience in some respects than even an Xbox Series S gamer. On the other hand, if you turn RT on, then the AMD GPU is much slower than its competing Nvidia GPU. Both options are bad IMO.

And on the other-other-hand, for the equivalent price bracket, the Nvidia GPU could end up being much slower than the 7900 in rasterization, which is still important for a massive number of games, especially when you value a high frame rate experience.

> Will AMD allow that?

Yea.

> it becomes a very pointless card

Kinda the point, really.