The thing is that the first to show ray tracing back in the day were the Intel folks, and when they did, RT was still science fiction to say the least, so they certainly have some experience in this regard.

Wow Intel has some really strong opinions on RT architecture and they mostly seem to be saying that AMD is doing it all wrong.

While Intel’s approach is very similar to Nvidia’s, they’re doing some things differently that could give them a big advantage. SIMD execution is only 8-wide when running RT, meaning fewer and shorter stalls compared to Nvidia’s 32-wide warps. Intel is also sorting rays after each bounce, which would further reduce losses due to divergence.

- Don’t do BVH traversal on vector units

- DXR 1.0 style PSOs are better than DXR 1.1 ubershaders doing inline RT

Alchemist could make things really interesting!
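To get a feel for the SIMD-width argument above, here is a toy Python model (not Intel's or Nvidia's actual scheduler). It assumes each SIMD group has to run once per distinct hit shader among its lanes, and that rays pick their shader uniformly at random. `NUM_RAYS`, `NUM_SHADERS` and the function names are made up for illustration.

```python
import random


def divergent_passes(shader_ids, width):
    """Count serialized shading passes: each SIMD group of `width` lanes
    runs once per distinct shader ID found among its lanes."""
    passes = 0
    for start in range(0, len(shader_ids), width):
        group = shader_ids[start:start + width]
        passes += len(set(group))
    return passes


def lane_utilization(shader_ids, width):
    """Fraction of lane-slots doing useful work across all passes."""
    return len(shader_ids) / (divergent_passes(shader_ids, width) * width)


rng = random.Random(0)   # fixed seed so runs are repeatable
NUM_RAYS = 4096          # hypothetical ray count
NUM_SHADERS = 16         # hypothetical number of distinct hit shaders
rays = [rng.randrange(NUM_SHADERS) for _ in range(NUM_RAYS)]

for width in (8, 32):
    unsorted_util = lane_utilization(rays, width)
    sorted_util = lane_utilization(sorted(rays), width)
    print(f"width {width:2d}: unsorted {unsorted_util:.3f}, sorted {sorted_util:.3f}")
```

Under these assumptions the 8-wide groups waste fewer lane-slots than the 32-wide ones, and sorting `rays` by shader before grouping pushes both widths toward full utilization.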

Intel ARC GPUs, Xe Architecture for dGPUs [2018-2022]

- Thread starter DavidGraham

- Tags

- intel

- Status

- Not open for further replies.

Jawed

Legend

In the video he says that target shaders (signatures) are sorted. Textures that make up materials are still an opportunity for memory divergence.

Question: Can it keep grouping efficiency when it has to call very divergent materials, as with ray-traced reflections?

The sorting (backed by spilling state to cache hierarchy) looks pretty funky. The key is what happens after 2 or 3 ray bounces and whether there's enough targets to sort into "meaningfully full" hardware threads.
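A rough way to picture the "meaningfully full" concern is the sketch below. It is a toy model, assuming rays are binned by target shader and each bin is cut into 8-lane hardware threads; `pack_sorted` and `occupancy` are hypothetical names, not anything from Intel's documentation.

```python
from collections import Counter


def pack_sorted(shader_ids, width=8):
    """Bin rays by target shader, then cut each bin into `width`-lane threads.
    Returns (full_threads, partial_threads)."""
    full = partial = 0
    for count in Counter(shader_ids).values():
        full += count // width
        partial += 1 if count % width else 0
    return full, partial


def occupancy(shader_ids, width=8):
    """Share of lanes that carry a ray once the bins are packed."""
    full, partial = pack_sorted(shader_ids, width)
    threads = full + partial
    return len(shader_ids) / (threads * width) if threads else 0.0


# 64 rays hitting only 2 targets: every thread is full.
few_targets = [0] * 32 + [1] * 32
# 64 rays spread over 32 targets (2 rays each): every thread is mostly empty.
many_targets = [i // 2 for i in range(64)]

print(pack_sorted(few_targets), occupancy(few_targets))
print(pack_sorted(many_targets), occupancy(many_targets))
```

The same 64 rays go from fully packed threads to quarter-full ones purely because they scatter over more targets, which is the situation later bounces could create.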

The Intel approach is not too dissimilar to that described by James McCombe at SIGGRAPH 2013 (See first video in https://dlnext.acm.org/doi/abs/10.1145/2504435.2504444 )

Sorting may also incur unnecessary overhead for more trivial cases where there isn’t a lot of shader divergence. e.g. RT sun shadows. Hopefully it’s smart enough to know when to turn it off.

Nvidia says this about the benefits of separate shaders. They haven’t talked about sorting so I wonder how it helps on their hardware.

“In particular, avoid übershaders that manually switch between material models. When different material models are required, I recommend implementing each in a separate hit shader. This gives the system the best possibilities to manage divergent hit shading.”

Dayman1225

Newcomer

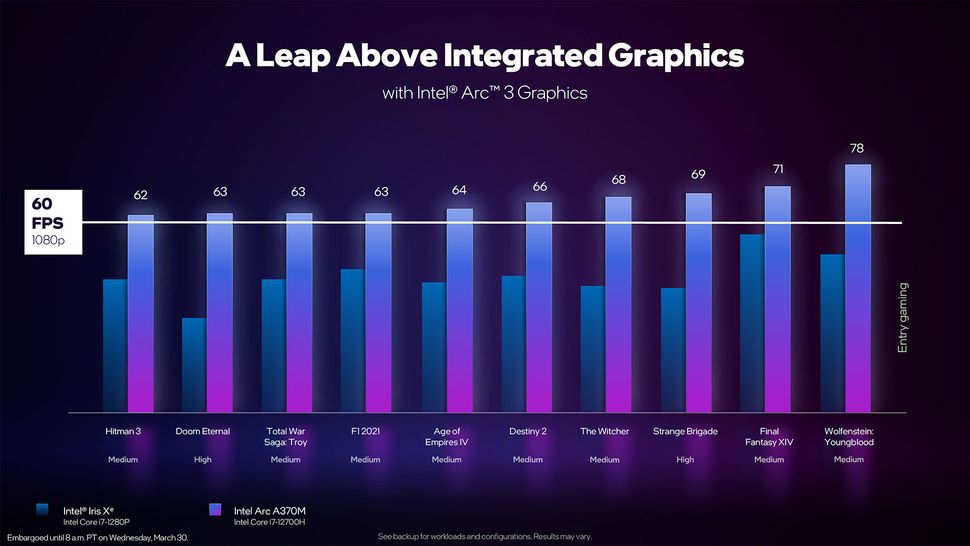

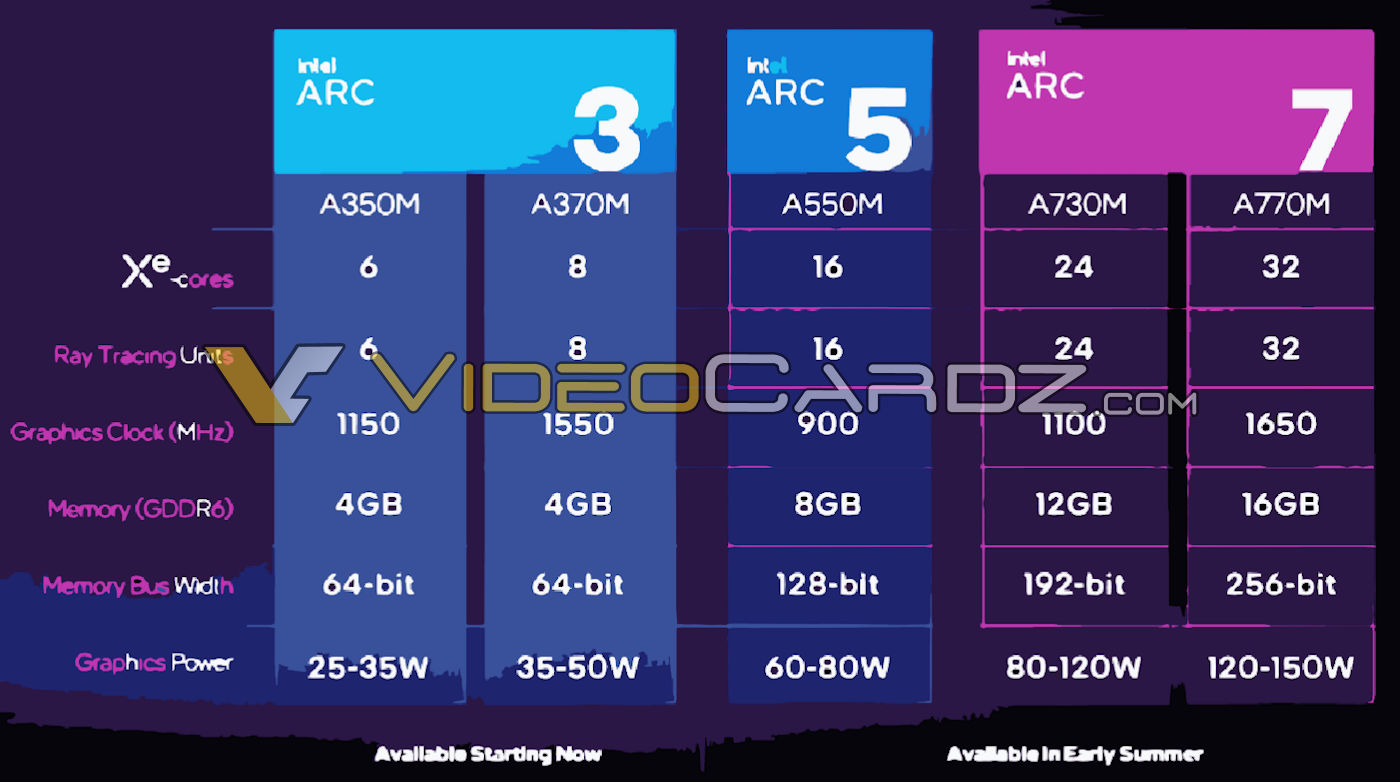

Videocardz leaking specs of the A770M which is perhaps launching tomorrow?

Dayman1225

Newcomer

Update on this since I can’t edit.

Article posted by Videocardz.

Only the A300M series is available from tomorrow; A500M and A700M follow in early summer.

https://videocardz.com/newz/intel-a...res-and-16gb-g6-memory-to-launch-early-summer

Silent_Buddha

Legend

At 25-35W for A350M, if it has really good video decode performance I'd be really interested in that product as a discrete video card depending on its price.

Regards,

SB

Leaks already? Quelle surprise!

It seems AMD's APUs with RDNA 2 have a much lower TDP. We just need to compare the sustained performance, then.

If they turn out to be good, I might return to Intel, which were my favourite "GPUs" at the time. I want to buy a laptop in the future. From 2005 until 2017 I always bought laptops, all of them with integrated Intel GPUs (and one with a GTX 1050 Ti too). I feel nostalgia and a warm affection for the unfortunately basic GPUs of Intel.

I had a great time playing with low details (frustrating at times, especially when I purchased Diablo 3 on day one), but it was glorious to watch your games on a laptop at least.

Some more interesting info:

Intel Reveals Full Details for Its Arc A-Series Mobile Lineup | Tom's Hardware (tomshardware.com)

We suspect Intel will break 2GHz on desktop cards, but the mobile parts appear to top out at around 1.55GHz on the smaller chip and 1.65GHz on the larger chip. Do the math and the smaller ACM-G11 should have a peak throughput of over 3 TFLOPS FP32, with 25 TFLOPS of FP16 deep learning capability. The larger ACM-G10 will more than quadruple those figures, hitting peak throughput of 13.5 TFLOPS FP32 and 108 TFLOPS FP16.
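For anyone who wants to "do the math" themselves, here is a minimal sketch of the peak-throughput arithmetic. The Xe-core counts (8 for ACM-G11, 32 for ACM-G10) and the per-core rates (128 FP32 lanes with FMA counted as 2 ops, 2048 FP16 XMX ops per clock) are not in the quoted text; they are assumptions chosen because they reproduce the quoted figures at the quoted clocks.

```python
def peak_tflops(xe_cores, clock_ghz, ops_per_clock_per_core):
    """Peak throughput in TFLOPS = cores * ops per clock per core * GHz / 1000."""
    return xe_cores * ops_per_clock_per_core * clock_ghz / 1000.0


FP32_OPS = 128 * 2    # assumed: 128 FP32 lanes per Xe-core, one FMA = 2 ops
FP16_XMX_OPS = 2048   # assumed: FP16 matrix ops per clock per Xe-core

# Assumed Xe-core counts; clocks are the ones quoted from Tom's Hardware.
g11_fp32 = peak_tflops(8, 1.55, FP32_OPS)
g11_fp16 = peak_tflops(8, 1.55, FP16_XMX_OPS)
g10_fp32 = peak_tflops(32, 1.65, FP32_OPS)
g10_fp16 = peak_tflops(32, 1.65, FP16_XMX_OPS)

print(f"ACM-G11: {g11_fp32:.2f} TFLOPS FP32, {g11_fp16:.1f} TFLOPS FP16")
print(f"ACM-G10: {g10_fp32:.2f} TFLOPS FP32, {g10_fp16:.1f} TFLOPS FP16")
```

These are theoretical peaks at the stated clocks, so sustained numbers on a power-limited laptop part would likely come in lower.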

They've always been capable of playing some oldies. I remember playing with the i810 and being fairly satisfied with what it could do.  Sandy Bridge was when things started to get interesting.

I suppose I'm most interested in how they behave with SteamVR. NVidia has a lot of VR functionality. Some games support DLSS. They have VRSS foveated rendering capability too which might become very important. AMD has much less going for them. I don't know if Intel has anything going for VR.

https://tenor.com/view/mando-way-this-is-the-way-mandalorian-star-wars-gif-18467370

Intel is really stirring the pot with their more accurate definition of a core.

DavidGraham

Veteran

This method accounts for the change in architecture inside each GPU SM/Core.

How are we supposed to compare to the other guys where every SIMD lane is a “core”?

Perhaps we should borrow from the CPU guys and introduce the concepts of cores and threads, where a 3090 Ti is composed of 84 cores, with each core having 128 threads.
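As a quick sanity check on that cores-and-threads framing, the arithmetic below multiplies the two numbers from the post. The variable names are just for illustration, and the product lines up with the 10,752 CUDA cores Nvidia advertises for the 3090 Ti.

```python
SM_COUNT = 84        # "cores" in the CPU-style framing (SMs on a 3090 Ti)
LANES_PER_SM = 128   # "threads" per core (FP32 lanes per SM)

cuda_cores = SM_COUNT * LANES_PER_SM
print(f"{SM_COUNT} cores x {LANES_PER_SM} threads = {cuda_cores} marketing 'cores'")
```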

Did you really have the Intel 740 from 1998? Isn't that the only dedicated GPU they have made before?

Silent_Buddha

Legend

some more leaks:

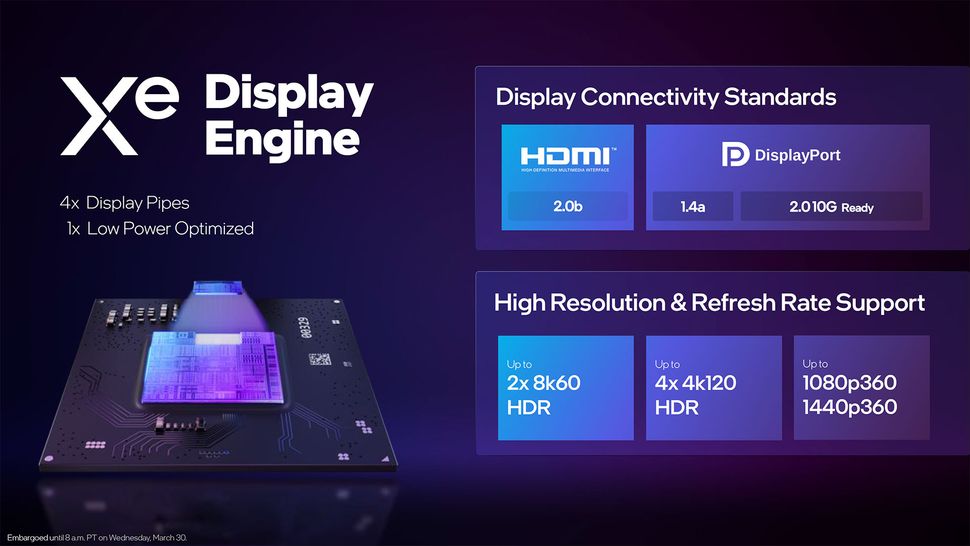

Damn, only HDMI 2.0b? All of my displays are HDMI only now and DP to HDMI 2.1 converters are still very much hit or miss as to whether or not they will work correctly. Bleh.

Regards,

SB
