Arguing with the IHV slideware again huh

Have not seen this slide, so thanks, but I am not arguing with the "conceptual illustration purposes only" slides.

I am talking about the issues with low precision modes, which nobody wants to highlight in marketing slides or in academic papers on "low precision" research.

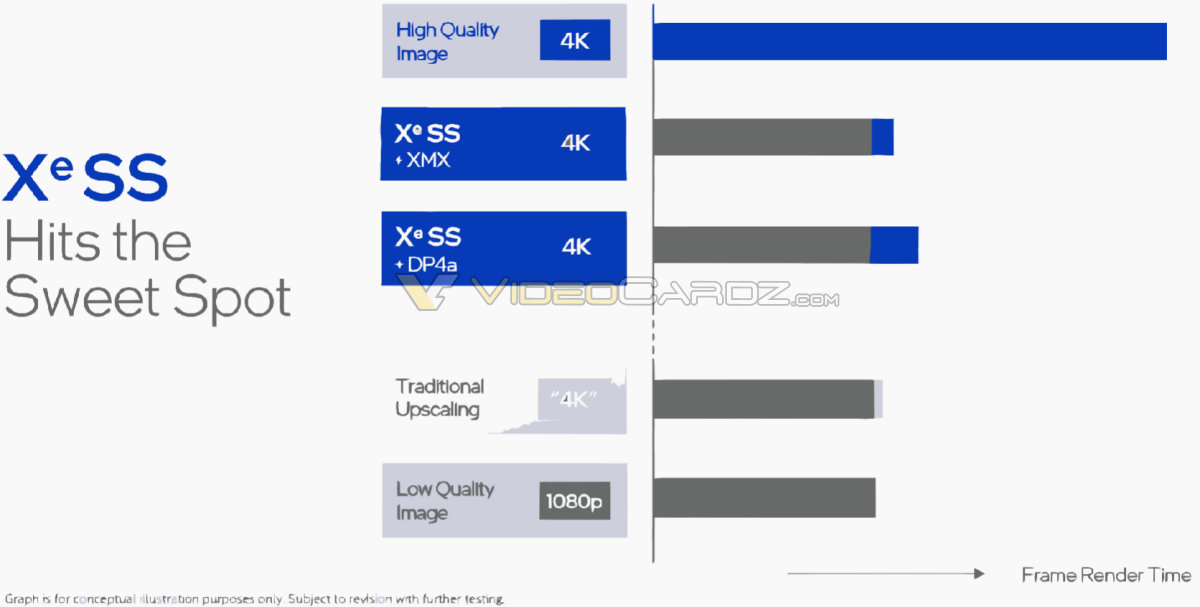

As for perf, DP4A takes 2.2x the time, which translates into something like 3-4x more time spent on the NN's execution. That matches expectations quite well, i.e. the theoretical difference in op throughput between DP4A and XMX.
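
A rough sketch of that arithmetic, assuming the 2.2x applies to the whole upscaling pass and that the non-NN part of the pass costs the same on both paths. The function name `nn_slowdown`, the parameter `nn_fraction` and the NN shares tried below are made up for illustration, not measured values.

```python
def nn_slowdown(total_slowdown: float, nn_fraction: float) -> float:
    """NN-only slowdown implied by a whole-pass slowdown.

    nn_fraction is the (assumed) share of the fast-path pass time spent in
    the network; the remaining work is assumed to cost the same on both paths.

    From total = (1 - f) + s * f, solving for s gives s = 1 + (total - 1) / f.
    """
    if not 0.0 < nn_fraction <= 1.0:
        raise ValueError("nn_fraction must be in (0, 1]")
    return 1.0 + (total_slowdown - 1.0) / nn_fraction


# Hypothetical NN shares of the XMX pass time, for illustration only.
for share in (0.4, 0.5, 0.6):
    print(f"NN share {share:.0%}: NN slowdown {nn_slowdown(2.2, share):.1f}x")
```

Under these assumptions, a 3-4x NN slowdown corresponds to the network taking roughly 40-60% of the pass on the XMX path.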

These bars are useless though, since there is zero information on actual frame times. Whether that DP4A mode is productizable for real games still has to be proven, and there are a lot of issues to solve with precision, performance, post-processing and generalization.

The best way to do it would be to offer decent, open-source and/or IHV-agnostic competition

It will be entertaining to see how open-sourcing the NN training environment, with the data sets and all the related quirks, will accelerate adoption. There are so many data scientists with graphics engineering skills in game development that everybody would love to learn all that stuff immediately.