AMD: R8xx Speculation

- Thread starter Shtal

Tridam's summaries are always easy on the eyes, but, as usual, check the individual game scores (from as many sites as possible) to see how it performs in your games. Or, in your case, an infinitesimal subset of your games.

Xbit's summary chart is great.

TechPowerUp has such summaries as well. I really like them and think they help put things in overall perspective. Bigger sites like Anand should adopt them; they're a no-brainer, IMO.

What do you guys think for "5890"? Just a clock boost I assume?

One thing I always wonder: since they did the cap ring on the 4890, shouldn't they know what works and just build it into succeeding chips right away? I mean, why wait?

I think a clock boost plus a GDDR5 speed boost sounds pretty tasty. This chip seems like one that could uniquely benefit from a little extra memory bandwidth, so a refresh has extra potential. Also, they're starting at an already blazing fast clock. A core increase should reach 950 or even 1000 MHz with ease, versus 850 MHz for the 4890.
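For scale, here is what those speculative refresh clocks would mean as a percentage gain over the 850 MHz figure quoted above. This is a plain percentage calculation; the 950 and 1000 MHz targets are the poster's speculation, not announced specs.

```python
# Relative core-clock gain of a speculative refresh over an 850 MHz baseline.
BASELINE_MHZ = 850  # core clock quoted in the post for the 4890


def clock_gain_pct(new_mhz: float, base_mhz: float = BASELINE_MHZ) -> float:
    """Return the clock increase of new_mhz over base_mhz, in percent."""
    return (new_mhz / base_mhz - 1.0) * 100.0


for target in (950, 1000):  # speculative refresh targets from the post
    print(f"{target} MHz: +{clock_gain_pct(target):.1f}%")
```

So the suggested range works out to roughly a 12-18% core-clock bump, before any memory-clock increase.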

Are more functional units ruled out?

I still wonder why this chip doesn't reach its theoretical 2x the 4890 in games. It performs almost exactly like a 4870X2, when it should do significantly better, not using CF AND being clocked faster. I have to wonder if whatever texturing change they made was a bad move, but that's pure rampant and probably incorrect speculation on my part. If it is the texturing change, perhaps drivers will help a lot in the future. If not, perhaps it's being dinged a bit by bandwidth plus drivers. Or perhaps it's something I would have no clue about (like the internal caches).

I have to say, as a 4890 owner I'm kind of bummed about missing out on the 27W idle of this thing. I feel slightly like ATI let me down or something. Knowing I'm going to have 90 watts heating up my room (I live in Texas, heh) at idle for the foreseeable future isn't the best.

Looking at the TechPowerUp article, it seems clear that the 5870 is pretty much a single-chip 4870X2, with DX11, at a lower price.

Even if GT300 is a 2x improvement over GT200 in specs, like RV870, it is highly unlikely to be a flat 2x improvement in performance. Such things rarely happen, as Cypress shows. At the moment it looks hard for it to beat Hemlock, even if Hemlock shows only ~30-40% scaling on average.

You forget that bandwidth was not doubled.
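To put numbers on this point, the sketch below compares peak shader throughput and memory bandwidth, assuming the commonly published reference-board specs (HD 4890: 800 stream processors at 850 MHz, 256-bit GDDR5 at 3.9 Gbps per pin; HD 5870: 1600 stream processors at 850 MHz, 256-bit GDDR5 at 4.8 Gbps per pin) and counting one multiply-add (2 FLOPs) per stream processor per clock. The `GpuSpec` class is a hypothetical helper for illustration only.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GpuSpec:
    """Hypothetical container for a few reference-board specs."""
    name: str
    stream_processors: int
    core_mhz: float
    bus_width_bits: int
    mem_gbps_per_pin: float  # effective GDDR5 data rate per pin

    def peak_gflops(self) -> float:
        # One multiply-add (2 FLOPs) per stream processor per clock.
        return self.stream_processors * 2 * self.core_mhz / 1000.0

    def bandwidth_gbs(self) -> float:
        # Total bits per second across the bus, converted to bytes.
        return self.bus_width_bits * self.mem_gbps_per_pin / 8.0


# Reference-board specs, assumed for illustration.
HD4890 = GpuSpec("HD 4890", 800, 850, 256, 3.9)
HD5870 = GpuSpec("HD 5870", 1600, 850, 256, 4.8)

compute_ratio = HD5870.peak_gflops() / HD4890.peak_gflops()
bandwidth_ratio = HD5870.bandwidth_gbs() / HD4890.bandwidth_gbs()
print(f"compute:   {compute_ratio:.2f}x")
print(f"bandwidth: {bandwidth_ratio:.2f}x")
```

Under these assumptions, peak shader throughput doubles while bandwidth rises by only about 23%. The comparison ignores texture and ROP rates and on-chip caches, so it frames the bandwidth argument rather than explaining the measured results.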

GT300 X2.

It will not be possible unless:

1) You shrink GT300 to 32 nm (which Cypress can be shrunk to as well) to get higher clocks. If a GT300 X2 overshoots the power budget, then GT300b will have pretty much no room for increasing clocks.

OR

2) GT300 was designed to be packed into an X2 card from the get-go, i.e. it has roughly the same power budget as the 5870/4870/GT200b. And even then, it would be a silent admission that they can no longer make single uber GPUs, even if they want to, and they say they want to. For the most part, NV releases X2 cards only when they have no other way to get the performance crown.

At this time, option 2 seems less likely to me.

I think it's a driver issue. RV770 -> RV870 is a change similar to X800 -> X1800 (smaller than X1900 -> HD2900, but bigger than RV670 -> RV770). Many things now work differently, and it will take some time to optimize the drivers. For example, drivers for RV770 brought significant performance changes up until this spring, and RV770 wasn't as big a step as RV870. My personal expectation is at least +10% by the end of this year, maybe more.

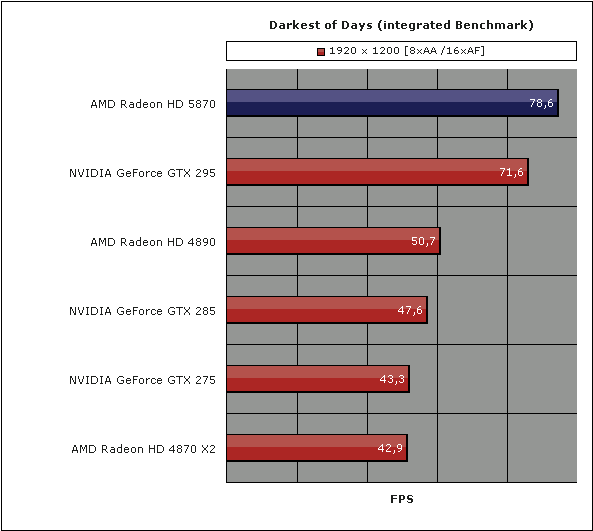

Btw, you can find situations where the HD5870 shows its full potential (Darkest of Days, Anno 1404, ArmA2), so I expect it's not a HW issue:

That's only a 55% increase, far from its full potential.

Yes, in this particular case the performance is at the expected level, but not when compared directly to the HD4890. Anyway, there are other examples where the HD5870 is faster than the HD4870X2 (with CF working):

http://www.legionhardware.com/document.php?id=858&p=3

http://www.hardware.fr/articles/770-12/dossier-amd-radeon-hd-5870.html

It was 90W for the initial 4870s; the 4890 was 60W, so you're about halfway there!

There were changes to the ASIC design to make that happen, and that was a direct result of what we learnt with RV7xx.
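The "about halfway there" remark can be checked against the idle figures quoted in the thread (90 W for the initial 4870s, 60 W for the 4890, 27 W for the 5870): the 4890 covers 30 W of the 63 W gap. A minimal sketch; `fraction_of_gap_closed` is a hypothetical helper name.

```python
def fraction_of_gap_closed(start_w: float, current_w: float, target_w: float) -> float:
    """Share of the idle-power gap (start_w -> target_w) already covered at current_w."""
    return (start_w - current_w) / (start_w - target_w)


# Idle power figures as quoted in the thread.
HD4870_IDLE_W = 90.0
HD4890_IDLE_W = 60.0
HD5870_IDLE_W = 27.0

share = fraction_of_gap_closed(HD4870_IDLE_W, HD4890_IDLE_W, HD5870_IDLE_W)
print(f"{share:.0%} of the way from 90 W to 27 W")
```

That comes to roughly 48%, so "about halfway" is a fair description.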

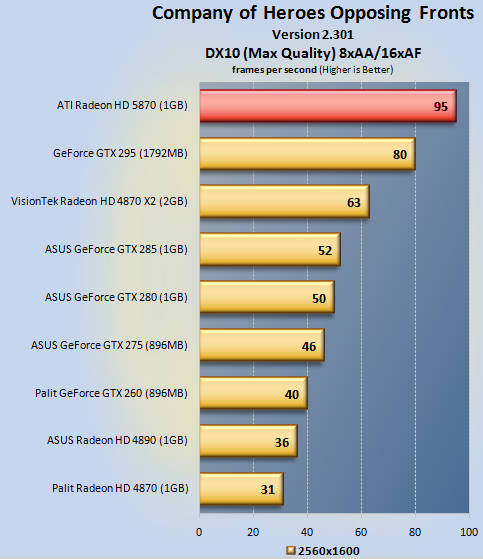

In Company of Heroes, it's 2.64 times as fast as the 4890.

That is what I wanted to ask. CoH was always a TWIMTBP game, as you can see from the 4890 benchmark. Something in the 5870 changed and we see the performance skyrocket! I wonder what is happening with the CoH engine. Were the TMUs and ROPs limiting the 4800 series, with Nvidia exploiting that in games? Can we see or conclude something about the AMD/Nvidia architectures?

This time, it's the 2nd option that's likelier. GT200b was the chip that went into the GTX 295. They might need only another revision for it, but a smaller manufacturing process doesn't seem necessary at this point. I think you're forgetting that they went from GT200 @ 65nm to GT200b @ 55nm, with the latter used for the dual-GPU (later dual-chip) card. Now they're starting at 40nm.

They may be starting with 40nm, but unless they planned to release an X2 part, or to keep an X2 part in reserve should it be needed, it seems most likely that they would have used nearly all the power budget to build an uber GT300.

Any particular reason they would not do that?
