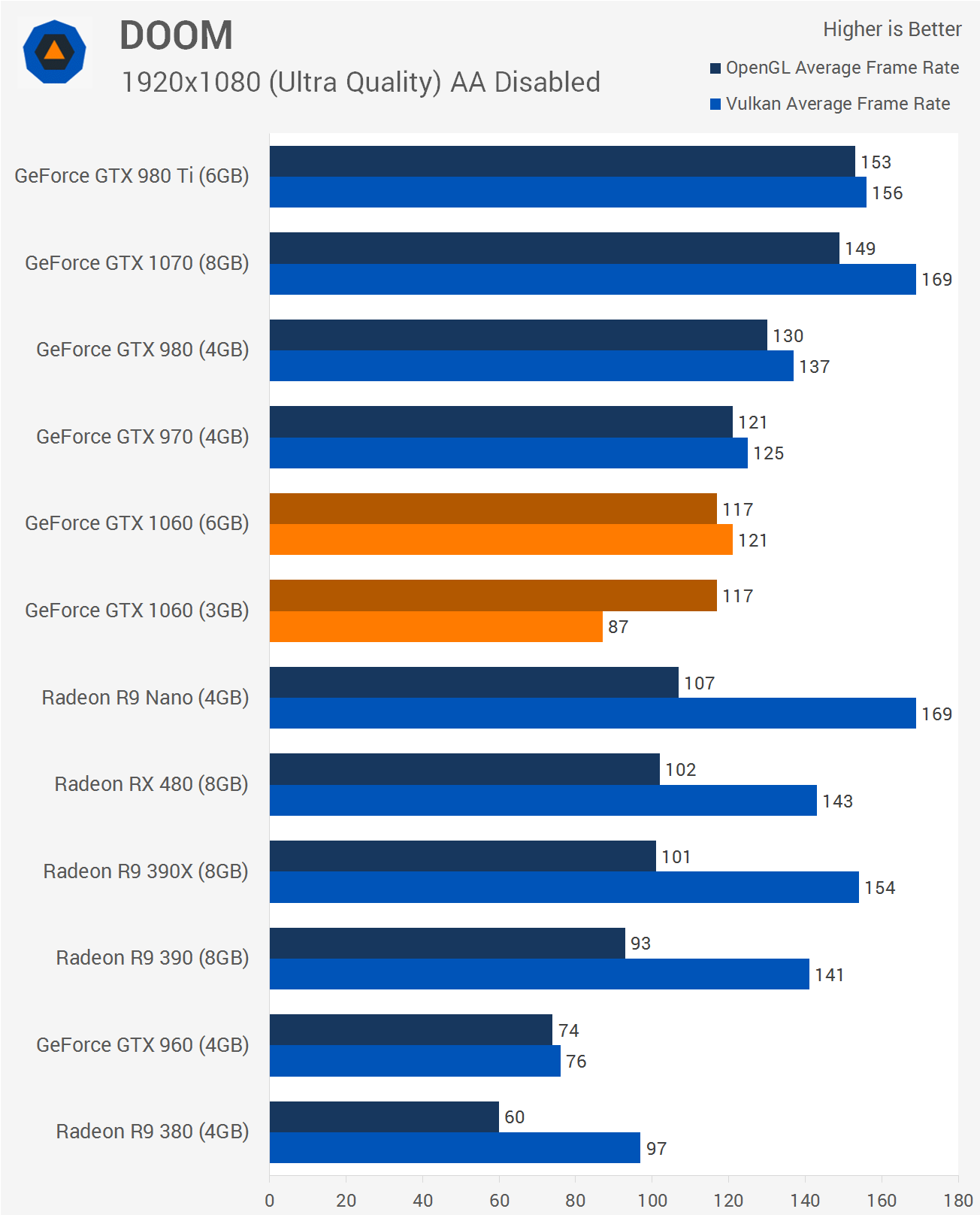

From the same review, it looks like in a GPU-limited scenario the AMD driver works just as well:

Though this isn't Vulkan, so unless we're also trying to make DX12 into a GCN construct, the point is a bit moot.

I actually saw some benchmarks of DOOM running in Vulkan on various older CPUs, comparing a GTX 1060 and an RX 480.

http://www.hardwareunboxed.com/gtx-1060-vs-rx-480-in-6-year-old-amd-and-intel-computers/

^This was before driver 372.54.

372.54 brought driver support for Vulkan runtime version 1.0.0.17 and, with it, reduced CPU render times by a factor of 4 in one benchmark I saw. Obviously, the effects of that still need to be checked with higher-end graphics cards.

http://www.sweclockers.com/test/22533-snabbtest-doom-med-vulkan