
Value of Hardware Unboxed benchmarking *spawn

- Thread starter Phantom88


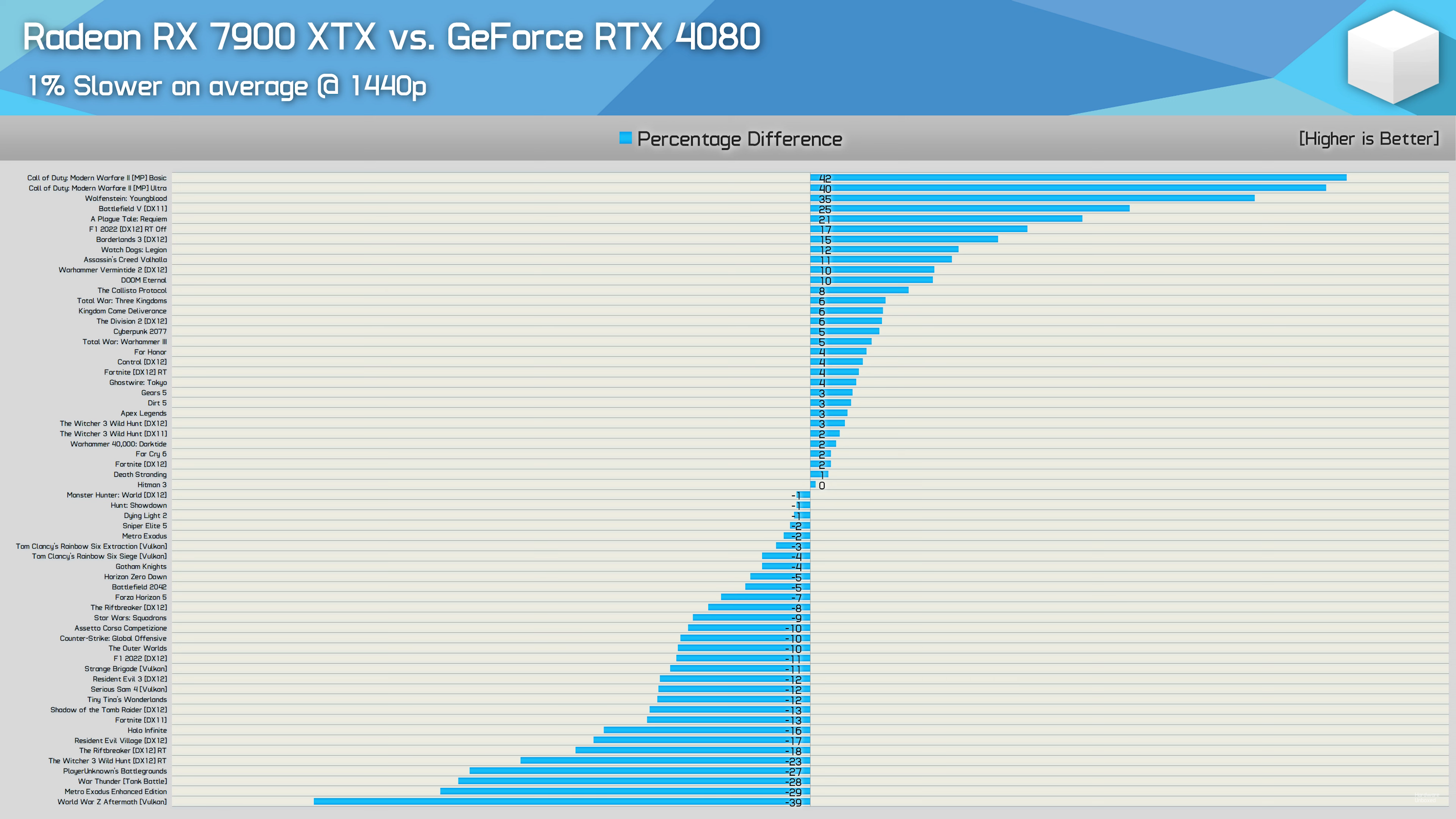

It's crazy that it can be 40% faster or slower depending on the game.

Although including Modern Warfare in there twice at different settings blatantly skews the average in a nonsensical way.

EDIT: also, why would you include Cyberpunk without RT and then class it as a win for the XTX? That's not how most people with this class of GPU would play the game, especially at 1440p.


Jawed

Legend

Think about it for a second: "it's crazy that the RTX 4080 can be 40% faster or slower depending on the game".

There are more games with multiple settings, so I don't think they are trying to skew the average.

Cyberpunk's non-RT settings above "high" or even "medium" offer awful bang-for-the-buck visuals.


> There are more games with multiple settings, so I don't think they are trying to skew the average.

Yes, but as far as I can see, they are all RT on vs. off (with Cyberpunk RT noticeably absent) or API differences, i.e. things that could genuinely change the state of play between the two architectures. That is not the same as a simple (non-RT) settings change in a title that is already a performance outlier, which is never going to change the state of play. They're effectively just counting the XTX's biggest win twice.
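The disagreement over counting one title twice comes down to how much a single duplicated entry moves a multi-game average. Below is a minimal Python sketch, assuming the summary is a geometric mean of per-game XTX/4080 fps ratios (a common choice for such summaries; the thread doesn't say which average HUB uses). The 49-game list and the 1.40 outlier are made-up numbers for illustration, not HUB's data, and `geomean` is a hypothetical helper.

```python
import math

def geomean(ratios):
    """Geometric mean of positive per-game performance ratios."""
    return math.exp(sum(math.log(r) for r in ratios) / len(ratios))

# Hypothetical per-game ratios (XTX fps / 4080 fps); > 1.0 means the XTX wins.
# Illustrative only: 48 ties plus one 40% outlier win, NOT real benchmark data.
counted_once = [1.00] * 48 + [1.40]
# The same outlier entered a second time, e.g. at a different settings preset.
counted_twice = counted_once + [1.40]

print(f"outlier counted once:  {geomean(counted_once):.4f}")
print(f"outlier counted twice: {geomean(counted_twice):.4f}")
```

With these made-up inputs the summary moves from roughly +0.7% to roughly +1.4% in the XTX's favor. The absolute shift is small, but the duplicated outlier's pull on the average roughly doubles.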

DavidGraham

Veteran

That's Hardware Unboxed for you: no testing standards whatsoever. They change them on a regular basis, on a whim.

> Not really. It does better in broken DX12 games like Modern Warfare 2. Performance difference isn't hardware-related anymore with these GPUs outside of ray tracing.

Are Battlefield, Plague Tale and Wolfenstein also broken DX12 games?

It's funny. When NVIDIA isn't performing as well as expected, it's a broken game; when AMD isn't performing as well as expected, it's AMD's fault.

Other than AMD cards running it better than average, how exactly is CoD DX12 "broken"?

What a ridiculous and hyperbolic statement.

This claim has been made multiple times by the Nvidia crowd, both here and on Twitter, each time with no proof or substance. HUB literally goes through its test setups and procedures in each of its videos and articles.

HUB's tests are also cross-checked with LTT and GN, and the three channels regularly communicate and coordinate test procedures with one another. Are you also claiming that GN and LTT have "no testing standards whatsoever" too?

> Maybe because the 320W 4080 is using less than 200W at 1440p with the balanced setting:
>
> Makes this 4080 2.45x more efficient than a 3080 12GB...

Possibly it is in this workload. RDNA 3 in its current iteration is a disaster.
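The "2.45x more efficient" figure is a performance-per-watt comparison. Below is a minimal Python sketch of that arithmetic, assuming efficiency means average fps divided by measured average board power. The function names and the input numbers are hypothetical; the thread doesn't include the underlying fps figures.

```python
def frames_per_watt(fps, watts):
    """Average frame rate divided by measured average board power."""
    return fps / watts

def efficiency_ratio(fps_a, watts_a, fps_b, watts_b):
    """How many times more frames per watt card A delivers than card B."""
    return frames_per_watt(fps_a, watts_a) / frames_per_watt(fps_b, watts_b)

# Hypothetical inputs, NOT measurements from the thread:
# card A at 100 fps and 200 W versus card B at 50 fps and 250 W.
ratio = efficiency_ratio(100, 200, 50, 250)  # 0.5 fps/W vs 0.2 fps/W
print(f"card A delivers {ratio:.2f}x the frames per watt of card B")
```

Measured draw matters here rather than rated power: the quoted post's point is that a 4080 rated at 320W drew under 200W in that test.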

DavidGraham

Veteran

LTT? Plz.. that clown doesn't know how to test shit if his life depended on it. That ship sailed a long time ago; their benchmarks are full of mistakes and contradictions that the community corrects on a regular basis.

GN is above all of this, of course, but unfortunately they test so few games to begin with. Their methodology is to sample enough games to establish a trend, and then they stop testing.

The proof is here: they tested COD twice (once at low settings and once at Ultra) and added both results to the averages, despite COD offering neither ray tracing nor an alternative API. How is that valid testing?

You do realize it's hardly affecting the net result of the summary, right?


DavidGraham

Veteran

It's not about that; it's about establishing a standard and sticking with it, not winging it on a whim. They tested COD twice, but tested Cyberpunk only once, without RT, despite the game offering RT. They did the same with Battlefield V, Control, Resident Evil Village, Ghostwire, Watch Dogs Legion, Dying Light 2, Hitman 3, etc.: each was tested once, with no RT. Fortnite and Witcher 3 were tested 3 times, once for each API! Yet The Division, Battlefield V and Borderlands 3 were each tested with only one API despite having two! Where is the logic?
