The pump may have failed, not sure how you test that in an aio (easy in a custom loop). How old is it?

I want to say 2017 or 2018. I’m using a 5800X3D, which isn’t super demanding.



Interestingly the .exe shows 5.6.

What processor are you running? Could it be Intel 13/14 gen instability?


Thinking of games that eventually got to that level, I think we really only have something like Metro Exodus. Can anyone think of other games that used the tessellation stage that heavily?

They normally have a warranty of between 5 and 10 years, so it might still be within the time frame. The pump is a serious thing to look at. It's a pity those CPUs didn't come with a standard cooler you could try. A quick Google search seems to say you can listen for the pump and squeeze a tube to restrict flow so that it makes a bit more noise. The more reliable way seems to be to feel the tubes near the radiator end while the CPU is heating up: if they aren't warm to the touch, the water isn't circulating.

It seems to need some good cooling, so a cheap air cooler doesn't look like it will cut it as a quick and cheap fix. Looks like you need something like a Noctua D15, which (in my country anyway) is not much cheaper than an AIO.

Metro 2033 and Metro Last Light also used it heavily. There was also Crysis 2, Dragon Age 2, Batman Arkham City and Hitman Absolution. Several other games use it extensively to render water waves, such as Forza Horizon 3.

I saw a vid about Nanite tessellation that showed one of the drawbacks is that you lose the dynamic cluster LOD. So you save space on disk and in memory by reducing your models, but the tessellated model can probably reduce real-time performance. I’d be curious to see how many places Fortnite uses full high-poly meshes vs using Nanite tessellation.

Yes, Nanite tessellation will generally be slower than the equivalent pre-tessellated mesh, as one should really expect it to be. With a pre-tessellated/displaced mesh Nanite can work out more optimal simplifications because it can consider the final mesh shape and put triangles where they matter the most. With late/dynamic displacement there are fewer possibilities for optimization, similar to the negatives of things like vertex shader animation/world position offset.


How long before hardware tessellation is emulated? Seems like a dead end tech.

What do you mean by "emulated"? If you mean ditching the fixed function GPU HW for tessellation, RDNA's Next Generation Geometry pipeline already did that. Hull shaders are compiled to surface shaders; domain and geometry shaders are compiled to primitive shaders.

Hull and domain shaders were never fixed function. They always ran on the compute cores. I’m referring to the fixed function tessellation step.

Wasn't it only AMD doing a fixed-block tessellation unit (Tessellator), while NVIDIA did it in their PolyMorph Engine (inside each SM)?

The hull shader gets the source geometry, and does some calculations to decide how many new triangles to add (the magical tessellation factors). The tessellator then adds the extra triangles, and the domain shader can do some final calculations to position the new triangles correctly. The bottleneck in AMD’s approach is that it is implemented as a conventional pipeline. Where you’d normally pass a single triangle through the entire pipeline, you now get an ‘explosion’ of triangles at the tessellation stage. All these extra triangles need to be handled by the same pipeline that was only designed to handle single triangles. As a result, the rasterizer and pixel shaders get bottlenecked: they can only handle a single triangle at a time. This problem was already apparent in Direct3D 10, where the geometry shader could do some very basic tessellation as well, adding extra triangles on-the-fly. This was rarely used in practice, because it was often slower than just feeding a more detailed mesh through the entire pipeline.
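To put rough numbers on the 'explosion' described above, here is a minimal Python sketch of the three steps. It is only an illustration under simplifying assumptions: the names `subdivide_triangle`, `tessellate` and `domain_shader` are made up for this example, a single uniform factor stands in for the hull shader's per-edge and inside factors, and the uniform grid used here is not the subdivision pattern real tessellator hardware produces.

```python
def subdivide_triangle(a, b, c, n):
    """Split triangle (a, b, c) into n * n smaller triangles on a uniform grid.

    Stand-in for the fixed-function tessellator: n plays the role of a
    single uniform tessellation factor (an assumption for this sketch).
    """
    def point(i, j):
        # Grid point i steps toward b and j steps toward c, out of n.
        u, v = i / n, j / n
        w = 1.0 - u - v
        return tuple(w * pa + u * pb + v * pc for pa, pb, pc in zip(a, b, c))

    triangles = []
    for i in range(n):
        for j in range(n - i):
            # Triangle pointing the same way as the original patch.
            triangles.append((point(i, j), point(i + 1, j), point(i, j + 1)))
            # Flipped triangle filling the gap; absent in the last cell of a row.
            if j < n - i - 1:
                triangles.append(
                    (point(i + 1, j), point(i + 1, j + 1), point(i, j + 1))
                )
    return triangles


def tessellate(patch, factor, domain_shader=lambda p: p):
    """Subdivide one patch, then let a 'domain shader' reposition each vertex."""
    return [
        tuple(domain_shader(vertex) for vertex in tri)
        for tri in subdivide_triangle(*patch, factor)
    ]


patch = ((0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0))
for factor in (1, 4, 16, 64):
    print(factor, len(tessellate(patch, factor)))
```

In this model the triangle count grows with the square of the factor, so one input triangle at a factor of 64 becomes 4096 triangles that the rasterizer and pixel shaders behind the tessellator then have to absorb.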

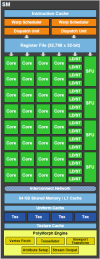

nVidia decided to tackle this problem head-on: their tessellator is not just a single unit that tries to stuff all the triangles through a single pipeline. Instead, nVidia has added 16 geometry engines. There is now extra hardware to handle the ‘explosion’ of triangles that happens through tessellation, so that the remaining stages will not get bottlenecked. There are extra rasterizers to set up the triangles, and feed the pixel shaders efficiently.

With AMD it is very clear just how much they are bottlenecked: the tessellator is the same on many of their cards. A Radeon 5770 will perform roughly the same as a 5870 under high tessellation workloads. The Radeon 5870 may have a lot more shader units than the 5770, but the bottlenecking that occurs at the tessellator means that they cannot be fed. So the irony is that things work exactly the opposite of what people think: AMD is the one whose shaders get bottlenecked at high tessellation settings. nVidia’s hardware scales so well with tessellation because they have the extra hardware that allows them to *use* their shaders efficiently, i.e. NOT bottlenecked.
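The scaling argument above can be sketched as a toy throughput model in Python. Everything here is an assumption for illustration: the function `triangles_per_clock`, the unit counts and the per-unit rates are made up and are not measurements of any real card. The only idea carried over from the post is that a pipeline runs at the speed of its slowest stage.

```python
def triangles_per_clock(geometry_units, tris_per_unit, shader_units, tris_per_shader):
    """Toy model: overall throughput is capped by the slowest pipeline stage."""
    geometry_rate = geometry_units * tris_per_unit  # tessellator/setup side
    shader_rate = shader_units * tris_per_shader    # shading side
    return min(geometry_rate, shader_rate)


# Hypothetical "small" and "large" cards sharing one fixed tessellator:
# doubling the shader units changes nothing, the tessellator is the cap.
small_single = triangles_per_clock(1, 1.0, 800, 0.01)
large_single = triangles_per_clock(1, 1.0, 1600, 0.01)

# Same hypothetical large card with geometry hardware replicated 8 times:
# the cap moves up with the number of geometry units.
large_distributed = triangles_per_clock(8, 1.0, 1600, 0.01)

print(small_single, large_single, large_distributed)
```

With these made-up rates the two single-tessellator cards come out identical despite the difference in shader count, while the distributed variant is limited by a much higher ceiling.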

I thought Nvidia used FF hardware as well, they just distribute it better by having it integrated at the SM level.

Yes, it is integrated with the SMs, so more SMs = greater tessellation performance.

Might also be why they introduced the option to cap tessellation levels in the driver.

Which, tmk, is still on by default? Making benchmarks not 1:1 maybe?

I honestly do not know. All I remember from that time is that... (was Huddy his name?) went from something like "A beast called tessellation" to "TOO much tessellation", and then there was the false info on forums about Crysis and tessellation that did not factor in Z-culling.


Nvidia scaled up geometry processing by distributing work across TPCs. However the tessellator in each TPC is fixed function. Hull and domain shaders run on the CUDA cores like any other compute workload.

I know, their solution was more elegant though, as your tessellation performance scaled with the number of SMs, meaning the tessellation performance wasn't bottlenecked the same way as AMD's was/is.

If it was programmable Nvidia would’ve been shouting that from the rooftops since Fermi and would’ve done some proprietary tricks with it since then.