Why?

DDR 4 3200 32GB ($125) https://www.amazon.com/Crucial-Ballistix-Desktop-Gaming-BL2K16G32C16U4B/dp/B083TSLDF2/ref=sr_1_9?crid=1I2N9U2AR7S0O&dchild=1&keywords=ddr4+3200+32gb&qid=1609880332&sprefix=ddr+4+3200,aps,164&sr=8-9

DDR 4 3200 64gigs ($200) https://www.amazon.com/TEAMGROUP-3200MHz-PC4-25600-Unbuffered-Computer/dp/B086X24BZY/ref=sr_1_9?crid=RZ7Z81LRP6ZX&dchild=1&keywords=ddr4+3200+64gb&qid=1609880397&sprefix=ddr4+3200+64,aps,156&sr=8-9

Gen 4 nvme drives are what

500GB $110 https://www.amazon.com/Corsair-Force-Gen-4-MP600-500GB/dp/B07WS1BRX4/ref=sr_1_3?crid=ZDTRVDXCA0BU&dchild=1&keywords=pci+gen+4+m.2+nvme+ssd&qid=1609880485&sprefix=pci-4+nvme,aps,170&sr=8-3

You can change from the 500GB to the 2TB on that link and it's $379. The 1TB is out of stock, so I can't tell you the price.

I mean, I can add 32GB of RAM vs just 500GB of storage. If I already have a SATA SSD or even a slower NVMe drive, and Unreal will make use of the extra RAM too, wouldn't it be better to go with the extra RAM?

The 1TB is around 150 to 180 dollars, depending on the price of NAND or any discount.

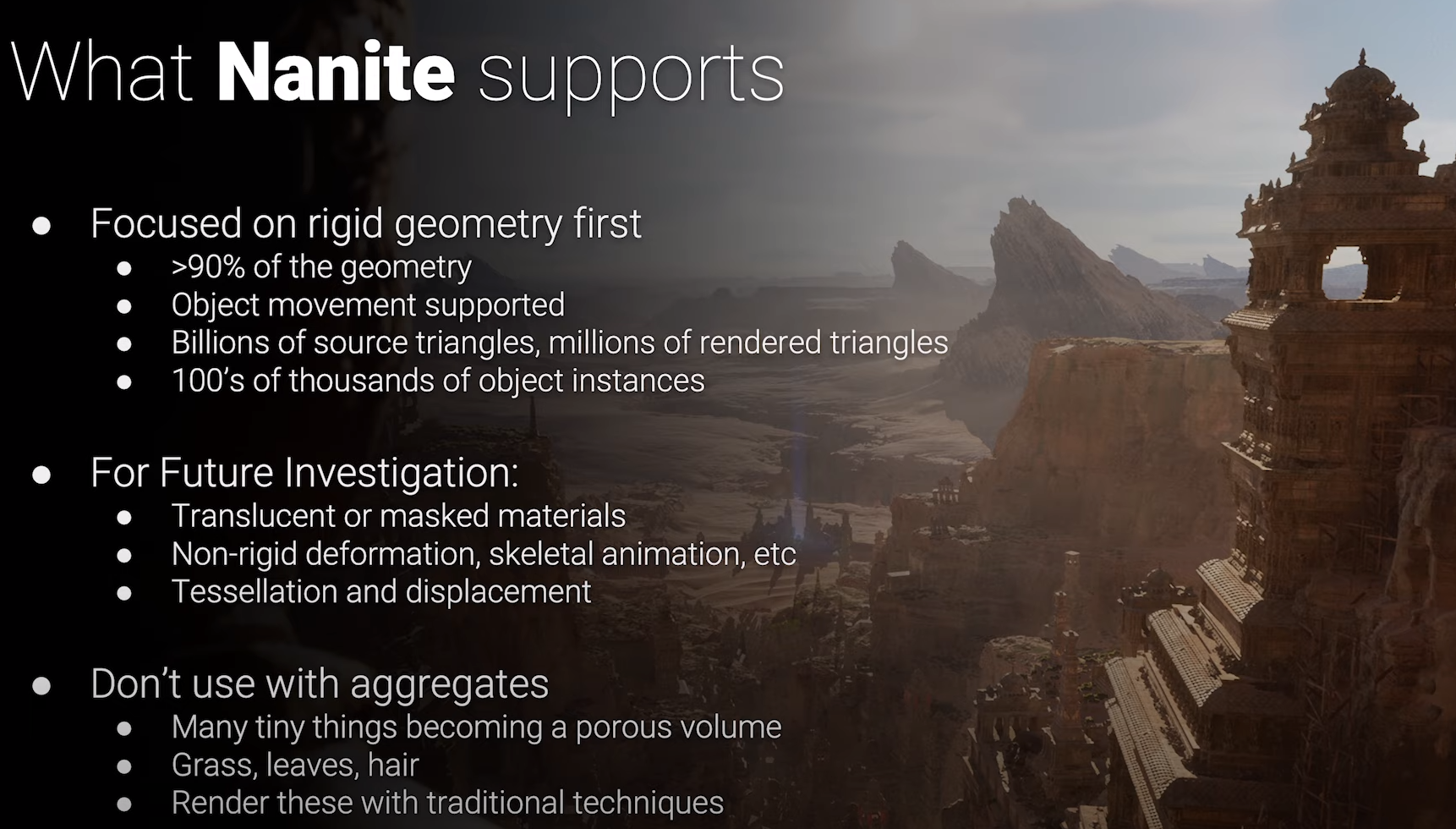

The data is streamed based on the current view, and this is virtualized geometry. Loading data in advance is exactly what you want to avoid. Without fast storage you end up with mip 1 or 2 of the UE5 geometry because you can't load LOD 0 fast enough, which is a shame if the GPU is able to render the mip 0 LOD. Loading in advance means loading the full model, because you don't know what you will render on screen. And DirectStorage is there to avoid having to copy the data between RAM and VRAM.

They talked about mip levels of geometry. It means you will have fewer polygons if your storage is not fast enough to load the data. In the demo the streaming pool is only 768 MB because of the fast storage, but if you begin to load full models, VRAM may become a problem.

The level of detail scales with the storage speed.
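To put rough numbers on that, here is a back-of-the-envelope sketch in Python. The read speeds and the 30 fps target are my own assumed round figures, not benchmarks; only the 768 MB pool size comes from the demo. It just divides throughput by frame rate to get a per-frame streaming budget, and divides the pool size by throughput to see how long a full refill would take.

```python
# Rough streaming budget per drive type.
# ASSUMPTION: these sequential read speeds are round illustrative numbers,
# not measured values for any specific drive.
ASSUMED_READ_MB_PER_S = {
    "SATA SSD": 550,
    "PCIe 3.0 NVMe": 3500,
    "PCIe 4.0 NVMe": 5000,
}

STREAMING_POOL_MB = 768  # pool size quoted for the demo
TARGET_FPS = 30          # ASSUMPTION: frame rate used for the per-frame budget


def per_frame_budget_mb(read_mb_per_s, fps):
    """Maximum data (MB) the drive can deliver during one frame."""
    return read_mb_per_s / fps


def pool_refill_seconds(read_mb_per_s, pool_mb):
    """Time (s) to replace the whole streaming pool from storage."""
    return pool_mb / read_mb_per_s


if __name__ == "__main__":
    for name, speed in ASSUMED_READ_MB_PER_S.items():
        budget = per_frame_budget_mb(speed, TARGET_FPS)
        refill = pool_refill_seconds(speed, STREAMING_POOL_MB)
        print(f"{name}: {budget:.1f} MB per frame, full pool refill in {refill:.2f} s")
```

Real streaming is not purely sequential and includes decompression, so treat these as upper bounds rather than what the engine would actually achieve.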

EDIT:

https://www.amazon.com/Sabrent-Inte...+Nvme+PCIe+4.0+M.2+2280&qid=1609881697&sr=8-1

This one is 169 dollars for a 1TB PCIe 4.0 drive.
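For anyone who wants the prices in this thread side by side, a small Python sketch that works out dollars per GB. The prices and capacities are the ones quoted above (they will have changed since); the labels and the `usd_per_gb` helper are just names I made up for the example, and 1TB is treated as 1000GB.

```python
# (label, price in USD, capacity in GB) as quoted in this thread.
# Labels are illustrative; prices are from the posts above, not current.
ITEMS = [
    ("DDR4-3200 32GB RAM", 125, 32),
    ("DDR4-3200 64GB RAM", 200, 64),
    ("Gen 4 NVMe 500GB", 110, 500),
    ("Gen 4 NVMe 1TB", 169, 1000),
    ("Gen 4 NVMe 2TB", 379, 2000),
]


def usd_per_gb(price_usd, capacity_gb):
    """Price divided by capacity, in USD per GB."""
    return price_usd / capacity_gb


if __name__ == "__main__":
    for label, price, capacity in ITEMS:
        print(f"{label}: ${usd_per_gb(price, capacity):.2f} per GB")
```

RAM and storage are not interchangeable, so this only shows what each extra GB costs, not which upgrade helps more.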