Install the app

How to install the app on iOS

Follow along with the video below to see how to install our site as a web app on your home screen.

Note: This feature may not be available in some browsers.

You are using an out of date browser. It may not display this or other websites correctly.

You should upgrade or use an alternative browser.

You should upgrade or use an alternative browser.

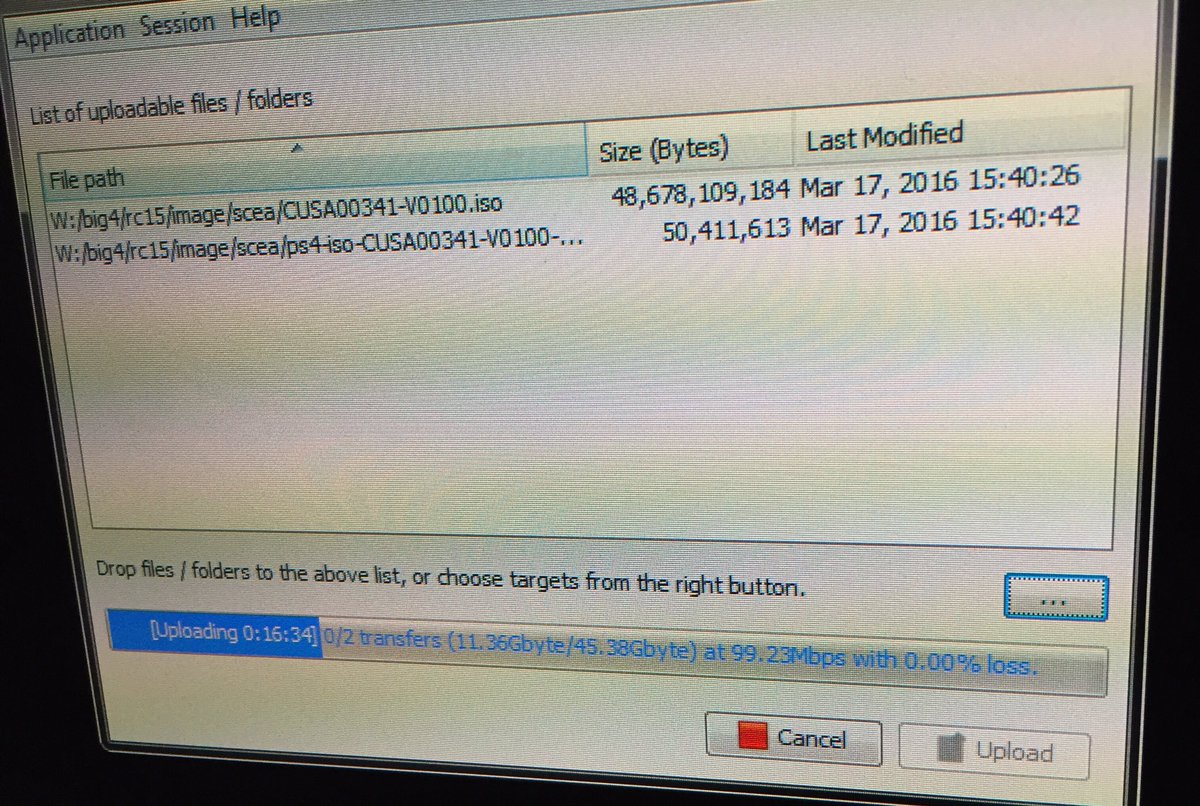

Uncharted 4: A Thief's End [PS4]

- Thread starter Strange

Digic == bastards! Always ruining everyone's expectations.

The Heads or Tails trailer was Digic again. Sorry to rain on your parade here, and the game still looks fantastic anyway.

Amazing work as usual

Have to ask though, is my hunch correct that it's based on in-game assets, or is it just this asset specifically?

Also, you guys must feel proud about these Uncharted trailers, everyone seems to be praising them to high heaven on social media (the trailers specifically, not the game) :smile:

Can't really talk much about any details, sorry about that. With that said, some things written here may be correct and others may be wrong...

And thanks, it was a great honor to work on another UC4 trailer and we're really glad if the fans like it

Only SP? Maybe unique assets are to blame, the city looked very dense in the E3 demo for example.

The game is huge and has many assets. Don't forget UC4 is the proof of concept for game asset creation with Substance Designer (a major part of the textures was done with the tool). The textures are stored on the disc as procedural textures and converted into normal textures afterwards. At runtime? Or during installation of the game? 80% of the textures in the E3 demo use Substance Designer.

All cutscenes are real-time, no video.

Er, I don't think that's how the Substance pipeline works, even at ND.

For a start, the software is built around bitmaps and not procedurals; either hand painted, extracted from the high res source model (things like normals, cavity, AO, direction, edge detection etc) or based on photographs.

Edit: thinking about it a bit more, there may be some default fractal / Perlin noise functions in the package as well, but they're probably only used to add variation to the masks generated from the high-res model. And yeah, maybe you can call the cavity and edge maps procedural as well, after all they're generated from the normals and maybe height maps.

They might do some of the complex texture map combinations in real time, although it would probably still be better (i.e. faster) to just bake out everything into bitmaps. But Substance is AFAIK a content creation tool and not a runtime middleware.

By the way, there was a GDC presentation from last year about the Halo 2 Anniversary pipeline that was already talking about a lot of the possibilities and workflows possible in Substance, combining it with ZBrush etc. Not to take away any credit from Naughty Dog, especially because they seem to have had a LOT of input on how to develop the software, but this approach to asset creation isn't such big news.

Also, I think Ready at Dawn had a pretty similar pipeline for The Order, but without using Substance, developing a completely new in-house tool instead.
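To make the point above about cavity maps being "generated from the normals and maybe height maps" a bit more concrete, here is a minimal sketch of deriving a cavity mask from a height map. It is only an illustration under simple assumptions (a 4-neighbour average, clamped borders); the function name `cavity_from_height` is hypothetical and this is not how Substance or Naughty Dog's tools actually compute it.

```python
def cavity_from_height(height):
    """Return a cavity mask in [0, 1] from a 2D height map (list of rows)."""
    rows, cols = len(height), len(height[0])

    def sample(r, c):
        # Clamp coordinates so border pixels reuse their nearest neighbour.
        r = min(max(r, 0), rows - 1)
        c = min(max(c, 0), cols - 1)
        return height[r][c]

    cavity = []
    for r in range(rows):
        row = []
        for c in range(cols):
            neighbours = (sample(r - 1, c) + sample(r + 1, c)
                          + sample(r, c - 1) + sample(r, c + 1)) / 4.0
            # Positive when the pixel sits below its surroundings (a crevice).
            depth = neighbours - height[r][c]
            row.append(min(max(depth, 0.0), 1.0))
        cavity.append(row)
    return cavity


# A flat 3x3 plate with a single pit in the middle.
height_map = [
    [1.0, 1.0, 1.0],
    [1.0, 0.0, 1.0],
    [1.0, 1.0, 1.0],
]
cavity_map = cavity_from_height(height_map)
```

Only the pit ends up with a non-zero cavity value here. A mask like this is derived entirely from other maps, which is the sense in which it could be called procedural.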

The guys at Allegorithmic said it worked both ways during development of U4: it shaped the game but also the software tool, so I'm guessing they worked closely with ND for U4.

There was a GDC presentation about it, a summary can be found here: http://www.dualshockers.com/2016/03...more-naughty-dog-talks-texture-design-at-gdc/

The relationship with Allegorithmic worked both ways. Allegorithmic helped Naughty Dog define its internal pipeline, and Naughty Dog helped define Substance Designer 3 and 4.

I never said they are the only ones to use it, but from what I understand of the presentation, versions 3 and 4 were mostly done following Naughty Dog's advice, and they are by far the biggest user of the tools in the industry. I think I made an error about texture generation. It is only useful during content creation and when building the project, and it helps save space during a project.

https://forums.unrealengine.com/sho...e-a-lot-of-textures-to-stay-low-on-build-size

Chris, we have a Substance pipeline here at the studio, so even though I'm not responsible for texturing, I still kinda know stuff about it

Also, while it may save run time memory to use just the source maps and combine them at rendering, there are two issues with that:

- It has to blend together a LOT of layers for the results and that is very, very slow. The dualshockers article has some images of all the nodes going into an average final texture, there are dozens of them.

- It means that you can't really take the final result and add some hand painted final polish to it.
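The trade-off in the two points above can be sketched roughly as follows. This is a toy illustration, not anyone's actual pipeline: the layer format (a greyscale value plus a per-texel mask) and the names `blend_layers` and `bake` are assumptions made up for the example.

```python
def blend_layers(layers, u, v):
    """Composite every layer at texel (u, v); cost grows with len(layers)."""
    value = 0.0
    for colour, mask in layers:
        weight = mask[v][u]
        # Standard linear interpolation ("lerp") towards the layer colour.
        value = value * (1.0 - weight) + colour * weight
    return value


def bake(layers, width, height):
    """Run the full layer stack once and store the result as a plain bitmap."""
    return [[blend_layers(layers, u, v) for u in range(width)]
            for v in range(height)]


# Two hypothetical greyscale layers on a 2x2 texture: a base coat and dirt.
full = [[1.0, 1.0], [1.0, 1.0]]
dirt_mask = [[0.0, 0.5], [1.0, 0.0]]
layers = [(0.8, full), (0.2, dirt_mask)]

baked = bake(layers, 2, 2)

# At render time the baked path is a single lookup per texel ...
baked_sample = baked[0][1]
# ... while the unbaked path repeats the whole stack for every sample.
runtime_sample = blend_layers(layers, 1, 0)
```

Both paths give the same value, but the unbaked one pays for every layer on every sample, and with dozens of nodes that adds up quickly. The baked result is also just an ordinary bitmap, so it can still be touched up by hand afterwards.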

It's still a very good and innovative tool and it helps a lot in content creation.

And yeah, of course it's going to be even better when we get to a point in interactive graphics where it will be possible to procedurally accumulate dirt on characters and environment elements, or tear off pieces of clothing, and so on

But for now, that processing power can be better spent elsewhere most of the time. And hey, you can still get that jeep muddy in UC4

I hope to have fiber at home by the time that is the case.

This doesn't guarantee you will be able to download fast enough from PSN

My friend has a PS4 and fiber, and his downloads are faster than on DSL in France. I am on VDSL and my downloads are pretty good.

100mbit here, but I get like 4Mb/s at best with PSN. Sometimes it can be less than 1Mb/s. So it's kinda down to luck.
For the past 6 months, PSN download speeds have been much better on my side. Before that they were so slow. If the download is less than 10 GB, I launch it from my smartphone at work, and most of the time it is done when I get home.
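For a rough feel of the numbers quoted in this exchange, here is a small back-of-the-envelope sketch. It assumes decimal units (1 GB = 1000 MB) and, since it is not clear whether "4Mb/s" meant megabits or megabytes per second, it computes both readings. The helper `download_hours` is made up for the example.

```python
def download_hours(size_gb, rate, rate_in_bits):
    """Estimate download time in hours for a size in GB and a rate per second."""
    size_megabytes = size_gb * 1000  # decimal units: 1 GB = 1000 MB
    # Convert megabits per second to megabytes per second when needed.
    megabytes_per_second = rate / 8 if rate_in_bits else rate
    return size_megabytes / megabytes_per_second / 3600


# A 10 GB download at "4Mb/s", read as megabytes and then as megabits.
hours_if_megabytes = download_hours(10, 4, rate_in_bits=False)
hours_if_megabits = download_hours(10, 4, rate_in_bits=True)

# The same download if a 100 Mbit line were fully used.
hours_at_line_rate = download_hours(10, 100, rate_in_bits=True)
```

Under these assumptions the download takes well under an hour at 4 megabytes per second and several hours at 4 megabits per second, so either way a download started from work would usually be finished by the evening.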
