throw your ndas away

- Thread starter muted

My blind guess: under extreme bandwidth situations (Doom3 with FSAA), Parhelia will eat any 128-bit GPU by at least 50%. Don't bash me... but am I the only one who thinks this is not enough?

I have the feeling R300 and NV30 will eat Parhelia alive, without being as expensive, IMHO. (No proof, just a feeling.)

Ascended Saiyan

Newcomer

If I may intervene here, but where did the 40-bit color part come from?

pascal said: Yeah, very exciting time. Mfa:

WOW Pascal, just gonna think out loud a bit ...

- Fragment FSAA

- Displacement mapping

- Lots of bandwidth

- High-quality 3D (40 bits, better accuracy, etc.)

- Lots of vertex and pixel shaders

- The price is not absurd (128MB $400)

- Very nice frequency response filter

- 10Bit DAC (including HDTV)

- on sale soon

Really powerful. I don't care about DX9 for now.

Kudos to Matrox.

Ascended Saiyan said: If I may intervene here, but where did the 40-bit color part come from?

From here:

The contents of frame buffers in floating-point format cannot be output directly to the monitor through the RAMDAC or the digital interface; they are used only for internal purposes. To increase the precision of the integer data that ends up on the screen, a new 10:10:10:2 (RGBA) format is introduced, in which each color component is stored with 10 bits of precision. That precision more than covers the capabilities of contemporary displays and substantially enlarges the dynamic range and, therefore, the quality of the resulting image.

Translation:

... Surprisingly, P512 is not equipped with any of the bandwidth-saving techniques. This is astounding - while such techniques are present in all of the latest ATi and Nvidia products, it would seem that Matrox decided to speed up/simplify the P512 development...

Ascended Saiyan

Newcomer

Pascal, from your quote it would seem to be only 32-bit color, but this time it's quite different: 10 bits each for R, G & B, with only a 2-bit alpha remaining.
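To make the arithmetic concrete (10 + 10 + 10 + 2 = 32 bits), here is a minimal sketch of packing a 10:10:10:2 pixel into one 32-bit word. The function names and the bit order (alpha in the top two bits, then R, G, B) are assumptions for illustration only; the quoted article does not say how Parhelia lays the channels out.

```python
def pack_rgb10_a2(r, g, b, a):
    """Pack 10-bit R, G, B and a 2-bit alpha into a single 32-bit integer.

    Bit layout is an assumption: [31:30] A, [29:20] R, [19:10] G, [9:0] B.
    """
    for name, value, bits in (("r", r, 10), ("g", g, 10), ("b", b, 10), ("a", a, 2)):
        if not 0 <= value < (1 << bits):
            raise ValueError(f"{name}={value} does not fit in {bits} bits")
    return (a << 30) | (r << 20) | (g << 10) | b


def unpack_rgb10_a2(word):
    """Inverse of pack_rgb10_a2: return (r, g, b, a)."""
    return (
        (word >> 20) & 0x3FF,  # 10-bit red
        (word >> 10) & 0x3FF,  # 10-bit green
        word & 0x3FF,          # 10-bit blue
        (word >> 30) & 0x3,    # 2-bit alpha
    )


# Full white with full alpha fills all 32 bits.
print(hex(pack_rgb10_a2(1023, 1023, 1023, 3)))
```

Each color channel gets 1024 levels instead of the 256 of an 8:8:8:8 format, while alpha drops to just 4 levels, which is the trade-off being pointed out above.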

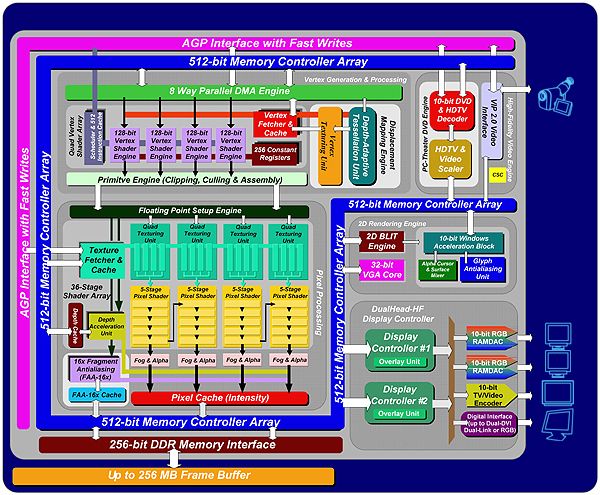

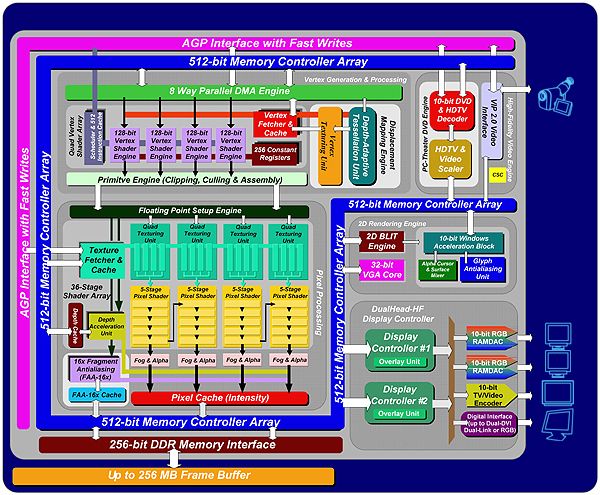

Geeforcer, that quote might be incorrect, because if you look at the diagram there seems to be some form of culling.

That's sitting in the Geometry/Vertex section of the block diagram, so it's probably just the normal back-face culling that anything with a T&L engine will do. Both Radeon's and GF3/4's overdraw removal schemes act on the Z-buffer, and there's no mention of anything like that in there.
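For anyone unsure why back-face culling belongs in the geometry stage rather than with the Z-buffer tricks, here is a minimal sketch of the usual test. It only needs a triangle's projected vertices, not any depth data. The function names and the convention that counter-clockwise winding means front-facing (with y pointing up) are assumptions for illustration; nothing here is taken from Parhelia's actual hardware.

```python
def signed_area_2d(v0, v1, v2):
    """Signed area of a screen-space triangle; positive for counter-clockwise winding."""
    return 0.5 * (
        (v1[0] - v0[0]) * (v2[1] - v0[1])
        - (v2[0] - v0[0]) * (v1[1] - v0[1])
    )


def is_back_facing(v0, v1, v2):
    """Treat clockwise (or degenerate) triangles as back-facing. Winding rule is an assumption."""
    return signed_area_2d(v0, v1, v2) <= 0.0


front = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0)]  # counter-clockwise
back = [(0.0, 0.0), (0.0, 1.0), (1.0, 0.0)]   # same triangle, clockwise

print(is_back_facing(*front), is_back_facing(*back))
```

This throws away whole triangles before rasterization, but it does nothing about front-facing triangles that end up hidden behind others, which is the overdraw the Z-buffer-based schemes target.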

Just a question: how playable is it with that three-screen-wide setup? With that kind of setup, do they limit the resolution and the amount of AA that is allowed? What about using three widescreen displays instead, would that be possible?

Can't wait for an F1 game to support this, awesome.

Another question, does this thing require an external power supply ?

Ascended Saiyan said: Geeforcer, that quote might be incorrect, because if you look at the diagram there seems to be some form of culling.

That's what the article says (I've translated it myself). Whether iXBT's info is correct, I do not know.

Geeforcer said: One thing that I can't figure out is why does it have 4 TMUs per pipe? What's the use for that? In addition, the lack of any bandwidth-saving techniques is strange as well.

... it's also a little strange that, despite the talk of using three displays, the chip only seems to have two display controllers. That suggests that the support for three displays will be rather limited... granted, to do all of the "surround gaming" examples shown so far, you only need to render a triple-wide image, and spread that single image over three output devices, which wouldn't take particularly elaborate display controller hardware to accomplish. Rendering a unique viewpoint for each of those displays would be a much more demanding task...
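The "render one triple-wide image and spread it over three outputs" idea can be sketched like this. The function name and the 1024x768 per-display resolution are hypothetical, chosen only to show the arithmetic; no resolution limits have been stated for the real card.

```python
def surround_layout(display_width, display_height, displays=3):
    """Return the size of the single wide frame and the column range each display shows."""
    frame_width = display_width * displays
    # Each display scans out one contiguous block of columns from the same frame.
    slices = [
        (i * display_width, (i + 1) * display_width)
        for i in range(displays)
    ]
    return (frame_width, display_height), slices


# Hypothetical example: three 1024x768 monitors side by side.
frame_size, slices = surround_layout(1024, 768)
print(frame_size)  # (3072, 768)
print(slices)      # [(0, 1024), (1024, 2048), (2048, 3072)]
```

The renderer only ever sees one camera and one 3072x768 target, so the output side just has to read three column ranges. A unique viewpoint per display would instead mean three separate render passes.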

4 TMUs per pipe doesn't seem particularly strange to me, since more simultaneous textures is a logical progression. What would be strange is if they have no mechanism to support more than four texture sources per rendering pass.... would this pipeline uniting thing allow 8 (or even 16) textures per clock?

Joe DeFuria

Legend

Actually, I think I read that the third display is driven by an external RAMDAC. So it would seem to me that perhaps not all Parhelia variants will have "triple head". Some models might not be equipped with the third, external RAMDAC, and therefore only be dual head.

MfA said: They'll likely have a DVI output for the third signal though, so you can add your own external RAMDAC retroactively.

While that's not an uncommon approach to adding additional analog outputs, an additional output without its own display controller isn't much fun. From iXBT's writeup, Parhelia would seem to still be a DualHead design, despite supporting configurations that exploit three displays. Earlier hardware could do something similar, such as when TV-output is used simultaneously with two analog or digital displays, with the catch being that the third output is simply mirroring some part of one of the other two displays.

Ugh, you are right, I had not looked at the diagram... isn't this way of designing the hardware a bit silly in this day and age? You would think that as long as you are going to dedicate the pins for a given output, you might as well dedicate the minute corner of the die needed for that output to individually access memory... the controllers have shrunk tremendously in relation to the rest of the chip; you would think they are hardly significant anymore.

MfA said: If they can ship that within a couple of months I'll have to admit it will be far more than I ever expected.

Hell, who would think Matrox would be the very first to implement a smart anti-aliasing method!!! (since the Warp5 anyway, but that doesn't count) My prayers answered by the least likely source; I have not been so pleasantly surprised since 3dfx bought Gigapixel...

!

Not very often we see such positive statements from MfA.

1. WOW

2. 10 bits? I'm sure I recall the card which none dare speak its name was 12 (and had overbright ;-) )

3. What's the "depth acceleration unit" with "depth cache"? Just wondering if that was a "z-buffer bandwidth saving device"

4. You have to wonder what nv30 is gunna be like if 3dlabs and matrox seem to have these amazing cards coming out..

5. Love the aa idea. The dungeon siege shots seem to show it off well..

6. End of summer ?? ... aww nuts...........

-dave-

AND, it's gunna have Matrox quality output :-O

[edit: I got an error posting this, something about couldn't find a german_lang template..]

Whole article translation

DigitLife has the complete article translated from Russian into English.

http://www.digit-life.com/articles/matroxparhelia512/index.html
